
Linearization

In mathematics, linearization (British English: linearisation) is finding the linear approximation to a function at a given point. The linear approximation of a function is the first-order Taylor expansion around the point of interest. In the study of dynamical systems, linearization is a method for assessing the local stability of an equilibrium point of a system of nonlinear differential equations or discrete dynamical systems. This method is used in fields such as engineering, physics, economics, and ecology. Linearization of a function: Linearizations of a function are lines, usually lines that can be used for purposes of calculation. Linearization is an effective method for approximating the output of a function y = f(x) at any x = a based on the value and slope of the function at x = b, given that f(x) is differentiable on [a, b] (or [b, a]) and that a is close to b. In short, linearization approximates the output of a function near x = a. ...
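
The tangent-line idea described above can be sketched in a few lines of Python. The function `linearize` and the choice of f(x) = sqrt(x) expanded at b = 4 are illustrative assumptions, not taken from the entry; the sketch only shows how the value and slope at one point give an estimate at a nearby point.

```python
import math

def linearize(f, df, b):
    """Return L(x) = f(b) + f'(b) * (x - b), the tangent line to f at b."""
    fb, slope = f(b), df(b)
    return lambda x: fb + slope * (x - b)

# Illustrative choice (an assumption): f(x) = sqrt(x), expanded at b = 4.
L = linearize(math.sqrt, lambda x: 0.5 / math.sqrt(x), 4.0)

approx = L(4.1)              # 2 + 0.25 * 0.1
exact = math.sqrt(4.1)
error = abs(approx - exact)  # small because 4.1 is close to 4
```

The error grows as the evaluation point moves away from b, which is why the entry requires a to be close to b.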

Linearization Theorem

In mathematics, linearization (British English: linearisation) is finding the linear approximation to a function at a given point. The linear approximation of a function is the first-order Taylor expansion around the point of interest. In the study of dynamical systems, linearization is a method for assessing the local stability of an equilibrium point of a system of nonlinear differential equations or discrete dynamical systems. This method is used in fields such as engineering, physics, economics, and ecology. Linearization of a function: Linearizations of a function are lines, usually lines that can be used for purposes of calculation. Linearization is an effective method for approximating the output of a function y = f(x) at any x = a based on the value and slope of the function at x = b, given that f(x) is differentiable on [a, b] (or [b, a]) and that a is close to b. ...

Nonlinear Differential Equation

In mathematics and science, a nonlinear system (or a non-linear system) is a system in which the change of the output is not proportional to the change of the input. Nonlinear problems are of interest to engineers, biologists, physicists, mathematicians, and many other scientists since most systems are inherently nonlinear in nature. Nonlinear dynamical systems, describing changes in variables over time, may appear chaotic, unpredictable, or counterintuitive, contrasting with much simpler linear systems. Typically, the behavior of a nonlinear system is described in mathematics by a nonlinear system of equations, which is a set of simultaneous equations in which the unknowns (or the unknown functions in the case of differential equations) appear as variables of a polynomial of degree higher than one or in the argument of a function which is not a polynomial of degree one. In other words, in a nonlinear system of equations, the equation(s) to be solved cannot be written as a line ...

Hyperbolic Equilibrium Point

In the study of dynamical systems, a hyperbolic equilibrium point or hyperbolic fixed point is a fixed point that does not have any center manifolds. Near a hyperbolic point the orbits of a two-dimensional, non-dissipative system resemble hyperbolas. This fails to hold in general. Strogatz notes that "hyperbolic is an unfortunate name - it sounds like it should mean 'saddle point' - but it has become standard." Several properties hold about a neighborhood of a hyperbolic point, notably

* A stable manifold and an unstable manifold exist,
* Shadowing occurs,
* The dynamics on the invariant set can be represented via symbolic dynamics,
* A natural measure can be defined,
* The system is structurally stable.

Maps: If T \colon \mathbb{R}^n \to \mathbb{R}^n is a C^1 map and p is a fixed point, then p is said to be a hyperbolic fixed point when the Jacobian matrix \operatorname{D}T(p) has no eigenvalues on the complex unit circle. One example of a map whose only fixed point is ...
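
The eigenvalue condition for maps can be checked numerically. The following is a minimal sketch restricted to 2x2 Jacobians; the helper names and the two example matrices are illustrative assumptions, not the example the entry goes on to give.

```python
import cmath

def eigenvalues_2x2(m):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    (a, b), (c, d) = m
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2

def is_hyperbolic_fixed_point(jacobian, tol=1e-9):
    """True when no eigenvalue of the Jacobian lies on the complex unit circle."""
    return all(abs(abs(lam) - 1.0) > tol for lam in eigenvalues_2x2(jacobian))

# Linear maps fix the origin, and their Jacobian there is the matrix itself.
stretch_map = [[2, 1], [1, 1]]   # eigenvalues (3 +/- sqrt(5)) / 2
rotation = [[0, -1], [1, 0]]     # eigenvalues +/- i, both of modulus 1

stretch_is_hyperbolic = is_hyperbolic_fixed_point(stretch_map)
rotation_is_hyperbolic = is_hyperbolic_fixed_point(rotation)
```

The tolerance `tol` is a practical assumption: in floating point, an eigenvalue very near the unit circle cannot be reliably distinguished from one exactly on it.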

Stability Theory

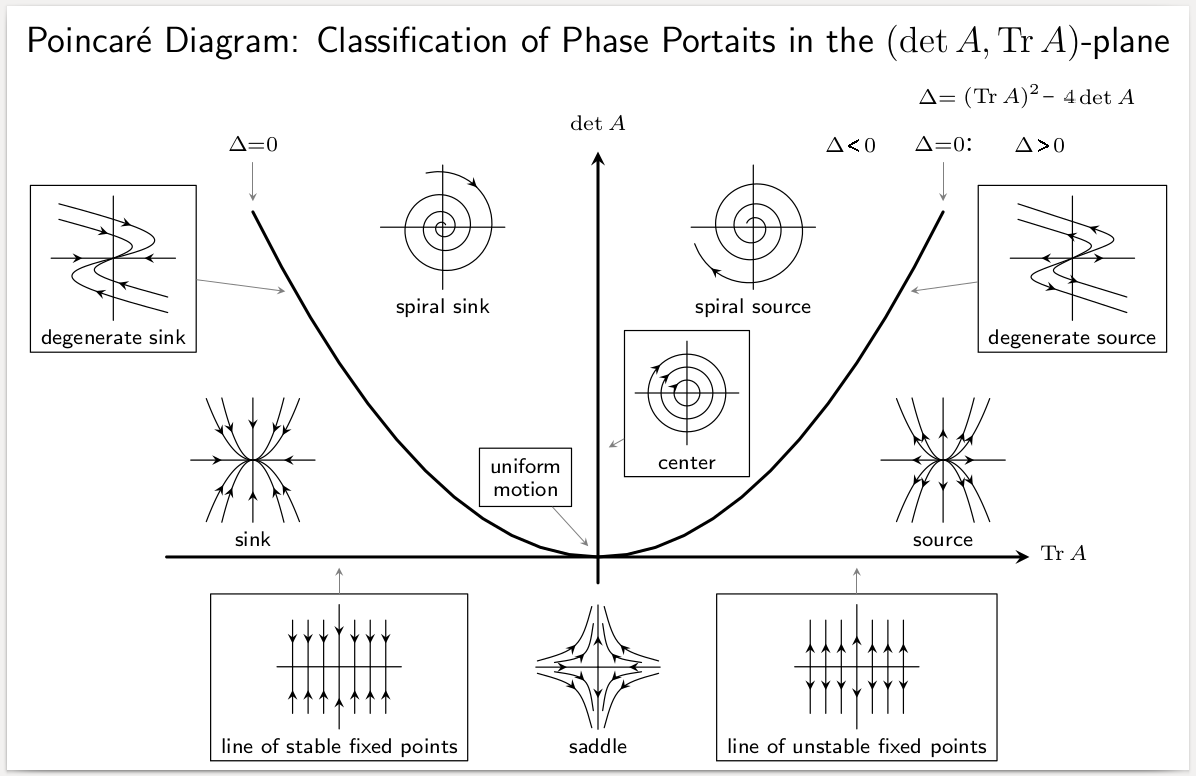

In mathematics, stability theory addresses the stability of solutions of differential equations and of trajectories of dynamical systems under small perturbations of initial conditions. The heat equation, for example, is a stable partial differential equation because small perturbations of initial data lead to small variations in temperature at a later time as a result of the maximum principle. In partial differential equations one may measure the distances between functions using Lp norms or the sup norm, while in differential geometry one may measure the distance between spaces using the Gromov–Hausdorff distance. In dynamical systems, an orbit is called Lyapunov stable if the forward orbit of any point in a small enough neighborhood of it stays in a small (but perhaps larger) neighborhood. Various criteria have been developed to prove stability or instability of an orbit. Under favorable ...

Linear System

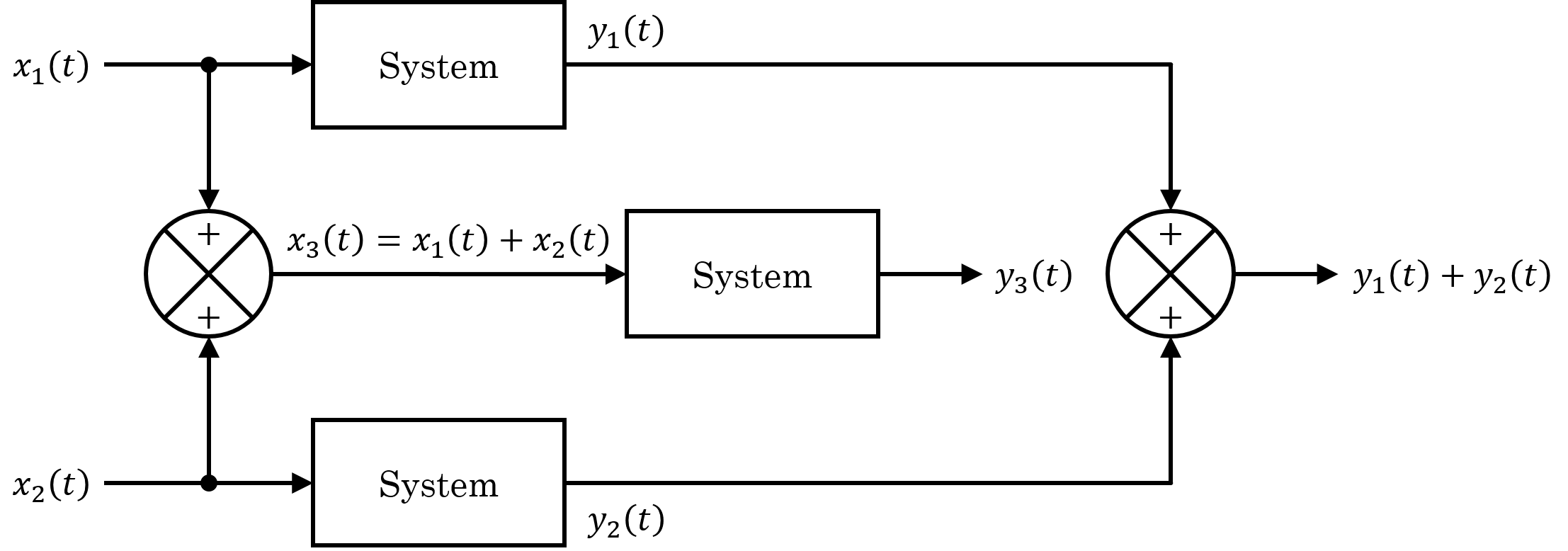

In systems theory, a linear system is a mathematical model of a system based on the use of a linear operator. Linear systems typically exhibit features and properties that are much simpler than the nonlinear case. As a mathematical abstraction or idealization, linear systems find important applications in automatic control theory, signal processing, and telecommunications. For example, the propagation medium for wireless communication systems can often be modeled by linear systems. Definition: A general deterministic system can be described by an operator that maps an input to an output, a type of black box description. A system is linear if and only if it satisfies the superposition principle, or equivalently both the additivity and homogeneity properties, without restrictions (that is, for all inputs, all scaling constants and all time). The superposition principle means that a linear combination of inputs to the system produces a linear com ...
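
The superposition principle can be illustrated with a small numerical check. This is a sketch under stated assumptions: the two discrete-time systems (`moving_average` and `square`) and the test inputs are hypothetical examples, and a single passing check does not prove linearity, since the definition requires the property for all inputs and all scaling constants.

```python
def moving_average(x):
    """y[n] = (x[n] + x[n-1]) / 2, taking x[-1] = 0 (a linear system)."""
    return [(x[n] + (x[n - 1] if n > 0 else 0.0)) / 2 for n in range(len(x))]

def square(x):
    """y[n] = x[n]**2 (a nonlinear system)."""
    return [v * v for v in x]

def satisfies_superposition(system, x1, x2, a, b, tol=1e-12):
    """Compare system(a*x1 + b*x2) with a*system(x1) + b*system(x2)."""
    combined_input = [a * u + b * v for u, v in zip(x1, x2)]
    lhs = system(combined_input)
    rhs = [a * u + b * v for u, v in zip(system(x1), system(x2))]
    return all(abs(p - q) <= tol for p, q in zip(lhs, rhs))

x1, x2 = [1.0, 2.0, 3.0], [0.0, -1.0, 4.0]
average_ok = satisfies_superposition(moving_average, x1, x2, 2.0, -3.0)
square_ok = satisfies_superposition(square, x1, x2, 2.0, -3.0)
```

A failing check, as with `square`, is conclusive: one counterexample is enough to show a system is not linear.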

Equilibrium Point

In mathematics, specifically in differential equations, an equilibrium point is a constant solution to a differential equation. Formal definition: The point \tilde{\mathbf{x}} \in \mathbb{R}^n is an equilibrium point for the differential equation \frac{d\mathbf{x}}{dt} = \mathbf{f}(t,\mathbf{x}) if \mathbf{f}(t,\tilde{\mathbf{x}}) = \mathbf{0} for all t. Similarly, the point \tilde{\mathbf{x}} \in \mathbb{R}^n is an equilibrium point (or fixed point) for the difference equation \mathbf{x}_{k+1} = \mathbf{f}(k,\mathbf{x}_k) if \mathbf{f}(k,\tilde{\mathbf{x}}) = \tilde{\mathbf{x}} for k = 0,1,2,\ldots. Equilibria can be classified by looking at the signs of the eigenvalues of the linearization of the equations about the equilibria. That is to say, by evaluating the Jacobian matrix at each of the equilibrium points of the system, and then finding the resulting eigenvalues, the equilibria can be categorized. Then the behavior of the system in the neighborhood of each equilibrium point can be qualitatively determined (or even quantitatively determined, in some instances) by finding ...
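
The classification by eigenvalue signs can be sketched for a planar system. The damped pendulum x' = y, y' = -sin(x) - 0.5 y is an assumed example, not one from the entry, and the labels returned by `classify` are a simplified scheme chosen for illustration.

```python
import cmath
import math

def eigenvalues_2x2(m):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    (a, b), (c, d) = m
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2

def classify(jacobian, tol=1e-9):
    """Classify an equilibrium of a continuous-time system by real parts."""
    real_parts = [lam.real for lam in eigenvalues_2x2(jacobian)]
    if any(abs(r) <= tol for r in real_parts):
        return "inconclusive"   # linearization alone does not decide
    if all(r < 0 for r in real_parts):
        return "stable"
    if all(r > 0 for r in real_parts):
        return "unstable"
    return "saddle"

def pendulum_jacobian(x_eq):
    """Jacobian of (y, -sin(x) - 0.5*y) evaluated at the equilibrium (x_eq, 0)."""
    return [[0.0, 1.0], [-math.cos(x_eq), -0.5]]

hanging = classify(pendulum_jacobian(0.0))       # pendulum pointing down
inverted = classify(pendulum_jacobian(math.pi))  # pendulum pointing up
```

For difference equations the analogous test compares eigenvalue moduli with 1 rather than real parts with 0.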

Mathematics

Mathematics is a field of study that discovers and organizes methods, theories and theorems that are developed and proved for the needs of empirical sciences and mathematics itself. There are many areas of mathematics, which include number theory (the study of numbers), algebra (the study of formulas and related structures), geometry (the study of shapes and spaces that contain them), analysis (the study of continuous changes), and set theory (presently used as a foundation for all mathematics). Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or, in modern mathematics, purely abstract entities that are stipulated to have certain properties, called axioms. Mathematics uses pure reason to prove properties of objects, a proof consisting of a succession of applications of in ...

Derivative

In mathematics, the derivative is a fundamental tool that quantifies the sensitivity to change of a function's output with respect to its input. The derivative of a function of a single variable at a chosen input value, when it exists, is the slope of the tangent line to the graph of the function at that point. The tangent line is the best linear approximation of the function near that input value. For this reason, the derivative is often described as the instantaneous rate of change, the ratio of the instantaneous change in the dependent variable to that of the independent variable. The process of finding a derivative is called differentiation. There are multiple different notations for differentiation. Leibniz notation, named after Gottfried Wilhelm Leibniz, is represented as the ratio of two differentials, whereas prime notation is written by adding a prime mark. Higher order notations represent repeated differentiation, and they are usually denoted in Leib ...

Gradient

In vector calculus, the gradient of a scalar-valued differentiable function f of several variables is the vector field (or vector-valued function) \nabla f whose value at a point p gives the direction and the rate of fastest increase. The gradient transforms like a vector under change of basis of the space of variables of f. If the gradient of a function is non-zero at a point p, the direction of the gradient is the direction in which the function increases most quickly from p, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to minimize a function by gradient descent. In coordinate-free terms, the gradient of a function f(\mathbf{r}) may be defined by: df = \nabla f \cdot d\mathbf{r} where df is the total infinitesimal change in f for a ...
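
A gradient can be estimated numerically with central differences, which gives a quick way to see the "direction and rate of fastest increase" reading. This is a minimal sketch; the function f(x, y) = x^2 + 3y^2, the point (1, 2), and the step size `h` are assumptions chosen for illustration.

```python
import math

def numerical_gradient(f, p, h=1e-6):
    """Central-difference estimate of the gradient of f at the point p."""
    grad = []
    for i in range(len(p)):
        fwd, bwd = list(p), list(p)
        fwd[i] += h
        bwd[i] -= h
        grad.append((f(fwd) - f(bwd)) / (2 * h))
    return grad

def f(p):
    return p[0] ** 2 + 3 * p[1] ** 2

point = [1.0, 2.0]
grad = numerical_gradient(f, point)   # analytic gradient is (2x, 6y) = (2, 12)
rate = math.hypot(*grad)              # rate of increase along the gradient

# One gradient-descent step: move against the gradient to decrease f.
step = 0.1
new_point = [x - step * g for x, g in zip(point, grad)]
```

Moving against the gradient lowers the function value here, which is the basic move that gradient descent repeats.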

Eigenvalue

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged (or reversed) by a given linear transformation. More precisely, an eigenvector \mathbf v of a linear transformation T is scaled by a constant factor \lambda when the linear transformation is applied to it: T\mathbf v=\lambda \mathbf v. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor \lambda (possibly a negative or complex number). Geometrically, vectors are multi-dimensional quantities with magnitude and direction, often pictured as arrows. A linear transformation rotates, stretches, or shears the vectors upon which it acts. A linear transformation's eigenvectors are those vectors that are only stretched or shrunk, with neither rotation nor shear. The corresponding eigenvalue is the factor by which an eigenvector is stretched or shrunk. If the eigenvalue is negative, the eigenvector's direction is reversed. ...
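
The defining relation T\mathbf v=\lambda \mathbf v can be tested directly for a candidate vector. The sketch below is limited to real 2x2 matrices and nonzero vectors; the helper names and the example matrices are illustrative assumptions.

```python
def apply(A, v):
    """Matrix-vector product A v for lists of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def eigenvalue_if_eigenvector(A, v, tol=1e-12):
    """Return lambda with A v = lambda v, or None if v (nonzero, 2D) is not an eigenvector."""
    w = apply(A, v)
    # A nonzero 2D cross product means A v is not parallel to v.
    if abs(w[0] * v[1] - w[1] * v[0]) > tol:
        return None
    i = 0 if v[0] != 0 else 1
    return w[i] / v[i]

A = [[2, 1], [1, 2]]
swap = [[0, 1], [1, 0]]

stretched = eigenvalue_if_eigenvector(A, [1, 1])      # scaled by 3
unchanged = eigenvalue_if_eigenvector(A, [1, -1])     # scaled by 1
not_eigen = eigenvalue_if_eigenvector(A, [1, 0])      # direction changes
reversed_ = eigenvalue_if_eigenvector(swap, [1, -1])  # negative eigenvalue
```

The last case shows the reversal mentioned in the entry: the vector keeps its line but points the opposite way.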

Jacobian Matrix And Determinant

In vector calculus, the Jacobian matrix of a vector-valued function of several variables is the matrix of all its first-order partial derivatives. If this matrix is square, that is, if the number of variables equals the number of components of function values, then its determinant is called the Jacobian determinant. Both the matrix and (if applicable) the determinant are often referred to simply as the Jacobian. They are named after Carl Gustav Jacob Jacobi. The Jacobian matrix is the natural generalization to vector-valued functions of several variables of the derivative and the differential of a usual function. This generalization includes generalizations of the inverse function theorem and the implicit function theorem, where the non-nullity of the derivative is replaced by the non-nullity of the Jacobian determinant, and the multiplicative inverse of the derivative is replaced by the inverse of the Jacobian matrix. The Jacobian determinant is fundamentally use ...
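
As a concrete instance of "the matrix of all first-order partial derivatives", the Jacobian can be approximated column by column with central differences. This is a sketch under assumptions: the polar-to-Cartesian map, the evaluation point, and the step size `h` are chosen for illustration and do not come from the entry.

```python
import math

def numerical_jacobian(F, p, h=1e-6):
    """Central-difference Jacobian: entry [i][j] approximates dF_i/dp_j at p."""
    m = len(F(p))
    J = [[0.0] * len(p) for _ in range(m)]
    for j in range(len(p)):
        fwd, bwd = list(p), list(p)
        fwd[j] += h
        bwd[j] -= h
        F_fwd, F_bwd = F(fwd), F(bwd)
        for i in range(m):
            J[i][j] = (F_fwd[i] - F_bwd[i]) / (2 * h)
    return J

def polar_to_cartesian(p):
    r, theta = p
    return [r * math.cos(theta), r * math.sin(theta)]

J = numerical_jacobian(polar_to_cartesian, [2.0, math.pi / 3])
det = J[0][0] * J[1][1] - J[0][1] * J[1][0]   # analytic determinant is r = 2
```

Because the matrix is square here, its determinant is the Jacobian determinant, and a non-zero value at a point is the condition the inverse function theorem asks for.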