|

Linear Matrix Inequality

In convex optimization, a linear matrix inequality (LMI) is an expression of the form : \operatorname{LMI}(y) := A_0 + y_1 A_1 + y_2 A_2 + \cdots + y_m A_m \succeq 0, where * y = [y_i,\ i = 1, \dots, m] is a real vector, * A_0, A_1, A_2, \dots, A_m are n \times n symmetric matrices in \mathbb{S}^n, * B \succeq 0 is a generalized inequality meaning B is a positive semidefinite matrix belonging to the positive semidefinite cone \mathbb{S}_+ in the subspace of symmetric matrices \mathbb{S}. This linear matrix inequality specifies a convex constraint on y. Applications: There are efficient numerical methods to determine whether an LMI is feasible (i.e., whether there exists a vector y such that \operatorname{LMI}(y) \succeq 0), or to solve a convex optimization problem with LMI constraints. Many optimization problems in control theory, system identification and signal processing can be formulated using LMIs. LMIs also find application in polynomial sum-of-squares. The prototypical primal and dual sem ... |
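The definition above can be made concrete with a small sketch. It evaluates A_0 + y_1 A_1 + ... + y_m A_m and tests positive semidefiniteness, under the simplifying assumption that the matrices are 2 x 2 (where a symmetric matrix is positive semidefinite exactly when its trace and determinant are nonnegative). The function names and the example matrices are illustrative choices, not part of the original entry.

```python
# Sketch: evaluate an LMI and test it for a given y (2x2 matrices only).
# All names and the example data are illustrative assumptions.

def lmi_value(y, mats):
    """Return mats[0] + sum_i y[i] * mats[i + 1] as a nested list."""
    n = len(mats[0])
    value = [row[:] for row in mats[0]]
    for coeff, mat in zip(y, mats[1:]):
        for r in range(n):
            for c in range(n):
                value[r][c] += coeff * mat[r][c]
    return value

def is_psd_2x2(m, tol=1e-12):
    """A symmetric 2x2 matrix is PSD iff its trace and determinant are >= 0."""
    trace = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return trace >= -tol and det >= -tol

A0 = [[1.0, 0.0], [0.0, 1.0]]
A1 = [[0.0, 1.0], [1.0, 0.0]]

def lmi_holds(y):
    """Check A0 + y[0] * A1 >= 0, i.e. [[1, y], [y, 1]] is PSD."""
    return is_psd_2x2(lmi_value(y, [A0, A1]))

print(lmi_holds([0.5]))  # y inside [-1, 1]
print(lmi_holds([2.0]))  # y outside [-1, 1]
```

For this choice of A_0 and A_1 the feasible set is the interval -1 ≤ y_1 ≤ 1, which is convex, as the entry states. For larger matrices a numerical eigenvalue routine would normally replace the 2 x 2 shortcut.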

|

|

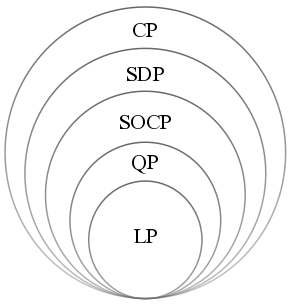

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Definition (abstract form): A convex optimization problem is defined by two ingredients: * The objective function, which is a real-valued convex function of n variables, f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}; * The feasible set, which is a convex subset C \subseteq \mathbb{R}^n. The goal of the problem is to find some \mathbf{x}^* \in C attaining : \inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}. In general, there are three options regarding the existence of a solution: * If such a point \mathbf{x}^* exists, it is referred to as an optimal point or solution; the set of all optimal points is called the optimal set; and the problem is called solvable. * If f is unbou ... |
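As a minimal illustration of the two ingredients (a convex objective and a convex feasible set), the sketch below minimizes f(x) = (x - 3)^2 over the interval C = [0, 2]. The solver used here is projected gradient descent, which is one simple technique chosen for illustration and is not named in the entry; the step size, iteration count, and function names are assumptions.

```python
# Sketch: minimize a convex function over a convex set (an interval)
# with projected gradient descent. Names and parameters are illustrative.

def project(x, lo, hi):
    """Euclidean projection of x onto the interval [lo, hi]."""
    return min(max(x, lo), hi)

def projected_gradient(grad, lo, hi, x0, step=0.1, iters=200):
    """Repeat: take a gradient step, then project back onto [lo, hi]."""
    x = x0
    for _ in range(iters):
        x = project(x - step * grad(x), lo, hi)
    return x

def f(x):
    return (x - 3.0) ** 2

def grad_f(x):
    return 2.0 * (x - 3.0)

x_star = projected_gradient(grad_f, 0.0, 2.0, x0=0.0)
print(x_star, f(x_star))
```

The unconstrained minimizer x = 3 lies outside C, so the optimal point is the boundary point x = 2, and the problem is solvable in the sense described above.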

|

Dual Cone

Dual cone and polar cone are closely related concepts in convex analysis, a branch of mathematics. Dual cone, in a vector space: The dual cone C^* of a subset C in a linear space X over the reals, e.g. Euclidean space \mathbb{R}^n, with dual space X^* is the set : C^* = \left\{ y \in X^* : \langle y, x \rangle \geq 0 \quad \forall x \in C \right\}, where \langle y, x \rangle is the duality pairing between X and X^*, i.e. \langle y, x \rangle = y(x). C^* is always a convex cone, even if C is neither convex nor a cone. In a topological vector space: If X is a topological vector space over the real or complex numbers, then the dual cone of a subset C ⊆ X is the following set of continuous linear functionals on X: : C^{\prime} := \left\{ f \in X^{\prime} : \operatorname{Re}(f(x)) \geq 0 \text{ for all } x \in C \right\}, which is the polar of the set -C. No matter what C is, C^{\prime} will be a convex cone. If C ⊆ \{0\} then C^{\prime} = X^{\prime}. In a Hilbert space (internal dual cone): Alternatively, many authors define the dual cone in the co ... |
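For a finite set C in Euclidean space, the defining condition of the dual cone can be checked directly. The sketch below assumes X = R^n with the standard inner product as the pairing, so that elements of the dual space are represented as ordinary vectors; the function names and the sample points are illustrative.

```python
# Sketch: membership test for the dual cone of a finite set C in R^n,
# using the standard inner product as the pairing <y, x>.
# Names and sample data are illustrative assumptions.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def in_dual_cone(y, points, tol=1e-12):
    """y is in C* iff <y, x> >= 0 for every x in C."""
    return all(dot(y, x) >= -tol for x in points)

C = [(1.0, 0.0), (1.0, 1.0)]

print(in_dual_cone((1.0, -1.0), C))  # pairings are 1 and 0
print(in_dual_cone((0.0, -1.0), C))  # pairing with (1, 1) is -1
```

Because the pairing is linear in x, the same test also decides membership in the dual of the convex cone generated by the points of C: any nonnegative combination of the points inherits a nonnegative pairing.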

|

|

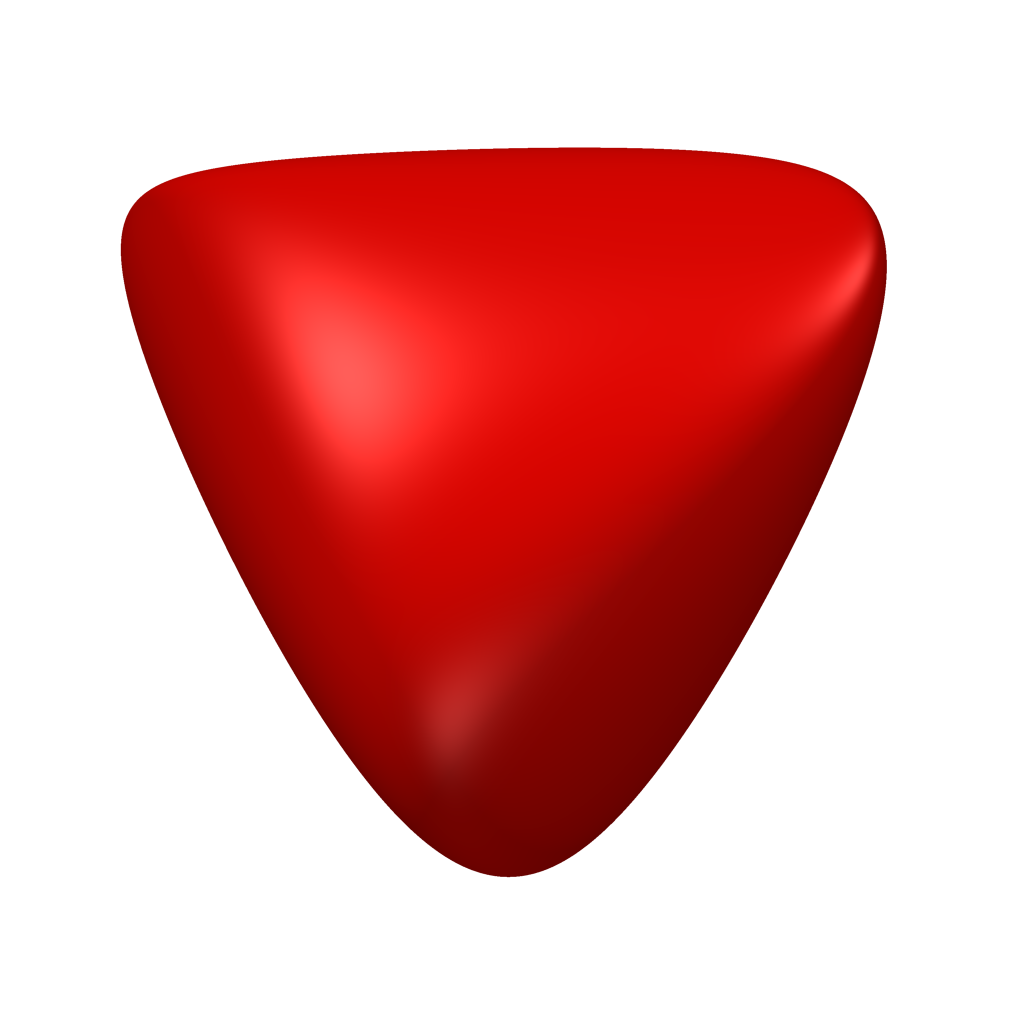

Spectrahedron

In convex geometry, a spectrahedron is a shape that can be represented as a linear matrix inequality. Alternatively, the set of n \times n positive semidefinite matrices forms a convex cone in \mathbb{R}^{n \times n}, and a spectrahedron is a shape that can be formed by intersecting this cone with an affine subspace. Spectrahedra are the feasible regions of semidefinite programs. The images of spectrahedra under linear or affine transformations are called projected spectrahedra or spectrahedral shadows. Every spectrahedral shadow is a convex set that is also semialgebraic, but the converse (conjectured to be true until 2017) is false. An example of a spectrahedron is the spectraplex, defined as : \mathrm{Spect}_n = \{ X \in \mathbf{S}^n_+ \mid \operatorname{tr}(X) = 1 \}, where \mathbf{S}^n_+ is the set of n \times n positive semidefinite matrices and \operatorname{tr}(X) is the trace of the matrix X. The spectraplex is a compact set, and can be thought of as the "semidefinite" analog of the simplex. See also * N-ellipse: In geometry, the n-ellipse is a generalization ... |
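The spectraplex conditions (symmetric, positive semidefinite, trace one) are easy to test in the smallest nontrivial case. The sketch below assumes n = 2, where a symmetric matrix with positive trace is positive semidefinite exactly when its determinant is nonnegative; the function name and tolerance are illustrative.

```python
# Sketch: membership test for the 2x2 spectraplex
# {X symmetric, X PSD, tr(X) = 1}. Names and tolerance are assumptions.

def in_spectraplex_2x2(x, tol=1e-9):
    symmetric = abs(x[0][1] - x[1][0]) <= tol
    trace = x[0][0] + x[1][1]
    det = x[0][0] * x[1][1] - x[0][1] * x[1][0]
    # With trace 1 > 0, a symmetric 2x2 matrix is PSD iff det >= 0.
    return symmetric and abs(trace - 1.0) <= tol and det >= -tol

print(in_spectraplex_2x2([[0.5, 0.5], [0.5, 0.5]]))    # rank-one projector
print(in_spectraplex_2x2([[1.5, 0.0], [0.0, -0.5]]))   # trace 1, not PSD
print(in_spectraplex_2x2([[1.0, 0.0], [0.0, 1.0]]))    # PSD, trace 2
```

Restricted to diagonal matrices, the test reduces to "nonnegative entries summing to one", which is the simplex analogy mentioned in the entry.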

|

Semidefinite Programming

Semidefinite programming (SDP) is a subfield of mathematical programming concerned with the optimization of a linear objective function (a user-specified function that the user wants to minimize or maximize) over the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron. Semidefinite programming is a relatively new field of optimization which is of growing interest for several reasons. Many practical problems in operations research and combinatorial optimization can be modeled or approximated as semidefinite programming problems. In automatic control theory, SDPs are used in the context of linear matrix inequalities. SDPs are in fact a special case of cone programming and can be efficiently solved by interior-point methods. All linear programs and (convex) quadratic programs can be expressed as SDPs, and via hierarchies of SDPs the solutions of polynomial optimization problems can be approximated. Semidefinite programming ha ... |
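One common way to write "a linear objective over the intersection of the positive semidefinite cone with an affine space" is the standard primal form below. The symbols C, A_k, b_k and the inner product notation are assumptions introduced here for illustration; they do not appear in the truncated entry.

```latex
\begin{aligned}
\min_{X \in \mathbb{S}^n} \quad & \langle C, X \rangle \\
\text{subject to} \quad & \langle A_k, X \rangle = b_k, \quad k = 1, \dots, m, \\
& X \succeq 0,
\end{aligned}
\qquad \text{where } \langle A, B \rangle = \operatorname{tr}(A^{\mathsf{T}} B).
```

The equality constraints describe the affine space, and X \succeq 0 restricts X to the positive semidefinite cone, so the feasible set is a spectrahedron.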

|

|

Arkadi Nemirovski

Arkadi Nemirovski (born March 14, 1947) is a professor at the H. Milton Stewart School of Industrial and Systems Engineering at the Georgia Institute of Technology. He has been a leader in continuous optimization and is best known for his work on the ellipsoid method, modern interior-point methods and robust optimization. Biography: Nemirovski earned a Ph.D. in Mathematics in 1974 from Moscow State University and a Doctor of Sciences in Mathematics degree in 1990 from the Institute of Cybernetics of the Ukrainian Academy of Sciences in Kiev. He has won three prestigious prizes: the Fulkerson Prize, the George B. Dantzig Prize, and the John von Neumann Theory Prize. He was elected a member of the U.S. National Academy of Engineering (NAE) in 2017 "for the development of efficient algorithms for large-scale convex optimization problems", and the U.S. National Academy of Sciences (NAS) in 2020. In 2023, Nemirovski and Yurii Nesterov were jointly awarded the 2023 WLA Prize in ... |

|

|

Yurii Nesterov

Yurii Nesterov is a Russian mathematician, an internationally recognized expert in convex optimization, especially in the development of efficient algorithms and numerical optimization analysis. He is currently a professor at the University of Louvain (UCLouvain). Biography: In 1977, Yurii Nesterov graduated in applied mathematics from Moscow State University. From 1977 to 1992 he was a researcher at the Central Economic Mathematical Institute of the Russian Academy of Sciences. Since 1993, he has been working at UCLouvain, specifically in the Department of Mathematical Engineering of the Louvain School of Engineering, Center for Operations Research and Econometrics. In 2000, Nesterov received the Dantzig Prize. In 2009, Nesterov won the John von Neumann Theory Prize. In 2016, Nesterov received the EURO Gold Medal. In 2023, Yurii Nesterov and Arkadi Nemirovski received the WLA Prize in Computer Science or Mathematics, "for their seminal work in convex optimization theo ... |

|

|

Interior-point Method

Interior-point methods (also referred to as barrier methods or IPMs) are algorithms for solving linear and non-linear convex optimization problems. IPMs combine two advantages of previously known algorithms: * Theoretically, their run-time is polynomial, in contrast to the simplex method, which has exponential run-time in the worst case. * Practically, they run as fast as the simplex method, in contrast to the ellipsoid method, which has polynomial run-time in theory but is very slow in practice. In contrast to the simplex method, which traverses the boundary of the feasible region, and the ellipsoid method, which bounds the feasible region from outside, an IPM reaches a best solution by traversing the interior of the feasible region, hence the name. History: An interior-point method was discovered by Soviet mathematician I. I. Dikin in 1967. The method was reinvented in the U.S. in the mid-1980s. In 1984, Narendra Karmarkar developed a method for linear programming ... |
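The idea of "traversing the interior" can be sketched on a one-variable linear program: minimize c*x subject to lo ≤ x ≤ hi. The code below is a toy log-barrier scheme with Newton steps, written under several assumptions (a single variable, a fixed barrier growth factor mu, a simple step-halving rule to stay strictly feasible). It is meant as an illustration of the barrier idea, not as Dikin's or Karmarkar's method, and all names and parameters are hypothetical.

```python
# Sketch: log-barrier interior-point iteration for
#   minimize c*x  subject to  lo <= x <= hi   (one variable).
# Names, parameters, and the damping rule are illustrative assumptions.

def barrier_method(c, lo, hi, t=1.0, mu=10.0, gap_tol=1e-6):
    x = 0.5 * (lo + hi)      # strictly feasible starting point
    num_constraints = 2      # x >= lo and x <= hi
    # On the central path the objective gap is at most num_constraints / t.
    while num_constraints / t > gap_tol:
        # Newton's method on t*c*x - log(x - lo) - log(hi - x).
        for _ in range(50):
            grad = t * c - 1.0 / (x - lo) + 1.0 / (hi - x)
            hess = 1.0 / (x - lo) ** 2 + 1.0 / (hi - x) ** 2
            step = -grad / hess
            # Halve the step until the new point is strictly inside (lo, hi).
            while not (lo < x + step < hi):
                step *= 0.5
            x += step
            if abs(step) < 1e-15:
                break
        t *= mu              # weight the objective more heavily
    return x

x_opt = barrier_method(c=1.0, lo=1.0, hi=3.0)
print(x_opt)  # close to the optimal vertex x = 1, approached from inside
```

Every iterate stays strictly between lo and hi, and the iterates approach the optimal boundary point only in the limit as t grows, which is the behaviour the entry contrasts with the simplex and ellipsoid methods.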

|

|

Convex Cone

In linear algebra, a cone (sometimes called a linear cone to distinguish it from other sorts of cones) is a subset of a real vector space that is closed under positive scalar multiplication; that is, C is a cone if x \in C implies sx \in C for every positive scalar s. This is a broad generalization of the standard cone in Euclidean space. A convex cone is a cone that is also closed under addition, or, equivalently, a subset of a vector space that is closed under linear combinations with positive coefficients. It follows that convex cones are convex sets. The definition of a convex cone makes sense in a vector space over any ordered field, although the field of real numbers is used most often. Definition: A subset C of a vector space is a cone if x \in C implies sx \in C for every s > 0. Here s > 0 refers to (strict) positivity in the scalar field. Competing definitions: Some other authors require [0, \infty) C \subset C or even 0 \in C. Some require a cone to be convex and/or satisfy C \cap -C \subset \{0\}. ... |
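The difference between a cone and a convex cone can be seen on two subsets of the plane. In the sketch below, the nonnegative quadrant is closed under both positive scaling and addition, while the union of the first and third quadrants is closed under positive scaling only. The two example sets and the helper names are illustrative choices, not taken from the entry.

```python
# Sketch: a convex cone versus a cone that is not convex, in R^2.
# The example sets and helper names are illustrative assumptions.

def in_orthant(p):
    """Nonnegative quadrant: a convex cone."""
    return all(coord >= 0 for coord in p)

def in_quadrants(p):
    """Union of the first and third quadrants: a cone, but not convex."""
    x, y = p
    return (x >= 0 and y >= 0) or (x <= 0 and y <= 0)

def scale(s, p):
    return tuple(s * coord for coord in p)

def add(p, q):
    return tuple(a + b for a, b in zip(p, q))

u, v = (2, 1), (-1, -2)          # both lie in the union of quadrants
print(in_quadrants(scale(3, u)))  # positive scaling stays inside
print(in_quadrants(add(u, v)))    # the sum (1, -1) leaves the set
print(in_orthant(add((2, 1), (0, 5))))  # the orthant is closed under sums
```

Checking a few sample points does not prove closure in general; it only exhibits the counterexample for the non-convex set and a consistent instance for the convex one.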

|

|

|

Symmetric Matrix

In linear algebra, a symmetric matrix is a square matrix that is equal to its transpose. Formally, A \text{ is symmetric} \iff A = A^{\mathsf{T}}. Because equal matrices have equal dimensions, only square matrices can be symmetric. The entries of a symmetric matrix are symmetric with respect to the main diagonal. So if a_{ij} denotes the entry in the ith row and jth column, then a_{ji} = a_{ij} for all indices i and j. Every square diagonal matrix is symmetric, since all off-diagonal elements are zero. Similarly, in characteristic different from 2, each diagonal element of a skew-symmetric matrix must be zero, since each is its own negative. In linear algebra, a real symmetric matrix represents a self-adjoint operator expressed in an orthonormal basis over a real inner product space. The corresponding object for a complex inner product space is a Hermitian matrix with complex-valued entries, which is equal to its conjugate transpose. Therefore, in linear algebra over the complex numbers, it is often assumed that a symmetric ... |
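The entrywise condition a_ji = a_ij translates directly into a check on a matrix stored as a list of rows. The sketch below is a minimal version using exact equality (a tolerance would be appropriate for floating-point data); the function name is an illustrative choice.

```python
# Sketch: test whether a matrix (list of rows) is symmetric.
# The function name is illustrative; exact equality is assumed.

def is_symmetric(m):
    n = len(m)
    # Only square matrices can be symmetric.
    if any(len(row) != n for row in m):
        return False
    # Compare each entry above the diagonal with its mirror image.
    return all(m[i][j] == m[j][i] for i in range(n) for j in range(i + 1, n))

print(is_symmetric([[1, 2], [2, 3]]))        # equal to its transpose
print(is_symmetric([[1, 2], [3, 4]]))        # a_12 != a_21
print(is_symmetric([[1, 2, 3], [4, 5, 6]]))  # not square
```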

|

|

Polynomial SOS

In mathematics, a form (i.e. a homogeneous polynomial) h(x) of degree 2m in the real n-dimensional vector x is a sum of squares of forms (SOS) if and only if there exist forms g_1(x), \ldots, g_k(x) of degree m such that h(x) = \sum_{i=1}^k g_i(x)^2. Every form that is SOS is also a positive polynomial, and although the converse is not always true, Hilbert proved that for n = 2, 2m = 2, or n = 3 and 2m = 4 a form is SOS if and only if it is positive. The same is also valid for the analogous problem on positive symmetric forms. Although not every form can be represented as SOS, explicit sufficient conditions for a form to be SOS have been found. Moreover, every real nonnegative form can be approximated as closely as desired (in the l_1-norm of its coefficient vector) by a sequence of forms that are SOS. Square matricial representation (SMR): To establish whether a form is SOS amounts to solving a convex optimization problem. Indeed, any ca ... |
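A concrete instance of the definition, with n = 2 and 2m = 4, is the quartic form h(x, y) = 2x^4 + 2x^3 y - x^2 y^2 + 5y^4, which satisfies 2h = (2x^2 + xy - 3y^2)^2 + (3xy + y^2)^2. This example and the names in the sketch are chosen here for illustration and are not part of the entry. The code checks the identity exactly on a grid of integer points; dividing each quadratic by the square root of 2 gives the forms g_1 and g_2 of the definition.

```python
# Sketch: verify a sum-of-squares decomposition on integer sample points.
# The example form and all names are illustrative assumptions.

def h(x, y):
    return 2 * x**4 + 2 * x**3 * y - x**2 * y**2 + 5 * y**4

def q1(x, y):
    return 2 * x**2 + x * y - 3 * y**2

def q2(x, y):
    return 3 * x * y + y**2

def decomposition_holds(x, y):
    """Check 2*h = q1^2 + q2^2, i.e. h = (q1/sqrt 2)^2 + (q2/sqrt 2)^2."""
    return 2 * h(x, y) == q1(x, y) ** 2 + q2(x, y) ** 2

grid = [(x, y) for x in range(-3, 4) for y in range(-3, 4)]
print(all(decomposition_holds(x, y) for x, y in grid))
print(all(h(x, y) >= 0 for x, y in grid))
```

Agreement on sample points is a sanity check rather than a proof; expanding the two squares symbolically confirms the identity term by term, and nonnegativity of h then follows immediately.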

|

|

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, potential fields, seismic signals, altimetry processing, and scientific measurements. Signal processing techniques are used to optimize transmissions and digital storage efficiency, correct distorted signals, improve subjective video quality, and detect or pinpoint components of interest in a measured signal. History: According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication" which was publis ... |