
Line Sampling

Line sampling is a method used in reliability engineering to compute small (i.e., rare event) failure probabilities encountered in engineering systems. The method is particularly suitable for high-dimensional reliability problems in which the performance function exhibits moderate non-linearity with respect to the uncertain parameters. It is also suitable for analyzing black box systems and, unlike the importance sampling method of variance reduction, does not require detailed knowledge of the system. The basic idea behind line sampling is to refine estimates obtained from the first-order reliability method (FORM), which may be incorrect due to the non-linearity of the limit state function. Conceptually, this is achieved by averaging the results of different FORM simulations. In practice, this is made possible by identifying the importance direction \boldsymbol{\alpha} in the input parameter space, which points towards the region w ...
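The averaging idea can be illustrated with a minimal sketch. It assumes the inputs have already been mapped to independent standard normal variables, that the importance direction is known and given as a unit vector, and that each line crosses the limit state exactly once. The function name `line_sampling`, the bound `c_max`, and the example performance function are hypothetical, and bisection stands in for whatever root finder an actual implementation would use.

```python
import random
from statistics import NormalDist

PHI = NormalDist()  # standard normal distribution


def line_sampling(g, alpha, n_lines, c_max=10.0, seed=0):
    """Estimate P[g(U) < 0] for U ~ N(0, I) by averaging 1D probabilities.

    alpha is a unit vector (assumed importance direction). Each random
    sample is projected onto the hyperplane orthogonal to alpha, and the
    distance c along alpha at which g changes sign is found by bisection.
    """
    rng = random.Random(seed)
    dim = len(alpha)
    total = 0.0
    for _ in range(n_lines):
        u = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        proj = sum(ui * ai for ui, ai in zip(u, alpha))
        u_perp = [ui - proj * ai for ui, ai in zip(u, alpha)]

        def g_on_line(c):
            # Performance function along the line u_perp + c * alpha.
            return g([up + c * ai for up, ai in zip(u_perp, alpha)])

        if g_on_line(c_max) >= 0.0:
            continue  # no failure within c_max: contribution treated as 0
        if g_on_line(-c_max) <= 0.0:
            total += 1.0  # whole line segment fails: contribution close to 1
            continue
        lo, hi = -c_max, c_max  # invariant: g(lo) > 0 and g(hi) <= 0
        for _ in range(60):
            mid = 0.5 * (lo + hi)
            if g_on_line(mid) > 0.0:
                lo = mid
            else:
                hi = mid
        total += PHI.cdf(-0.5 * (lo + hi))  # failure probability on this line
    return total / n_lines


# Hypothetical 10-dimensional example with mild non-linearity.
alpha = [1.0] + [0.0] * 9
g_curved = lambda u: 3.0 - u[0] + 0.1 * u[1] ** 2
print(line_sampling(g_curved, alpha, n_lines=200))
```

For a perfectly linear limit state every line returns the same distance, so the estimate coincides with the FORM result; the spread between lines reflects the non-linearity that the averaging corrects for.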

Reliability Engineering

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability is defined as the probability that a product, system, or service will perform its intended function adequately for a specified period of time, or will operate in a defined environment without failure. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time. The ''reliability function'' is theoretically defined as the probability of success. In practice, it is calculated using different techniques, and its value ranges between 0 and 1, where 0 indicates no probability of success while 1 indicates definite success. This probability is estimated from detailed (physics of failure) analysis, previous data sets, or through reliability testing and reliability modeling. Availability, testability, maintainability, and maintenance ...

Newton's Method

In numerical analysis, the Newton–Raphson method, also known simply as Newton's method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a real-valued function f, its derivative f', and an initial guess x_0 for a root of f. If f satisfies certain assumptions and the initial guess is close, then x_1 = x_0 - \frac{f(x_0)}{f'(x_0)} is a better approximation of the root than x_0. Geometrically, (x_1, 0) is the x-intercept of the tangent of the graph of f at (x_0, f(x_0)): that is, the improved guess, x_1, is the unique root of the linear approximation of f at the initial guess, x_0. The process is repeated as x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)} until a sufficiently precise value is reached. The number of correct digits roughly doubles with each step. This algorithm is first in the class of Householder's methods, and was succeeded by Halley's method. The method can also be extended t ...
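The iteration can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `newton`, the tolerance, and the iteration cap are assumptions, and the stopping rule (successive iterates closer than `tol`) is one common choice among several.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Approximate a root of f by Newton's method, starting from x0."""
    x = x0
    for _ in range(max_iter):
        dfx = df(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        x_next = x - f(x) / dfx  # x-intercept of the tangent line at x
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("Newton iteration did not converge")


# Example: the positive root of x^2 - 2, starting from x0 = 1.
root = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
print(root)
```

Starting close to the root matters: with a poor initial guess or a vanishing derivative the iteration may diverge, which is why the sketch raises an error instead of returning a value silently.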

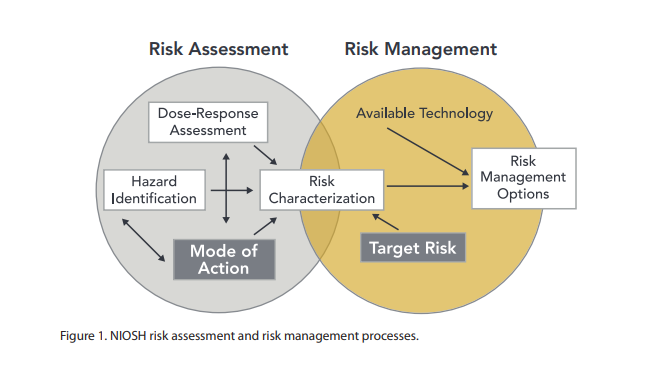

Risk Assessment

Risk assessment is a process for identifying hazards, potential (future) events which may negatively impact on individuals, assets, and/or the environment because of those hazards, their likelihood and consequences, and actions which can mitigate these effects. The output from such a process may also be called a risk assessment. Hazard analysis forms the first stage of a risk assessment process. Judgments "on the tolerability of the risk on the basis of a risk analysis" (i.e. risk evaluation) also form part of the process. The results of a risk assessment process may be expressed in a quantitative or qualitative fashion. Risk assessment forms a key part of a broader risk management strategy to help reduce any potential risk-related consequences. Categories: Individual risk assessment. Risk assessments can be undertaken in individual cases, including in patient and physician interactions. In the narrow sense chemical risk assessment is the assessment of a health risk in response ...

Random Compact Set

In mathematics, a random compact set is essentially a compact set-valued random variable. Random compact sets are useful in the study of attractors for random dynamical systems. Definition: Let (M, d) be a complete separable metric space. Let \mathcal{K} denote the set of all compact subsets of M. The Hausdorff metric h on \mathcal{K} is defined by h(K_1, K_2) := \max \left\{ \sup_{a \in K_1} \inf_{b \in K_2} d(a, b), \sup_{b \in K_2} \inf_{a \in K_1} d(a, b) \right\}. (\mathcal{K}, h) is also a complete separable metric space. The corresponding open subsets generate a σ-algebra on \mathcal{K}, the Borel sigma algebra \mathcal{B}(\mathcal{K}) of \mathcal{K}. A random compact set is a measurable function K from a probability space (\Omega, \mathcal{F}, \mathbb{P}) into (\mathcal{K}, \mathcal{B}(\mathcal{K})). Put another way, a random compact set is a measurable function K \colon \Omega \to 2^{M} such that K(\omega) is almost surely (that is, with probability 1) ...
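For finite point sets, which are compact, the suprema and infima in the Hausdorff metric become plain maxima and minima, so the definition can be evaluated directly. The sketch below assumes points in Euclidean space with `math.dist` as the metric d; the function name `hausdorff` and the example sets are hypothetical.

```python
import math


def hausdorff(K1, K2, d=math.dist):
    """Hausdorff distance between two finite, non-empty point sets."""

    def directed(A, B):
        # Largest distance from a point of A to its nearest point in B.
        return max(min(d(a, b) for b in B) for a in A)

    return max(directed(K1, K2), directed(K2, K1))


K1 = [(0.0, 0.0), (1.0, 0.0)]
K2 = [(0.0, 0.0), (4.0, 0.0)]
h = hausdorff(K1, K2)
print(h)  # 3.0: the point (4, 0) is 3 away from its nearest point in K1
```

The two directed terms generally differ (here 1 and 3), which is why the definition takes the larger of the two.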

Probability Box

A probability box (or p-box) is a characterization of uncertain numbers consisting of both aleatoric and epistemic uncertainties that is often used in risk analysis or quantitative uncertainty modeling where numerical calculations must be performed. Probability bounds analysis is used to make arithmetic and logical calculations with p-boxes. An example p-box is shown in the figure at right for an uncertain number ''x'' consisting of a left (upper) bound and a right (lower) bound on the probability distribution for ''x''. The bounds are coincident for values of ''x'' below 0 and above 24. The bounds may have almost any shape, including step functions, so long as they are monotonically increasing and do not cross each other. A p-box is used to express simultaneously incertitude (epistemic uncertainty), which is represented by the breadth between the left and right edges of the p-box, and variability (aleatory uncertainty), which is represented by the overall slant of the p- ...

Uncertainty Quantification

Uncertainty quantification (UQ) is the science of quantitative characterization and estimation of uncertainties in both computational and real-world applications. It tries to determine how likely certain outcomes are if some aspects of the system are not exactly known. An example would be to predict the acceleration of a human body in a head-on crash with another car: even if the speed was exactly known, small differences in the manufacturing of individual cars, how tightly every bolt has been tightened, etc., will lead to different results that can only be predicted in a statistical sense. Many problems in the natural sciences and engineering are also rife with sources of uncertainty. Computer experiments on computer simulations are the most common approach to study problems in uncertainty quantification. Sources: Uncertainty can enter mathematical models and experimental measurements in various contexts. One way to categorize the sources of uncertainty is to consider: ; Parame ...

Subset Simulation

Subset simulation is a method used in reliability engineering to compute small (i.e., rare event) failure probabilities encountered in engineering systems. The basic idea is to express a small failure probability as a product of larger conditional probabilities by introducing intermediate failure events. This conceptually converts the original rare-event problem into a series of frequent-event problems that are easier to solve. In the actual implementation, samples conditional on intermediate failure events are adaptively generated to gradually populate from the frequent to rare event region. These 'conditional samples' provide information for estimating the complementary cumulative distribution function (CCDF) of the quantity of interest (that governs failure), covering the high as well as the low probability regions. They can also be used for investigating the cause and consequence of failure events. The generation of conditional samples is not trivial but can be performed efficient ...
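The product decomposition can be written out explicitly. The notation here is an assumption introduced for illustration: F is the target failure event and F_1 \supset F_2 \supset \dots \supset F_m = F is a nested sequence of intermediate failure events.

```latex
P(F) = P(F_m) = P(F_1) \prod_{i=1}^{m-1} P(F_{i+1} \mid F_i)
```

As a worked example under these assumptions, a target probability of 10^{-6} can be expressed with m = 6 levels in which P(F_1) and each conditional probability equal 0.1, so that each factor is a frequent event that a modest number of samples can resolve.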

Monte Carlo Method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. The name comes from the Monte Carlo Casino in Monaco, where the primary developer of the method, mathematician Stanisław Ulam, was inspired by his uncle's gambling habits. Monte Carlo methods are mainly used in three distinct problem classes: optimization, numerical integration, and generating draws from a probability distribution. They can also be used to model phenomena with significant uncertainty in inputs, such as calculating the risk of a nuclear power plant failure. Monte Carlo methods are often implemented using computer simulations, and they can provide approximate solutions to problems that are otherwise intractable or too complex to analyze mathematically. Monte Carlo methods are widely used in va ...
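A small numerical integration example shows the idea of using randomness for a deterministic quantity. The sketch estimates π from the fraction of uniform random points in the unit square that fall inside the quarter unit disc; the function name `estimate_pi`, the sample size, and the fixed seed are illustrative choices.

```python
import random


def estimate_pi(n_samples, seed=0):
    """Estimate pi from the area of the quarter unit disc by random sampling."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter disc has area pi / 4 inside a square of area 1.
    return 4.0 * inside / n_samples


pi_hat = estimate_pi(200_000)
print(pi_hat)
```

The standard error of such an estimate shrinks in proportion to the inverse square root of the number of samples, so each extra correct digit costs roughly a hundred times more samples.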

Curse Of Dimensionality

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The expression was coined by Richard E. Bellman when considering problems in dynamic programming. The curse generally refers to issues that arise when the number of datapoints is small (in a suitably defined sense) relative to the intrinsic dimension of the data. Dimensionally cursed phenomena occur in domains such as numerical analysis, sampling, combinatorics, machine learning, data mining and databases. The common theme of these problems is that when the dimensionality increases, the volume of the space increases so fast that the available data become sparse. In order to obtain a reliable result, the amount of data needed often grows exponentially with the dimensionality. Also, organizing and searching data often relies on detecting a ...

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution c ...
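The density can be evaluated directly from its mean and variance. The sketch below uses the standard Gaussian density with mean `mu` and standard deviation `sigma` and checks it against Python's built-in `statistics.NormalDist`; the function name `normal_pdf` and the example parameters are illustrative.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and std. deviation sigma."""
    variance = sigma * sigma
    exponent = -((x - mu) ** 2) / (2.0 * variance)
    return math.exp(exponent) / math.sqrt(2.0 * math.pi * variance)


value = normal_pdf(1.0, mu=0.5, sigma=2.0)
reference = NormalDist(mu=0.5, sigma=2.0).pdf(1.0)
print(value, reference)
```

The density peaks at x = mu, consistent with the mean also being the mode, and is symmetric about that point.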