Klee–Minty Cube

The Klee–Minty cube or Klee–Minty polytope (named after Victor Klee and George J. Minty) is a unit hypercube of variable dimension whose corners have been perturbed. Klee and Minty demonstrated that George Dantzig's simplex algorithm has poor worst-case performance when initialized at one corner of their "squashed cube". On the three-dimensional version, the simplex algorithm and the criss-cross algorithm visit all 8 corners in the worst case. In particular, many optimization algorithms for linear optimization exhibit poor performance when applied to the Klee–Minty cube. In 1973 Klee and Minty showed that Dantzig's simplex algorithm was not a polynomial-time algorithm when applied to their cube. Later, modifications of the Klee–Minty cube have shown poor behavior both for other basis-exchange pivoting algorithms and also for interior-point algorithms.

Description: The Klee–Minty cube was originally specified with a parameterized system of linear inequalities, with ...
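The specification in the entry above is cut off, so the following Python sketch does not reproduce it. It assumes one commonly cited formulation of the cube (maximize the sum of 2^(D-j) x_j subject to 2 * sum over j < i of 2^(i-j) x_j + x_i <= 5^i and x_i >= 0), which may differ from the original. The function names are hypothetical. The sketch only enumerates the 2^D corners; it does not run the simplex algorithm.

```python
from itertools import product


def klee_minty_vertices(dim):
    """Enumerate the corners of an ASSUMED Klee-Minty formulation:
    2 * sum_{j<i} 2**(i-j) * x_j + x_i <= 5**i,  x_i >= 0,  i = 1..dim."""
    vertices = []
    for tight in product([False, True], repeat=dim):
        x = []
        for i in range(1, dim + 1):
            # Part of constraint i that is fixed by the earlier coordinates.
            lhs = 2 * sum(2 ** (i - j) * x[j - 1] for j in range(1, i))
            # Either constraint i holds with equality, or x_i sits at its bound 0.
            x.append(5 ** i - lhs if tight[i - 1] else 0)
        vertices.append(tuple(x))
    return vertices


def objective(x):
    """Objective sum_j 2**(dim-j) * x_j of the assumed formulation."""
    dim = len(x)
    return sum(2 ** (dim - j) * x[j - 1] for j in range(1, dim + 1))


verts = klee_minty_vertices(3)
best = max(verts, key=objective)
print(len(set(verts)), best, objective(best))  # prints: 8 (0, 0, 125) 125
```

Under this assumed formulation the three-dimensional cube has 8 distinct corners, matching the count quoted in the entry, and the objective is largest at the corner (0, 0, 125).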

Gaussian Elimination

In mathematics, Gaussian elimination, also known as row reduction, is an algorithm for solving systems of linear equations. It consists of a sequence of row-wise operations performed on the corresponding matrix of coefficients. This method can also be used to compute the rank of a matrix, the determinant of a square matrix, and the inverse of an invertible matrix. The method is named after Carl Friedrich Gauss (1777–1855). To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are three types of elementary row operations:

* Swapping two rows,
* Multiplying a row by a nonzero number,
* Adding a multiple of one row to another row.

Using these operations, a matrix can always be transformed into an upper triangular matrix (possibly bordered by rows or columns of zeros), and in fact one that is in row echelon form. Once all of the ...
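The three elementary row operations can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the function names are hypothetical, exact rational arithmetic is used to avoid rounding issues, and each pivot is scaled to 1, which is a design choice and not something row echelon form strictly requires.

```python
from fractions import Fraction


def row_echelon(matrix):
    """Return a row echelon form of `matrix` using exact rational arithmetic."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        src = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if src is None:
            continue  # no pivot in this column
        # Operation 1: swap two rows.
        rows[pivot_row], rows[src] = rows[src], rows[pivot_row]
        # Operation 2: multiply a row by a nonzero number (scale the pivot to 1).
        pivot = rows[pivot_row][col]
        rows[pivot_row] = [v / pivot for v in rows[pivot_row]]
        # Operation 3: add a multiple of one row to another row.
        for r in range(pivot_row + 1, n_rows):
            factor = rows[r][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
    return rows


def rank(matrix):
    """Rank = number of nonzero rows in a row echelon form."""
    return sum(1 for row in row_echelon(matrix) if any(v != 0 for v in row))


print(row_echelon([[0, 2], [1, 3]]))
print(rank([[1, 2], [2, 4]]))  # prints 1
```

The rank computation illustrates one of the additional uses mentioned above: once the matrix is in row echelon form, the rank can be read off as the number of nonzero rows.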

Average-case Complexity

In computational complexity theory, the average-case complexity of an algorithm is the amount of some computational resource (typically time) used by the algorithm, averaged over all possible inputs. It is frequently contrasted with worst-case complexity, which considers the maximal complexity of the algorithm over all possible inputs. There are three primary motivations for studying average-case complexity. First, although some problems may be intractable in the worst case, the inputs which elicit this behavior may rarely occur in practice, so the average-case complexity may be a more accurate measure of an algorithm's performance. Second, average-case complexity analysis provides tools and techniques to generate hard instances of problems which can be utilized in areas such as cryptography and derandomization. Third, average-case complexity allows discriminating the most efficient algorithm in practice among algorithms of equivalent best-case complexity (for instance Quicksort ...
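The contrast between averaging over inputs and taking the maximum over inputs can be made concrete with a small Python sketch. Two assumptions are made here that the entry does not state: the resource counted is the number of key comparisons made by insertion sort, and the inputs of size n are all permutations of n distinct keys, weighted uniformly. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations


def insertion_sort_comparisons(items):
    """Sort a copy of `items` and return the number of key comparisons made."""
    a = list(items)
    comparisons = 0
    for i in range(1, len(a)):
        j = i
        while j > 0:
            comparisons += 1  # compare a[j - 1] with a[j]
            if a[j - 1] <= a[j]:
                break
            a[j - 1], a[j] = a[j], a[j - 1]
            j -= 1
    return comparisons


def worst_and_average(n):
    """Worst-case and average comparison counts over all permutations of n keys."""
    counts = [insertion_sort_comparisons(p) for p in permutations(range(n))]
    return max(counts), Fraction(sum(counts), len(counts))


worst, average = worst_and_average(3)
print(worst, average)  # prints: 3 8/3
```

For three keys the worst case needs 3 comparisons, while the uniform average is 8/3, slightly lower because some orderings let the inner loop stop early.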

Interior-point Method

Interior-point methods (also referred to as barrier methods or IPMs) are algorithms for solving linear and non-linear convex optimization problems. IPMs combine two advantages of previously known algorithms:

* Theoretically, their run-time is polynomial, in contrast to the simplex method, which has exponential run-time in the worst case.
* Practically, they run as fast as the simplex method, in contrast to the ellipsoid method, which has polynomial run-time in theory but is very slow in practice.

In contrast to the simplex method, which traverses the boundary of the feasible region, and the ellipsoid method, which bounds the feasible region from outside, an IPM reaches a best solution by traversing the interior of the feasible region, hence the name.

History: An interior point method was discovered by Soviet mathematician I. I. Dikin in 1967. The method was reinvented in the U.S. in the mid-1980s. In 1984, Narendra Karmarkar developed a method for linear programming ...

Bland's Rule

In mathematical optimization, Bland's rule (also known as Bland's algorithm, Bland's anti-cycling rule or Bland's pivot rule) is an algorithmic refinement of the simplex method for linear optimization. With Bland's rule, the simplex algorithm solves feasible linear optimization problems without cycling. The original simplex algorithm starts with an arbitrary basic feasible solution, and then changes the basis in order to decrease the minimization target and find an optimal solution. Usually, the target indeed decreases in every step, and thus after a bounded number of steps an optimal solution is found. However, there are examples of degenerate linear programs on which the original simplex algorithm cycles forever. It gets stuck at a basic feasible solution (a corner of the feasible polytope) and changes bases in a cyclic way without decreasing the minimization target. Such cycles are avoided by Bland's rule for choosing a column to enter and a column to leave the basis. Bland's ...

Dimension (vector Space)

In mathematics, the dimension of a vector space V is the cardinality (i.e., the number of vectors) of a basis of V over its base field. It is sometimes called Hamel dimension (after Georg Hamel) or algebraic dimension to distinguish it from other types of dimension. For every vector space there exists a basis, and all bases of a vector space have equal cardinality; as a result, the dimension of a vector space is uniquely defined. We say V is finite-dimensional if the dimension of V is finite, and infinite-dimensional if its dimension is infinite. The dimension of the vector space V over the field F can be written as \dim_F(V) or as [V : F], read "dimension of V over F". When F can be inferred from context, \dim(V) is typically written.

Examples: The vector space \R^3 has \left\{ (1,0,0), (0,1,0), (0,0,1) \right\} as a standard basis, and therefore \dim_{\R}(\R^3) = 3. More generally, \dim_{\R}(\R^n) = n, and even more generally, \dim_F(F^n) = n for any field ...

Linear Optimization

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome (such as maximum profit or lowest cost) in a mathematical model whose requirements and objective are represented by linear relationships. Linear programming is a special case of mathematical programming (also known as mathematical optimization). More formally, linear programming is a technique for the optimization of a linear objective function, subject to linear equality and linear inequality constraints. Its feasible region is a convex polytope, which is a set defined as the intersection of finitely many half spaces, each of which is defined by a linear inequality. Its objective function is a real-valued affine (linear) function defined on this polytope. A linear programming algorithm finds a point in the polytope where this function has the largest (or smallest) value if such a point exists. Linear programs are problems that can be expressed in standard form as: ...

Analysis Of Algorithms

In computer science, the analysis of algorithms is the process of finding the computational complexity of algorithms: the amount of time, storage, or other resources needed to execute them. Usually, this involves determining a function that relates the size of an algorithm's input to the number of steps it takes (its time complexity) or the number of storage locations it uses (its space complexity). An algorithm is said to be efficient when this function's values are small, or grow slowly compared to a growth in the size of the input. Different inputs of the same size may cause the algorithm to have different behavior, so best, worst and average case descriptions might all be of practical interest. When not otherwise specified, the function describing the performance of an algorithm is usually an upper bound, determined from the worst case inputs to the algorithm. The term "analysis of algorithms" was coined by Donald Knuth. Algorithm analysis is an important part of a broa ...

Worst-case Complexity

In computer science (specifically computational complexity theory), the worst-case complexity measures the resources (e.g. running time, memory) that an algorithm requires given an input of arbitrary size (commonly denoted as n in asymptotic notation). It gives an upper bound on the resources required by the algorithm. In the case of running time, the worst-case time complexity indicates the longest running time performed by an algorithm given any input of size n, and thus guarantees that the algorithm will finish in the indicated period of time. The order of growth (e.g. linear, logarithmic) of the worst-case complexity is commonly used to compare the efficiency of two algorithms. The worst-case complexity of an algorithm should be contrasted with its average-case complexity, which is an average measure of the amount of resources the algorithm uses on a random input.

Definition: Given a model of computation and an algorithm \mathsf{A} that halts on each input s, the mapping ...

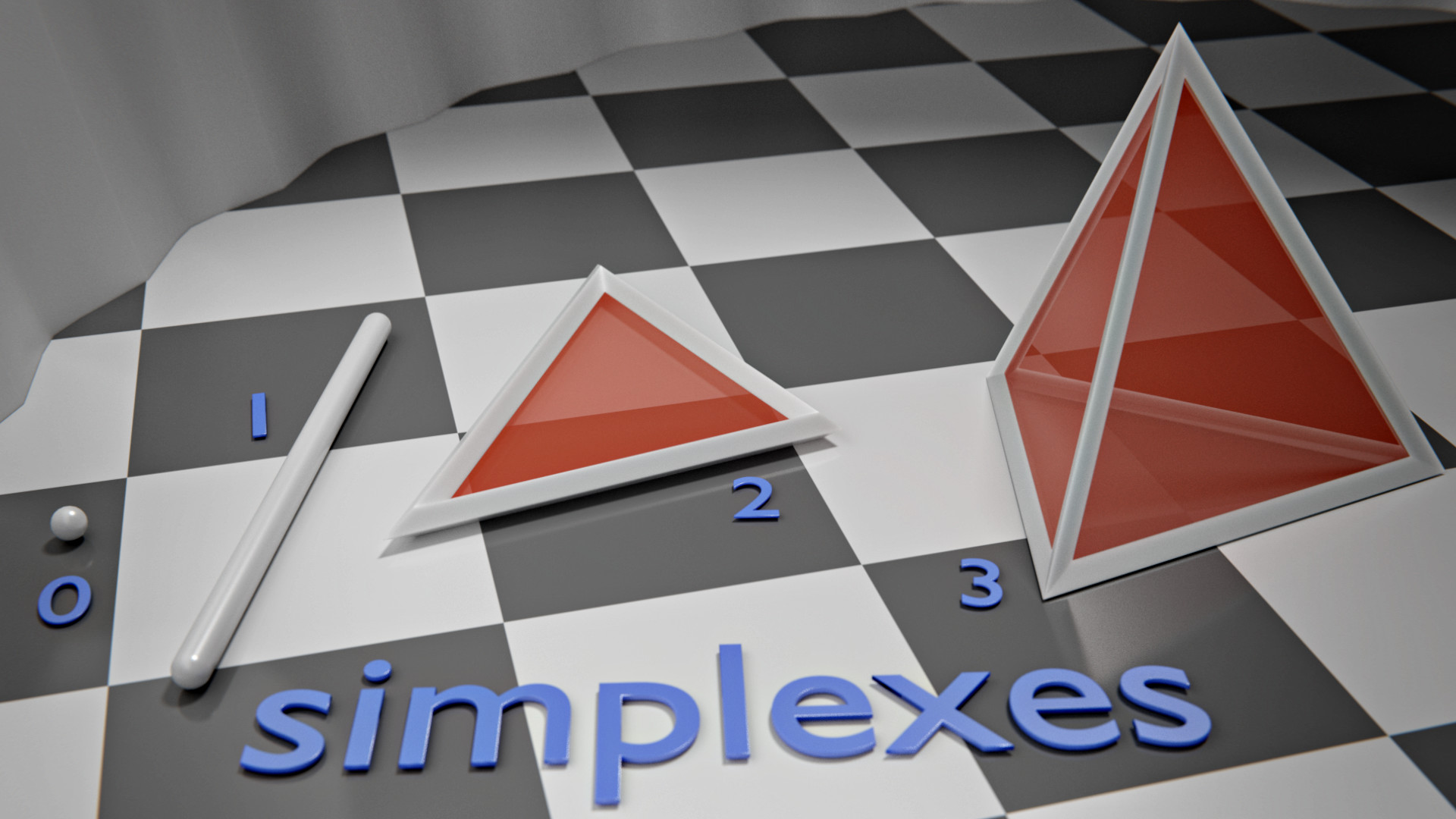

Simplex Description

In geometry, a simplex (plural: simplexes or simplices) is a generalization of the notion of a triangle or tetrahedron to arbitrary dimensions. The simplex is so-named because it represents the simplest possible polytope in any given dimension. For example,

* a 0-dimensional simplex is a point,
* a 1-dimensional simplex is a line segment,
* a 2-dimensional simplex is a triangle,
* a 3-dimensional simplex is a tetrahedron, and
* a 4-dimensional simplex is a 5-cell.

Specifically, a k-simplex is a k-dimensional polytope that is the convex hull of its k + 1 vertices. More formally, suppose the k + 1 points u_0, \dots, u_k are affinely independent, which means that the k vectors u_1 - u_0, \dots, u_k - u_0 are linearly independent. Then, the simplex determined by them is the set of points C = \left\{ \theta_0 u_0 + \dots + \theta_k u_k \;\middle|\; \sum_{i=0}^{k} \theta_i = 1 \text{ and } \theta_i \ge 0 \text{ for } i = 0, \dots, k \right\}. A regular simplex is a simplex that is also a regular polytope. A regular k-simplex may be constructed from a regular (k - 1)-simplex by connecting a new vertex to all original vertices by the common edge l ...
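The affine-independence condition and the convex-hull description can be checked numerically. The Python sketch below is illustrative only: the function names are hypothetical, points are given as tuples of integers or fractions, and linear independence of the difference vectors is tested by computing a rank with exact elimination. A point of the simplex is formed as a weighted sum of the vertices with nonnegative weights that sum to 1.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a list of vectors, by exact Gaussian elimination."""
    m = [[Fraction(v) for v in row] for row in rows]
    n_cols = len(m[0]) if m else 0
    r = 0
    for col in range(n_cols):
        src = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if src is None:
            continue  # no pivot available in this column
        m[r], m[src] = m[src], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][col] / m[r][col]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def affinely_independent(points):
    """True if the vectors u_1 - u_0, ..., u_k - u_0 are linearly independent."""
    u0, rest = points[0], points[1:]
    diffs = [[a - b for a, b in zip(u, u0)] for u in rest]
    return rank(diffs) == len(diffs)


def simplex_point(points, weights):
    """Weighted sum of the vertices; weights must be >= 0 and sum to 1."""
    if any(w < 0 for w in weights) or sum(weights) != 1:
        raise ValueError("weights must be nonnegative and sum to 1")
    dim = len(points[0])
    return [sum(w * p[d] for w, p in zip(weights, points)) for d in range(dim)]


triangle = [(0, 0), (1, 0), (0, 1)]
third = Fraction(1, 3)
print(affinely_independent(triangle))                # prints True
print(simplex_point(triangle, [third, third, third]))
```

With equal weights the resulting point is the centroid of the triangle; three collinear points such as (0, 0), (1, 1), (2, 2) fail the independence test and so do not determine a 2-simplex.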

Multivariate Polynomial

In mathematics, a polynomial is a mathematical expression consisting of indeterminates (also called variables) and coefficients, that involves only the operations of addition, subtraction, multiplication and exponentiation to nonnegative integer powers, and has a finite number of terms. An example of a polynomial of a single indeterminate x is x^2 - 4x + 7. An example with three indeterminates is x^3 + 2xyz^2 - yz + 1. Polynomials appear in many areas of mathematics and science. For example, they are used to form polynomial equations, which encode a wide range of problems, from elementary word problems to complicated scientific problems; they are used to define polynomial functions, which appear in settings ranging from basic chemistry and physics to economics and social science; and they are used in calculus and numerical analysis to approximate other functions. In advanced mathematics, polynomials are used to construct polynomial rings and algebraic varieties, which are central concepts in algebra and algebr ...

Degree Of A Polynomial

In mathematics, the degree of a polynomial is the highest of the degrees of the polynomial's monomials (individual terms) with non-zero coefficients. The degree of a term is the sum of the exponents of the variables that appear in it, and thus is a non-negative integer. For a univariate polynomial, the degree of the polynomial is simply the highest exponent occurring in the polynomial. The term order has been used as a synonym of degree but, nowadays, may refer to several other concepts (see Order of a polynomial (disambiguation)). For example, the polynomial 7x^2y^3 + 4x - 9, which can also be written as 7x^2y^3 + 4x^1y^0 - 9x^0y^0, has three terms. The first term has a degree of 5 (the sum of the powers 2 and 3), the second term has a degree of 1, and the last term has a degree of 0. Therefore, the polynomial has a degree of 5, which is the highest degree of any term. To determine the degree of a polynomial that is not in standard form, such as (x+1)^2 - (x-1)^2, one c ...
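The worked example 7x^2y^3 + 4x - 9 can be reproduced with a short Python sketch. The representation is an assumption made for illustration: a polynomial already in standard form is stored as a dictionary mapping exponent tuples (one exponent per variable) to coefficients, and the function names are hypothetical.

```python
def term_degree(exponents):
    """Degree of a single term: the sum of its variables' exponents."""
    return sum(exponents)


def polynomial_degree(terms):
    """Highest term degree among terms with non-zero coefficients.

    `terms` maps exponent tuples to coefficients, e.g. {(2, 3): 7} for 7x^2y^3.
    Returns None when every coefficient is zero; the degree of the zero
    polynomial is left undefined in this sketch.
    """
    degrees = [term_degree(exps) for exps, coeff in terms.items() if coeff != 0]
    return max(degrees) if degrees else None


# 7x^2y^3 + 4x^1y^0 - 9x^0y^0, with exponents ordered as (x, y)
p = {(2, 3): 7, (1, 0): 4, (0, 0): -9}
print([term_degree(e) for e in p])  # prints [5, 1, 0]
print(polynomial_degree(p))         # prints 5
```

Terms with a zero coefficient are skipped, which matches the definition's restriction to monomials with non-zero coefficients.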