IBM A2

The IBM A2 is an open source, massively multicore-capable, multithreaded 64-bit Power ISA processor core designed by IBM using the Power ISA v.2.06 specification. Processors based on the A2 core range from a 2.3 GHz version with 16 cores consuming 65 W to a less powerful four-core version consuming 20 W at 1.4 GHz.

Design

The A2 core is a processor core designed for customization and embedded use in system-on-chip devices, and was developed following IBM's game console processor designs, the Xbox 360 processor and the Cell processor for the PlayStation 3.

A2I

A2I is a 4-way simultaneous multithreaded core which implements the 64-bit Power ISA v.2.06 Book III-E embedded platform specification with support for the embedded hypervisor features. It was designed for implementations with many cores, focusing on high throughput and many simultaneous threads. A2I was written in VHDL. The core has 4×32 64-bit general purpose registers (GPR) with ...

Open Source

Open source is source code that is made freely available for possible modification and redistribution. Products include permission to use and view the source code, design documents, or content of the product. The open source model is a decentralized software development model that encourages open collaboration. A main principle of open source software development is peer production, with products such as source code, blueprints, and documentation freely available to the public. The open source movement in software began as a response to the limitations of proprietary code. The model is used for projects such as open source appropriate technology and open source drug discovery. Open source promotes universal access via an open-source or free license to a product's design or blueprint, and universal redistribution of that design or blueprint. Before the phrase "open source" became widely adopted, developers and producers used a variety of other terms, suc ...

GitHub

GitHub is a proprietary developer platform that allows developers to create, store, manage, and share their code. It uses Git to provide distributed version control, and GitHub itself provides access control, bug tracking, software feature requests, task management, continuous integration, and wikis for every project. Headquartered in California, GitHub, Inc. has been a subsidiary of Microsoft since 2018. It is commonly used to host open source software development projects. GitHub reported having over 100 million developers and more than 420 million repositories, including at least 28 million public repositories. It is the world's largest source code host. Over five billion developer contributions were made to more than 500 million open source projects in 2024.

About

Founding

The development of the GitHub platform began on October 19, 2007. The site was launched in April 2008 by Tom ...

PCI Express

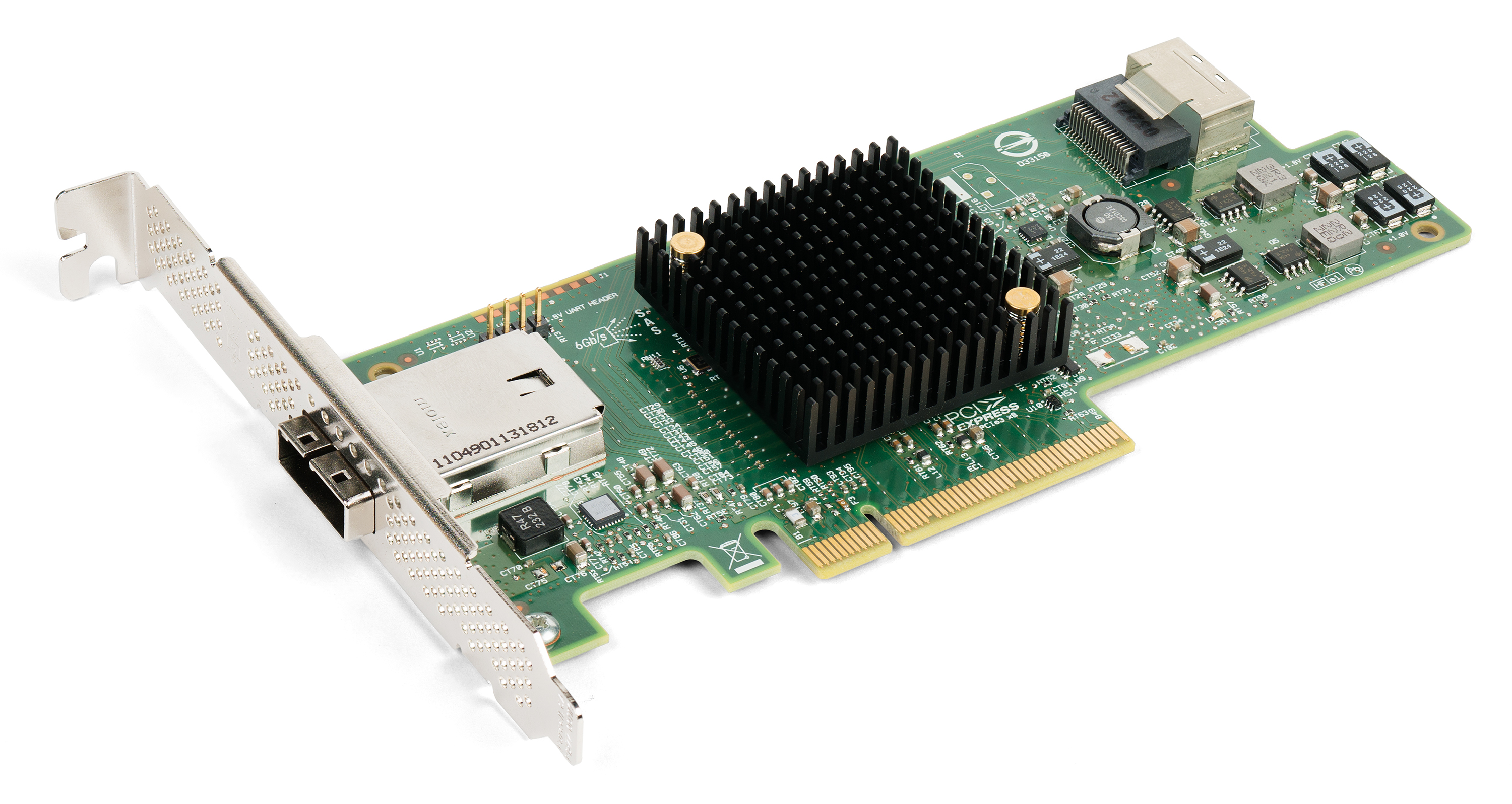

PCI Express (Peripheral Component Interconnect Express), officially abbreviated as PCIe, is a high-speed standard used to connect hardware components inside computers. It is designed to replace older expansion bus standards such as PCI, PCI-X, and AGP (Accelerated Graphics Port). Developed and maintained by the PCI-SIG (PCI Special Interest Group), PCIe is commonly used to connect graphics cards, sound cards, Wi-Fi and Ethernet adapters, and storage devices such as solid-state drives and hard disk drives. Compared to earlier standards, PCIe supports faster data transfer, uses fewer pins, takes up less space, and allows devices to be added or removed while the computer is running (hot swapping). It also includes better error detection and supports newer features like I/O virtualization for advanced computing needs. PCIe connections are made through "lanes," which are pairs of wires that send and receive data. Devices can use one or more lanes ...

10 Gigabit Ethernet

10 Gigabit Ethernet (abbreviated 10GE, 10GbE, or 10 GigE) is a group of computer networking technologies for transmitting Ethernet frames at a rate of 10 gigabits per second. It was first defined by the IEEE 802.3ae-2002 standard. Unlike previous Ethernet standards, 10GbE defines only full-duplex point-to-point links, which are generally connected by network switches. Shared-medium CSMA/CD operation has not been carried over from the previous generations of Ethernet standards, so half-duplex operation and repeater hubs do not exist in 10GbE. The first standard for faster 100 Gigabit Ethernet links was approved in 2010. The 10GbE standard encompasses a number of different physical layer (PHY) standards. A networking device, such as a switch or a network interface controller, may have different PHY types through pluggable PHY modules, such as those based on SFP+. Like previous versions of Ethernet, 10GbE can use either copper or fiber cabling. Maximum distance over copper ...

Regular Expression

A regular expression (shortened as regex or regexp), sometimes referred to as a rational expression, is a sequence of characters that specifies a match pattern in text. Usually such patterns are used by string-searching algorithms for "find" or "find and replace" operations on strings, or for input validation. Regular expression techniques are developed in theoretical computer science and formal language theory. The concept of regular expressions began in the 1950s, when the American mathematician Stephen Cole Kleene formalized the concept of a regular language. They came into common use with Unix text-processing utilities. Different syntaxes for writing regular expressions have existed since the 1980s, one being the POSIX standard and another, widely used, being the Perl syntax. Regular expressions are used in search engines, in search and replace dialogs of word processors and text editors, in text processing utilities such as sed and AWK, and in lexical analysis ...
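The three uses named above ("find", "find and replace", and input validation) can be sketched with Python's standard `re` module. The sample text, the deliberately simple e-mail pattern, and the function names are assumptions made for illustration only; real e-mail validation is considerably more involved.

```python
import re

# Hypothetical sample text, used only for illustration.
SAMPLE = "Contact: alice@example.com, bob@example.org"

# A deliberately simple pattern: local part, "@", domain, ".", suffix.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def find_emails(text):
    """'Find': return every substring that matches the pattern."""
    return EMAIL.findall(text)


def redact_emails(text):
    """'Find and replace': substitute each match with a placeholder."""
    return EMAIL.sub("<redacted>", text)


def is_four_digit_year(text):
    """Input validation: the whole string must be exactly four digits."""
    return re.fullmatch(r"\d{4}", text) is not None
```

Note that `fullmatch` is used for validation so that the pattern must cover the entire input, whereas `findall` and `sub` search anywhere inside the string.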

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ...
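A minimal sketch of the lossless case, using Python's standard `zlib` module as one convenient encoder and decoder (the choice of `zlib` and the repetitive sample input are assumptions for illustration, not something the text above prescribes). Highly redundant input shrinks substantially, and decoding restores it byte for byte.

```python
import zlib


def roundtrip(data: bytes):
    """Encode with zlib, then decode, returning both results."""
    compressed = zlib.compress(data)        # encoder: remove redundancy
    restored = zlib.decompress(compressed)  # decoder: reverse the process
    return compressed, restored


# Hypothetical input with obvious statistical redundancy.
original = b"abc" * 1000
compressed, restored = roundtrip(original)

print(len(original), "bytes before,", len(compressed), "bytes after")
print("lossless:", restored == original)
```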

Cryptography

Cryptography, or cryptology (from Ancient Greek kryptós, "hidden, secret"; and graphein, "to write", or -logia, "study", respectively), is the practice and study of techniques for secure communication in the presence of adversarial behavior. More generally, cryptography is about constructing and analyzing protocols that prevent third parties or the public from reading private messages. Modern cryptography exists at the intersection of the disciplines of mathematics, computer science, information security, electrical engineering, digital signal processing, physics, and others. Core concepts related to information security (data confidentiality, data integrity, authentication, and non-repudiation) are also central to cryptography. Practical applications of cryptography include electronic commerce, chip-based payment cards, digital currencies, computer passwords, and military communications. ...
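Two of the concepts listed above, data integrity and authentication, can be illustrated with a keyed hash (HMAC) from Python's standard library. This is only a sketch: the function names and the choice of SHA-256 are assumptions for illustration, and it does not cover confidentiality or non-repudiation.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the message using a shared key."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(key, message), tag)
```

A receiver holding the same key accepts a message only if `verify` returns `True`; a modified message or a different key yields a different tag.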

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM); in modern CPUs, caches are by far the largest part of the chip by area. SRAM is not always used for all levels (of I- or D-cache), or even any level; sometimes the later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (M ...

International Solid-State Circuits Conference

The International Solid-State Circuits Conference (ISSCC) is a global forum for the presentation of advances in solid-state circuits and systems-on-a-chip. The conference is held every year in February at the San Francisco Marriott Marquis in downtown San Francisco. ISSCC is sponsored by the IEEE Solid-State Circuits Society. According to The Register, "The ISSCC event is the second event of each new year, following the Consumer Electronics Show, where new PC processors and sundry other computing gadgets are brought to market."

History of ISSCC

Early participants in the inaugural conference in 1954 belonged to the Institute of Radio Engineers (IRE) Circuit Theory Group and the IRE subcommittee of Transistor Circuits. The conference was held in Philadelphia, and local chapters of IRE and the American Institute of Electrical Engineers (AIEE) were in attendance. Later on, AIEE and IRE would merge to become the present-day IEEE. The first conference consisted of papers from six organizations: Bel ...

Routing

Routing is the process of selecting a path for traffic in a network or between or across multiple networks. Broadly, routing is performed in many types of networks, including circuit-switched networks, such as the public switched telephone network (PSTN), and computer networks, such as the Internet. In packet switching networks, routing is the higher-level decision making that directs network packets from their source toward their destination through intermediate network nodes by specific packet forwarding mechanisms. Packet forwarding is the transit of network packets from one network interface to another. Intermediate nodes are typically network hardware devices such as routers, gateways, firewalls, or switches. General-purpose computers also forward packets and perform routing, although they have no specially optimized hardware for the task. T ...
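Path selection through intermediate nodes can be sketched as a shortest-path search. The example below uses Dijkstra's algorithm over a small hypothetical topology with made-up link costs; it is one common way to pick a path, not a description of any particular routing protocol.

```python
import heapq


def shortest_path(graph, source, dest):
    """Return (total_cost, path) for the cheapest route, or None if unreachable.

    `graph` maps each node to a dict of {neighbor: link_cost}.
    """
    queue = [(0, source, [source])]  # (cost so far, current node, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dest:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return None


# Hypothetical four-node network; the costs are illustrative only.
NETWORK = {
    "A": {"B": 1, "C": 4},
    "B": {"C": 2, "D": 5},
    "C": {"D": 1},
    "D": {},
}

print(shortest_path(NETWORK, "A", "D"))
```

Here the cheapest route from A to D passes through the intermediate nodes B and C, even though more direct routes with fewer hops exist.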

Packet Switching

In telecommunications, packet switching is a method of grouping data into short messages in fixed format, i.e. packets, that are transmitted over a digital network. Packets consist of a header and a payload. Data in the header is used by networking hardware to direct the packet to its destination, where the payload is extracted and used by an operating system, application software, or higher layer protocols. Packet switching is the primary basis for data communications in computer networks worldwide. During the early 1960s, American engineer Paul Baran developed a concept he called "distributed adaptive message block switching", with the goal of providing a fault-tolerant, efficient routing method for telecommunication messages as part of a research program at the RAND Corporation, funded by the United States Department of Defense. His ideas contradicted t ...
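The header-plus-payload structure can be sketched with Python's standard `struct` module. The 8-byte header layout used here (source, destination, sequence number, payload length) is a hypothetical format invented for illustration and does not correspond to any real protocol.

```python
import struct

# Hypothetical header: four unsigned 16-bit fields in network byte order.
HEADER = struct.Struct("!HHHH")  # source, destination, sequence, payload length


def packetize(src, dst, message: bytes, size=8):
    """Split a message into packets, each with a header and a payload."""
    packets = []
    for seq, start in enumerate(range(0, len(message), size)):
        chunk = message[start:start + size]
        packets.append(HEADER.pack(src, dst, seq, len(chunk)) + chunk)
    return packets


def reassemble(packets):
    """Read each header, extract the payload, and restore the original order."""
    parts = []
    for packet in packets:
        _src, _dst, seq, length = HEADER.unpack_from(packet)
        parts.append((seq, packet[HEADER.size:HEADER.size + length]))
    return b"".join(chunk for _, chunk in sorted(parts))
```

Because each packet carries its own sequence number, `reassemble` recovers the message even when packets arrive out of order.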

Network Processor

A network processor is an integrated circuit which has a feature set specifically targeted at the networking application domain. Network processors are typically software-programmable devices and have generic characteristics similar to the general-purpose central processing units that are commonly used in many different types of equipment and products.

History of development

In modern telecommunications networks, information (voice, video, data) is transferred as packet data (termed packet switching), in contrast to older telecommunications networks that carried information as analog signals, such as the public switched telephone network (PSTN) or analog TV/radio networks. The processing of these packets has resulted in the creation of integrated circuits (IC) that are optimised to deal with this form of packet data. Network processors have specific features or architectures that are provided to enhance and optimise ...