
Generative Adversarial Network

A generative adversarial network (GAN) is a class of machine learning frameworks and a prominent framework for approaching generative artificial intelligence. The concept was initially developed by Ian Goodfellow and his colleagues in June 2014. In a GAN, two neural networks compete with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics. Though originally proposed as a form of generative model for unsupervised learning, GANs have also proved useful for semi-supervised learning, fully supervised learning, and reinforcement learning. The core idea of a GAN is based on the "indirect" training through the discriminator, another neural network that can tell ho ...
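
The zero-sum game described above is commonly written as a minimax objective. As a sketch, following the formulation usually attributed to the original 2014 paper, with symbols that do not appear in the summary above (D for the discriminator, G for the generator, p_data for the data distribution, p_z for a noise prior):

```latex
\min_G \max_D \; V(D, G)
  = \mathbb{E}_{x \sim p_{\mathrm{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator tries to increase V by scoring real samples high and generated samples low, while the generator tries to decrease the same quantity, which is what makes the game zero-sum.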

Markov Kernel

In probability theory, a Markov kernel (also known as a stochastic kernel or probability kernel) is a map that, in the general theory of Markov processes, plays the role that the transition matrix plays in the theory of Markov processes with a finite state space. Formal definition: Let (X,\mathcal A) and (Y,\mathcal B) be measurable spaces. A Markov kernel with source (X,\mathcal A) and target (Y,\mathcal B), sometimes written as \kappa:(X,\mathcal A)\to(Y,\mathcal B), is a function \kappa : \mathcal B \times X \to [0,1] with the following properties: (1) for every (fixed) B_0 \in \mathcal B, the map x \mapsto \kappa(B_0, x) is \mathcal A-measurable; (2) for every (fixed) x_0 \in X, the map B \mapsto \kappa(B, x_0) is a probability measure on (Y, \mathcal B). In other words, it associates to each point x \in X a probability measure \kappa(dy, x): B \mapsto \kappa(B, x) on (Y,\mathcal B) such that, for every measurable set B\in\mathcal B, the map x\mapsto \kappa(B, x) is measur ...
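
In the finite case the definition reduces to a row-stochastic transition matrix, which may make it easier to picture. The sketch below assumes a hypothetical two-state space X = Y = {0, 1} with made-up transition probabilities; on a finite space with the power-set sigma-algebra, the measurability condition holds automatically, so only the probability-measure condition needs checking.

```python
from fractions import Fraction

# Hypothetical transition matrix: P[x][y] is the probability of moving from x to y.
P = [
    [Fraction(9, 10), Fraction(1, 10)],
    [Fraction(1, 2), Fraction(1, 2)],
]


def kappa(B, x):
    """kappa(B, x): probability that the next state lies in the set B, given the current state x."""
    return sum(P[x][y] for y in B)


def is_markov_kernel(matrix):
    """Check that every row is a probability measure: nonnegative entries that sum to 1."""
    return all(all(p >= 0 for p in row) and sum(row) == 1 for row in matrix)
```

For each fixed x, `kappa(., x)` is a probability measure on subsets of {0, 1}; exact fractions are used so the row sums can be compared to 1 without rounding error.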

Universal Approximation Theorem

In the mathematical theory of artificial neural networks, universal approximation theorems are theorems of the following form: Given a family of neural networks, for each function f from a certain function space, there exists a sequence of neural networks \phi_1, \phi_2, \dots from the family, such that \phi_n \to f according to some criterion. That is, the family of neural networks is dense in the function space. The most popular version states that feedforward networks with non-polynomial activation functions are dense in the space of continuous functions between two Euclidean spaces, with respect to the compact convergence topology. Universal approximation theorems are existence theorems: they state only that such a sequence \phi_1, \phi_2, \dots \to f exists, and do not provide any way to actually find it. Nor do they guarantee that any method, such as backpropagation, will actually find such a sequence. Any method for searching the space of neural ...

Independent Component Analysis

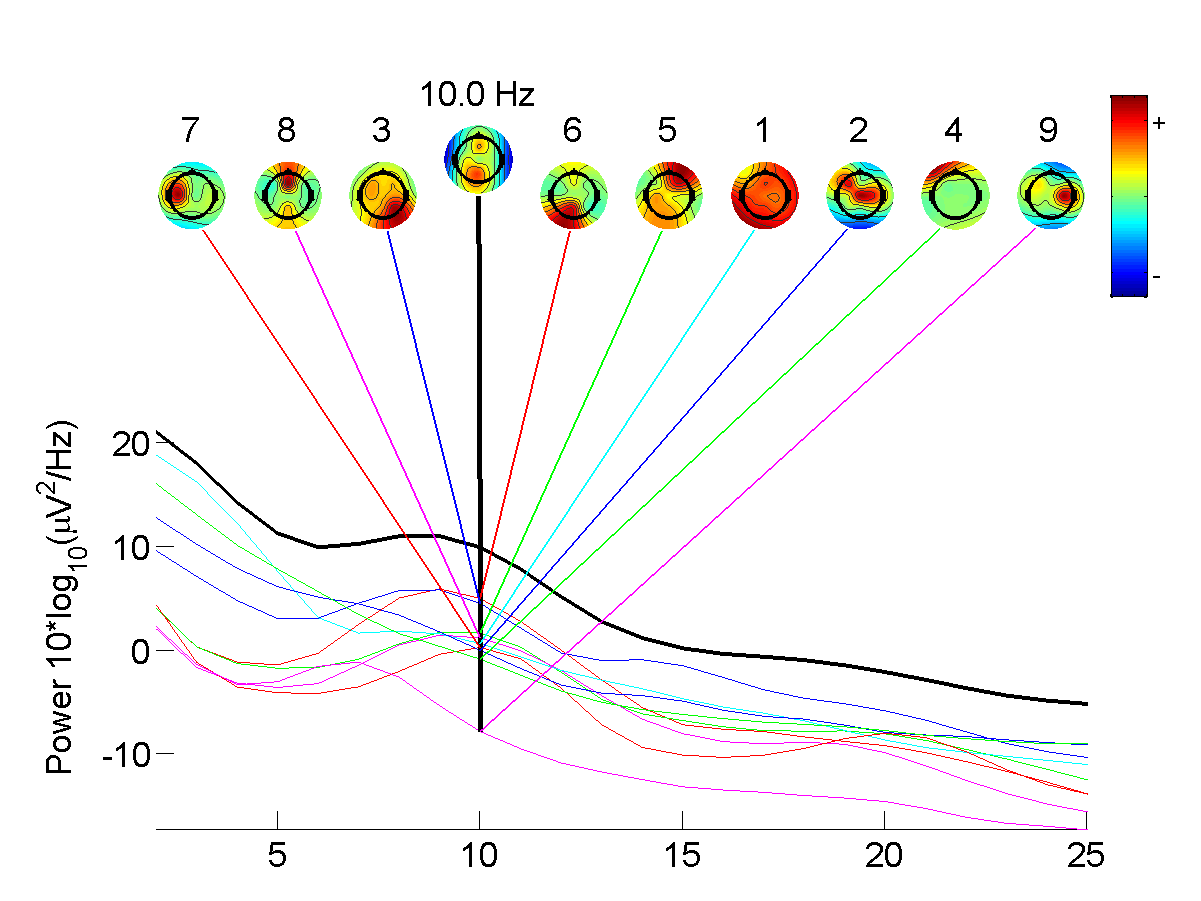

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA was invented by Jeanny Hérault and Christian Jutten in 1985. ICA is a special case of blind source separation. A common example application of ICA is the "cocktail party problem" of listening in on one person's speech in a noisy room. Introduction: Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence ...

Boltzmann Machine

A Boltzmann machine (also called Sherrington–Kirkpatrick model with external field or stochastic Ising model), named after Ludwig Boltzmann, is a spin-glass model with an external field, i.e., a Sherrington–Kirkpatrick model, that is a stochastic Ising model. It is a statistical physics technique applied in the context of cognitive science. It is also classified as a Markov random field. Boltzmann machines are theoretically intriguing because of the locality and Hebbian nature of their training algorithm (being trained by Hebb's rule), and because of their parallelism and the resemblance of their dynamics to simple physical processes. Boltzmann machines with unconstrained connectivity have not proven useful for practical problems in machine learning or inference, but if the connectivity is properly constrained, the learning can be made efficient enough to be useful for practical problems. Th ...

WaveNet

WaveNet is a deep neural network for generating raw audio. It was created by researchers at London-based AI firm DeepMind. The technique, outlined in a paper in September 2016, is able to generate relatively realistic-sounding human-like voices by directly modelling waveforms using a neural network method trained with recordings of real speech. Tests with US English and Mandarin reportedly showed that the system outperforms Google's best existing text-to-speech (TTS) systems, although as of 2016 its text-to-speech synthesis was still less convincing than actual human speech. WaveNet's ability to generate raw waveforms means that it can model any kind of audio, including music. History: Generating speech from text is an increasingly common task thanks to the popularity of software such as Apple's Siri, Microsoft's Cortana, Amazon Alexa and the Google Assistant. Most such systems use a variation of a technique that involves concatenating sound fragments together to form recognis ...

Types Of Deep Generative Models

Type may refer to: Science and technology Computing * Typing, producing text via a keyboard, typewriter, etc. * Data type, collection of values used for computations. * File type * TYPE (DOS command), a command to display contents of a file. * Type (Unix), a command in POSIX shells that gives information about commands. * Type safety, the extent to which a programming language discourages or prevents type errors. * Type system, defines a programming language's response to data types. Mathematics * Type (model theory) * Type theory, basis for the study of type systems * Arity or type, the number of operands a function takes * Type, any proposition or set in the intuitionistic type theory * Type, of an entire function ** Exponential type Biology * Type (biology), which fixes a scientific name to a taxon * Dog type, categorization by use or function of domestic dogs Lettering * Type is a design concept for lettering used in typography which helped bring about modern textual printi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Flow-based Generative Model

A flow-based generative model is a generative model used in machine learning that explicitly models a probability distribution by leveraging a normalizing flow, which is a statistical method using the change-of-variable law of probabilities to transform a simple distribution into a complex one. The direct modeling of likelihood provides many advantages. For example, the negative log-likelihood can be directly computed and minimized as the loss function. Additionally, novel samples can be generated by sampling from the initial distribution and applying the flow transformation. In contrast, many alternative generative modeling methods, such as the variational autoencoder (VAE) and the generative adversarial network, do not explicitly represent the likelihood function. Method: Let z_0 be a (possibly multivariate) random variable with distribution p_0(z_0). For i = 1, ..., K, let z_i = f_i(z_{i-1}) be a sequence of random variables transformed from z_0. The functions f_1, ..., f_K should be ...
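
The change-of-variable rule mentioned above can be illustrated with a single one-dimensional step. The sketch below assumes a hypothetical affine flow f(z) = a*z + b applied to a standard normal base distribution; the function names and the default values of `a` and `b` are illustrative, not taken from the source.

```python
import math


def log_p0(z):
    """Log-density of the standard normal base distribution p_0."""
    return -0.5 * (z * z + math.log(2 * math.pi))


def flow_forward(z0, a=2.0, b=1.0):
    """Hypothetical one-step flow f(z) = a*z + b, invertible whenever a != 0."""
    return a * z0 + b


def flow_log_density(x, a=2.0, b=1.0):
    """Log-density of x = f(z0) by change of variables: log p_0(f^{-1}(x)) - log|f'(z0)|."""
    z0 = (x - b) / a  # invert the flow
    return log_p0(z0) - math.log(abs(a))  # subtract the log-Jacobian of the forward map
```

With these defaults the transformed variable is normal with mean `b` and standard deviation `|a|`, so `flow_log_density` can be checked against the closed-form Gaussian log-density. The negative of this value is the quantity a flow model would minimize as its loss.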

Convolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data including text, images and audio. Convolution-based networks are the de-facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced, in some cases, by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are mitigated by the regularization that comes from using shared weights over fewer connections. For example, for each neuron in the fully-connected layer, 10,000 weights would be required for processing an image sized 100 × 100 pixels. However, applying cascaded convolution (or cross-correlation) kernels, only 25 weights for each convolutio ...
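
The weight counts quoted above can be reproduced with a few lines of arithmetic. The sketch below assumes that the figure of 25 corresponds to a 5 × 5 kernel (the summary is truncated before it says so) and includes a plain, unpadded, stride-1 cross-correlation to show how one small set of weights is reused at every image position. All function names are illustrative.

```python
def dense_weights_per_neuron(height, width):
    """A fully-connected neuron needs one weight per input pixel."""
    return height * width


def kernel_weights(kernel_h, kernel_w):
    """A convolution kernel needs one weight per kernel cell, regardless of image size."""
    return kernel_h * kernel_w


def cross_correlate2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), reusing the same weights everywhere."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]
```

Under these assumptions, `dense_weights_per_neuron(100, 100)` gives the 10,000 weights mentioned in the text, while `kernel_weights(5, 5)` gives 25.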

Deconvolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data including text, images and audio. Convolution-based networks are the de-facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced, in some cases, by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from using shared weights over fewer connections. For example, for each neuron in the fully-connected layer, 10,000 weights would be requir ...

OpenAI

OpenAI, Inc. is an American artificial intelligence (AI) organization founded in December 2015 and headquartered in San Francisco, California. It aims to develop "safe and beneficial" artificial general intelligence (AGI), which it defines as "highly autonomous systems that outperform humans at most economically valuable work". As a leading organization in the ongoing AI boom, OpenAI is known for the GPT family of large language models, the DALL-E series of text-to-image models, and a text-to-video model named Sora. Its release of ChatGPT in November 2022 has been credited with catalyzing widespread interest in generative AI. The organization has a complex corporate structure. As of April 2025, it is led by the non-profit OpenAI, Inc., registered in Delaware, and has multiple for-profit subsidiaries including OpenAI Holdings, LLC and OpenAI Global, LLC. Microsoft has invested US$13 billion ...

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used for training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example, and does so efficiently, computing the gradient one layer at a time, iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term backpropagation refers only to an algorithm for efficiently computing the gradient, not how the gradient is used; but the term is often used loosely to refer to the entire learning algorithm, including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ...
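
The backward, layer-by-layer use of the chain rule can be seen on a very small example. The sketch below assumes a hypothetical two-weight scalar network, h = tanh(w1 * x), y = w2 * h, with squared-error loss; the network, names, and loss are chosen for illustration and are not taken from the source.

```python
import math


def forward(w1, w2, x, t):
    """Forward pass: hidden activation, output, and loss 0.5 * (y - t)^2."""
    h = math.tanh(w1 * x)
    y = w2 * h
    loss = 0.5 * (y - t) ** 2
    return h, y, loss


def backward(w1, w2, x, t):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    h, y, _ = forward(w1, w2, x, t)
    dy = y - t                     # dL/dy
    dw2 = dy * h                   # dL/dw2 = dL/dy * dy/dw2
    dh = dy * w2                   # dL/dh, reused for the earlier layer
    dw1 = dh * (1.0 - h * h) * x   # dL/dw1 via tanh'(u) = 1 - tanh(u)^2
    return dw1, dw2
```

The intermediate term `dy` is computed once and reused for both weights, which is the redundancy-avoiding step the text describes. A common sanity check is to compare these gradients against central finite differences of the loss.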