
FastICA

FastICA is an efficient and popular algorithm for independent component analysis invented by Aapo Hyvärinen at Helsinki University of Technology. Like most ICA algorithms, FastICA seeks an orthogonal rotation of prewhitened data, through a fixed-point iteration scheme, that maximizes a measure of non-Gaussianity of the rotated components. Non-Gaussianity serves as a proxy for statistical independence, which is a very strong condition and requires infinite data to verify. FastICA can also be derived as an approximate Newton iteration. Algorithm: prewhitening the data. Let $\mathbf{X} := (x_{ij}) \in \mathbb{R}^{N \times M}$ denote the input data matrix, where M, the number of columns, is the number of samples of mixed signals and N, the number of rows, is the number of independent source signals. The input data matrix $\mathbf{X}$ must be prewhitened, or centered and whitened, before applying the FastICA algori ...
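The prewhitening step mentioned above can be sketched for the smallest interesting case, N = 2 mixed signals. This is a minimal illustration rather than the FastICA reference implementation: the function name `prewhiten_2d`, the synthetic sources, and the mixing coefficients are all assumptions made for the example, and the whitening used here (rotate onto the covariance eigenvectors, then rescale) is only one of several equivalent choices. It also assumes the two rows are not perfectly correlated, so both eigenvalues are positive.

```python
import math
import random


def prewhiten_2d(X):
    """Center and whiten a 2 x M data matrix given as two lists of floats."""
    m = len(X[0])
    # Center: subtract each row's mean.
    means = [sum(row) / m for row in X]
    r0 = [v - means[0] for v in X[0]]
    r1 = [v - means[1] for v in X[1]]
    # Sample covariance of the centered rows: [[a, b], [b, c]].
    a = sum(v * v for v in r0) / m
    c = sum(v * v for v in r1) / m
    b = sum(u * v for u, v in zip(r0, r1)) / m
    # Closed-form eigendecomposition of the symmetric 2 x 2 covariance.
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_gap = math.hypot((a - c) / 2.0, b)
    lam1 = (a + c) / 2.0 + half_gap
    lam2 = (a + c) / 2.0 - half_gap
    # Whiten: project onto the eigenvectors, then rescale to unit variance.
    z0 = [(cos_t * u + sin_t * v) / math.sqrt(lam1) for u, v in zip(r0, r1)]
    z1 = [(-sin_t * u + cos_t * v) / math.sqrt(lam2) for u, v in zip(r0, r1)]
    return [z0, z1]


# Hypothetical data: two uniform sources, linearly mixed and offset.
random.seed(0)
s1 = [random.uniform(-1, 1) for _ in range(1000)]
s2 = [random.uniform(-1, 1) for _ in range(1000)]
X = [
    [2.0 * p + 1.0 * q + 3.0 for p, q in zip(s1, s2)],
    [1.0 * p - 1.5 * q - 1.0 for p, q in zip(s1, s2)],
]
Z = prewhiten_2d(X)
```

After this step the rows of `Z` have zero mean, unit variance, and zero sample covariance, so any remaining unmixing is a pure rotation, which is what the fixed-point iteration then searches for.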

Independent Component Analysis

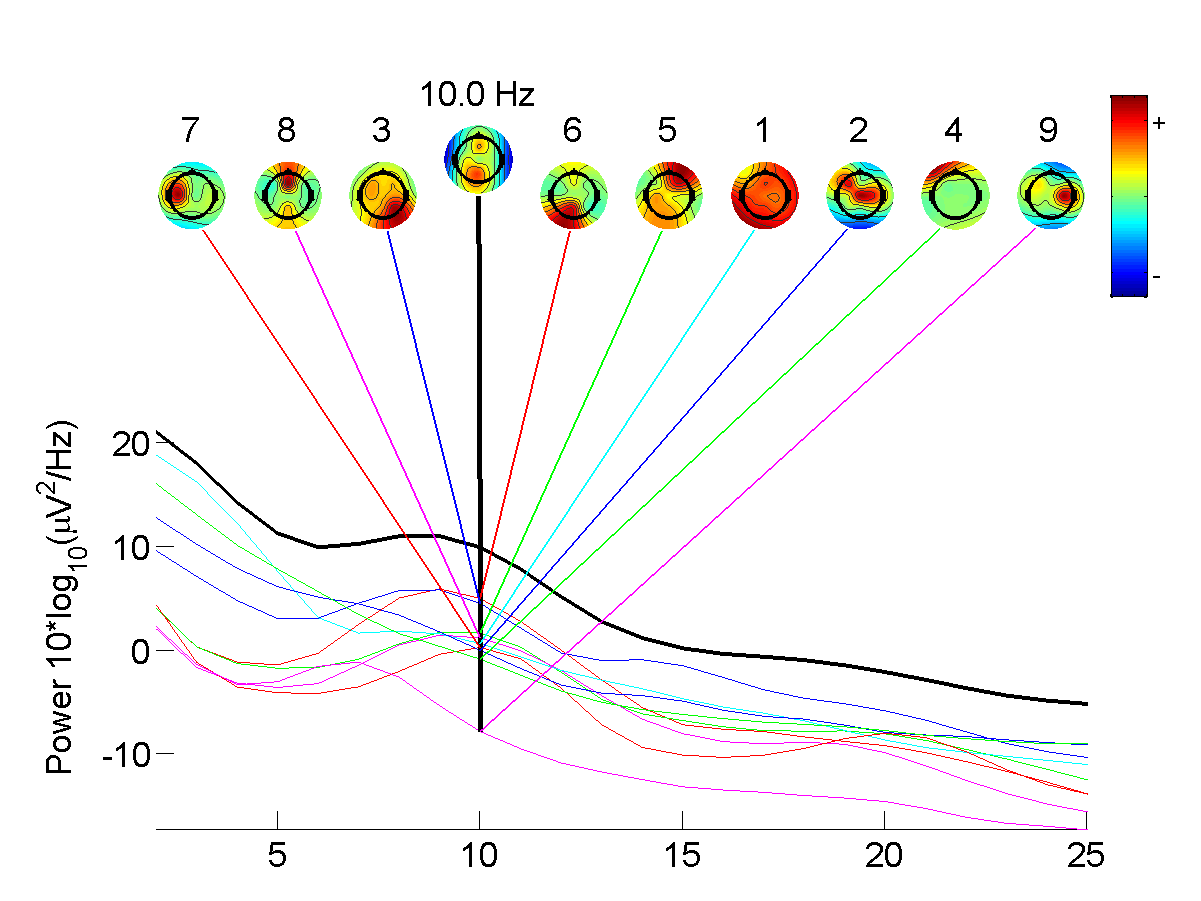

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA was invented by Jeanny Hérault and Christian Jutten in 1985. ICA is a special case of blind source separation. A common example application of ICA is the "cocktail party problem" of listening in on one person's speech in a noisy room. Introduction: Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence ...

Infomax

Infomax, or the "principle of maximum information preservation", is an optimization principle for artificial neural networks and other information processing systems. It prescribes that a function that maps a set of input values x to a set of output values z(x) should be chosen or learned so as to maximize the average Shannon mutual information between x and z(x), subject to a set of specified constraints and/or noise processes. Infomax algorithms are learning algorithms that perform this optimization process. The principle was described by Linsker in 1988. The objective function is called the InfoMax objective. As the InfoMax objective is difficult to compute exactly, a related notion uses two models giving two outputs z_1(x), z_2(x), and maximizes the mutual information between these. This contrastive InfoMax objective is a lower bound to the InfoMax objective. Infomax, in its zero-noise limit, is related to the "principle of redundancy reduction" proposed for biological se ...
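The quantity being maximized, the Shannon mutual information between x and z(x), can be made concrete with a small discrete sketch. It is not an Infomax learning algorithm: it only evaluates the objective for three hand-picked noiseless maps on a uniform input. The helper names `mutual_information` and `joint_from_map` are assumptions introduced for the example.

```python
import math
from collections import defaultdict


def mutual_information(joint):
    """Shannon mutual information in bits for a joint pmf {(x, z): probability}."""
    px = defaultdict(float)
    pz = defaultdict(float)
    for (x, z), p in joint.items():
        px[x] += p
        pz[z] += p
    return sum(
        p * math.log2(p / (px[x] * pz[z]))
        for (x, z), p in joint.items()
        if p > 0.0
    )


def joint_from_map(f, inputs):
    """Joint pmf of (x, f(x)) when x is drawn uniformly from inputs."""
    joint = defaultdict(float)
    for x in inputs:
        joint[(x, f(x))] += 1.0 / len(inputs)
    return dict(joint)


inputs = [0, 1, 2, 3]  # uniform input carrying 2 bits
mi_identity = mutual_information(joint_from_map(lambda x: x, inputs))
mi_parity = mutual_information(joint_from_map(lambda x: x % 2, inputs))
mi_constant = mutual_information(joint_from_map(lambda x: 0, inputs))
```

Under these assumptions the identity map preserves both input bits, the parity map preserves one, and the constant map preserves none, so an Infomax criterion would rank the three maps in that order.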

Aapo Hyvärinen

Aapo Johannes Hyvärinen (born 1970 in Helsinki) is a Finnish professor of computer science at the University of Helsinki, known for his research in independent component analysis. Education and career: Hyvärinen was born in Helsinki, studied mathematics at the University of Helsinki, and received his Doctor of Technology in information science in 1997 at the Helsinki University of Technology under the supervision of Erkki Oja. His doctoral thesis, titled "Independent component analysis: A neural network approach", introduced the FastICA algorithm. Since then, Hyvärinen has conducted research especially on independent component analysis, as well as score matching (also known as the Hyvärinen scoring rule). In November 2007, he was appointed as a professor at the University of Helsinki. Hyvärinen has been a member of the Finnish Academy of Sciences since 2016. From August 2016 to March 2019, he held a professorship in machine learning at the Gatsby Computatio ...

Independence (probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. M ...
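The difference between pairwise and mutual independence can be checked exactly on a standard textbook example: two fair coin flips, with a third event defined as "exactly one head". The event names and helper functions below are assumptions chosen for this illustration; exact fractions are used so the product rule can be tested with equality.

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space: two fair coin flips, all four outcomes equally likely.
outcomes = list(product([0, 1], repeat=2))


def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))


def first_heads(w):
    return w[0] == 1


def second_heads(w):
    return w[1] == 1


def exactly_one_head(w):
    return w[0] != w[1]


events = [first_heads, second_heads, exactly_one_head]

# Pairwise independence: P(E and F) = P(E) P(F) for every pair.
pairwise = all(
    prob(lambda w, e=e, f=f: e(w) and f(w)) == prob(e) * prob(f)
    for e, f in combinations(events, 2)
)

# Mutual independence additionally needs the product rule for all three at once.
p_all = prob(lambda w: all(e(w) for e in events))
mutual = pairwise and p_all == prob(events[0]) * prob(events[1]) * prob(events[2])
```

Here every pair of events satisfies the product rule, but all three cannot occur together, so the collection is pairwise independent without being mutually independent.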

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, pers ...

RapidMiner

RapidMiner is a data science platform that analyses the collective impact of an organization's data. It was acquired by Altair Engineering in September 2022. History: RapidMiner, formerly known as YALE (Yet Another Learning Environment), was developed by Ralf Klinkenberg, Ingo Mierswa, and Simon Fischer in 2001 at the Artificial Intelligence Unit of the Technical University of Dortmund. Starting in 2006, its development was driven by Rapid-I, a company founded by Ingo Mierswa and Ralf Klinkenberg in the same year. In 2013, the company rebranded from Rapid-I to RapidMiner. Description: RapidMiner uses a client/server model with the server offered either on-premises or in public or private cloud infrastructures. RapidMiner provides data mining and machine learning procedures including data loading and transformation (ETL), data preprocessing and visualization, predictive analytics and statistical modeling, evaluation, and deployment. RapidMiner is written ...

SourceForge

SourceForge is a web service founded by Geoffrey B. Jeffery, Tim Perdue, and Drew Streib in November 1999. SourceForge provides a centralized software discovery platform, including an online platform for managing and hosting open-source software projects, and a directory for comparing and reviewing B2B software that lists over 104,500 business software titles. It provides source code repository hosting, bug tracking, mirroring of downloads for load balancing, a wiki for documentation, developer and user mailing lists, user-support forums, user-written reviews and ratings, a news bulletin, micro-blog for publishing project updates, and other features. SourceForge was one of the first to offer this service free of charge to open-source projects. Since 2012, the website has run on Apache Allura software. SourceForge offers free hosting and free access to tools for developers of free and open-source software. The SourceForge repository claimed to host more than 502,00 ...

R Programming Language

R is a programming language for statistical computing and data visualization. It has been widely adopted in the fields of data mining, bioinformatics, data analysis, and data science. The core R language is extended by a large number of software packages, which contain reusable code, documentation, and sample data. Some of the most popular R packages are in the tidyverse collection, which enhances functionality for visualizing, transforming, and modelling data, and improves the ease of programming (according to the authors and users). R is free and open-source software distributed under the GNU General Public License. The language is implemented primarily in C, Fortran, and R itself. Precompiled executables are available for the major operating systems (including Linux, macOS, and Microsoft Windows). Its core is an interpreted language with a native command line interface. In addition, multiple third-party applications are available as graphical user interf ...

IT++

Information technology (IT) is a set of related fields within information and communications technology (ICT) that encompass computer systems, software, programming languages, data and information processing, and storage. Information technology is an application of computer science and computer engineering. The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones. Several products or services within an economy are associated with information technology, including computer hardware, software, electronics, semiconductors, internet, telecom equipment, and e-commerce. An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a computer system (including all hardware, software, and peripheral equipment) operated by a limited group of IT users, and an IT project usually refer ...

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Unsupervised Learning

Unsupervised learning is a framework in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Other frameworks in the spectrum of supervisions include weak- or semi-supervision, where a small portion of the data is tagged, and self-supervision. Some researchers consider self-supervised learning a form of unsupervised learning. Conceptually, unsupervised learning divides into the aspects of data, training, algorithm, and downstream applications. Typically, the dataset is harvested cheaply "in the wild", such as a massive text corpus obtained by web crawling, with only minor filtering (such as Common Crawl). This compares favorably to supervised learning, where the dataset (such as the ImageNet1000) is typically constructed manually, which is much more expensive. There were algorithms designed specifically for unsupervised learning, such as clustering algorithms like k-means, dimensionality reduction techniques l ...