|

Eytzinger Binary Search

In computer science, multiplicative binary search is a variation of binary search that uses a specific permutation of keys in an array instead of the sorted order used by regular binary search. Multiplicative binary search was first described by Thomas Standish in 1980. This algorithm was originally proposed to simplify the midpoint index calculation on small computers without efficient division or shift operations. On modern hardware, the cache-friendly nature of multiplicative binary search makes it suitable for out-of-core search on block-oriented storage as an alternative to B-trees and B+ trees. For optimal performance, the branching factor of a B-tree or B+-tree must match the block size of the file system that it is stored on. The permutation used by multiplicative binary search places the optimal number of keys in the first (root) block, regardless of block size. Multiplicative binary search is used by some optimizing compilers to implement switch statements. Algor ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Search Algorithm

In computer science, a search algorithm is an algorithm designed to solve a search problem. Search algorithms work to retrieve information stored within particular data structure, or calculated in the Feasible region, search space of a problem domain, with Continuous or discrete variable, either discrete or continuous values. Although Search engine (computing), search engines use search algorithms, they belong to the study of information retrieval, not algorithmics. The appropriate search algorithm to use often depends on the data structure being searched, and may also include prior knowledge about the data. Search algorithms can be made faster or more efficient by specially constructed database structures, such as search trees, hash maps, and database indexes. Search algorithms can be classified based on their mechanism of searching into three types of algorithms: linear, binary, and hashing. Linear search algorithms check every record for the one associated with a target key i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

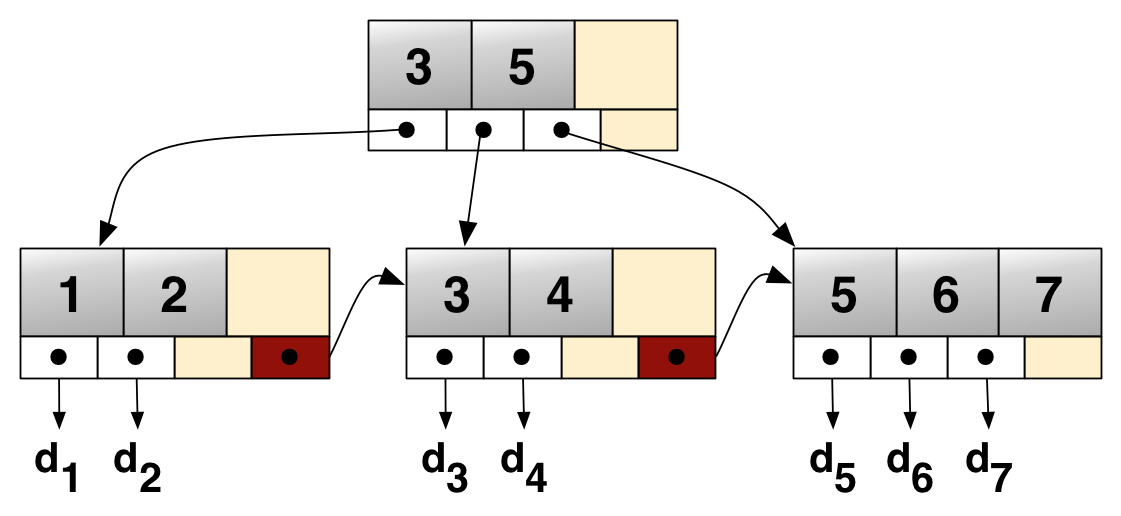

B+ Tree

A B+ tree is an m-ary tree with a variable but often large number of children per node. A B+ tree consists of a root, internal nodes and leaves. The root may be either a leaf or a node with two or more children. A B+ tree can be viewed as a B-tree in which each node contains only keys (not key–value pairs), and to which an additional level is added at the bottom with linked leaves. The primary value of a B+ tree is in storing data for efficient retrieval in a Block (data storage), block-oriented storage context—in particular, filesystems. This is primarily because unlike binary search trees, B+ trees have very high fanout (number of pointers to child nodes in a node, typically on the order of 100 or more), which reduces the number of I/O operations required to find an element in the tree. History There is no single paper introducing the B+ tree concept. Instead, the notion of maintaining all data in leaf nodes is repeatedly brought up as an interesting variant of the B- ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Binary Search Tree

In computer science, a binary search tree (BST), also called an ordered or sorted binary tree, is a Rooted tree, rooted binary tree data structure with the key of each internal node being greater than all the keys in the respective node's left subtree and less than the ones in its right subtree. The time complexity of operations on the binary search tree is Time complexity#Linear time, linear with respect to the height of the tree. Binary search trees allow Binary search algorithm, binary search for fast lookup, addition, and removal of data items. Since the nodes in a BST are laid out so that each comparison skips about half of the remaining tree, the lookup performance is proportional to that of binary logarithm. BSTs were devised in the 1960s for the problem of efficient storage of labeled data and are attributed to Conway Berners-Lee and David_Wheeler_(computer_scientist), David Wheeler. The performance of a binary search tree is dependent on the order of insertion of the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Switch Statement

In computer programming languages, a switch statement is a type of selection control mechanism used to allow the value of a variable or expression to change the control flow of program execution via search and map. Switch statements function somewhat similarly to the if statement used in programming languages like C/ C++, C#, Visual Basic .NET, Java and exist in most high-level imperative programming languages such as Pascal, Ada, C/ C++, C#, Visual Basic .NET, Java, and in many other types of language, using such keywords as switch, case, select, or inspect. Switch statements come in two main variants: a structured switch, as in Pascal, which takes exactly one branch, and an unstructured switch, as in C, which functions as a type of goto. The main reasons for using a switch include improving clarity, by reducing otherwise repetitive coding, and (if the heuristics permit) also offering the potential for faster execution through easier compiler optimization in many ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Optimizing Compiler

An optimizing compiler is a compiler designed to generate code that is optimized in aspects such as minimizing program execution time, memory usage, storage size, and power consumption. Optimization is generally implemented as a sequence of optimizing transformations, a.k.a. compiler optimizations algorithms that transform code to produce semantically equivalent code optimized for some aspect. Optimization is limited by a number of factors. Theoretical analysis indicates that some optimization problems are NP-complete, or even undecidable. Also, producing perfectly ''optimal'' code is not possible since optimizing for one aspect often degrades performance for another. Optimization is a collection of heuristic methods for improving resource usage in typical programs. Categorization Local vs. global scope Scope describes how much of the input code is considered to apply optimizations. Local scope optimizations use information local to a basic block. Since basic blocks cont ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Tree (graph Theory)

In graph theory, a tree is an undirected graph in which any two vertices are connected by path, or equivalently a connected acyclic undirected graph. A forest is an undirected graph in which any two vertices are connected by path, or equivalently an acyclic undirected graph, or equivalently a disjoint union of trees. A directed tree, oriented tree,See .See . polytree,See . or singly connected networkSee . is a directed acyclic graph (DAG) whose underlying undirected graph is a tree. A polyforest (or directed forest or oriented forest) is a directed acyclic graph whose underlying undirected graph is a forest. The various kinds of data structures referred to as trees in computer science have underlying graphs that are trees in graph theory, although such data structures are generally rooted trees. A rooted tree may be directed, called a directed rooted tree, either making all its edges point away from the root—in which case it is called an arborescence or out-tree� ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Branching Factor

In computing, tree data structures, and game theory, the branching factor is the number of children at each node, the outdegree. If this value is not uniform, an ''average branching factor'' can be calculated. For example, in chess, if a "node" is considered to be a legal position, the average branching factor has been said to be about 35, and a statistical analysis of over 2.5 million games revealed an average of 31. This means that, on average, a player has about 31 to 35 legal moves at their disposal at each turn. By comparison, the average branching factor for the game Go is 250. Higher branching factors make algorithms that follow every branch at every node, such as exhaustive brute force searches, computationally more expensive due to the exponentially increasing number of nodes, leading to combinatorial explosion. For example, if the branching factor is 10, then there will be 10 nodes one level down from the current position, 102 (or 100) nodes two levels down, 103 ( ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

B-tree

In computer science, a B-tree is a self-balancing tree data structure that maintains sorted data and allows searches, sequential access, insertions, and deletions in logarithmic time. The B-tree generalizes the binary search tree, allowing for nodes with more than two children. Unlike other self-balancing binary search trees, the B-tree is well suited for storage systems that read and write relatively large blocks of data, such as databases and file systems. History While working at Boeing Research Labs, Rudolf Bayer and Edward M. McCreight invented B-trees to efficiently manage index pages for large random-access files. The basic assumption was that indices would be so voluminous that only small chunks of the tree could fit in the main memory. Bayer and McCreight's paper ''Organization and maintenance of large ordered indices'' was first circulated in July 1970 and later published in '' Acta Informatica''. Bayer and McCreight never explained what, if anything, the ''B ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Array Data Structure

In computer science, an array is a data structure consisting of a collection of ''elements'' (value (computer science), values or variable (programming), variables), of same memory size, each identified by at least one ''array index'' or ''key'', a collection of which may be a tuple, known as an index tuple. An array is stored such that the position (memory address) of each element can be computed from its index tuple by a mathematical formula. The simplest type of data structure is a linear array, also called a one-dimensional array. For example, an array of ten 32-bit (4-byte) integer variables, with indices 0 through 9, may be stored as ten Word (data type), words at memory addresses 2000, 2004, 2008, ..., 2036, (in hexadecimal: 0x7D0, 0x7D4, 0x7D8, ..., 0x7F4) so that the element with index ''i'' has the address 2000 + (''i'' × 4). The memory address of the first element of an array is called first address, foundation address, or base address. Because the mathematical conc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Block (data Storage)

In computing (specifically data transmission and data storage), a block, sometimes called a physical record, is a sequence of bytes or bits, usually containing some whole number of records, having a fixed length; a ''block size''. Data thus structured are said to be ''blocked''. The process of putting data into blocks is called ''blocking'', while ''deblocking'' is the process of extracting data from blocks. Blocked data is normally stored in a data buffer, and read or written a whole block at a time. Blocking reduces the overhead and speeds up the handling of the data stream. For some devices, such as magnetic tape and CKD disk devices, blocking reduces the amount of external storage required for the data. Blocking is almost universally employed when storing data to 9-track magnetic tape, NAND flash memory, and rotating media such as floppy disks, hard disks, and optical discs. Most file systems are based on a block device, which is a level of abstraction for the hard ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Out-of-core

In computing, external memory algorithms or out-of-core algorithms are algorithms that are designed to process data that are too large to fit into a computer's main memory at once. Such algorithms must be optimized to efficiently fetch and access data stored in slow bulk memory ( auxiliary memory) such as hard drives or tape drives, or when memory is on a computer network. External memory algorithms are analyzed in the external memory model. Model External memory algorithms are analyzed in an idealized model of computation called the external memory model (or I/O model, or disk access model). The external memory model is an abstract machine similar to the RAM machine model, but with a cache in addition to main memory. The model captures the fact that read and write operations are much faster in a cache than in main memory, and that reading long contiguous blocks is faster than reading randomly using a disk read-and-write head. The running time of an algorithm in the externa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |