|

Executable And Linkable Format

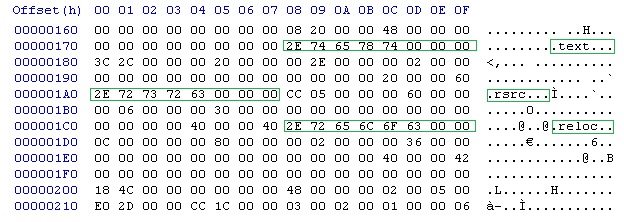

In computing, the Executable and Linkable FormatTool Interface Standard (TIS) Portable Formats SpecificationVersion 1.1'' (October 1993) (ELF, formerly named Extensible Linking Format) is a common standard file format for executable files, object code, Shared library, shared libraries, and core dumps. First published in the specification for the application binary interface (ABI) of the Unix operating system version named System V Release 4 (SVR4), and later in the Tool Interface Standard,Tool Interface Standard (TIS) Executable and Linking Format (ELF) SpecificationVersion 1.2'' (May 1995) it was quickly accepted among different vendors of Unix systems. In 1999, it was chosen as the standard binary file format for Unix and Unix-like systems on x86 processors by the #86open, 86open project. By design, the ELF format is flexible, extensible, and cross-platform. For instance, it supports different endiannesses and address sizes so it does not exclude any particular central process ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Unix System Laboratories

Unix System Laboratories (USL), sometimes written UNIX System Laboratories to follow relevant trademark guidelines of the time, was an American software laboratory and product development company that existed from 1989 through 1993. At first wholly, and then majority, owned by AT&T, it was responsible for the development and maintenance of one of the main branches of the Unix operating system, the UNIX System V Release 4 source code product. Through Univel, a partnership with Novell, it was also responsible for the development and production of the UnixWare packaged operating system for Intel architecture. In addition it developed Tuxedo, a transaction processing monitor, and was responsible for certain products related to the C++ programming language. USL was based in Summit, New Jersey, and its CEOs were Larry Dooling followed by Roel Pieper. Created from earlier AT&T entities, USL was, as industry writer Christopher Negus has observed, the culmination of AT&T's lon ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Central Processing Unit

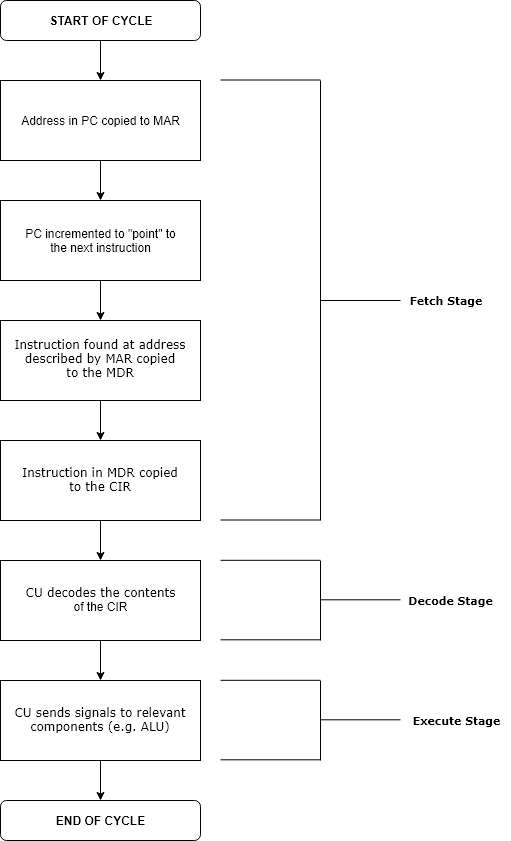

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary Processor (computing), processor in a given computer. Its electronic circuitry executes Instruction (computing), instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs). The form, CPU design, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic operation, arithmetic and Bitwise operation, logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the #Fetch, fetching (from memory), #Decode, decoding and ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

System V

Unix System V (pronounced: "System Five") is one of the first commercial versions of the Unix operating system. It was originally developed by AT&T and first released in 1983. Four major versions of System V were released, numbered 1, 2, 3, and 4. System V Release 4 (SVR4) was commercially the most successful version, being the result of an effort, marketed as ''Unix System Unification'', which solicited the collaboration of the major Unix vendors. It was the source of several common commercial Unix features. System V is sometimes abbreviated to SysV. , the AT&T-derived Unix market is divided between four System V variants: IBM's AIX, Hewlett Packard Enterprise's HP-UX and Oracle's Solaris, plus the free-software illumos forked from OpenSolaris. Overview Introduction System V was the successor to 1982's UNIX System III. While AT&T developed and sold hardware that ran System V, most customers ran a version from a reseller, based on AT&T's reference implementation. A ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Endianness

file:Gullivers_travels.jpg, ''Gulliver's Travels'' by Jonathan Swift, the novel from which the term was coined In computing, endianness is the order in which bytes within a word (data type), word of digital data are transmitted over a data communication medium or Memory_address, addressed (by rising addresses) in computer memory, counting only byte Bit_numbering#Bit significance and indexing, significance compared to earliness. Endianness is primarily expressed as big-endian (BE) or little-endian (LE), terms introduced by Danny Cohen (computer scientist), Danny Cohen into computer science for data ordering in an Internet Experiment Note published in 1980. Also published at The adjective ''endian'' has its origin in the writings of 18th century Anglo-Irish writer Jonathan Swift. In the 1726 novel ''Gulliver's Travels'', he portrays the conflict between sects of Lilliputians divided into those breaking the shell of a boiled egg from the big end or from the little end. By analogy, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Magic Number (programming)

In computer programming, a magic number is any of the following: * A unique value with unexplained meaning or multiple occurrences which could (preferably) be replaced with a named constant * A constant numerical or text value used to identify a file format or protocol ) * A distinctive unique value that is unlikely to be mistaken for other meanings (e.g., Universally Unique Identifiers) Unnamed numerical constants The term ''magic number'' or ''magic constant'' refers to the anti-pattern of using numbers directly in source code. This breaks one of the oldest rules of programming, dating back to the COBOL, FORTRAN and PL/1 manuals of the 1960s. In the following example that computes the price after tax, 1.05 is considered a magic number: price_tax = 1.05 * price The use of unnamed magic numbers in code obscures the developers' intent in choosing that number, increases opportunities for subtle errors, and makes it more difficult for the program to be adapted and extended ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

ASCII

ASCII ( ), an acronym for American Standard Code for Information Interchange, is a character encoding standard for representing a particular set of 95 (English language focused) printable character, printable and 33 control character, control characters a total of 128 code points. The set of available punctuation had significant impact on the syntax of computer languages and text markup. ASCII hugely influenced the design of character sets used by modern computers; for example, the first 128 code points of Unicode are the same as ASCII. ASCII encodes each code-point as a value from 0 to 127 storable as a seven-bit integer. Ninety-five code-points are printable, including digits ''0'' to ''9'', lowercase letters ''a'' to ''z'', uppercase letters ''A'' to ''Z'', and commonly used punctuation symbols. For example, the letter is represented as 105 (decimal). Also, ASCII specifies 33 non-printing control codes which originated with ; most of which are now obsolete. The control cha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

64-bit Computing

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer. From the software perspective, 64-bit computing means the use of machine code with 64-bit virtual memory addresses. However, not all 64-bit instruction sets support full 64-bit virtual memory addresses; x86-64 and AArch64, for example, support only 48 bits of virtual address, with the remaining 16 bits of the virtual address required to be all zeros (000...) or all ones (111...), and several 64-bit instruction sets support fewer than 64 bits of physical memory address. The term ''64-bit'' also describes a generation of computers in which 64-bit processors are the norm. 64 bits is a Word (computer architecture), word size that defines ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

32-bit Computing

In computer architecture, 32-bit computing refers to computer systems with a processor, memory, and other major system components that operate on data in a maximum of 32- bit units. Compared to smaller bit widths, 32-bit computers can perform large calculations more efficiently and process more data per clock cycle. Typical 32-bit personal computers also have a 32-bit address bus, permitting up to 4 GiB of RAM to be accessed, far more than previous generations of system architecture allowed. 32-bit designs have been used since the earliest days of electronic computing, in experimental systems and then in large mainframe and minicomputer systems. The first hybrid 16/32-bit microprocessor, the Motorola 68000, was introduced in the late 1970s and used in systems such as the original Apple Macintosh. Fully 32-bit microprocessors such as the HP FOCUS, Motorola 68020 and Intel 80386 were launched in the early to mid 1980s and became dominant by the early 1990s. This generat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Byte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol () refer to an 8-bit byte as an octet. Those bits in an octet are usually counted with numbering from 0 to 7 or 7 to 0 depending on the bit endianness. The size of the byte has historically been hardware-dependent and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words of 12, 18, 24, 30, 36, 48, or 60 bits, corresponding t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Run Time (program Lifecycle Phase)

Execution in computer and software engineering is the process by which a computer or virtual machine interprets and acts on the instructions of a computer program. Each instruction of a program is a description of a particular action which must be carried out, in order for a specific problem to be solved. Execution involves repeatedly following a " fetch–decode–execute" cycle for each instruction done by the control unit. As the executing machine follows the instructions, specific effects are produced in accordance with the semantics of those instructions. Programs for a computer may be executed in a batch process without human interaction or a user may type commands in an interactive session of an interpreter. In this case, the "commands" are simply program instructions, whose execution is chained together. The term run is used almost synonymously. A related meaning of both "to run" and "to execute" refers to the specific action of a user starting (or ''launching'' o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

ELF Executable And Linkable Format Diagram By Ange Albertini

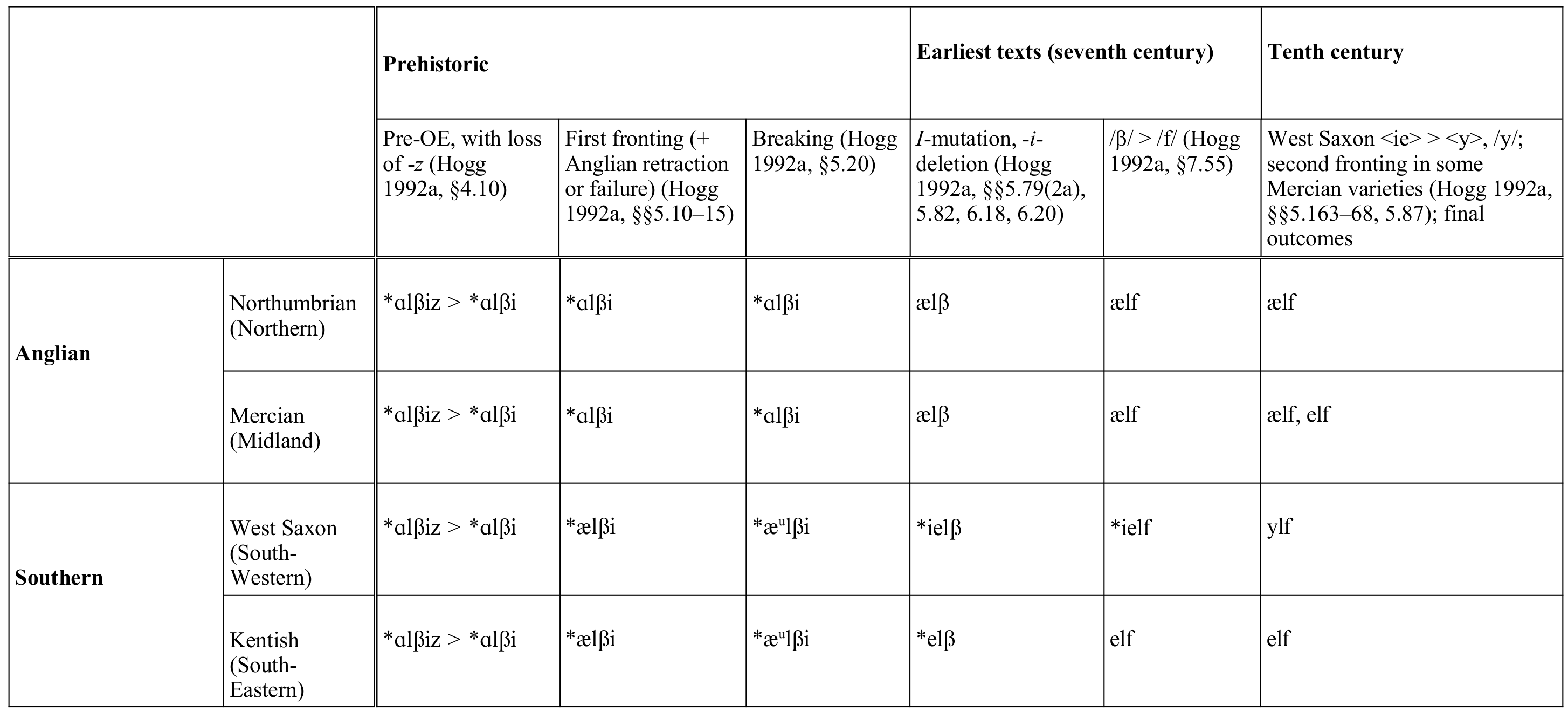

An elf (: elves) is a type of humanoid supernatural being in Germanic peoples, Germanic folklore. Elves appear especially in Norse mythology, North Germanic mythology, being mentioned in the Icelandic ''Poetic Edda'' and the ''Prose Edda''. In medieval Germanic languages, Germanic-speaking cultures, elves were thought of as beings with magical powers and supernatural beauty, ambivalent towards everyday people and capable of either helping or hindering them. Beliefs varied considerably over time and space and flourished in both pre-Christian and Christian cultures. The word ''elf'' is found throughout the Germanic languages. It seems originally to have meant 'white being'. However, reconstructing the early concept depends largely on texts written by Christians, in Old English, Old and Middle English, medieval German, and Old Norse. These associate elves variously with the gods of Norse mythology, with causing illness, with magic, and with beauty and seduction. After the med ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Memory Segmentation

Memory segmentation is an operating system memory management technique of dividing a computer's primary memory into segments or sections. In a computer system using segmentation, a reference to a memory location includes a value that identifies a segment and an offset (memory location) within that segment. Segments or sections are also used in object files of compiled programs when they are linked together into a program image and when the image is loaded into memory. Segments usually correspond to natural divisions of a program such as individual routines or data tables so segmentation is generally more visible to the programmer than paging alone. Segments may be created for program modules, or for classes of memory usage such as code segments and data segments. Certain segments may be shared between programs. Segmentation was originally invented as a method by which system software could isolate software processes ( tasks) and data they are using. It was intended to in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |