
Erasure Channel

In coding theory and information theory, a binary erasure channel (BEC) is a communications channel model. A transmitter sends a bit (a zero or a one), and the receiver either receives the bit correctly or, with some probability P_e, receives a message that the bit was not received ("erased").

Definition: A binary erasure channel with erasure probability P_e is a channel with binary input, ternary output, and probability of erasure P_e. That is, let X be the transmitted random variable with alphabet \{0, 1\}. Let Y be the received variable with alphabet \{0, 1, \text{e}\}, where \text{e} is the erasure symbol. Then the channel is characterized by the conditional probabilities:

\begin{align}
\operatorname{Pr}[Y = 0 \mid X = 0] &= 1 - P_e \\
\operatorname{Pr}[Y = 0 \mid X = 1] &= 0 \\
\operatorname{Pr}[Y = 1 \mid X = 0] &= 0 \\
\operatorname{Pr}[Y = 1 \mid X = 1] &= 1 - P_e \\
\operatorname{Pr}[Y = \text{e} \mid X = 0] &= P_e \\
\operatorname{Pr}[Y = \text{e} \mid X = 1] &= P_e
\end{align}

Capacity: The channel capacity of a BEC is 1 - P_e, attained with a uniform distribution for X (i.e. half of the inputs ...
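The definition above can be sketched as a small simulation. This is a minimal illustration, not a reference implementation: the names `ERASURE`, `bec_transmit`, and `bec_capacity`, the erasure probability 0.25, and the fixed seed are all assumptions chosen for the example.

```python
import random

ERASURE = "e"  # hypothetical label for the erasure symbol


def bec_transmit(bits, p_e, rng):
    """Pass bits through a binary erasure channel with erasure probability p_e.

    Each bit is independently replaced by ERASURE with probability p_e;
    otherwise it is delivered unchanged (the BEC never flips a bit).
    """
    return [ERASURE if rng.random() < p_e else b for b in bits]


def bec_capacity(p_e):
    """Capacity of the BEC in bits per channel use: 1 - P_e."""
    return 1.0 - p_e


rng = random.Random(0)  # fixed seed so the run is repeatable
sent = [rng.randint(0, 1) for _ in range(100_000)]
received = bec_transmit(sent, 0.25, rng)

# Fraction of symbols that were erased; should be close to P_e.
erased_fraction = received.count(ERASURE) / len(received)
# Every symbol that was not erased equals the transmitted bit.
all_correct = all(r == s for r, s in zip(received, sent) if r != ERASURE)

print(erased_fraction, all_correct, bec_capacity(0.25))
```

The output keeps the same length as the input, so the receiver always knows which positions were lost; only the values at those positions are unknown.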

Coding Theory

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission, and data storage. Codes are studied by various scientific disciplines (such as information theory, electrical engineering, mathematics, linguistics, and computer science) for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data. There are four types of coding:

1. Data compression (or source coding)
2. Error control (or channel coding)
3. Cryptographic coding
4. Line coding

Data compression attempts to remove unwanted redundancy from the data from a source in order to transmit it more efficiently. For example, DEFLATE data compression makes files small ...

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
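The coin and die comparison can be checked numerically. The sketch below assumes the standard Shannon entropy formula H = -sum(p * log2(p)), measured in bits; the function name `entropy_bits` is made up for this example.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution given as probabilities."""
    # Zero-probability outcomes contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probs if p > 0)


coin = [0.5, 0.5]   # fair coin: two equally likely outcomes
die = [1 / 6] * 6   # fair die: six equally likely outcomes

coin_entropy = entropy_bits(coin)
die_entropy = entropy_bits(die)

print(f"coin: {coin_entropy:.3f} bits, die: {die_entropy:.3f} bits")
```

For a uniform distribution over n outcomes the entropy is log2(n), so the coin gives 1 bit and the die gives log2(6), roughly 2.585 bits.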

Communications Channel

A communication channel refers either to a physical transmission medium such as a wire, or to a logical connection over a multiplexed medium such as a radio channel in telecommunications and computer networking. A channel is used for information transfer of, for example, a digital bit stream, from one or several senders to one or several receivers. A channel has a certain capacity for transmitting information, often measured by its bandwidth in Hz or its data rate in bits per second. Communicating an information signal across distance requires some form of pathway or medium. These pathways, called communication channels, use two types of media: transmission line-based telecommunications cable (e.g. twisted-pair, coaxial, and fiber-optic cable) and broadcast (e.g. microwave, satellite, radio, and infrared). In information theory, a channel refers to a theoretical channel model with certain error characteristics. In this more general view, a storage de ...

Random Variable

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. The term 'random variable' in its mathematical definition refers to neither randomness nor variability but instead is a mathematical function in which

* the domain is the set of possible outcomes in a sample space (e.g. the set \{H, T\}, which are the possible upper sides of a flipped coin, heads H or tails T, as the result of tossing a coin); and
* the range is a measurable space (e.g. corresponding to the domain above, the range might be the set \{-1, 1\} if, say, heads H is mapped to -1 and tails T is mapped to 1).

Typically, the range of a random variable is a subset of the real numbers. Informally, randomness typically represents some fundamental element of chance, such as in the roll of a d ...

Conditional Probability

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption, assertion or evidence) is already known to have occurred. This particular method relies on event A occurring with some sort of relationship with another event B. In this situation, the event A can be analyzed by a conditional probability with respect to B. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as P(A \mid B) or occasionally P_B(A). This can also be understood as the fraction of the probability of B that intersects with A, or the ratio of the probability of both events happening to the probability of the "given" one happening (how many times A occurs rather than not, assuming B has occurred): P(A \mid B) = \frac{P(A \cap B)}{P(B)}. For example, the probability that any given person has a cough on any given day ma ...
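The ratio P(A and B) / P(B) can be worked through on a small sample space. The example below is an assumption made for illustration and does not come from the text: a fair six-sided die, with A = "the roll is even" and B = "the roll is greater than 3". Exact fractions are used to avoid rounding.

```python
from fractions import Fraction

outcomes = range(1, 7)  # faces of a fair six-sided die, all equally likely


def prob(event):
    """Probability of an event, given as a predicate over outcomes."""
    favourable = sum(1 for o in outcomes if event(o))
    return Fraction(favourable, len(outcomes))


def conditional(a, b):
    """P(A | B) = P(A and B) / P(B); requires P(B) > 0."""
    return prob(lambda o: a(o) and b(o)) / prob(b)


def is_even(o):
    return o % 2 == 0


def greater_than_three(o):
    return o > 3


p_a = prob(is_even)                                    # 3 of 6 faces
p_a_given_b = conditional(is_even, greater_than_three)  # {4, 6} out of {4, 5, 6}

print(p_a, p_a_given_b)
```

Knowing that B occurred raises the probability of A from 1/2 to 2/3, because two of the three outcomes in B are even.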

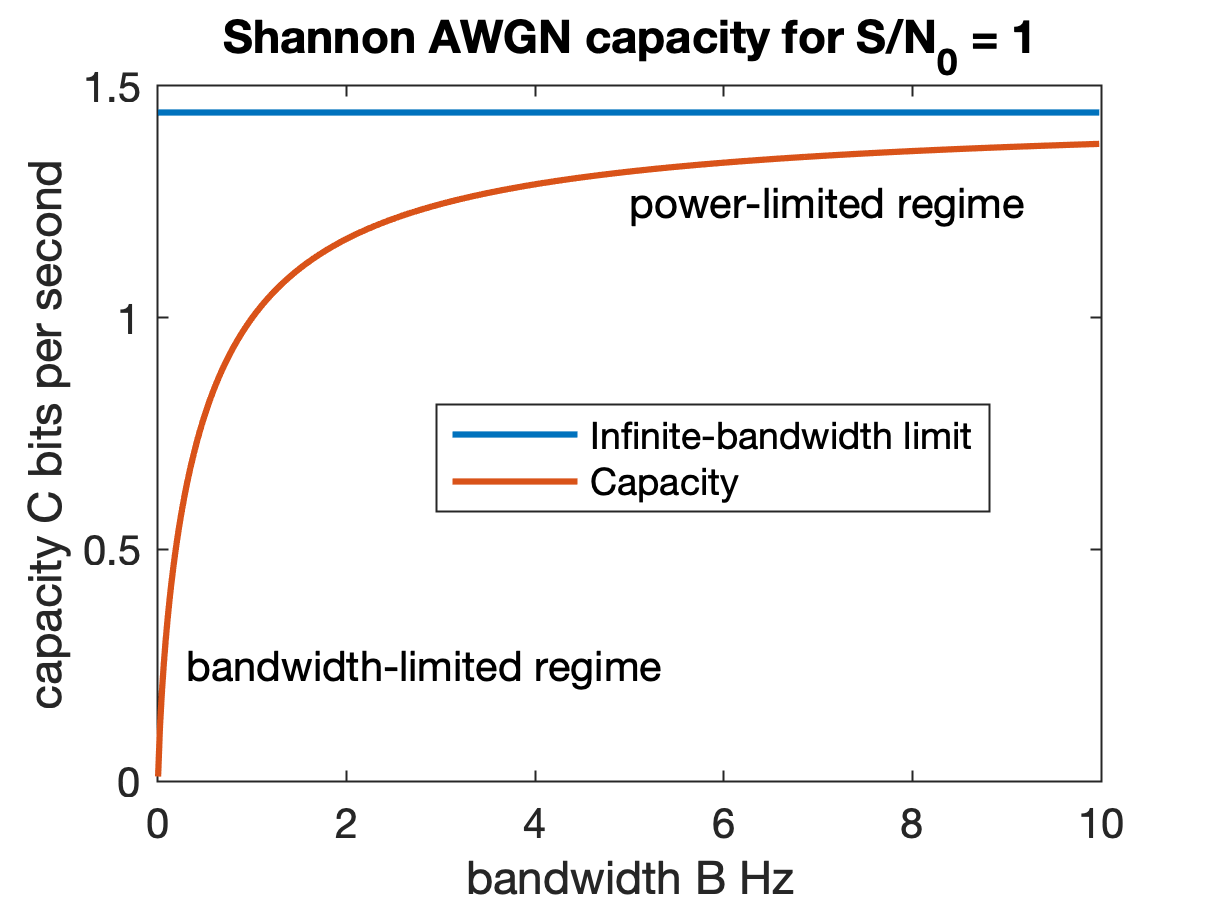

Channel Capacity

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel. Following the terms of the noisy-channel coding theorem, the channel capacity of a given channel is the highest information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability. Information theory, developed by Claude E. Shannon in 1948, defines the notion of channel capacity and provides a mathematical model by which it may be computed. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution. The notion of channel capacity has been central to the development of modern wireline and wireless communication system ...
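The statement that capacity is the maximum mutual information over input distributions can be illustrated numerically for a binary-input channel. The sketch below is a brute-force grid search, not an efficient or general method; the function names, the grid resolution, and the example channel (a binary erasure channel with erasure probability 0.25) are assumptions made for the example.

```python
import math


def mutual_information(p_x, channel):
    """I(X;Y) in bits, where channel[x][y] = P(Y = y | X = x)."""
    n_out = len(channel[0])
    # Output distribution: P(Y = y) = sum over x of P(X = x) * P(Y = y | X = x).
    p_y = [sum(p_x[x] * channel[x][y] for x in range(len(p_x)))
           for y in range(n_out)]
    total = 0.0
    for x, px in enumerate(p_x):
        for y in range(n_out):
            joint = px * channel[x][y]
            if joint > 0:
                # joint / (P(x) * P(y)) simplifies to P(y | x) / P(y).
                total += joint * math.log2(channel[x][y] / p_y[y])
    return total


def capacity_grid_search(channel, steps=1000):
    """Maximise I(X;Y) over P(X = 0) = q on a uniform grid; returns (I, q)."""
    return max(
        (mutual_information([q, 1 - q], channel), q)
        for q in (i / steps for i in range(steps + 1))
    )


# Binary erasure channel with erasure probability 0.25.
# Output columns are: 0, 1, erasure.
bec = [
    [0.75, 0.0, 0.25],
    [0.0, 0.75, 0.25],
]

capacity, best_q = capacity_grid_search(bec)
print(capacity, best_q)
```

For this channel the search peaks at the uniform input distribution, and the resulting value agrees with the closed form 1 - P_e.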

Binary Entropy Function

Binary may refer to:

Science and technology

Mathematics
* Binary number, a representation of numbers using only two values (0 and 1) for each digit
* Binary function, a function that takes two arguments
* Binary operation, a mathematical operation that takes two arguments
* Binary relation, a relation involving two elements
* Finger binary, a system for counting in binary numbers on the fingers of human hands

Computing
* Binary code, the representation of text and data using only the digits 1 and 0
* Bit, or binary digit, the basic unit of information in computers
* Binary file, composed of something other than human-readable text
** Executable, a type of binary file that contains machine code for the computer to execute
* Binary tree, a computer tree data structure in which each node has at most two children
* Binary-coded decimal, a method for encoding decimal digits in binary sequences

Astronomy
* Binary star, a star system with two stars in it
* Binary planet, t ...

Noisy-channel Coding Theorem

In information theory, the noisy-channel coding theorem (sometimes Shannon's theorem or Shannon's limit) establishes that for any given degree of noise contamination of a communication channel, it is possible (in theory) to communicate discrete data (digital information) nearly error-free up to a computable maximum rate through the channel. This result was presented by Claude Shannon in 1948 and was based in part on earlier work and ideas of Harry Nyquist and Ralph Hartley. The Shannon limit or Shannon capacity of a communication channel refers to the maximum rate of error-free data that can theoretically be transferred over the channel if the link is subject to random data transmission errors, for a particular noise level. It was first described by Shannon (1948), and shortly after published in a book by Shannon and Warren Weaver entitled The Mathematical Theory of Communication (1949). This founded the modern discipline of information theory.

Overview: Stated by C ...

Binary Symmetric Channel

A binary symmetric channel (or BSC_p) is a common communications channel model used in coding theory and information theory. In this model, a transmitter wishes to send a bit (a zero or a one), and the receiver will receive a bit. The bit will be "flipped" with a "crossover probability" of p, and otherwise is received correctly. This model can be applied to varied communication channels such as telephone lines or disk drive storage. The noisy-channel coding theorem applies to BSC_p, saying that information can be transmitted at any rate up to the channel capacity with arbitrarily low error. The channel capacity is 1 - \operatorname{H}_\text{b}(p) bits, where \operatorname{H}_\text{b} is the binary entropy function. Codes including Forney's code have been designed to transmit information efficiently across the channel.

Definition: A binary symmetric channel with crossover probability p, denoted by BSC_p, is a channel with binary input and binary output and probability of error p. That ...
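The capacity expression 1 - H_b(p) is easy to evaluate directly. The sketch below assumes the standard definition of the binary entropy function, H_b(p) = -p log2(p) - (1 - p) log2(1 - p), with H_b(0) = H_b(1) = 0; the function names are made up for this example.

```python
import math


def binary_entropy(p):
    """Binary entropy function H_b(p) in bits."""
    if p in (0.0, 1.0):
        return 0.0  # limit value; avoids evaluating log2(0)
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """Capacity of a binary symmetric channel with crossover probability p."""
    return 1.0 - binary_entropy(p)


for p in (0.0, 0.1, 0.5, 0.9):
    print(f"p = {p}: capacity = {bsc_capacity(p):.4f} bits")
```

The capacity is symmetric about p = 0.5, where it drops to zero: a channel that flips bits half the time conveys nothing, while one that flips almost every bit is nearly as useful as one that flips almost none, since the receiver can invert the output.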

Deletion Channel

A deletion channel is a communications channel model used in coding theory and information theory. In this model, a transmitter sends a bit (a zero or a one), and the receiver either receives the bit (with probability 1-p) or does not receive anything, without being notified that the bit was dropped (with probability p). Determining the capacity of the deletion channel is an open problem. The deletion channel should not be confused with the binary erasure channel, which is much simpler to analyze.

Formal description: Let p be the deletion probability, 0 < p < 1. The i.i.d. binary deletion channel is defined as follows: given an input sequence of bits, each bit in the sequence can be deleted with probability p. The deletion positions are unknown to the sender and the receiv ...
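The difference from an erasure channel shows up clearly in a short simulation. This is a minimal sketch that takes p as the deletion probability, as in the formal description; the function name `deletion_channel`, the value p = 0.3, and the fixed seed are assumptions chosen for the example.

```python
import random


def deletion_channel(bits, p_delete, rng):
    """Drop each bit independently with probability p_delete.

    Surviving bits keep their order, but nothing marks where a deletion
    happened, so the output is simply a shorter sequence.
    """
    return [b for b in bits if rng.random() >= p_delete]


rng = random.Random(1)  # fixed seed so the run is repeatable
sent = [rng.randint(0, 1) for _ in range(100_000)]
received = deletion_channel(sent, 0.3, rng)

# Fraction of bits that survived; should be close to 1 - p.
survival_fraction = len(received) / len(sent)
print(len(sent), len(received), survival_fraction)
```

Because the output has no placeholders, the receiver cannot align received bits with transmitted positions, which is part of what makes this channel harder to analyze than the binary erasure channel.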

Peter Elias

Peter Elias (November 23, 1923 – December 7, 2001) was a pioneer in the field of information theory. Born in New Brunswick, New Jersey, he was a member of the Massachusetts Institute of Technology faculty from 1953 to 1991. In 1955, Elias introduced convolutional codes as an alternative to block codes. He also established the binary erasure channel and proposed list decoding of error-correcting codes as an alternative to unique decoding.

Career: Peter Elias was a member of the Massachusetts Institute of Technology faculty from 1953 to 1991. His students included Elwyn Berlekamp, and he was a colleague of Claude Shannon. From 1957 until 1966, he served as one of three founding editors of Information and Control.

Awards: Elias received the Claude E. Shannon Award of the IEEE Information Theory Society (1977); the Golden Jubilee Award for Technological Innovation of the IEEE Information Theory Society (1998); and the IEEE Richard W. Hamming Medal (2002).

Family background ...