Autoregressive

In statistics, econometrics, and signal processing, an autoregressive (AR) model is a representation of a type of random process; as such, it can be used to describe certain time-varying processes in nature, economics, behavior, etc. The autoregressive model specifies that the output variable depends linearly on its own previous values and on a stochastic term (an imperfectly predictable term); thus the model is in the form of a stochastic difference equation (or recurrence relation) which should not be confused with a differential equation. Together with the moving-average (MA) model, it is a special case and key component of the more general autoregressive–moving-average (ARMA) and autoregressive integrated moving average (ARIMA) models of time series, which have a more complicated stochastic structure; it is also a special case of the vector autoregressive model (VAR), which consists of a system of more than one interlocking stochastic difference equation in more than one ev ...
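
A minimal sketch of the AR(p) recurrence described above, assuming Gaussian white noise for the stochastic term and zero pre-sample values. `simulate_ar` is a hypothetical helper written for illustration, not part of any library.

```python
import random


def simulate_ar(phi, noise):
    """Return X_0, ..., X_{n-1} for X_t = sum_{i=1}^{p} phi[i-1] * X_{t-i} + noise[t].

    Pre-sample values (X_{-1}, X_{-2}, ...) are taken to be zero.
    """
    x = []
    for t, eps in enumerate(noise):
        # Linear combination of the p most recent outputs; lags before t = 0 contribute 0.
        ar_part = sum(c * x[t - i] for i, c in enumerate(phi, start=1) if t - i >= 0)
        x.append(ar_part + eps)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    shocks = [rng.gauss(0.0, 1.0) for _ in range(200)]
    path = simulate_ar([0.6, -0.2], shocks)  # one AR(2) sample path
    print([round(v, 3) for v in path[:5]])
```

Passing the noise sequence in explicitly (rather than drawing it inside the function) keeps the recurrence deterministic, which makes it easy to trace by hand.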

Autoregressive Integrated Moving Average

In time series analysis used in statistics and econometrics, autoregressive integrated moving average (ARIMA) and seasonal ARIMA (SARIMA) models are generalizations of the autoregressive moving average (ARMA) model to non-stationary series and periodic variation, respectively. All these models are fitted to time series in order to better understand them and predict future values. The purpose of these generalizations is to fit the data as well as possible. Specifically, ARMA assumes that the series is stationary, which implies, among other things, that its expected value is constant in time. If instead the series has a trend (but a constant variance/autocovariance), the trend is removed by "differencing", leaving a stationary series. This operation generalizes ARMA and corresponds to the "integrated" part of ARIMA. Analogously, periodic variation is removed by "seasonal differencing".

Components. As in ARMA, the "autoregressive" (AR) part of ARIMA indicates that the evolving variable of interest is regressed on ...
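
The two differencing operations mentioned above can be sketched as follows (hypothetical helper names, plain Python lists assumed). In the usual ARIMA(p, d, q) notation, d is the number of times the ordinary difference is applied; each pass shortens the series by one observation, and a seasonal difference with period s shortens it by s.

```python
def difference(x, d=1):
    """Apply the differencing operator (1 - L) d times: y_t = x_t - x_{t-1}."""
    for _ in range(d):
        x = [x[t] - x[t - 1] for t in range(1, len(x))]
    return x


def seasonal_difference(x, s):
    """Apply the seasonal difference (1 - L^s): y_t = x_t - x_{t-s}."""
    return [x[t] - x[t - s] for t in range(s, len(x))]


if __name__ == "__main__":
    linear_trend = [2 * t + 1 for t in range(6)]   # 1, 3, 5, 7, 9, 11
    print(difference(linear_trend))                # a constant series of 2s
    quarterly = [1, 2, 3, 4, 11, 12, 13, 14]       # period-4 pattern shifted up by 10
    print(seasonal_difference(quarterly, 4))       # [10, 10, 10, 10]
```

A linear trend becomes constant after one difference and a quadratic trend after two, which is why a small d usually suffices in practice.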

Autoregressive–moving-average Model

In the statistical analysis of time series, autoregressive–moving-average (ARMA) models are a way to describe a (weakly) stationary stochastic process using autoregression (AR) and a moving average (MA), each with a polynomial. They are a tool for understanding a series and predicting future values. AR involves regressing the variable on its own lagged (i.e., past) values. MA involves modeling the error as a linear combination of error terms occurring contemporaneously and at various times in the past. The model is usually denoted ARMA(p, q), where p is the order of AR and q is the order of MA. The general ARMA model was described in the 1951 thesis of Peter Whittle, "Hypothesis testing in time series analysis", and it was popularized in the 1970 book by George E. P. Box and Gwilym Jenkins. ARMA models can be estimated by using the Box–Jenkins method.

Mathematical formulation. Autoregressive model. The notation AR(p) refers to the autoregressi ...
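
A minimal sketch of an ARMA(p, q) recurrence, assuming the sign convention X_t = ε_t + Σ φ_i X_{t−i} + Σ θ_j ε_{t−j} (some texts and software packages write the MA terms with minus signs instead) and zero pre-sample values. `simulate_arma` is a hypothetical helper.

```python
import random


def simulate_arma(phi, theta, noise):
    """X_t = eps_t + sum_i phi[i-1] * X_{t-i} + sum_j theta[j-1] * eps_{t-j}.

    Values and shocks before t = 0 are taken to be zero.
    """
    x = []
    for t, eps in enumerate(noise):
        # AR part: regression on the series' own past values.
        ar_part = sum(c * x[t - i] for i, c in enumerate(phi, start=1) if t - i >= 0)
        # MA part: linear combination of past error terms.
        ma_part = sum(c * noise[t - j] for j, c in enumerate(theta, start=1) if t - j >= 0)
        x.append(eps + ar_part + ma_part)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    shocks = [rng.gauss(0.0, 1.0) for _ in range(100)]
    print([round(v, 3) for v in simulate_arma([0.5], [0.4], shocks)[:5]])  # ARMA(1, 1)
```

With an empty `theta` this reduces to a pure AR(p) recurrence, and with an empty `phi` to a zero-mean MA(q).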

Moving-average Model

In time series analysis, the moving-average model (MA model), also known as moving-average process, is a common approach for modeling univariate time series. The moving-average model specifies that the output variable depends linearly on the current and various past values of a stochastic (imperfectly predictable) term. Together with the autoregressive (AR) model, the moving-average model is a special case and key component of the more general ARMA and ARIMA models of time series, which have a more complicated stochastic structure. Contrary to the AR model, the finite MA model is always stationary. The moving-average model should not be confused with the moving average, a distinct concept despite some similarities.

Definition. The notation MA(q) refers to the moving-average model of order q:

$$X_t = \mu + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \cdots + \theta_q \varepsilon_{t-q} = \mu + \sum_{i=1}^{q} \theta_i \varepsilon_{t-i} + \varepsilon_t,$$

where $\mu$ is the mean of the series, the $\theta_1, \ldots, \theta_q$ are the co ...
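
The MA(q) formula can be evaluated directly; the sketch below assumes pre-sample shocks are zero, and `simulate_ma` is a hypothetical helper. Because the output is a finite weighted sum of zero-mean shocks plus μ, the sample mean of a long path should sit near μ.

```python
import random


def simulate_ma(mu, theta, noise):
    """Return X_t = mu + eps_t + sum_{i=1}^{q} theta[i-1] * eps_{t-i} for each t.

    Shocks before the start of the sample (eps_{-1}, eps_{-2}, ...) are taken to be zero.
    """
    return [
        mu + eps + sum(c * noise[t - i] for i, c in enumerate(theta, start=1) if t - i >= 0)
        for t, eps in enumerate(noise)
    ]


if __name__ == "__main__":
    rng = random.Random(1)
    shocks = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
    path = simulate_ma(5.0, [0.7, 0.2], shocks)
    # Every shock has mean zero, so the sample mean should be close to mu.
    print(round(sum(path) / len(path), 2))
```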

Autocorrelation Function

Autocorrelation, sometimes known as serial correlation in the discrete time case, measures the correlation of a signal with a delayed copy of itself. Essentially, it quantifies the similarity between observations of a random variable at different points in time. The analysis of autocorrelation is a mathematical tool for identifying repeating patterns or hidden periodicities within a signal obscured by noise. Autocorrelation is widely used in signal processing, time domain and time series analysis to understand the behavior of data over time. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Various time series models incorporate autocorrelation, such as unit root processes, trend-stationary processes, autoregressive processes, and moving average processes.

Autocorrelation of stochastic processes. In statistics, the autocorrelation of a real or ...
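
Since definitions differ between fields, the sketch below uses one common time-series convention as an assumption: the sample autocovariance at lag k divided by the lag-0 value, with both sums taken around the sample mean. `sample_acf` is a hypothetical helper. The usage block shows the "hidden periodicity" idea: a sine with period 12 produces its largest positive autocorrelation at lag 12.

```python
import math


def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag of the sequence x.

    r_k = sum_{t=0}^{n-k-1} (x_t - m)(x_{t+k} - m) / sum_{t=0}^{n-1} (x_t - m)^2,
    where m is the sample mean; r_0 is therefore always 1.
    """
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("autocorrelation is undefined for a constant series")
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
        for k in range(max_lag + 1)
    ]


if __name__ == "__main__":
    wave = [math.sin(2 * math.pi * t / 12) for t in range(120)]
    acf = sample_acf(wave, 24)
    print(max(range(1, 25), key=lambda k: acf[k]))  # lag with the strongest positive correlation
```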

Stationary Process

In mathematics and statistics, a stationary process (also called a strict/strictly stationary process or strong/strongly stationary process) is a stochastic process whose statistical properties, such as mean and variance, do not change over time. More formally, the joint probability distribution of the process remains the same when shifted in time. This implies that the process is statistically consistent across different time periods. Because many statistical procedures in time series analysis assume stationarity, non-stationary data are frequently transformed to achieve stationarity before analysis. A common cause of non-stationarity is a trend in the mean, which can be due to either a unit root or a deterministic trend. In the case of a unit root, stochastic shocks have permanent effects, and the process is not mean-reverting. With a deterministic trend, the process is called trend-stationary, and shocks have only transitory effects, with the variable tending towards a determin ...
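
The contrast between permanent and transitory shocks can be illustrated with two assumed example models: a random walk with drift (a unit-root process) and a linear trend plus stationary AR(1) deviations (a trend-stationary process). All function names are hypothetical. `shock_effect` feeds the same single unit shock into each model and subtracts the shock-free path.

```python
def random_walk_with_drift(drift, shocks):
    """Unit-root process X_t = X_{t-1} + drift + eps_t, started from X_{-1} = 0."""
    path, level = [], 0.0
    for eps in shocks:
        level += drift + eps
        path.append(level)
    return path


def trend_stationary(slope, rho, shocks):
    """Y_t = slope * t + u_t, where u_t = rho * u_{t-1} + eps_t is a stationary AR(1) (|rho| < 1)."""
    path, u = [], 0.0
    for t, eps in enumerate(shocks):
        u = rho * u + eps
        path.append(slope * t + u)
    return path


def shock_effect(model, shock_at, n, **params):
    """Path with a single unit shock at time shock_at minus the path without it."""
    shocked = [1.0 if t == shock_at else 0.0 for t in range(n)]
    calm = [0.0] * n
    return [a - b for a, b in zip(model(shocks=shocked, **params), model(shocks=calm, **params))]


if __name__ == "__main__":
    print(shock_effect(random_walk_with_drift, 2, 6, drift=0.2))      # effect persists
    print(shock_effect(trend_stationary, 2, 6, slope=0.2, rho=0.5))   # effect decays toward 0
```

In the random walk the unit shock shifts every later value by 1; in the trend-stationary model its effect halves each period (with ρ = 0.5) and the series returns to its deterministic trend.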

Large Language Model

A large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pretrained transformers (GPTs), which are largely used in generative chatbots such as ChatGPT or Gemini. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on.

History. Before the emergence of transformer-based models in 2017, some language models were considered large relative to the computational and data constraints of their time. In the early 1990s, IBM's statistical models pioneered word alignment techniques for machine translation, laying the groundwork for corpus-based language modeling. A sm ...

Backshift Operator

In time series analysis, the lag operator (L) or backshift operator (B) operates on an element of a time series to produce the previous element. For example, given some time series

$$X = \{X_1, X_2, X_3, \ldots\}$$

then

$$L X_t = X_{t-1} \quad \text{for all } t > 1,$$

or similarly in terms of the backshift operator B: $B X_t = X_{t-1}$ for all $t > 1$. Equivalently, this definition can be represented as

$$X_t = L X_{t+1} \quad \text{for all } t \geq 1.$$

The lag operator (as well as the backshift operator) can be raised to arbitrary integer powers so that

$$L^{-1} X_t = X_{t+1}$$

and

$$L^k X_t = X_{t-k}.$$

Lag polynomials. Polynomials of the lag operator can be used, and this is a common notation for ARMA (autoregressive moving average) models. For example,

$$\varepsilon_t = X_t - \sum_{i=1}^{p} \varphi_i X_{t-i} = \left(1 - \sum_{i=1}^{p} \varphi_i L^i\right) X_t$$

specifies an AR(p) model. A polynomial of lag operators is called a lag polynomial so that, for example, the ARMA model can be concisely specified as

$$\varphi(L) X_t = \theta(L) \varepsilon_t$$

where $\varphi(L)$ ...
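
Lag polynomials behave like ordinary polynomials in L, since L^i L^j = L^{i+j}. The sketch below, with hypothetical helper names, represents a lag polynomial as a coefficient list [c_0, c_1, ...] for L^0, L^1, ..., multiplies two of them, and applies one to a finite series (returning only the times at which every required lag exists).

```python
def lag_poly_mul(a, b):
    """Product of two lag polynomials given as coefficient lists [c_0, c_1, ...] for L^0, L^1, ..."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj  # L^i * L^j = L^(i+j)
    return out


def apply_lag_poly(coeffs, x):
    """Compute (sum_k coeffs[k] L^k) X_t = sum_k coeffs[k] X_{t-k}.

    Only times t with all required lags available are returned (t = p, ..., n-1,
    where p is the polynomial's degree).
    """
    p = len(coeffs) - 1
    return [sum(c * x[t - k] for k, c in enumerate(coeffs)) for t in range(p, len(x))]


if __name__ == "__main__":
    # (1 - L)^2 as a single lag polynomial, applied to a quadratic sequence.
    second_diff = lag_poly_mul([1.0, -1.0], [1.0, -1.0])
    print(second_diff, apply_lag_poly(second_diff, [0, 1, 4, 9, 16]))
```

Applying 1 − φL to a series generated by an AR(1) recurrence recovers its innovations ε_t, which is exactly the identity in the AR(p) example above with p = 1.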

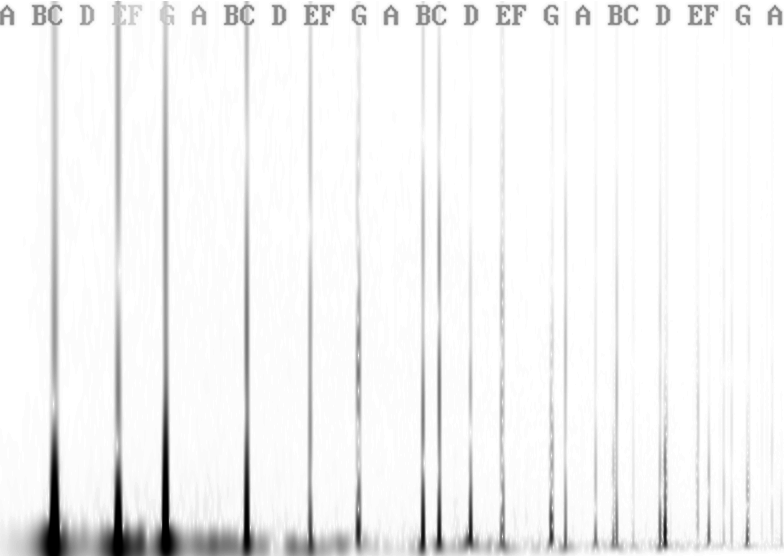

Spectral Density

In signal processing, the power spectrum $S_{xx}(f)$ of a continuous-time signal $x(t)$ describes the distribution of power into frequency components $f$ composing that signal. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of any sort of signal (including noise), analyzed in terms of its frequency content, is called its spectrum. When the energy of the signal is concentrated around a finite time interval, especially if its total energy is finite, one may compute the energy spectral density. More commonly used is the power spectral density (PSD, or simply power spectrum), which applies to signals existing over all time, or over a time period large enough (especially in relation to the duration of a measurement) that it could as well have been over an infinite time interval. The PSD then refers to the spectral energy distribution that would be ...
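
For a finite sampled signal, a simple (and statistically noisy) estimate of the PSD is the periodogram. The sketch below assumes the scaling |X_k|² / N with a naive O(N²) DFT and hypothetical helper names; other normalizations (dividing by the sampling rate, or doubling bins to form a one-sided PSD) are also common, and real code would use an FFT.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) DFT: X_k = sum_n x_n * exp(-2*pi*i*k*n / N)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def periodogram(x, fs=1.0):
    """Raw periodogram |X_k|^2 / N at the non-negative frequencies k * fs / N, k = 0, ..., N // 2."""
    n = len(x)
    spectrum = dft(x)
    half = n // 2 + 1
    freqs = [k * fs / n for k in range(half)]
    power = [abs(spectrum[k]) ** 2 / n for k in range(half)]
    return freqs, power


if __name__ == "__main__":
    n = 32
    tone = [math.cos(2 * math.pi * 4 * t / n) for t in range(n)]  # 4 cycles per 32 samples
    freqs, power = periodogram(tone)
    peak = max(range(len(power)), key=power.__getitem__)
    print(freqs[peak], round(power[peak], 6))
```

A pure tone that completes a whole number of cycles in the window puts all of its power into a single bin, which makes the output easy to check.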

Cauchy Distribution

The Cauchy distribution, named after Augustin-Louis Cauchy, is a continuous probability distribution. It is also known, especially among physicists, as the Lorentz distribution (after Hendrik Lorentz), Cauchy–Lorentz distribution, Lorentz(ian) function, or Breit–Wigner distribution. The Cauchy distribution $f(x; x_0, \gamma)$ is the distribution of the x-intercept of a ray issuing from $(x_0, \gamma)$ with a uniformly distributed angle. It is also the distribution of the ratio of two independent normally distributed random variables with mean zero. The Cauchy distribution is often used in statistics as the canonical example of a "pathological" distribution since both its expected value and its variance are undefined (but see below). The Cauchy distribution does not have finite moments of order greater than or equal to one; only fractional absolute moments exist. The Cauchy dist ...
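
The ray construction translates directly into a sampler: if the angle θ between the ray and the downward vertical is uniform on (−π/2, π/2), the ray from (x_0, γ) meets the x-axis at x_0 + γ tan θ. The sketch below uses hypothetical helper names and also gives the closed-form quantile function. Because the distribution has no finite mean, the usage block reports the sample median as the stable location estimate.

```python
import math
import random


def cauchy_from_angle(x0, gamma, rng):
    """x-intercept of a ray from (x0, gamma) whose angle to the downward vertical is uniform on (-pi/2, pi/2)."""
    theta = rng.uniform(-math.pi / 2, math.pi / 2)
    return x0 + gamma * math.tan(theta)


def cauchy_quantile(p, x0=0.0, gamma=1.0):
    """Inverse of the CDF F(x) = 1/2 + arctan((x - x0) / gamma) / pi, for 0 < p < 1."""
    return x0 + gamma * math.tan(math.pi * (p - 0.5))


if __name__ == "__main__":
    rng = random.Random(42)
    draws = sorted(cauchy_from_angle(0.0, 1.0, rng) for _ in range(20001))
    # The sample median is a stable estimate of x0; the sample mean is not,
    # because the distribution has no finite expected value.
    print("median:", round(draws[10000], 3), "mean:", round(sum(draws) / len(draws), 3))
```

The quartiles sit at x_0 ± γ, so half of all draws fall within one scale parameter of the location.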

Fourier Transform

In mathematics, the Fourier transform (FT) is an integral transform that takes a function as input and outputs another function that describes the extent to which various frequencies are present in the original function. The output of the transform is a complex-valued function of frequency. The term "Fourier transform" refers to both this complex-valued function and the mathematical operation. When a distinction needs to be made, the output of the operation is sometimes called the frequency domain representation of the original function. The Fourier transform is analogous to decomposing the sound of a musical chord into the intensities of its constituent pitches. Functions that are localized in the time domain have Fourier transforms that are spread out across the frequency domain and vice versa, a phenomenon known as the uncertainty principle. The critical case for this principle is the Gaussian function, of substantial importance in probability theory and statist ...
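
The Gaussian case can be checked numerically. The sketch assumes the ordinary-frequency convention F(ξ) = ∫ f(x) e^{−2πixξ} dx (other conventions use angular frequency and different normalizations); under it, e^{−π(x/s)²} transforms to s·e^{−π(sξ)²}, so narrowing the function (smaller s) widens its transform. `fourier_transform` and `scaled_gaussian` are hypothetical helpers that use a plain Riemann sum, which is adequate only because the integrand decays very quickly.

```python
import cmath
import math


def fourier_transform(f, xi, half_width=10.0, step=0.01):
    """Approximate F(xi) = integral of f(x) * exp(-2*pi*i*x*xi) dx by a Riemann sum.

    Assumes f decays fast enough that [-half_width, half_width] captures
    essentially all of the integral.
    """
    n = int(round(2 * half_width / step))
    total = 0j
    for k in range(n + 1):
        x = -half_width + k * step
        total += f(x) * cmath.exp(-2j * math.pi * x * xi)
    return total * step


def scaled_gaussian(s):
    """exp(-pi * (x / s)^2): larger s means wider in x."""
    return lambda x: math.exp(-math.pi * (x / s) ** 2)


if __name__ == "__main__":
    for s in (0.5, 1.0, 2.0):
        numeric = fourier_transform(scaled_gaussian(s), 0.5).real
        closed_form = s * math.exp(-math.pi * (s * 0.5) ** 2)
        print(s, round(numeric, 6), round(closed_form, 6))
```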

Gaussian Process

In probability theory and statistics, a Gaussian process is a stochastic process (a collection of random variables indexed by time or space), such that every finite collection of those random variables has a multivariate normal distribution. The distribution of a Gaussian process is the joint distribution of all those (infinitely many) random variables, and as such, it is a distribution over functions with a continuous domain, e.g. time or space. The concept of Gaussian processes is named after Carl Friedrich Gauss because it is based on the notion of the Gaussian distribution (normal distribution). Gaussian processes can be seen as an infinite-dimensional generalization of multivariate normal distributions. Gaussian processes are useful in statistical modelling, benefiting from properties inherited from the normal distribution. For example, if a random process is modelled as a Gaussian process, the distributions of various derived quantities can be obtained explicitly. Such quanti ...
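
The defining property (every finite collection is multivariate normal) gives a direct way to draw a sample path at finitely many points: build the covariance matrix K from a kernel, factor K = L Lᵀ, and return L z for standard normal z. The sketch assumes a zero mean function, a squared-exponential kernel, and a small diagonal "jitter" for numerical stability; these are illustrative choices, not part of the definition, and all names are hypothetical.

```python
import math
import random


def squared_exponential(a, b, length_scale=1.0, variance=1.0):
    """Covariance k(a, b) = variance * exp(-(a - b)^2 / (2 * length_scale^2))."""
    return variance * math.exp(-((a - b) ** 2) / (2.0 * length_scale ** 2))


def cholesky(matrix):
    """Lower-triangular L with L L^T = matrix, for a symmetric positive-definite matrix."""
    n = len(matrix)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(matrix[i][i] - s)
            else:
                low[i][j] = (matrix[i][j] - s) / low[j][j]
    return low


def sample_gp_prior(points, rng, jitter=1e-8, **kernel_args):
    """Draw f(points) ~ N(0, K), with K[i][j] = k(points[i], points[j]) plus jitter on the diagonal."""
    n = len(points)
    cov = [[squared_exponential(points[i], points[j], **kernel_args) + (jitter if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    low = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # If z ~ N(0, I), then L z ~ N(0, L L^T) = N(0, K).
    return [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(n)]


if __name__ == "__main__":
    grid = [i * 0.5 for i in range(11)]  # 11 points on [0, 5]
    f = sample_gp_prior(grid, random.Random(3), length_scale=1.0)
    print([round(v, 2) for v in f])
```

Nearby points receive strongly correlated values under this kernel, which is why the sampled values vary smoothly along the grid; `length_scale` controls how quickly that correlation decays.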