
Apriori Algorithm

Apriori is an algorithm for frequent item set mining and association rule learning over relational databases (Rakesh Agrawal and Ramakrishnan Srikant, "Fast algorithms for mining association rules", Proceedings of the 20th International Conference on Very Large Data Bases, VLDB, pages 487-499, Santiago, Chile, September 1994). It proceeds by identifying the frequent individual items in the database and extending them to larger and larger item sets as long as those item sets appear sufficiently often in the database. The frequent item sets determined by Apriori can be used to derive association rules that highlight general trends in the database; this has applications in domains such as market basket analysis.

Overview

The Apriori algorithm was proposed by Agrawal and Srikant in 1994. Apriori is designed to operate on databases containing transactions (for example, collections of items bought by customers, or details of visits to a website or IP addresses). Other algorithms are de ...
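The level-wise idea described above (start from frequent single items, extend to larger item sets, keep only those that appear often enough) can be sketched roughly as follows. This is a minimal illustration, not the implementation from the original paper: the function name `apriori`, the use of an absolute count for `min_support`, and the sample baskets are all assumptions made for this example.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(itemset): count} for item sets seen in at least
    min_support transactions (an absolute count, assumed for this sketch)."""
    transactions = [frozenset(t) for t in transactions]

    # Pass 1: count individual items and keep the frequent ones.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_support}
    frequent = dict(current)

    k = 2
    while current:
        # Join: merge pairs of frequent (k-1)-item sets into k-item candidates.
        candidates = set()
        for a, b in combinations(list(current), 2):
            union = a | b
            if len(union) != k:
                continue
            # Prune: every (k-1)-subset of a candidate must itself be frequent.
            if all(frozenset(sub) in current
                   for sub in combinations(union, k - 1)):
                candidates.add(union)
        # Count: scan the transactions once per level.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        current = {s: c for s, c in counts.items() if c >= min_support}
        frequent.update(current)
        k += 1
    return frequent

# Hypothetical market baskets, for illustration only.
baskets = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]
result = apriori(baskets, min_support=3)
```

The prune step relies on the fact that an item set cannot be frequent unless all of its subsets are, which is what lets each level discard most candidates before counting.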

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, Information Retrieval: Algorithms and Heuristics, 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ...

Hash Tree (persistent Data Structure)

In computer science, a hash tree (or hash trie) is a persistent data structure that can be used to implement sets and maps, intended to replace hash tables in purely functional programming. In its basic form, a hash tree stores the hashes of its keys, regarded as strings of bits, in a trie, with the actual keys and (optional) values stored at the trie's "final" nodes. Hash array mapped tries and Ctries are refined versions of this data structure, using particular types of trie implementations.
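As a rough illustration of the basic form described above, the following sketch stores keys in a trie indexed by successive bits of their hash, and every insert returns a new trie while leaving the old one intact. The node layout (tagged tuples), the 32-bit hash mask, and the choice of 2 hash bits per level are assumptions made for this example; real hash array mapped tries use more compact nodes.

```python
BITS = 2                 # hash bits consumed per trie level (assumed)
FANOUT = 1 << BITS       # children per branch node

def _h(key):
    # Treat the low 32 bits of Python's hash as the key's bit string.
    return hash(key) & 0xFFFFFFFF

def insert(node, key, depth=0):
    """Return a trie that contains key; the input trie is never mutated."""
    if node is None:
        return ("leaf", (key,))
    if node[0] == "leaf":
        keys = node[1]
        if key in keys:
            return node
        if all(_h(k) == _h(key) for k in keys):
            # Full hash collision: keep colliding keys together in one leaf.
            return ("leaf", keys + (key,))
        # Otherwise split the leaf into a branch and re-insert everything.
        branch = ("branch", (None,) * FANOUT)
        for k in keys:
            branch = insert(branch, k, depth)
        return insert(branch, key, depth)
    # Branch node: pick the child from the hash bits for this depth.
    idx = (_h(key) >> (depth * BITS)) & (FANOUT - 1)
    children = node[1]
    new_child = insert(children[idx], key, depth + 1)
    if new_child is children[idx]:
        return node  # key already present, share the whole subtree
    # Path copying: only the nodes on the route to the key are rebuilt.
    return ("branch", children[:idx] + (new_child,) + children[idx + 1:])

def contains(node, key, depth=0):
    """Follow the key's hash bits down to a leaf and look for the key there."""
    while node is not None and node[0] == "branch":
        idx = (_h(key) >> (depth * BITS)) & (FANOUT - 1)
        node = node[1][idx]
        depth += 1
    return node is not None and key in node[1]

v1 = insert(None, "a")
v2 = insert(v1, "b")     # v1 is still a valid set containing only "a"
```

Because old versions stay valid after an update, a structure like this fits the purely functional setting the text mentions.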

R (programming Language)

R is a programming language for statistical computing and data visualization. It has been widely adopted in the fields of data mining, bioinformatics, data analysis, and data science. The core R language is extended by a large number of software packages, which contain reusable code, documentation, and sample data. Some of the most popular R packages are in the tidyverse collection, which enhances functionality for visualizing, transforming, and modelling data, and improves the ease of programming (according to the authors and users). R is free and open-source software distributed under the GNU General Public License. The language is implemented primarily in C, Fortran, and R itself. Precompiled executables are available for the major operating systems (including Linux, MacOS, and Microsoft Windows). Its core is an interpreted language with a na ...

MIT License

The MIT License is a permissive software license originating at the Massachusetts Institute of Technology (MIT) in the late 1980s. As a permissive license, it puts very few restrictions on reuse and therefore has high license compatibility. Unlike copyleft software licenses, the MIT License also permits reuse within proprietary software, provided that all copies of the software or its substantial portions include a copy of the terms of the MIT License and also a copyright notice. In 2015, the MIT License was the most popular software license on GitHub, and was still the most popular in 2025. Notable projects that use the MIT License include the X Window System, Ruby on Rails, Node.js, Lua, jQuery, .NET, Angular, and React.

License terms

The MIT License has the identifier MIT in the SPDX License List. It is also known as the "Expat License". It has the following terms: Co ...

Frequent Pattern Mining

Frequent pattern discovery (or FP discovery, FP mining, or Frequent itemset mining) is part of knowledge discovery in databases, Massive Online Analysis, and data mining; it describes the task of finding the most frequent and relevant patterns in large datasets. The concept was first introduced for mining transaction databases. Frequent patterns are defined as subsets (itemsets, subsequences, or substructures) that appear in a data set with frequency no less than a user-specified or auto-determined threshold.

Techniques

Techniques for FP mining include:

* market basket analysis
* cross-marketing
* catalog design
* clustering
* classification
* recommendation systems

For the most part, FP discovery can be done using association rule learning with particular algorithms: Eclat, FP-growth and the Apriori algorithm. Other strategies include:

* Frequent subtree mining
* Structure mining
* Sequential pattern mining

and respective specific techniques. Implementations exist for var ...

Stock-keeping Unit

In inventory management, a stock keeping unit (abbreviated as SKU) is the unit of measure in which the stocks of a material are managed. It is a distinct type of item for sale, purchase, or tracking in inventory, such as a product or service, and all attributes associated with the item type that distinguish it from other item types (for a product, these attributes can include manufacturer, description, material, size, color, packaging, and warranty terms). When a business records the inventory of its stock, it counts the quantity it has of each unit, or SKU. SKU can also refer to a unique identifier or code, sometimes represented via a barcode for scanning and tracking, which refers to the particular stock keeping unit. These identifiers are not regulated or standardized. When a company receives items from a vendor, it has a choice of maintaining the vendor's SKU or creating its own. This makes them distinct from Global Trade Item Number (GTIN), which are stand ...

Multiset

In mathematics, a multiset (or bag, or mset) is a modification of the concept of a set that, unlike a set, allows for multiple instances for each of its elements. The number of instances given for each element is called the multiplicity of that element in the multiset. As a consequence, an infinite number of multisets exist that contain only elements a and b, but vary in the multiplicities of their elements:

* The set {a, b} contains only elements a and b, each having multiplicity 1 when {a, b} is seen as a multiset.
* In the multiset {a, a, b}, the element a has multiplicity 2, and b has multiplicity 1.
* In the multiset {a, a, a, b, b, b}, a and b both have multiplicity 3.

These objects are all different when viewed as multisets, although they are the same set, since they all consist of the same elements. As with sets, and in contrast to tuples, the order in which elements are listed does not matter in discriminating multisets, so {a, a, b} and {a, b, a} denote the same multiset. To distinguish between sets and multisets, a notat ...
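The distinction can be made concrete with Python's `collections.Counter`, which maps each element to its multiplicity. The element names `a` and `b` and the variable names below are illustrative choices for this sketch.

```python
from collections import Counter

# Each Counter records how many times an element occurs (its multiplicity).
ab = Counter("ab")            # a: 1, b: 1
aab = Counter("aab")          # a: 2, b: 1
aba = Counter("aba")          # same elements listed in a different order
aaabbb = Counter("aaabbb")    # a: 3, b: 3

# Order of listing does not matter for multisets.
same_multiset = (aab == aba)

# Different multiplicities give different multisets...
different_multisets = (ab != aab) and (aab != aaabbb)

# ...even though the underlying sets of elements are identical.
same_underlying_set = set(ab) == set(aab) == set(aaabbb)
```

Comparing the counters tests multiset equality, while comparing `set(...)` of each one ignores multiplicities and compares only which elements are present.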

Breadth-first Search

Breadth-first search (BFS) is an algorithm for searching a tree data structure for a node that satisfies a given property. It starts at the tree root and explores all nodes at the present depth prior to moving on to the nodes at the next depth level. Extra memory, usually a queue, is needed to keep track of the child nodes that were encountered but not yet explored. For example, in a chess endgame, a chess engine may build the game tree from the current position by applying all possible moves and use breadth-first search to find a win position for White. Implicit trees (such as game trees or other problem-solving trees) may be of infinite size; breadth-first search is guaranteed to find a solution node if one exists. In contrast, (plain) depth-first search (DFS), which explores the node branch as far as possible before backtracking and expanding other nodes, may get lost in an infinite branch and never make it to the solution node. Iterative deepening depth-first search ...
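A minimal sketch of the queue-based traversal described above, assuming the tree is given implicitly by a `children` function and the target property by an `is_goal` predicate (both names are hypothetical, chosen for this example):

```python
from collections import deque

def bfs(root, children, is_goal):
    """Return the first node, in level order, for which is_goal is true.

    children(node) yields the child nodes of node; the tree may be implicit.
    Returns None if a finite tree is exhausted without finding a goal.
    """
    queue = deque([root])          # nodes encountered but not yet explored
    while queue:
        node = queue.popleft()     # oldest entry first gives level order
        if is_goal(node):
            return node
        queue.extend(children(node))
    return None

# Implicit infinite binary tree: node n has children 2n and 2n + 1.
found = bfs(1, lambda n: (2 * n, 2 * n + 1), lambda n: n == 11)
```

On this infinite tree a plain depth-first search that always descends into the first child would never return, whereas the queue guarantees that every node at depth d is examined before any node at depth d + 1.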

Association Rule Learning

Association rule learning is a rule-based machine learning method for discovering interesting relations between variables in large databases. It is intended to identify strong rules discovered in databases using some measures of interestingness (Piatetsky-Shapiro, Gregory (1991), Discovery, analysis, and presentation of strong rules, in Piatetsky-Shapiro, Gregory; and Frawley, William J.; eds., Knowledge Discovery in Databases, AAAI/MIT Press, Cambridge, MA). In any given transaction with a variety of items, association rules are meant to discover the rules that determine how or why certain items are connected. Based on the concept of strong rules, Rakesh Agrawal, Tomasz Imieliński and Arun Swami introduced association rules for discovering regularities between products in large-scale transaction data recorded by point-of-sale (POS) systems in supermarkets. For example, the rule {onions, potatoes} ⇒ {burger} found in the sales data of a supermarket would indicate that if a customer bu ...
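To make "measures of interestingness" concrete, the sketch below computes two commonly used ones, support and confidence, for a single rule. The excerpt above does not name specific measures, so this choice is an assumption, as are the function names and the made-up baskets.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset."""
    itemset = frozenset(itemset)
    hits = sum(1 for t in transactions if itemset <= frozenset(t))
    return hits / len(transactions)

def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent) over the transactions.

    Raises ZeroDivisionError if the antecedent never occurs.
    """
    both = support(transactions, set(antecedent) | set(consequent))
    return both / support(transactions, antecedent)

# Hypothetical point-of-sale baskets, for illustration only.
sales = [
    {"onions", "potatoes", "burger"},
    {"onions", "potatoes", "burger"},
    {"onions", "potatoes"},
    {"milk"},
    {"burger", "milk"},
]

rule_support = support(sales, {"onions", "potatoes", "burger"})      # 2 of 5
rule_confidence = confidence(sales, {"onions", "potatoes"}, {"burger"})  # 2 of 3
```

A rule is typically reported as "strong" only when both values exceed user-chosen thresholds; the thresholds themselves are application-specific.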

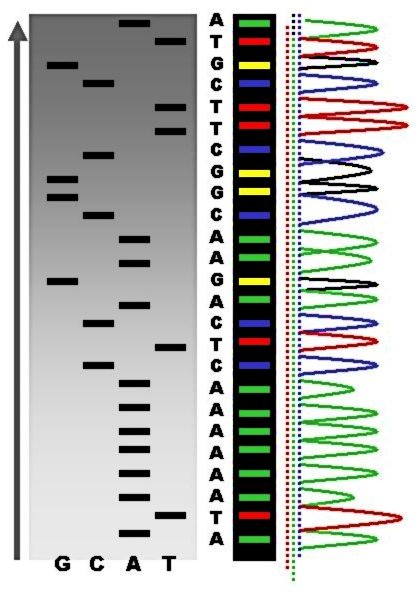

DNA Sequencing

DNA sequencing is the process of determining the nucleic acid sequence – the order of nucleotides in DNA. It includes any method or technology that is used to determine the order of the four bases: adenine, thymine, cytosine, and guanine. The advent of rapid DNA sequencing methods has greatly accelerated biological and medical research and discovery. Knowledge of DNA sequences has become indispensable for basic biological research, DNA Genographic Projects and in numerous applied fields such as medical diagnosis, biotechnology, forensic biology, virology and biological systematics. Comparing healthy and mutated DNA sequences can diagnose different diseases including various cancers, characterize antibody repertoire, and can be used to guide patient treatment. Having a quick way to sequence DNA allows for faster and more individualized medical care to be administered, and for more organisms to be identified and cataloged. The rapid advancements in DNA seque ...