|

Algorithmic Information Theory

Algorithmic information theory (AIT) is a branch of theoretical computer science that concerns itself with the relationship between computation and information of computably generated objects (as opposed to stochastically generated), such as strings or any other data structure. In other words, it is shown within algorithmic information theory that computational incompressibility "mimics" (except for a constant that only depends on the chosen universal programming language) the relations or inequalities found in information theory. According to Gregory Chaitin, it is "the result of putting Shannon's information theory and Turing's computability theory into a cocktail shaker and shaking vigorously." Besides the formalization of a universal measure for irreducible information content of computably generated objects, some main achievements of AIT were to show that: in fact algorithmic complexity follows (in the self-delimited case) the same inequalities (except for a constant) that ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Theoretical Computer Science

Theoretical computer science is a subfield of computer science and mathematics that focuses on the Abstraction, abstract and mathematical foundations of computation. It is difficult to circumscribe the theoretical areas precisely. The Association for Computing Machinery, ACM's Special Interest Group on Algorithms and Computation Theory (SIGACT) provides the following description: History While logical inference and mathematical proof had existed previously, in 1931 Kurt Gödel proved with his incompleteness theorem that there are fundamental limitations on what statements could be proved or disproved. Information theory was added to the field with A Mathematical Theory of Communication, a 1948 mathematical theory of communication by Claude Shannon. In the same decade, Donald Hebb introduced a mathematical model of Hebbian learning, learning in the brain. With mounting biological data supporting this hypothesis with some modification, the fields of neural networks and para ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bayesian Inference

Bayesian inference ( or ) is a method of statistical inference in which Bayes' theorem is used to calculate a probability of a hypothesis, given prior evidence, and update it as more information becomes available. Fundamentally, Bayesian inference uses a prior distribution to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Introduction to Bayes' rule Formal explanation Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derive ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Kolmogorov Complexity

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program (in a predetermined programming language) that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963 and is a generalization of classical information theory. The notion of Kolmogorov complexity can be used to state and prove impossibility results akin to Cantor's diagonal argument, Gödel's incompleteness theorem, and Turing's halting problem. In particular, no program ''P'' computing a lower bound for each text's Kolmogorov complexity can return a value essentially larger than ''P'''s own len ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Rigour

Rigour (British English) or rigor (American English; see spelling differences) describes a condition of stiffness or strictness. These constraints may be environmentally imposed, such as "the rigours of famine"; logically imposed, such as mathematical proofs which must maintain consistent answers; or socially imposed, such as the process of defining ethics and law. Etymology "Rigour" comes to English through old French (13th c., Modern French '' rigueur'') meaning "stiffness", which itself is based on the Latin">Wiktionary:fr:rigueur">rigueur'') meaning "stiffness", which itself is based on the Latin ''rigorem'' (nominative ''rigor'') "numbness, stiffness, hardness, firmness; roughness, rudeness", from the verb ''rigere'' "to be stiff". The noun was frequently used to describe a condition of strictness or stiffness, which arises from a situation or constraint either chosen or experienced passively. For example, the title of the book ''Theologia Moralis Inter Rigorem et Lax ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Formal System

A formal system is an abstract structure and formalization of an axiomatic system used for deducing, using rules of inference, theorems from axioms. In 1921, David Hilbert proposed to use formal systems as the foundation of knowledge in mathematics. The term ''formalism'' is sometimes a rough synonym for ''formal system'', but it also refers to a given style of notation, for example, Paul Dirac's bra–ket notation. Concepts A formal system has the following: * Formal language, which is a set of well-formed formulas, which are strings of symbols from an alphabet, formed by a formal grammar (consisting of production rules or formation rules). * Deductive system, deductive apparatus, or proof system, which has rules of inference that take axioms and infers theorems, both of which are part of the formal language. A formal system is said to be recursive (i.e. effective) or recursively enumerable if the set of axioms and the set of inference rules are decidable ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

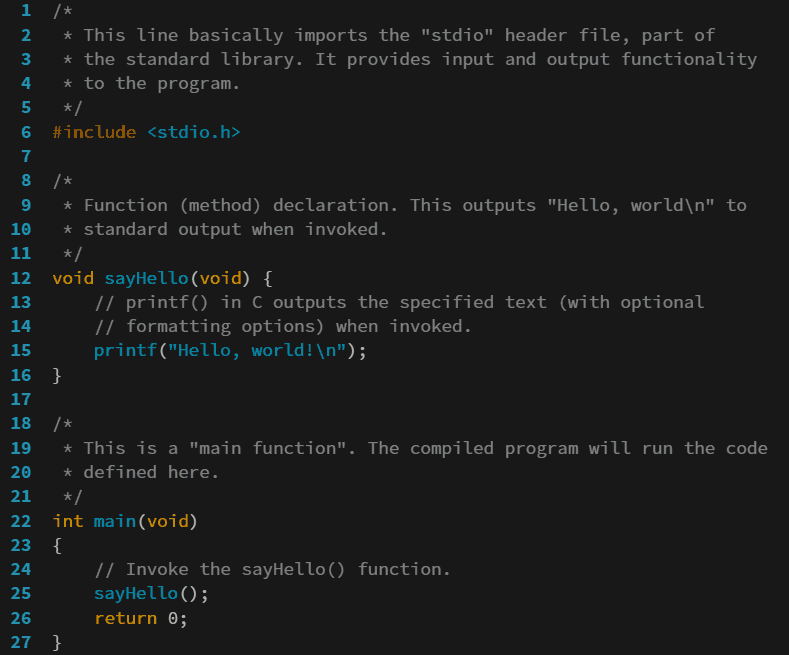

Programming Language

A programming language is a system of notation for writing computer programs. Programming languages are described in terms of their Syntax (programming languages), syntax (form) and semantics (computer science), semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, Variable (computer science), variables, and mechanisms for Exception handling (programming), error handling. An Programming language implementation, implementation of a programming language is required in order to Execution (computing), execute programs, namely an Interpreter (computing), interpreter or a compiler. An interpreter directly executes the source code, while a compiler produces an executable program. Computer architecture has strongly influenced the design of programming languages, with the most common type (imperative languages—which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Program (computing)

A computer program is a sequence or set of instructions in a programming language for a computer to execute. It is one component of software, which also includes documentation and other intangible components. A ''computer program'' in its human-readable form is called source code. Source code needs another computer program to execute because computers can only execute their native machine instructions. Therefore, source code may be translated to machine instructions using a compiler written for the language. (Assembly language programs are translated using an assembler.) The resulting file is called an executable. Alternatively, source code may execute within an interpreter written for the language. If the executable is requested for execution, then the operating system loads it into memory and starts a process. The central processing unit will soon switch to this process so it can fetch, decode, and then execute each machine instruction. If the source code is requested ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Asymptotic Complexity

In computer science, the computational complexity or simply complexity of an algorithm is the amount of resources required to run it. Particular focus is given to computation time (generally measured by the number of needed elementary operations) and memory storage requirements. The complexity of a problem is the complexity of the best algorithms that allow solving the problem. The study of the complexity of explicitly given algorithms is called analysis of algorithms, while the study of the complexity of problems is called computational complexity theory. Both areas are highly related, as the complexity of an algorithm is always an upper bound on the complexity of the problem solved by this algorithm. Moreover, for designing efficient algorithms, it is often fundamental to compare the complexity of a specific algorithm to the complexity of the problem to be solved. Also, in most cases, the only thing that is known about the complexity of a problem is that it is lower than the c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Algorithmic Probability

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s. It is used in inductive inference theory and analyses of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs. In the mathematical formalism used, the observations have the form of finite binary strings viewed as outputs of Turing machines, and the universal prior is a probability distribution over the set of finite binary strings calculated from a probability distribution over programs (that is, inputs to a universal Turing machine). The prior is universal in the Turing-computability sense, i.e. no string has zero probability. It is not computable, but it can be approximated. Formally, the probability P is not a probabil ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Kolmogorov Complexity

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program (in a predetermined programming language) that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963 and is a generalization of classical information theory. The notion of Kolmogorov complexity can be used to state and prove impossibility results akin to Cantor's diagonal argument, Gödel's incompleteness theorem, and Turing's halting problem. In particular, no program ''P'' computing a lower bound for each text's Kolmogorov complexity can return a value essentially larger than ''P'''s own len ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stationary Process

In mathematics and statistics, a stationary process (also called a strict/strictly stationary process or strong/strongly stationary process) is a stochastic process whose statistical properties, such as mean and variance, do not change over time. More formally, the joint probability distribution of the process remains the same when shifted in time. This implies that the process is statistically consistent across different time periods. Because many statistical procedures in time series analysis assume stationarity, non-stationary data are frequently transformed to achieve stationarity before analysis. A common cause of non-stationarity is a trend in the mean, which can be due to either a unit root or a deterministic trend. In the case of a unit root, stochastic shocks have permanent effects, and the process is not mean-reverting. With a deterministic trend, the process is called trend-stationary, and shocks have only transitory effects, with the variable tending towards a determin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |