Multivariate adaptive regression splines

In statistics, multivariate adaptive regression splines (MARS) is a form of regression analysis introduced by Jerome H. Friedman in 1991. It is a non-parametric regression technique and can be seen as an extension of linear models that automatically models nonlinearities and interactions between variables.

The term "MARS" is trademarked and licensed to Salford Systems. In order to avoid trademark infringements, many open-source implementations of MARS are called "Earth".

The basics

This section introduces MARS using a few examples. We start with a set of data: a matrix of input variables ''x'', and a vector of the observed responses ''y'', with a response for each row in ''x''. For example, the data could be:

Here there is only one independent variable, so the ''x'' matrix is just a single column. Given these measurements, we would like to build a model which predicts the expected ''y'' for a given ''x''.

A

A linear model

In statistics, the term linear model is used in different ways according to the context. The most common occurrence is in connection with regression models and the term is often taken as synonymous with linear regression model. However, the term ...

for the above data is

:

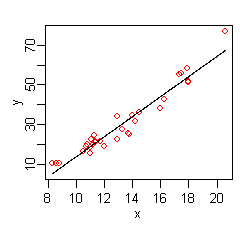

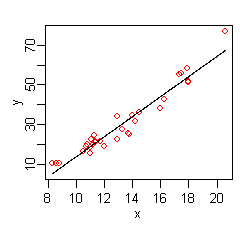

The hat on the indicates that is estimated from the data. The figure on the right shows a plot of this function:

a line giving the predicted versus ''x'', with the original values of ''y'' shown as red dots.

The data at the extremes of ''x'' indicates that the relationship between ''y'' and ''x'' may be non-linear (look at the red dots relative to the regression line at low and high values of ''x''). We thus turn to MARS to automatically build a model taking into account non-linearities. MARS software constructs a model from the given ''x'' and ''y'' as follows

:

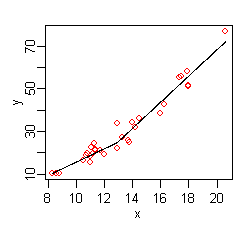

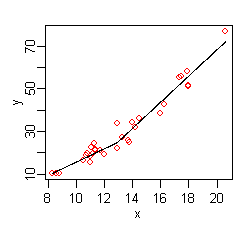

The figure on the right shows a plot of this function: the predicted $\hat{y}$ versus ''x'', with the original values of ''y'' once again shown as red dots. The predicted response is now a better fit to the original ''y'' values.

MARS has automatically produced a kink in the predicted ''y'' to take into account non-linearity. The kink is produced by ''hinge functions''. The hinge functions are the expressions starting with $\max$ (where $\max(a, b)$ is $a$ if $a > b$, else $b$). Hinge functions are described in more detail below.

In this simple example, we can easily see from the plot that ''y'' has a non-linear relationship with ''x'' (and might perhaps guess that ''y'' varies with the square of ''x''). However, in general there will be multiple independent variables, and the relationship between ''y'' and these variables will be unclear and not easily visible by plotting. We can use MARS to discover that non-linear relationship.

An example MARS expression with multiple variables is

:

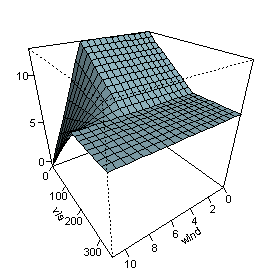

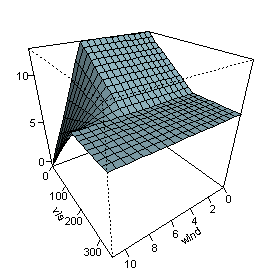

This expression models air pollution (the ozone level) as a function of the temperature and a few other variables. Note that the last term in the formula (on the last line) incorporates an interaction between wind and visibility.

The figure on the right plots the predicted ozone as wind and visibility vary, with the other variables fixed at their median values. The figure shows that wind does not affect the ozone level unless visibility is low. We see that MARS can build quite flexible regression surfaces by combining hinge functions.

To obtain the above expression, the MARS model building procedure automatically selects which variables to use (some variables are important, others not), the positions of the kinks in the hinge functions, and how the hinge functions are combined.

The MARS model

MARS builds models of the form

: $\hat{f}(x) = \sum_{i=1}^{k} c_i B_i(x)$

The model is a weighted sum of basis functions $B_i(x)$. Each $c_i$ is a constant coefficient. For example, each line in the formula for ozone above is one basis function multiplied by its coefficient.

Each basis function $B_i(x)$ takes one of the following three forms:

1) a constant 1. There is just one such term, the intercept. In the ozone formula above, the intercept term is 5.2.

2) a ''hinge'' function. A hinge function has the form $\max(0, x - \text{constant})$ or $\max(0, \text{constant} - x)$. MARS automatically selects variables and values of those variables for knots of the hinge functions. Examples of such basis functions can be seen in the middle three lines of the ozone formula.

3) a product of two or more hinge functions. These basis functions can model interaction between two or more variables. An example is the last line of the ozone formula.
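The three forms of basis function can be illustrated with a minimal Python sketch. The term list, variable names (`a`, `b`), coefficients, and knots below are hypothetical and chosen only for illustration; they are not the ozone model.

```python
def hinge(x, knot, sign=+1):
    """Return max(0, x - knot) if sign is +1, or max(0, knot - x) if sign is -1."""
    return max(0.0, sign * (x - knot))


# Each term is (coefficient, list of hinge factors).
# An empty factor list is the constant basis function 1 (the intercept).
# All numbers and variable names here are hypothetical.
TERMS = [
    (2.0, []),                                  # form 1: intercept
    (0.5, [("a", 10.0, +1)]),                   # form 2: a single hinge
    (-0.1, [("a", 10.0, +1), ("b", 4.0, -1)]),  # form 3: product of two hinges
]


def predict(terms, row):
    """Evaluate the weighted sum of basis functions for one observation."""
    total = 0.0
    for coef, factors in terms:
        basis = 1.0
        for var, knot, sign in factors:
            basis *= hinge(row[var], knot, sign)
        total += coef * basis
    return total


print(predict(TERMS, {"a": 12.0, "b": 1.0}))
print(predict(TERMS, {"a": 5.0, "b": 1.0}))
```

In the second call both non-constant terms vanish because `a` lies below the knot, so only the intercept contributes.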

Hinge functions

A key part of MARS models are ''hinge functions'' taking the form

:

or

:

where is a constant, called the ''knot''.

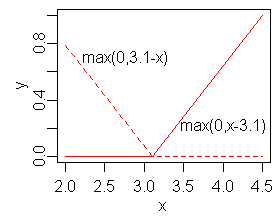

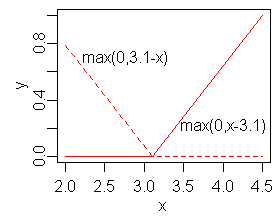

The figure on the right shows a mirrored pair of hinge functions with a knot at 3.1.

A hinge function is zero for part of its range, so can be used to partition the data into disjoint regions, each of which can be treated independently. Thus for example a mirrored pair of hinge functions in the expression

:

creates the

A key part of MARS models are ''hinge functions'' taking the form

:

or

:

where is a constant, called the ''knot''.

The figure on the right shows a mirrored pair of hinge functions with a knot at 3.1.

A hinge function is zero for part of its range, so can be used to partition the data into disjoint regions, each of which can be treated independently. Thus for example a mirrored pair of hinge functions in the expression

:

creates the piecewise

In mathematics, a piecewise-defined function (also called a piecewise function, a hybrid function, or definition by cases) is a function defined by multiple sub-functions, where each sub-function applies to a different interval in the domain. P ...

linear graph shown for the simple MARS model in the previous section.

One might assume that only piecewise linear functions can be formed from hinge functions, but hinge functions can be multiplied together to form non-linear functions.

Hinge functions are also called ramp, hockey stick, or rectifier functions. Instead of the $\max$ notation used in this article, hinge functions are often represented by $[\pm(x_i - c)]_+$ where $[\cdot]_+$ means take the positive part.
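A mirrored pair of hinge functions can be sketched in a few lines of Python. The knot 3.1 is the one mentioned for the figure; the intercept and slopes in `piecewise_linear` are hypothetical values chosen only to show how a weighted pair produces a kink.

```python
def positive_part(z):
    """The [z]_+ notation: z if z is positive, otherwise 0."""
    return max(0.0, z)


def mirrored_pair(x, knot):
    """Return (max(0, x - knot), max(0, knot - x)); at most one is non-zero."""
    return positive_part(x - knot), positive_part(knot - x)


KNOT = 3.1  # knot of the mirrored pair described in the text


def piecewise_linear(x, intercept=1.0, right_slope=2.0, left_slope=-0.5):
    """Weighted mirrored pair: a piecewise linear function with a kink at KNOT.

    The intercept and slopes are hypothetical.
    """
    right, left = mirrored_pair(x, KNOT)
    return intercept + right_slope * right + left_slope * left


for x in (1.1, 3.1, 5.1):
    print(x, mirrored_pair(x, KNOT), piecewise_linear(x))
```

Because each hinge is zero on one side of the knot, the two coefficients set the slopes on the two sides independently.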

The model building process

MARS builds a model in two phases: the forward and the backward pass. This two-stage approach is the same as that used by recursive partitioning trees.

The forward pass

MARS starts with a model which consists of just the intercept term (which is the mean of the response values).

MARS then repeatedly adds basis functions in pairs to the model. At each step it finds the pair of basis functions that gives the maximum reduction in sum-of-squares residual error (it is a greedy algorithm). The two basis functions in the pair are identical except that a different side of a mirrored hinge function is used for each function. Each new basis function consists of a term already in the model (which could perhaps be the intercept term) multiplied by a new hinge function. A hinge function is defined by a variable and a knot, so to add a new basis function, MARS must search over all combinations of the following:

1) existing terms (called ''parent terms'' in this context)

2) all variables (to select one for the new basis function)

3) all values of each variable (for the knot of the new hinge function).

To calculate the coefficient of each term, MARS applies a linear regression over the terms.

This process of adding terms continues until the change in residual error is too small to continue or until the maximum number of terms is reached. The maximum number of terms is specified by the user before model building starts.

The search at each step is done in a brute-force fashion, but a key aspect of MARS is that, because of the nature of hinge functions, the search can be done relatively quickly using a fast least-squares update technique. Actually, the search is not quite brute force: it can be sped up with a heuristic that reduces the number of parent terms to consider at each step ("Fast MARS").
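The greedy search described above can be sketched as follows. This is a simplified illustration, not Friedman's implementation: it refits a full least-squares regression for every candidate instead of using the fast update, omits the Fast MARS heuristic, and does not stop a variable from appearing twice in one product. The function names and the `min_improvement` threshold are assumptions made for this sketch.

```python
import numpy as np


def rss(B, y):
    """Residual sum of squares after regressing y on the columns of B."""
    coef, *_ = np.linalg.lstsq(B, y, rcond=None)
    resid = y - B @ coef
    return float(resid @ resid)


def forward_step(B, X, y):
    """Try every (parent term, variable, knot) and return the best mirrored pair.

    Returns (rss, parent, var, knot, new_basis_matrix).
    """
    best = None
    for parent in range(B.shape[1]):
        for var in range(X.shape[1]):
            for knot in np.unique(X[:, var]):
                pos = B[:, parent] * np.maximum(0.0, X[:, var] - knot)
                neg = B[:, parent] * np.maximum(0.0, knot - X[:, var])
                cand = np.column_stack([B, pos, neg])
                err = rss(cand, y)
                if best is None or err < best[0]:
                    best = (err, parent, var, knot, cand)
    return best


def forward_pass(X, y, max_terms=5, min_improvement=1e-8):
    """Start from the intercept and add pairs until no useful pair remains."""
    B = np.ones((X.shape[0], 1))  # intercept-only model (fits the mean of y)
    current = rss(B, y)
    while B.shape[1] + 2 <= max_terms:
        err, parent, var, knot, cand = forward_step(B, X, y)
        if current - err < min_improvement:
            break
        B, current = cand, err
    return B, current


# Synthetic data with a single kink at x = 5 (illustrative only).
x = np.linspace(0.0, 10.0, 21)
X = x.reshape(-1, 1)
y = 2.0 + 3.0 * np.maximum(0.0, x - 5.0)
B, err = forward_pass(X, y, max_terms=5)
print(B.shape[1], err)
```

On this noise-free data the first pair already fits exactly, so the loop stops before the term limit is reached.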

The backward pass

The forward pass usually builds an overfit model. (An overfit model has a good fit to the data used to build the model but will not generalize well to new data.) To build a model with better generalization ability, the backward pass prunes the model. It removes terms one by one, deleting the least effective term at each step until it finds the best submodel. Model subsets are compared using the generalized cross validation (GCV) criterion described below.

The backward pass has an advantage over the forward pass: at any step it can choose any term to delete, whereas the forward pass at each step can only see the next pair of terms.

The forward pass adds terms in pairs, but the backward pass typically discards one side of the pair, so terms are often not seen in pairs in the final model. A paired hinge can be seen in the equation for $\hat{y}$ in the first MARS example above; there are no complete pairs retained in the ozone example.
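The pruning loop can be sketched as below. This is an illustrative simplification under stated assumptions: the basis matrix `B` is taken as given (column 0 is the intercept and is never removed), the function names are hypothetical, the penalty defaults to 3, and the GCV criterion follows the formula given in the next section.

```python
import numpy as np


def gcv(B, y, penalty=3.0):
    """GCV of the least-squares fit of y on the columns of B."""
    n, terms = B.shape
    coef, *_ = np.linalg.lstsq(B, y, rcond=None)
    resid = y - B @ coef
    rss = float(resid @ resid)
    effective = terms + penalty * (terms - 1) / 2.0
    return rss / (n * (1.0 - effective / n) ** 2)


def backward_pass(B, y, penalty=3.0):
    """Delete one term at a time and return the best subset seen.

    Returns (kept column indices, GCV of that subset).
    """
    kept = list(range(B.shape[1]))
    best_cols, best_score = list(kept), gcv(B, y, penalty)
    while len(kept) > 1:
        # The least effective term is the one whose removal gives the lowest GCV.
        score, drop = min(
            (gcv(B[:, [c for c in kept if c != j]], y, penalty), j)
            for j in kept[1:]
        )
        kept.remove(drop)
        if score < best_score:
            best_cols, best_score = list(kept), score
    return best_cols, best_score


# Synthetic example: only the hinge at x = 5 carries real signal.
x = np.linspace(0.0, 10.0, 41)
y = 2.0 + 3.0 * np.maximum(0.0, x - 5.0) + 0.1 * np.sin(7.0 * x)
B = np.column_stack([
    np.ones_like(x),
    np.maximum(0.0, x - 5.0),
    np.maximum(0.0, 5.0 - x),
    np.maximum(0.0, x - 2.0),
])
cols, score = backward_pass(B, y)
print(cols, score)
```

The loop prunes all the way down to the intercept and remembers the subset with the lowest GCV, so it can return a model from any stage of the deletion sequence.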

Generalized cross validation

The backward pass uses generalized cross validation (GCV) to compare the performance of model subsets in order to choose the best subset: lower values of GCV are better. The GCV is a form of regularization: it trades off goodness-of-fit against model complexity.

(We want to estimate how well a model performs on ''new'' data, not on the training data. Such new data is usually not available at the time of model building, so instead we use GCV to estimate what performance would be on new data. The raw residual sum-of-squares (RSS) on the training data is inadequate for comparing models, because the RSS always increases as MARS terms are dropped. In other words, if the RSS were used to compare models, the backward pass would always choose the largest model, but the largest model typically does not have the best generalization performance.)

The formula for the GCV is

: $\mathrm{GCV} = \dfrac{\mathrm{RSS}}{N \cdot \left(1 - \dfrac{\text{effective number of parameters}}{N}\right)^2}$

where RSS is the residual sum-of-squares measured on the training data and ''N'' is the number of observations (the number of rows in the x matrix).

The effective number of parameters is defined in the MARS context as

: $\text{(effective number of parameters)} = \text{(number of MARS terms)} + \text{(penalty)} \cdot \dfrac{\text{(number of MARS terms)} - 1}{2}$

where penalty is about 2 or 3 (the MARS software allows the user to preset penalty).

Note that

: $\dfrac{\text{(number of MARS terms)} - 1}{2}$

is the number of hinge-function knots, so the formula penalizes the addition of knots. Thus the GCV formula adjusts (i.e. increases) the training RSS to take into account the flexibility of the model. We penalize flexibility because models that are too flexible will model the specific realization of noise in the data instead of just the systematic structure of the data.

Generalized cross-validation is so named because it uses a formula to approximate the error that would be determined by leave-one-out validation. It is just an approximation but works well in practice. GCV was introduced by Craven and Wahba and extended by Friedman for MARS.
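As a worked illustration of the GCV formula, consider two candidate models with purely hypothetical numbers: $N = 100$ observations, a penalty of 3, a 5-term model with training RSS 50, and a 7-term model with training RSS 48.

```latex
\begin{align*}
\text{5 terms:}\quad
  & \text{effective number of parameters} = 5 + 3 \cdot \frac{5 - 1}{2} = 11,
  & \mathrm{GCV} &= \frac{50}{100 \cdot (1 - 11/100)^2} = \frac{50}{79.21} \approx 0.631 \\
\text{7 terms:}\quad
  & \text{effective number of parameters} = 7 + 3 \cdot \frac{7 - 1}{2} = 16,
  & \mathrm{GCV} &= \frac{48}{100 \cdot (1 - 16/100)^2} = \frac{48}{70.56} \approx 0.680
\end{align*}
```

Although the larger model has the lower training RSS, its GCV is higher, so under these assumed numbers the backward pass would prefer the 5-term model.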

Constraints

One constraint has already been mentioned: the user can specify the maximum number of terms in the forward pass.

A further constraint can be placed on the forward pass by specifying a maximum allowable degree of interaction. Typically only one or two degrees of interaction are allowed, but higher degrees can be used when the data warrants it. The maximum degree of interaction in the first MARS example above is one (i.e. no interactions, or an ''additive model''); in the ozone example it is two.

Other constraints on the forward pass are possible. For example, the user can specify that interactions are allowed only for certain input variables. Such constraints could make sense because of knowledge of the process that generated the data.

Pros and cons

No regression modeling technique is best for all situations. The guidelines below are intended to give an idea of the pros and cons of MARS, but there will be exceptions to the guidelines. It is useful to compare MARS to recursive partitioning, and this is done below. (Recursive partitioning is also commonly called ''regression trees'', ''decision trees'', or CART; see the recursive partitioning article for details.)

*MARS models are more flexible than linear regression models.

*MARS models are simple to understand and interpret. Compare the equation for ozone concentration above to, say, the innards of a trained neural network or a random forest.

*MARS can handle both continuous and categorical data. MARS tends to be better than recursive partitioning for numeric data because hinges are more appropriate for numeric variables than the piecewise constant segmentation used by recursive partitioning.

*Building MARS models often requires little or no data preparation. The hinge functions automatically partition the input data, so the effect of outliers is contained. In this respect MARS is similar to recursive partitioning, which also partitions the data into disjoint regions, although using a different method. (Nevertheless, as with most statistical modeling techniques, known outliers should be considered for removal before training a MARS model.)

*MARS (like recursive partitioning) does automatic variable selection (meaning it includes important variables in the model and excludes unimportant ones). However, there can be some arbitrariness in the selection, especially when there are correlated predictors, and this can affect interpretability.

*MARS models tend to have a good bias-variance trade-off. The models are flexible enough to model non-linearity and variable interactions (thus MARS models have fairly low bias), yet the constrained form of MARS basis functions prevents too much flexibility (thus MARS models have fairly low variance).

*MARS is suitable for handling fairly large datasets. It is a routine matter to build a MARS model from an input matrix with, say, 100 predictors and $10^5$ observations. Such a model can be built in about a minute on a 1 GHz machine, assuming the maximum degree of interaction of MARS terms is limited to one (i.e. additive terms only). A degree-two model with the same data on the same 1 GHz machine takes longer, about 12 minutes. Be aware that these times are highly data dependent. Recursive partitioning is much faster than MARS.

*With MARS models, as with any non-parametric regression, parameter confidence intervals and other checks on the model cannot be calculated directly (unlike linear regression models). Cross-validation and related techniques must be used for validating the model instead.

*MARS models do not give as good fits as boosted trees, but can be built much more quickly and are more interpretable. (An 'interpretable' model is in a form that makes it clear what the effect of each predictor is.)

*The earth, mda, and polspline implementations do not allow missing values in predictors, but free implementations of regression trees (such as rpart and party) do allow missing values using a technique called surrogate splits.

*MARS models can make predictions quickly. The prediction function simply has to evaluate the MARS model formula. Compare that to making a prediction with, say, a support vector machine, where every variable has to be multiplied by the corresponding element of every support vector. That can be a slow process if there are many variables and many support vectors.

*The resulting fitted function is not smooth (not differentiable along hinges).

Extensions and related concepts

* Generalized linear models (GLMs) can be incorporated into MARS models by applying a link function after the MARS model is built. Thus, for example, MARS models can incorporate logistic regression to predict probabilities.

* Non-linear regression is used when the underlying form of the function is known and regression is used only to estimate the parameters of that function. MARS, on the other hand, estimates the functions themselves, albeit with severe constraints on the nature of the functions. (These constraints are necessary because discovering a model from the data is an inverse problem that is not well-posed without constraints on the model.)

* Recursive partitioning (commonly called CART). MARS can be seen as a generalization of recursive partitioning that allows the model to better handle numerical (i.e. non-categorical) data.

* Generalized additive models (GAMs). From the user's perspective GAMs are similar to MARS but (a) fit smooth loess or polynomial splines instead of MARS basis functions, and (b) do not automatically model variable interactions. The fitting method used internally by GAMs is very different from that of MARS. For models that do not require automatic discovery of variable interactions, GAMs often compete favorably with MARS.

* TSMARS. Time Series MARS is the term used when MARS models are applied in a time series context. Typically in this setup the predictors are the lagged time series values, resulting in autoregressive spline models. These models, and extensions to include moving average spline models, are described in "Univariate Time Series Modelling and Forecasting using TSMARS: A study of threshold time series autoregressive, seasonal and moving average models using TSMARS".

* Bayesian MARS (BMARS) uses the same model form, but builds the model using a Bayesian approach. It may arrive at different optimal MARS models because the model building approach is different. The result of BMARS is typically an ensemble of posterior samples of MARS models, which allows for probabilistic prediction.

See also

* Linear regression

* Local regression

* Rational function modeling

* Segmented regression

* Spline interpolation

* Spline regression

References

Further reading

* Hastie T., Tibshirani R., and Friedman J.H. (2009) ''The Elements of Statistical Learning'', 2nd edition. Springer (has a section on MARS)

* Faraway J. (2005) ''Extending the Linear Model with R'', CRC (has an example using MARS with R)

* Heping Zhang and Burton H. Singer (2010) ''Recursive Partitioning and Applications'', 2nd edition. Springer (has a chapter on MARS and discusses some tweaks to the algorithm)

* Denison D.G.T., Holmes C.C., Mallick B.K., and Smith A.F.M. (2004) ''Bayesian Methods for Nonlinear Classification and Regression'', Wiley

* Berk R.A. (2008) ''Statistical learning from a regression perspective'', Springer

External links

Several free and commercial software packages are available for fitting MARS-type models.

; Free software:

* R packages:

** earth function in the earth package

** mars function in the mda package

** polymars function in the polspline package. Not Friedman's MARS.

** bass function in the BASS package for Bayesian MARS.

* Matlab code:

** Code from the book ''Bayesian Methods for Nonlinear Classification and Regression'' (Denison, Holmes, Mallick, and Smith; Wiley, Chichester, England, 2002; ISBN 978-0-471-49036-4) for Bayesian MARS.

* Python:

** Earth – Multivariate adaptive regression splines

** py-earth

** pyBASS for Bayesian MARS.

; Commercial software:

* MARS from Salford Systems. Based on Friedman's implementation.

* STATISTICA Data Miner from StatSoft

* An implementation from SAS.