
Local Regression

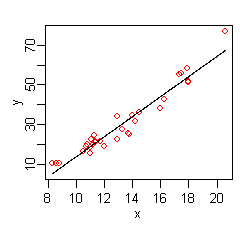

Local regression or local polynomial regression, also known as moving regression, is a generalization of the moving average and polynomial regression. Its most common methods, initially developed for scatterplot smoothing, are LOESS (locally estimated scatterplot smoothing) and LOWESS (locally weighted scatterplot smoothing). They are two strongly related non-parametric regression methods that combine multiple regression models in a *k*-nearest-neighbor-based meta-model. In some fields, LOESS is known and commonly referred to as the Savitzky–Golay filter (proposed 15 years before LOESS). LOESS and LOWESS thus build on "classical" methods, such as linear and nonlinear least squares regression. They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor. LOESS combines much of the simplicity of linear least squares regression with the flexibility of nonlinear regression. It does this by fit ...
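
The description above (many small regressions combined through a *k*-nearest-neighbor rule) can be illustrated with a minimal sketch. The excerpt is cut off before the method's details, so the specifics here are assumptions: a local straight-line fit, tricube weights, and the hypothetical names `loess_point` and `k`.

```python
def loess_point(x0, xs, ys, k):
    """Estimate the smoothed value at x0 from the k nearest points.

    Assumptions (not taken from the excerpt): a local straight-line fit
    and tricube weights that fall to zero at the farthest neighbor.
    """
    # Indices of the k points closest to x0.
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x0))[:k]
    max_dist = max(abs(xs[i] - x0) for i in nearest)
    if max_dist == 0:
        # All neighbors sit exactly at x0: fall back to their plain mean.
        return sum(ys[i] for i in nearest) / len(nearest)

    # Tricube weights: close points count most, the farthest counts zero.
    w = [(1 - (abs(xs[i] - x0) / max_dist) ** 3) ** 3 for i in nearest]
    sw = sum(w)
    x_bar = sum(wi * xs[i] for wi, i in zip(w, nearest)) / sw
    y_bar = sum(wi * ys[i] for wi, i in zip(w, nearest)) / sw

    # Weighted least-squares slope of the local line.
    sxx = sum(wi * (xs[i] - x_bar) ** 2 for wi, i in zip(w, nearest))
    if sxx == 0:
        return y_bar
    sxy = sum(wi * (xs[i] - x_bar) * (ys[i] - y_bar) for wi, i in zip(w, nearest))
    slope = sxy / sxx
    return y_bar + slope * (x0 - x_bar)


# Data lying exactly on y = 2x + 1; a local linear fit should reproduce it.
xs = [float(i) for i in range(10)]
ys = [2 * x + 1 for x in xs]
estimate = loess_point(4.5, xs, ys, k=5)
```

Evaluating `loess_point` over a grid of `x0` values traces out the smoothed curve; a larger `k` gives a smoother, less local fit.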

Loess Curve

Local regression or local polynomial regression, also known as moving regression, is a generalization of the moving average and polynomial regression. Its most common methods, initially developed for scatterplot smoothing, are LOESS (locally estimated scatterplot smoothing) and LOWESS (locally weighted scatterplot smoothing). They are two strongly related non-parametric regression methods that combine multiple regression models in a *k*-nearest-neighbor-based meta-model. In some fields, LOESS is known and commonly referred to as the Savitzky–Golay filter (proposed 15 years before LOESS). LOESS and LOWESS thus build on "classical" methods, such as linear and nonlinear least squares regression. They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor. LOESS combines much of the simplicity of linear least squares regression with the flexibility of nonlinear regression. It does this by f ...

Weighted Least Squares

Weighted least squares (WLS), also known as weighted linear regression, is a generalization of ordinary least squares and linear regression in which knowledge of the variance of observations is incorporated into the regression. WLS is also a specialization of generalized least squares. Introduction: A special case of generalized least squares called weighted least squares can be used when all the off-diagonal entries of $\Omega$, the covariance matrix of the residuals, are null; the variances of the observations (along the covariance matrix diagonal) may still be unequal (heteroscedasticity). The fit of a model to a data point is measured by its residual, $r_i$, defined as the difference between a measured value of the dependent variable, $y_i$, and the value predicted by the model, $f(x_i, \boldsymbol\beta)$: $r_i(\boldsymbol\beta) = y_i - f(x_i, \boldsymbol\beta)$. If the errors are uncorrelated and have equal variance, then the function S(\boldsymbol\beta) = \sum_i r_i(\boldsymbol\b ...
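
The residual definition above can be made concrete with a small sketch. The excerpt is truncated before the weighted objective is stated, so this example assumes the usual form, a sum of squared residuals each multiplied by a weight (typically the reciprocal of that observation's variance), and a straight-line model $f(x, \boldsymbol\beta) = \beta_0 + \beta_1 x$. The function names are hypothetical.

```python
def residuals(xs, ys, intercept, slope):
    """r_i = y_i - f(x_i, beta) for the straight-line model."""
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]


def weighted_line_fit(xs, ys, weights):
    """Closed-form weighted least-squares fit of y = intercept + slope * x.

    Assumption: `weights` are non-negative, e.g. 1 / variance of each
    observation, and the x values are not all identical.
    """
    sw = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, xs)) / sw
    y_bar = sum(w * y for w, y in zip(weights, ys)) / sw
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - x_bar) * (y - y_bar) for w, x, y in zip(weights, xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Points on the exact line y = 1 + 2x, with unequal (assumed) weights.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
weights = [1.0, 4.0, 1.0, 0.25]
intercept, slope = weighted_line_fit(xs, ys, weights)
fit_residuals = residuals(xs, ys, intercept, slope)
```

With noisy data, observations carrying larger weights pull the fitted line toward themselves; setting all weights equal reduces the fit to ordinary least squares.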

Multivariate Adaptive Regression Splines

In statistics, multivariate adaptive regression splines (MARS) is a form of regression analysis introduced by Jerome H. Friedman in 1991. It is a non-parametric regression technique and can be seen as an extension of linear models that automatically models nonlinearities and interactions between variables. The term "MARS" is trademarked and licensed to Salford Systems. In order to avoid trademark infringements, many open-source implementations of MARS are called "Earth". The basics: This section introduces MARS using a few examples. We start with a set of data: a matrix of input variables *x*, and a vector of the observed responses *y*, with a response for each row in *x*. In the example here there is only one independent variable, so the *x* matrix is just a single column. Given these measurements, we would like to build a model which predicts the expected *y* for a given *x*. A linear model for the example data is $\widehat{y} = -37 + 5.1x$. The ...

Moving Average

In statistics, a moving average (rolling average or running average) is a calculation to analyze data points by creating a series of averages of different subsets of the full data set. It is also called a moving mean (MM) or rolling mean and is a type of finite impulse response filter. Variations include: simple, cumulative, or weighted forms (described below). Given a series of numbers and a fixed subset size, the first element of the moving average is obtained by taking the average of the initial fixed subset of the number series. Then the subset is modified by "shifting forward"; that is, excluding the first number of the series and including the next value in the subset. A moving average is commonly used with time series data to smooth out short-term fluctuations and highlight longer-term trends or cycles. The threshold between short-term and long-term depends on the application, and the parameters of the moving average will be set accordingly. It is also used in economics ...
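
The "shifting forward" procedure described above can be sketched in a few lines. This covers only the simple (unweighted) form; the function name and the sample series are illustrative.

```python
def simple_moving_average(values, window):
    """Return the mean of each consecutive `window`-length subset of `values`."""
    if window <= 0 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    # First element: the average of the initial fixed subset.
    running_sum = sum(values[:window])
    averages = [running_sum / window]
    # Shift forward: drop the oldest value and include the next one.
    for i in range(window, len(values)):
        running_sum += values[i] - values[i - window]
        averages.append(running_sum / window)
    return averages


series = [1, 2, 3, 4, 5, 6]
smoothed = simple_moving_average(series, 3)  # [2.0, 3.0, 4.0, 5.0]
```

The output is shorter than the input by `window - 1` elements, because no average is produced until the first full subset is available.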

Moving Least Squares

Moving least squares is a method of reconstructing continuous functions from a set of unorganized point samples via the calculation of a weighted least squares measure biased towards the region around the point at which the reconstructed value is requested. In computer graphics, the moving least squares method is useful for reconstructing a surface from a set of points. Often it is used to create a 3D surface from a point cloud through either downsampling or upsampling. Definition: Consider a function $f: \mathbb{R}^n \to \mathbb{R}$ and a set of sample points $S = \{(x_i, f_i) \mid f(x_i) = f_i\}$. Then, the moving least squares approximation of degree $m$ at the point $x$ is $\tilde{p}(x)$, where $\tilde{p}$ minimizes the weighted least-squares error $\sum_{i} (p(x_i) - f_i)^2 \, \theta(\|x - x_i\|)$ over all polynomials $p$ of degree $m$ in $\mathbb{R}^n$. $\theta(s)$ is the weight, and it tends to zero as $s \to \infty$. In the example, $\theta(s) = e^{-s^2}$. The smooth interpolator of "order 3" is a quadratic interpolator. See also: Local regression, Diffus ...

Kernel Regression

In statistics, kernel regression is a non-parametric technique to estimate the conditional expectation of a random variable. The objective is to find a non-linear relation between a pair of random variables *X* and *Y*. In any nonparametric regression, the conditional expectation of a variable $Y$ relative to a variable $X$ may be written $\operatorname{E}(Y \mid X) = m(X)$, where $m$ is an unknown function. Nadaraya–Watson kernel regression: Nadaraya and Watson, both in 1964, proposed to estimate $m$ as a locally weighted average, using a kernel as a weighting function. The Nadaraya–Watson estimator is $\widehat{m}_h(x) = \frac{\sum_{i=1}^n K_h(x - x_i)\, y_i}{\sum_{i=1}^n K_h(x - x_i)}$, where $K_h$ is a kernel with a bandwidth $h$. Derivation: $\operatorname{E}(Y \mid X = x) = \int y f(y \mid x) \, dy = \int y \frac{f(x, y)}{f(x)} \, dy$. Using the kernel density estimation for the joint distribution $f(x, y)$ and $f(x)$ with a kernel $K$, $\hat{f}(x, y) = \frac{1}{n} \sum_{i=1}^n K_h(x - x_i) K_h(y - y_i)$ and $\hat{f}(x) = \frac{1}{n} \sum_{i=1}^n K_h(x - x_i)$, we get ...
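
The Nadaraya–Watson estimator above is a kernel-weighted average of the observed responses, which the following sketch computes directly. The Gaussian kernel, the bandwidth value, and the sample data are assumptions chosen for illustration; the text does not fix a particular kernel.

```python
import math


def gaussian_kernel(u):
    """Standard normal density, used here as the (assumed) kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def nadaraya_watson(x, xs, ys, h):
    """Locally weighted average of ys, with weights K((x - x_i) / h).

    The 1/h factor in K_h appears in both numerator and denominator
    and cancels, so it is omitted.
    """
    weights = [gaussian_kernel((x - xi) / h) for xi in xs]
    total = sum(weights)
    return sum(w * y for w, y in zip(weights, ys)) / total


xs = [-1.0, 0.0, 1.0]
ys = [0.0, 1.0, 0.0]
estimate_at_zero = nadaraya_watson(0.0, xs, ys, h=1.0)
```

Because the result is a weighted average with non-negative weights, it always lies between the smallest and largest observed response. A smaller `h` makes the estimate follow nearby points more closely.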

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of *N* independent scores, then the degrees of freedom is equal to the number of independent scores (*N*) minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to *N* − 1. Mathematically, degrees of freedom is the number of dimensions of the domain o ...

Outliers

In statistics, an outlier is a data point that differs significantly from other observations. An outlier may be due to a variability in the measurement, an indication of novel data, or it may be the result of experimental error; the latter are sometimes excluded from the data set. An outlier can be an indication of exciting possibility, but can also cause serious problems in statistical analyses. Outliers can occur by chance in any distribution, but they can indicate novel behaviour or structures in the data-set, measurement error, or that the population has a heavy-tailed distribution. In the case of measurement error, one wishes to discard them or use statistics that are robust to outliers, while in the case of heavy-tailed distributions, they indicate that the distribution has high skewness and that one should be very cautious in using tools or intuitions that assume a normal distribution. A frequent cause of outliers is a mixture of two distributions, which may be two dist ...

Robust Statistics

Robust statistics are statistics with good performance for data drawn from a wide range of probability distributions, especially for distributions that are not normal. Robust statistical methods have been developed for many common problems, such as estimating location, scale, and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from a parametric distribution. For example, robust methods work well for mixtures of two normal distributions with different standard deviations; under this model, non-robust methods like a t-test work poorly. Introduction: Robust statistics seek to provide methods that emulate popular statistical methods, but which are not unduly affected by outliers or other small departures from model assumptions. In statistics, classical estimation methods rely heavily on assumptions which are often ...

Finite Impulse Response

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response (or response to any finite length input) is of *finite* duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely (usually decaying). The impulse response (that is, the output in response to a Kronecker delta input) of an *N*th-order discrete-time FIR filter lasts exactly $N + 1$ samples (from first nonzero element through last nonzero element) before it then settles to zero. FIR filters can be discrete-time or continuous-time, and digital or analog. Definition: For a causal discrete-time FIR filter of order *N*, each value of the output sequence is a weighted sum of the most recent input values: $y[n] = b_0 x[n] + b_1 x[n-1] + \cdots + b_N x[n-N] = \sum_{i=0}^{N} b_i \cdot x[n-i]$, where $x[n]$ is the input signal, $y[n]$ is the output s ...
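
The weighted-sum definition above translates almost directly into code. This is a minimal sketch that assumes the input is zero before the first sample; the coefficient values are arbitrary illustrations.

```python
def fir_filter(b, x):
    """Apply a causal FIR filter: y[n] = sum_i b[i] * x[n - i].

    Assumption: samples before the start of `x` are treated as zero.
    """
    y = []
    for n in range(len(x)):
        acc = 0.0
        for i, b_i in enumerate(b):
            if n - i >= 0:
                acc += b_i * x[n - i]
        y.append(acc)
    return y


# Order N = 2 filter (three coefficients) driven by a Kronecker delta.
coefficients = [0.5, 0.3, 0.2]
impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
response = fir_filter(coefficients, impulse)
# response == [0.5, 0.3, 0.2, 0.0, 0.0, 0.0]
```

The impulse response reproduces the coefficients and lasts exactly $N + 1 = 3$ samples before settling to zero, matching the property stated above.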

Nonlinear Regression

In statistics, nonlinear regression is a form of regression analysis in which observational data are modeled by a function which is a nonlinear combination of the model parameters and depends on one or more independent variables. The data are fitted by a method of successive approximations. General: In nonlinear regression, a statistical model of the form $\mathbf{y} \sim f(\mathbf{x}, \boldsymbol\beta)$ relates a vector of independent variables, $\mathbf{x}$, and its associated observed dependent variables, $\mathbf{y}$. The function $f$ is nonlinear in the components of the vector of parameters $\boldsymbol\beta$, but otherwise arbitrary. For example, the Michaelis–Menten model for enzyme kinetics has two parameters and one independent variable, related by $f(x, \boldsymbol\beta) = \frac{\beta_1 x}{\beta_2 + x}$. This function is nonlinear because it cannot be expressed as a linear combination of the two $\beta$s. Systematic error may be present in the independent variables but its treatment is outside the scope o ...

Gaussian Function

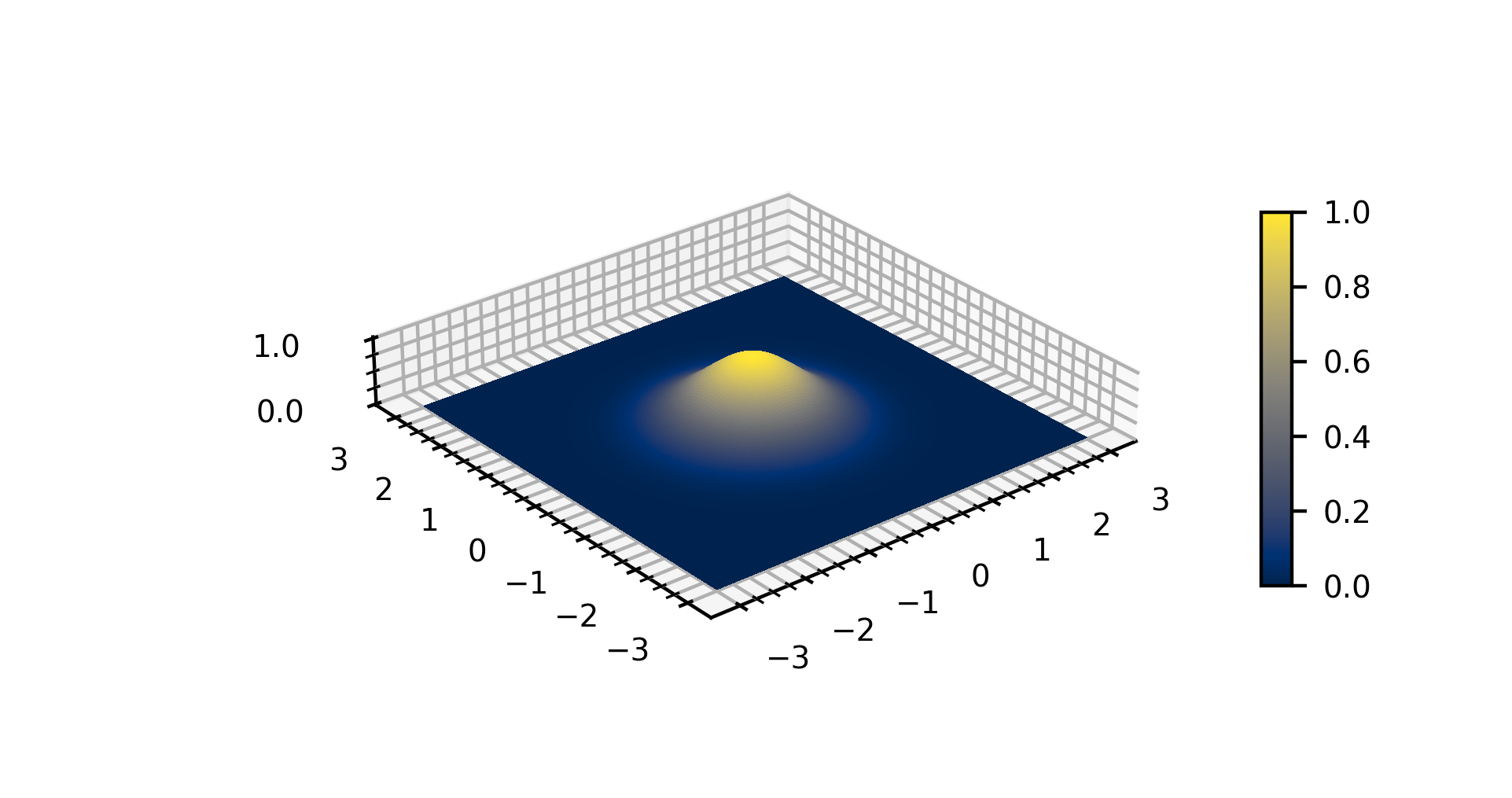

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form $f(x) = \exp(-x^2)$ and with parametric extension $f(x) = a \exp\left(-\frac{(x - b)^2}{2c^2}\right)$ for arbitrary real constants $a$, $b$ and non-zero $c$. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter $a$ is the height of the curve's peak, $b$ is the position of the center of the peak, and $c$ (the standard deviation, sometimes called the Gaussian RMS width) controls the width of the "bell". Gaussian functions are often used to represent the probability density function of a normally distributed random variable with expected value $\mu = b$ and variance $\sigma^2 = c^2$. In this case, the Gaussian is of the form $g(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{1}{2} \frac{(x - \mu)^2}{\sigma^2}\right)$. Gaussian functions are widely used in statistics to describe the normal distributions, in signal processing to define Gaussian filters, in image processing where two-dimen ...