
AVX-512

AVX-512 is a set of 512-bit extensions to the 256-bit Advanced Vector Extensions SIMD instructions for the x86 instruction set architecture (ISA), proposed by Intel in July 2013 and implemented in Intel's Xeon Phi x200 (Knights Landing) and Skylake-X CPUs; this includes the Core-X series (excluding the Core i5-7640X and Core i7-7740X), as well as the Xeon Scalable Processor Family and Xeon D-2100 Embedded Series. AVX-512 consists of multiple extensions that may be implemented independently. This policy is a departure from the historical requirement of implementing the entire instruction block. Only the core extension AVX-512F (AVX-512 Foundation) is required by all AVX-512 implementations. Besides widening most 256-bit instructions, the extensions introduce various new operations, such as new data conversions, scatter operations, and permutations. The number of AVX registers is increased from 16 to 32, and eight new "mask registers" are added, which allow for variable selection and blend ...
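The role of a mask register in a blend can be illustrated with a scalar model. The sketch below is not AVX-512 code and uses no intrinsics; the function name `masked_blend_i32` and the `LANES` constant are hypothetical, and it assumes sixteen 32-bit lanes (one 512-bit register) with one mask bit per lane.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LANES 16 /* sixteen 32-bit lanes fill one 512-bit register */

/* Scalar model of a masked blend (hypothetical helper, not an intrinsic):
 * lane i of dst takes a[i] when bit i of mask is set, and b[i] otherwise. */
void masked_blend_i32(int32_t dst[LANES], uint16_t mask,
                      const int32_t a[LANES], const int32_t b[LANES])
{
    assert(dst != NULL && a != NULL && b != NULL);
    for (size_t i = 0; i < LANES; i++) {
        dst[i] = ((mask >> i) & 1u) ? a[i] : b[i];
    }
}
```

With `mask = 0x0005`, only lanes 0 and 2 are taken from `a`; every other lane is taken from `b`.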

Advanced Vector Extensions

Advanced Vector Extensions (AVX) are extensions to the x86 instruction set architecture for microprocessors from Intel and Advanced Micro Devices (AMD). They were proposed by Intel in March 2008 and first supported by Intel with the Sandy Bridge processor shipping in Q1 2011 and later by AMD with the Bulldozer processor shipping in Q3 2011. AVX provides new features, new instructions and a new coding scheme. AVX2 (also known as Haswell New Instructions) expands most integer commands to 256 bits and introduces new instructions. It was first supported by Intel with the Haswell processor, which shipped in 2013. AVX-512 expands AVX to 512-bit support using a new EVEX prefix encoding proposed by Intel in July 2013 and first supported by Intel with the Knights Landing co-processor, which shipped in 2016. In conventional processors, AVX-512 was introduced with Skylake server and HEDT processors in 2017. AVX uses sixteen YMM registers to perform a s ...

Xeon Phi

Xeon Phi was a series of x86 manycore processors designed and made by Intel. It was intended for use in supercomputers, servers, and high-end workstations. Its architecture allowed use of standard programming languages and application programming interfaces (APIs) such as OpenMP. Xeon Phi launched in 2010. Since it was originally based on an earlier GPU design (codenamed "Larrabee") by Intel that was cancelled in 2009, it shared application areas with GPUs. The main difference between Xeon Phi and a GPGPU like Nvidia Tesla was that Xeon Phi, with an x86-compatible core, could, with less modification, run software that was originally targeted to a standard x86 CPU. Xeon Phi initially took the form of PCIe-based add-on cards; a second-generation product, codenamed Knights Landing, was announced in June 2013. These second-generation chips could be used as a standalone CPU, rather than just as an add-in card. In June 2013, the Tianhe-2 supercomputer at the National Supercomputer ...

Skylake (microarchitecture)

Skylake is the codename used by Intel for a processor microarchitecture that was launched in August 2015, succeeding the Broadwell microarchitecture. Skylake is a microarchitecture redesign using the same 14 nm manufacturing process technology as its predecessor, serving as a tock in Intel's tick–tock manufacturing and design model. According to Intel, the redesign brings greater CPU and GPU performance and reduced power consumption. Skylake CPUs share their microarchitecture with Kaby Lake, Coffee Lake, Cannon Lake, Whiskey Lake, and Comet Lake CPUs. Skylake is the last Intel platform on which Windows versions earlier than Windows 10 are officially supported by Microsoft, although enthusiast-created modifications exist that allow Windows 8.1 and earlier to continue to receive Windows Updates on later platforms. Some of the processors based on the Skylake microarchitecture are marketed as 6th-generation Core. Intel officially declared end of life and discontinued S ...

Cannon Lake (microarchitecture)

Cannon Lake (formerly Skymont) is Intel's codename for the 10 nm die shrink of the Kaby Lake microarchitecture. As a die shrink, Cannon Lake is a new process in Intel's process-architecture-optimization execution plan as the next step in semiconductor fabrication. Cannon Lake CPUs are the first mainstream CPUs to include the AVX-512 instruction set. Prior to Cannon Lake's launch, Intel launched another 14 nm process refinement with the codename Coffee Lake. The successor of Cannon Lake is Ice Lake, powered by the Sunny Cove microarchitecture, which represents the architecture phase in the process-architecture-optimization model. Design history and features: Cannon Lake was initially expected to be released in 2015/2016, but the release was pushed back to 2018. Intel demonstrated a laptop with an unknown Cannon Lake CPU at CES 2017 and announced that Cannon Lake based products would be available in 2018 at the earliest. At CES 2018 Intel announced tha ...

Permute Instruction

Permute (and Shuffle) instructions, part of bit manipulation as well as vector processing, copy unaltered contents from a source array to a destination array, where the indices are specified by a second source array. The size (bitwidth) of the source elements is not restricted but remains the same as the destination size. There exist two important permute variants, known as gather and scatter, respectively. The gather variant is as follows: for i = 0 to length-1, dest[i] = src[indices[i]]; whereas the scatter variant is: for i = 0 to length-1, dest[indices[i]] = src[i]. Note that unlike in memory-based gather-scatter (vector addressing), all three of dest, src, and indices are registers (or parts of registers in the case of bit-level permute), not memory locations. The scatter variant can be seen to "scatter" the source elements across (into) the destination, whereas the "gather" variant gathers data from the indexed source elements. Given th ...
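The two permute variants can be sketched in plain C. This is a scalar illustration, not a hardware permute: the arrays stand in for registers, the function names `permute_gather` and `permute_scatter` are hypothetical, and byte-sized elements are an assumption made for brevity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Gather form: dest[i] = src[indices[i]].
 * dest must not overlap src; every index must be below length. */
void permute_gather(uint8_t *dest, const uint8_t *src,
                    const uint8_t *indices, size_t length)
{
    for (size_t i = 0; i < length; i++) {
        assert(indices[i] < length);
        dest[i] = src[indices[i]];
    }
}

/* Scatter form: dest[indices[i]] = src[i].
 * If an index repeats, the later element overwrites the earlier one. */
void permute_scatter(uint8_t *dest, const uint8_t *src,
                     const uint8_t *indices, size_t length)
{
    for (size_t i = 0; i < length; i++) {
        assert(indices[i] < length);
        dest[indices[i]] = src[i];
    }
}
```

For `src = {10, 20, 30, 40}` and `indices = {3, 0, 2, 1}`, the gather form yields `{40, 10, 30, 20}` and the scatter form yields `{20, 40, 30, 10}`. When the indices form a true permutation, as here, the two results are inverse rearrangements of each other.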

Gather-scatter

Gather/scatter is a type of memory addressing that at once collects (gathers) from, or stores (scatters) data to, multiple, arbitrary indices. Examples of its use include sparse linear algebra operations, sorting algorithms, fast Fourier transforms, and some computational graph theory problems. It is the vector equivalent of register indirect addressing, with gather involving indexed reads, and scatter, indexed writes. Vector processors (and some SIMD units in CPUs) have hardware support for gather and scatter operations. Definitions: Gather: A sparsely populated vector y holding N non-empty elements can be represented by two densely populated vectors of length N: x, containing the non-empty elements of y, and idx, giving the index in y where each element of x is located. The gather of y into x, denoted $x \leftarrow y|_x$, assigns $x(i) = y(idx(i))$, with idx having already been calculated. Assuming no pointer aliasing between x[], y[], and idx[], a C implementation is ...
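The definition x(i) = y(idx(i)) can be written out as a short C sketch. This is an illustrative version under the stated no-aliasing assumption, expressed here with `restrict`; it is not necessarily the implementation the truncated text goes on to give, and the element type `double` and the function names are assumptions.

```c
#include <assert.h>
#include <stddef.h>

/* Gather: x[i] = y[idx[i]] for i in [0, n).
 * restrict encodes the assumption that x, y and idx do not alias. */
void gather(double *restrict x, const double *restrict y,
            const size_t *restrict idx, size_t n)
{
    assert(n == 0 || (x != NULL && y != NULL && idx != NULL));
    for (size_t i = 0; i < n; i++) {
        x[i] = y[idx[i]];
    }
}

/* Scatter: y[idx[i]] = x[i] for i in [0, n), the indexed-write counterpart.
 * The caller must ensure every idx[i] is a valid position in y. */
void scatter(double *restrict y, const double *restrict x,
             const size_t *restrict idx, size_t n)
{
    assert(n == 0 || (x != NULL && y != NULL && idx != NULL));
    for (size_t i = 0; i < n; i++) {
        y[idx[i]] = x[i];
    }
}
```

Gathering from a sparse `y` with `idx = {1, 4}` packs the two non-empty elements into a dense `x`; scattering `x` back through the same `idx` restores them to their positions in a zero-filled vector.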

SIMD

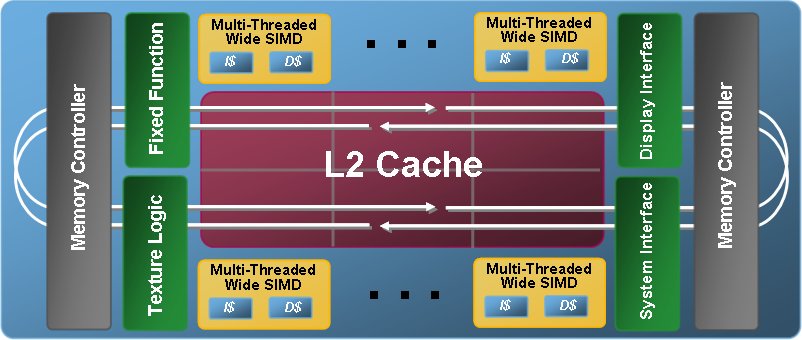

Single instruction, multiple data (SIMD) is a type of parallel processing in Flynn's taxonomy. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data-level parallelism, but not concurrency: there are simultaneous (parallel) computations, but each unit performs the exact same instruction at any given moment (just with different data). SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia use. SIMD has three different subcategories in Flynn's 1972 Taxonomy, one of which is SIMT. SIMT should not be confused with software ...

EVEX Prefix

The EVEX prefix (enhanced vector extension) and corresponding coding scheme is an extension to the 32-bit x86 (IA-32) and 64-bit x86-64 (AMD64) instruction set architecture. EVEX is based on, but should not be confused with, the MVEX prefix used by the Knights Corner processor. The EVEX scheme is a 4-byte extension to the VEX scheme which supports the AVX-512 instruction set and allows addressing new 512-bit ZMM registers and new 64-bit operand mask registers. Features: EVEX coding can address 8 operand mask registers, 16 general-purpose registers and 32 vector registers in 64-bit mode (otherwise, 8 general-purpose and 8 vector), and can support up to 4 operands. Like the VEX coding scheme, the EVEX prefix unifies existing opcode prefixes and escape codes, memory addressing and operand length modifiers of the x86 instruction set. The following features are carried over from the VEX scheme: * Direct encoding of three SIMD registers (XMM, YMM, or ZMM) as source operands (MMX or x8 ...

Cascade Lake (microarchitecture)

Cascade Lake is an Intel codename for a 14 nm server, workstation and enthusiast processor microarchitecture, launched in April 2019. In Intel's Process-Architecture-Optimization model, Cascade Lake is an optimization of Skylake. Intel states that this is their first microarchitecture to support 3D XPoint-based memory modules. It also features Deep Learning Boost instructions and mitigations for Meltdown and Spectre. Intel officially launched new Xeon Scalable SKUs on February 24, 2020. Variants: Server: Cascade Lake-SP, Cascade Lake-AP; Workstation: Cascade Lake-W; Enthusiast: Cascade Lake-X. List of Cascade Lake processors: Cascade Lake-X (Enthusiast); Cascade Lake-AP (Advanced Performance). Cascade Lake-AP is br ...

Larrabee (microarchitecture)

Larrabee is the codename for a cancelled GPGPU chip that Intel was developing separately from its current line of integrated graphics accelerators. It is named after either Mount Larrabee or Larrabee State Park in Whatcom County, Washington, near the town of Bellingham. The chip was to be released in 2010 as the core of a consumer 3D graphics card, but these plans were cancelled due to delays and disappointing early performance figures. The project to produce a GPU retail product directly from the Larrabee research project was terminated in May 2010 and its technology was passed on to the Xeon Phi. The Intel MIC multiprocessor architecture announced in 2010 inherited many design elements from the Larrabee project, but does not function as a graphics processing unit; the product is intended as a co-processor for high performance computing. Almost a decade later, on June 12, 2018, the idea of an Intel dedicated GPU was revived with Intel's desire to create a discrete GPU by ...

CLMUL Instruction Set

Carry-less Multiplication (CLMUL) is an extension to the x86 instruction set used by microprocessors from Intel and AMD which was proposed by Intel in March 2008 and made available in the Intel Westmere processors announced in early 2010. Mathematically, the instruction implements multiplication of polynomials over the finite field GF(2), where the bitstring $a_0 a_1 \ldots a_{63}$ represents the polynomial $a_0 + a_1 X + a_2 X^2 + \cdots + a_{63} X^{63}$. The CLMUL instruction also allows a more efficient implementation of the closely related multiplication in larger finite fields GF(2^k) than the traditional instruction set. One use of these instructions is to improve the speed of applications doing block cipher encryption in Galois/Counter Mode, which depends on finite field GF(2^k) multiplication. Another application is the fast calculation of CRC values, including those used to implement the LZ77 sliding window DEFLATE algorithm in zlib and pngcrush. ARMv8 also has a version of CLMU ...
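Carry-less multiplication can be modelled in portable C as a shift-and-XOR loop, where XOR replaces the addition used in ordinary multiplication. The sketch below is a bit-by-bit software model, not the hardware instruction or its intrinsic; the name `clmul64` is hypothetical, and bit i of each operand is taken as the coefficient of X^i.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Software model of a 64 x 64 -> 128-bit carry-less multiply
 * (hypothetical helper). Bit i of an operand is the coefficient of X^i;
 * partial products are combined with XOR, so no carries propagate. */
void clmul64(uint64_t a, uint64_t b, uint64_t *hi, uint64_t *lo)
{
    assert(hi != NULL && lo != NULL);
    uint64_t h = 0, l = 0;
    for (unsigned i = 0; i < 64; i++) {
        if ((b >> i) & 1u) {
            l ^= a << i;             /* low 64 bits of a * X^i */
            if (i != 0) {
                h ^= a >> (64 - i);  /* bits shifted past bit 63 */
            }
        }
    }
    *hi = h;
    *lo = l;
}
```

As a check on the polynomial reading, multiplying 3 by 3 computes (1 + X)(1 + X) = 1 + X^2 over GF(2), which is the bit pattern 5 rather than the integer product 9.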

Streaming SIMD Extensions

In computing, Streaming SIMD Extensions (SSE) is a single instruction, multiple data (SIMD) instruction set extension to the x86 architecture, designed by Intel and introduced in 1999 in their Pentium III series of central processing units (CPUs) shortly after the appearance of Advanced Micro Devices' (AMD's) 3DNow!. SSE contains 70 new instructions (65 unique mnemonics using 70 encodings), most of which work on single precision floating-point data. SIMD instructions can greatly increase performance when exactly the same operations are to be performed on multiple data objects. Typical applications are digital signal processing and graphics processing. Intel's first IA-32 SIMD effort was the MMX instruction set. MMX had two main problems: it re-used existing x87 floating-point registers, making the CPUs unable to work on both floating-point and SIMD data at the same time, and it only worked on integers. SSE floating-point instructions operate on a new independent register set ...