History

Wai-Kai Chen wrote: "The concept of a signal-flow graph was originally worked out by Shannon 942ref name=Shannon> Reprinted in in dealing with analog computers. The greatest credit for the formulation of signal-flow graphs is normally extended to MasonMIT Research Laboratory of Electronics

He showed how to use the signal-flow graph technique to solve some difficult electronic problems in a relatively simple manner. The term signal flow graph was used because of its original application to electronic problems and the association with electronic signals and flowcharts of the systems under study." Lorens wrote: "Previous to Mason's work, C. E. Shannon worked out a number of the properties of what are now known as flow graphs. Unfortunately, the paper originally had a restricted classification and very few people had access to the material." "The rules for the evaluation of the graph determinant of a Mason Graph were first given and proven by Shannon 942using mathematical induction. His work remained essentially unknown even after Mason published his classical work in 1953. Three years later, Mason

Domain of application

Robichaud ''et al.'' identify the domain of application of SFGs as follows: :"All the physical systems analogous to these networks onstructed of ideal transformers, active elements and gyratorsconstitute the domain of application of the techniques developed ere Trent has shown that all the physical systems which satisfy the following conditions fall into this category. # The finite lumped system is composed of a number of simple parts, each of which has known dynamical properties which can be defined by equations using two types of scalar variables and parameters of the system. Variables of the first type represent quantities which can be measured, at least conceptually, by attaching an indicating instrument to two connection points of the element. Variables of the second type characterize quantities which can be measured by connecting a meter in series with the element. Relative velocities and positions, pressure differentials and voltages are typical quantities of the first class, whereas electric currents, forces, rates of heat flow, are variables of the second type. Firestone has been the first to distinguish these two types of variables with the names ''across variables'' and ''through variables''. # Variables of the first type must obey a mesh law, analogous to Kirchhoff's voltage law, whereas variables of the second type must satisfy an incidence law analogous to Kirchhoff's current law. # Physical dimensions of appropriate products of the variables of the two types must be consistent. For the systems in which these conditions are satisfied, it is possible to draw a linear graph isomorphic with the dynamical properties of the system as described by the chosen variables. The techniques ..can be applied directly to these linear graphs as well as to electrical networks, to obtain a signal flow graph of the system."Basic flow graph concepts

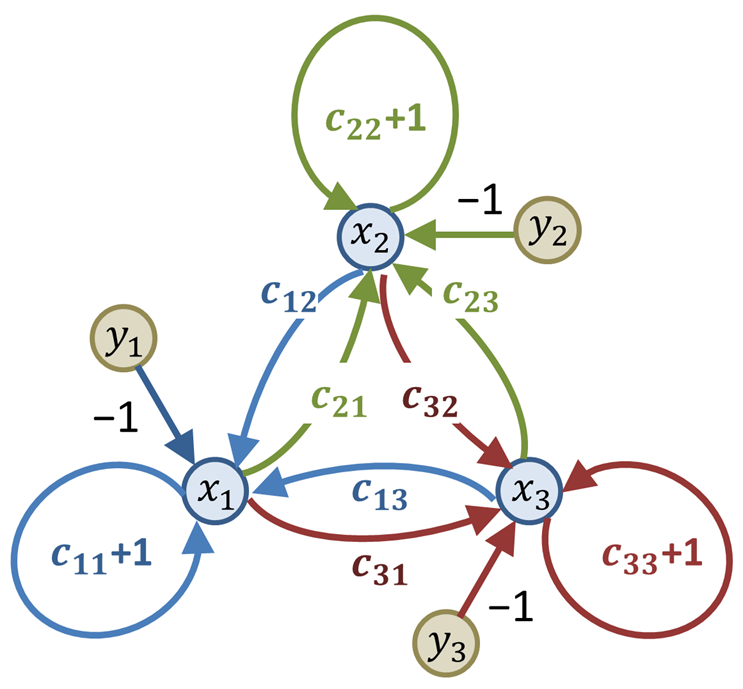

The following illustration and its meaning were introduced by Mason to illustrate basic concepts:Choosing the variables

Non-uniqueness

Robichaud et al. wrote: "The signal flow graph contains the same information as the equations from which it is derived; but there does not exist a one-to-one correspondence between the graph and the system of equations. One system will give different graphs according to the order in which the equations are used to define the variable written on the left-hand side." If all equations relate all dependent variables, then there are ''n!'' possible SFGs to choose from.Linear signal-flow graphs

Linear signal-flow graph (SFG) methods only apply to linear time-invariant systems, as studied by their associated theory. When modeling a system of interest, the first step is often to determine the equations representing the system's operation without assigning causes and effects (this is called acausal modeling). A SFG is then derived from this system of equations. A linear SFG consists of nodes indicated by dots and weighted directional branches indicated by arrows. The nodes are the variables of the equations and the branch weights are the coefficients. Signals may only traverse a branch in the direction indicated by its arrow. The elements of a SFG can only represent the operations of multiplication by a coefficient and addition, which are sufficient to represent the constrained equations. When a signal traverses a branch in its indicated direction, the signal is multiplied the weight of the branch. When two or more branches direct into the same node, their outputs are added. For systems described by linear algebraic or differential equations, the signal-flow graph is mathematically equivalent to the system of equations describing the system, and the equations governing the nodes are discovered for each node by summing incoming branches to that node. These incoming branches convey the contributions of the other nodes, expressed as the connected node value multiplied by the weight of the connecting branch, usually a real number or function of some parameter (for example a Laplace transform variable ''s''). For linear active networks, Choma writes: "By a 'signal flow representation' r 'graph', as it is commonly referred towe mean a diagram that, by displaying the algebraic relationships among relevant branch variables of network, paints an unambiguous picture of the way an applied input signal ‘flows’ from input-to-output ... ports." A motivation for a SFG analysis is described by Chen: Partly accessible usinAmazon's look-inside feature

:"The analysis of a linear system reduces ultimately to the solution of a system of linear algebraic equations. As an alternative to conventional algebraic methods of solving the system, it is possible to obtain a solution by considering the properties of certain directed graphs associated with the system." Solving_linear_equations..html" ;"title="#Solving linear equations">Solving linear equations.">#Solving linear equations">Solving linear equations."The unknowns of the equations correspond to the nodes of the graph, while the linear relations between them appear in the form of directed edges connecting the nodes. ...The associated directed graphs in many cases can be set up directly by inspection of the physical system without the necessity of first formulating the →associated equations..."

Basic components

A linear signal flow graph is related to a system of linear equations

See, for example, However, there is not a one-to-one correspondence:

of the following form:

:

::where = transmittance (or gain) from to .

The figure to the right depicts various elements and constructs of a signal flow graph (SFG).

:Exhibit (a) is a node. In this case, the node is labeled . A node is a vertex representing a variable or signal.

::A ''source'' node has only outgoing branches (represents an independent variable). As a special case, an ''input'' node is characterized by having one or more attached arrows pointing away from the node and no arrows pointing into the node. Any open, complete SFG will have at least one input node.

::An ''output'' or ''sink'' node has only incoming branches (represents a dependent variable). Although any node can be an output, explicit output nodes are often used to provide clarity. Explicit output nodes are characterized by having one or more attached arrows pointing into the node and no arrows pointing away from the node. Explicit output nodes are not required.

::A ''mixed'' node has both incoming and outgoing branches.

:Exhibit (b) is a branch with a multiplicative gain of . The meaning is that the output, at the tip of the arrow, is times the input at the tail of the arrow. The gain can be a simple constant or a function (for example: a function of some transform variable such as , , or , for Laplace, Fourier or Z-transform relationships).

:Exhibit (c) is a branch with a multiplicative gain of one. When the gain is omitted, it is assumed to be unity.

:Exhibit (d) is an input node. In this case, is multiplied by the gain .

:Exhibit (e) is an explicit output node; the incoming edge has a gain of .

:Exhibit (f) depicts addition. When two or more arrows point into a node, the signals carried by the edges are added.

:Exhibit (g) depicts a simple loop. The loop gain is .

:Exhibit (h) depicts the expression .

Terms used in linear SFG theory also include:

*Path. A path is a continuous set of branches traversed in the direction indicated by the branch arrows.

** Open path. If no node is re-visited, the path is open.

** Forward path. A path from an input node (source) to an output node (sink) that does not re-visit any node.

*Path gain: the product of the gains of all the branches in the path.

* Loop. A closed path. (it originates and ends on the same node, and no node is touched more than once).

*Loop gain: the product of the gains of all the branches in the loop.

* Non-touching loops. Non-touching loops have no common nodes.

* Graph reduction. Removal of one or more nodes from a graph using graph transformations.

** Residual node. In any contemplated process of graph reduction, the nodes to be retained in the new graph are called residual nodes.

* Splitting a node. Splitting a node corresponds to splitting a node into two half nodes, one being a sink and the other a source.

* Index: The index of a graph is the minimum number of nodes which have to be split in order to remove all the loops in a graph.

** Index node. The nodes that are split to determine the index of a graph are called ''index'' nodes, and in general they are not unique.

A linear signal flow graph is related to a system of linear equations

See, for example, However, there is not a one-to-one correspondence:

of the following form:

:

::where = transmittance (or gain) from to .

The figure to the right depicts various elements and constructs of a signal flow graph (SFG).

:Exhibit (a) is a node. In this case, the node is labeled . A node is a vertex representing a variable or signal.

::A ''source'' node has only outgoing branches (represents an independent variable). As a special case, an ''input'' node is characterized by having one or more attached arrows pointing away from the node and no arrows pointing into the node. Any open, complete SFG will have at least one input node.

::An ''output'' or ''sink'' node has only incoming branches (represents a dependent variable). Although any node can be an output, explicit output nodes are often used to provide clarity. Explicit output nodes are characterized by having one or more attached arrows pointing into the node and no arrows pointing away from the node. Explicit output nodes are not required.

::A ''mixed'' node has both incoming and outgoing branches.

:Exhibit (b) is a branch with a multiplicative gain of . The meaning is that the output, at the tip of the arrow, is times the input at the tail of the arrow. The gain can be a simple constant or a function (for example: a function of some transform variable such as , , or , for Laplace, Fourier or Z-transform relationships).

:Exhibit (c) is a branch with a multiplicative gain of one. When the gain is omitted, it is assumed to be unity.

:Exhibit (d) is an input node. In this case, is multiplied by the gain .

:Exhibit (e) is an explicit output node; the incoming edge has a gain of .

:Exhibit (f) depicts addition. When two or more arrows point into a node, the signals carried by the edges are added.

:Exhibit (g) depicts a simple loop. The loop gain is .

:Exhibit (h) depicts the expression .

Terms used in linear SFG theory also include:

*Path. A path is a continuous set of branches traversed in the direction indicated by the branch arrows.

** Open path. If no node is re-visited, the path is open.

** Forward path. A path from an input node (source) to an output node (sink) that does not re-visit any node.

*Path gain: the product of the gains of all the branches in the path.

* Loop. A closed path. (it originates and ends on the same node, and no node is touched more than once).

*Loop gain: the product of the gains of all the branches in the loop.

* Non-touching loops. Non-touching loops have no common nodes.

* Graph reduction. Removal of one or more nodes from a graph using graph transformations.

** Residual node. In any contemplated process of graph reduction, the nodes to be retained in the new graph are called residual nodes.

* Splitting a node. Splitting a node corresponds to splitting a node into two half nodes, one being a sink and the other a source.

* Index: The index of a graph is the minimum number of nodes which have to be split in order to remove all the loops in a graph.

** Index node. The nodes that are split to determine the index of a graph are called ''index'' nodes, and in general they are not unique.

Systematic reduction to sources and sinks

A signal-flow graph may be simplified by graph transformation rules. These simplification rules are also referred to as ''signal-flow graph algebra''. The purpose of this reduction is to relate the dependent variables of interest (residual nodes, sinks) to its independent variables (sources). The systematic reduction of a linear signal-flow graph is a graphical method equivalent to the Gauss-Jordan elimination method for solving linear equations. The rules presented below may be applied over and over until the signal flow graph is reduced to its "minimal residual form". Further reduction can require loop elimination or the use of a "reduction formula" with the goal to directly connect sink nodes representing the dependent variables to the source nodes representing the independent variables. By these means, any signal-flow graph can be simplified by successively removing internal nodes until only the input and output and index nodes remain. Robichaud described this process of systematic flow-graph reduction: For digitally reducing a flow graph using an algorithm, Robichaud extends the notion of a simple flow graph to a ''generalized'' flow graph: The definition of an elementary transformation varies from author to author: * Some authors only consider as elementary transformations the summation of parallel-edge gains and the multiplication of series-edge gains, but not the elimination of self-loops * Other authors consider the elimination of a self-loop as an elementary transformation Parallel edges. Replace parallel edges with a single edge having a gain equal to the sum of original gains.Implementations

The above procedure for building the SFG from an acausal system of equations and for solving the SFG's gains have been implemented as an add-on to MATHLAB 68, an on-line system providing machine aid for the mechanical symbolic processes encountered in analysis.Solving linear equations

Signal flow graphs can be used to solve sets of simultaneous linear equations."... solving a set of simultaneous, linear algebraic equations. This problem, usually solved by matrix methods, can also be solved via graph theory. " also on-line a/ref> The set of equations must be consistent and all equations must be linearly independent.

Putting the equations in "standard form"

For M equations with N unknowns where each yj is a known value and each xj is an unknown value, there is equation for each known of the following form.

: ; the usual form for simultaneous linear equations with 1 ≤ j ≤ M

Although it is feasible, particularly for simple cases, to establish a signal flow graph using the equations in this form, some rearrangement allows a general procedure that works easily for any set of equations, as now is presented. To proceed, first the equations are rewritten as

:

and further rewritten as

:

and finally rewritten as

: ; form suitable to be expressed as a signal flow graph.

::where δkj =

For M equations with N unknowns where each yj is a known value and each xj is an unknown value, there is equation for each known of the following form.

: ; the usual form for simultaneous linear equations with 1 ≤ j ≤ M

Although it is feasible, particularly for simple cases, to establish a signal flow graph using the equations in this form, some rearrangement allows a general procedure that works easily for any set of equations, as now is presented. To proceed, first the equations are rewritten as

:

and further rewritten as

:

and finally rewritten as

: ; form suitable to be expressed as a signal flow graph.

::where δkj = /ref> Often the known values, yj are taken as the primary causes and the unknowns values, xj to be effects, but regardless of this interpretation, the last form for the set of equations can be represented as a signal-flow graph. This point is discussed further in the subsection #Interpreting 'causality', Interpreting 'causality'.

Applying Mason's gain formula

In the most general case, the values for all the xk variables can be calculated by computing Mason's gain formula for the path from each yj to each xk and using superposition. : ::where Gkj = the sum of Mason's gain formula computed for all the paths from input yj to variable xk. In general, there are N-1 paths from yj to variable xk so the computational effort to calculated Gkj is proportional to N-1. Since there are M values of yj, Gkj must be computed M times for a single value of xk. The computational effort to calculate a single xk variable is proportional to (N-1)(M). The effort to compute all the xk variables is proportional to (N)(N-1)(M). If there are N equations and N unknowns, then the computation effort is on the order of N3.Relation to block diagrams

Interpreting 'causality'

The term "cause and effect" was applied by Mason to SFGs: :"The process of constructing a graph is one of tracing a succession of cause and effects through the physical system. One variable is expressed as an explicit effect due to certain causes; they in turn, are recognized as effects due to still other causes." :::— S.J. Mason: Section IV: ''Illustrative applications of flow graph technique'' and has been repeated by many later authors: For example, see :"The ''signal flow graph'' is another visual tool for representing causal relationships between components of the system. It is a simplified version of a block diagram introduced by S.J. Mason as a cause-and-effect representation of linear systems." :::— Arthur G.O. Mutambara: ''Design and Analysis of Control Systems'', p.238 However, Mason's paper is concerned to show in great detail how a ''set of equations'' is connected to an SFG, an emphasis unrelated to intuitive notions of "cause and effect". Intuitions can be helpful for arriving at an SFG or for gaining insight from an SFG, but are inessential to the SFG. The essential connection of the SFG is to its own set of equations, as described, for example, by Ogata: :"A signal-flow graph is a diagram that represents a set of simultaneous algebraic equations. When applying the signal flow graph method to analysis of control systems, we must first transform linear differential equations into algebraic equations in Laplace_transform_variable.html" ;"title="he Laplace transform variable">he Laplace transform variable''s''.." :::— Katsuhiko Ogata: ''Modern Control Engineering'', p. 104 There is no reference to "cause and effect" here, and as said by Barutsky: :"Like block diagrams, signal flow graphs represent the computational, not the physical structure of a system." :::— Wolfgang Borutzky, ''Bond Graph Methodology'', p. 10 The term "cause and effect" may be misinterpreted as it applies to the SFG, and taken incorrectly to suggest a system view of causality, rather than a ''computationally'' based meaning. To keep discussion clear, it may be advisable to use the term "computational causality", as is suggested for bond graphs: :"Bond-graph literature uses the term computational causality, indicating the order of calculation in a simulation, in order to avoid any interpretation in the sense of intuitive causality." The term "computational causality" is explained using the example of current and voltage in a resistor: :"The ''computational causality'' of physical laws can therefore not be predetermined, but depends upon the particular use of that law. We cannot conclude whether it is the current flowing through a resistor that causes a voltage drop, or whether it is the difference in potentials at the two ends of the resistor that cause current to flow. Physically these are simply two concurrent aspects of one and the same physical phenomenon. Computationally, we may have to assume at times one position, and at other times the other." :::— François Cellier & Ernesto Kofman: §1.5 ''Simulation software today and tomorrow'', p. 15 A computer program or algorithm can be arranged to solve a set of equations using various strategies. They differ in how they prioritize finding some of the variables in terms of the others, and these algorithmic decisions, which are simply about solution strategy, then set up the variables expressed as dependent variables earlier in the solution to be "effects", determined by the remaining variables that now are "causes", in the sense of "computational causality". Using this terminology, it is ''computational'' causality, not ''system'' causality, that is relevant to the SFG. There exists a wide-ranging philosophical debate, not concerned specifically with the SFG, over connections between computational causality and system causality.See, for example,Signal-flow graphs for analysis and design

Signal-flow graphs can be used for analysis, that is for understanding a model of an existing system, or for synthesis, that is for determining the properties of a design alternative.Signal-flow graphs for dynamic systems analysis

When building a model of a dynamic system, a list of steps is provided by Dorf & Bishop: * Define the system and its components. * Formulate the mathematical model and list the needed assumptions. * Write the differential equations describing the model. * Solve the equations for the desired output variables. * Examine the solutions and the assumptions. * If needed, reanalyze or redesign the system. ::—RC Dorf and RH Bishop, ''Modern Control Systems'', Chapter 2, p. 2 In this workflow, equations of the physical system's mathematical model are used to derive the signal-flow graph equations.Signal-flow graphs for design synthesis

Signal-flow graphs have been used in Design Space Exploration (DSE), as an intermediate representation towards a physical implementation. The DSE process seeks a suitable solution among different alternatives. In contrast with the typical analysis workflow, where a system of interest is first modeled with the physical equations of its components, the specification for synthesizing a design could be a desired transfer function. For example, different strategies would create different signal-flow graphs, from which implementations are derived. Another example uses an annotated SFG as an expression of the continuous-time behavior, as input to an architecture generatorShannon and Shannon-Happ formulas

Shannon's formula is an analytic expression for calculating the gain of an interconnected set of amplifiers in an analog computer. During World War II, while investigating the functional operation of an analog computer, Claude Shannon developed his formula. Because of wartime restrictions, Shannon's work was not published at that time, and, in 1952, Mason rediscovered the same formula. William W. Happ generalized the Shannon formula for topologically closed systems. The Shannon-Happ formula can be used for deriving transfer functions, sensitivities, and error functions. For a consistent set of linear unilateral relations, the Shannon-Happ formula expresses the solution using direct substitution (non-iterative). NASA's electrical circuit software NASAP is based on the Shannon-Happ formula.Linear signal-flow graph examples

Simple voltage amplifier

The amplification of a signal ''V1'' by an amplifier with gain ''a12'' is described mathematically by :: This relationship represented by the signal-flow graph of Figure 1. is that V2 is dependent on V1 but it implies no dependency of V1 on V2. See Kou page 57.Ideal negative feedback amplifier

A possible SFG for the

A possible SFG for the Electrical circuit containing a two-port network

The figure to the right depicts a circuit that contains a ''y''-parameter two-port network. Vin is the input of the circuit and V2 is the output. The two-port equations impose a set of linear constraints between its port voltages and currents. The terminal equations impose other constraints. All these constraints are represented in the SFG (Signal Flow Graph) below the circuit. There is only one path from input to output which is shown in a different color and has a (voltage) gain of -RLy21. There are also three loops: -Riny11, -RLy22, Riny21RLy12. Sometimes a loop indicates intentional feedback but it can also indicate a constraint on the relationship of two variables. For example, the equation that describes a resistor says that the ratio of the voltage across the resistor to the current through the resistor is a constant which is called the resistance. This can be interpreted as the voltage is the input and the current is the output, or the current is the input and the voltage is the output, or merely that the voltage and current have a linear relationship. Virtually all passive two terminal devices in a circuit will show up in the SFG as a loop.

The SFG and the schematic depict the same circuit, but the schematic also suggests the circuit's purpose. Compared to the schematic, the SFG is awkward but it does have the advantage that the input to output gain can be written down by inspection using

The figure to the right depicts a circuit that contains a ''y''-parameter two-port network. Vin is the input of the circuit and V2 is the output. The two-port equations impose a set of linear constraints between its port voltages and currents. The terminal equations impose other constraints. All these constraints are represented in the SFG (Signal Flow Graph) below the circuit. There is only one path from input to output which is shown in a different color and has a (voltage) gain of -RLy21. There are also three loops: -Riny11, -RLy22, Riny21RLy12. Sometimes a loop indicates intentional feedback but it can also indicate a constraint on the relationship of two variables. For example, the equation that describes a resistor says that the ratio of the voltage across the resistor to the current through the resistor is a constant which is called the resistance. This can be interpreted as the voltage is the input and the current is the output, or the current is the input and the voltage is the output, or merely that the voltage and current have a linear relationship. Virtually all passive two terminal devices in a circuit will show up in the SFG as a loop.

The SFG and the schematic depict the same circuit, but the schematic also suggests the circuit's purpose. Compared to the schematic, the SFG is awkward but it does have the advantage that the input to output gain can be written down by inspection using Mechatronics : Position servo with multi-loop feedback

This example is representative of a SFG (signal-flow graph) used to represent a servo control system and illustrates several features of SFGs. Some of the loops (loop 3, loop 4 and loop 5) are extrinsic intentionally designed feedback loops. These are shown with dotted lines. There are also intrinsic loops (loop 0, loop1, loop2) that are not intentional feedback loops, although they can be analyzed as though they were. These loops are shown with solid lines. Loop 3 and loop 4 are also known as minor loops because they are inside a larger loop.

*The forward path begins with θC, the desired position command. This is multiplied by KP which could be a constant or a function of frequency. KP incorporates the conversion gain of the DAC and any filtering on the DAC output. The output of KP is the velocity command VωC which is multiplied by KV which can be a constant or a function of frequency. The output of KV is the current command, VIC which is multiplied by KC which can be a constant or a function of frequency. The output of KC is the amplifier output voltage, VA. The current, IM, though the motor winding is the integral of the voltage applied to the inductance. The motor produces a torque, T, proportional to IM. Permanent magnet motors tend to have a linear current to torque function. The conversion constant of current to torque is KM. The torque, T, divided by the load moment of inertia, M, is the acceleration, α, which is integrated to give the load velocity ω which is integrated to produce the load position, θLC.

*The forward path of loop 0 asserts that acceleration is proportional to torque and the velocity is the time integral of acceleration. The backward path says that as the speed increases there is a friction or drag that counteracts the torque. Torque on the load decreases proportionately to the load velocity until the point is reached that all the torque is used to overcome friction and the acceleration drops to zero. Loop 0 is intrinsic.

*Loop1 represents the interaction of an inductor's current with its internal and external series resistance. The current through an inductance is the time integral of the voltage across the inductance. When a voltage is first applied, all of it appears across the inductor. This is shown by the forward path through . As the current increases, voltage is dropped across the inductor internal resistance RM and the external resistance RS. This reduces the voltage across the inductor and is represented by the feedback path -(RM + RS). The current continues to increase but at a steadily decreasing rate until the current reaches the point at which all the voltage is dropped across (RM + RS). Loop 1 is intrinsic.

*Loop2 expresses the effect of the motor back EMF. Whenever a permanent magnet motor rotates, it acts like a generator and produces a voltage in its windings. It does not matter whether the rotation is caused by a torque applied to the drive shaft or by current applied to the windings. This voltage is referred to as back EMF. The conversion gain of rotational velocity to back EMF is GM. The polarity of the back EMF is such that it diminishes the voltage across the winding inductance. Loop 2 is intrinsic.

*Loop 3 is extrinsic. The current in the motor winding passes through a sense resister. The voltage, VIM, developed across the sense resister is fed back to the negative terminal of the power amplifier KC. This feedback causes the voltage amplifier to act like a voltage controlled current source. Since the motor torque is proportional to motor current, the sub-system VIC to the output torque acts like a voltage controlled torque source. This sub-system may be referred to as the "current loop" or "torque loop". Loop 3 effectively diminishes the effects of loop 1 and loop 2.

*Loop 4 is extrinsic. A tachometer (actually a low power dc generator) produces an output voltage VωM that is proportional to is angular velocity. This voltage is fed to the negative input of KV. This feedback causes the sub-system from VωC to the load angular velocity to act like a voltage to velocity source. This sub-system may be referred to as the "velocity loop". Loop 4 effectively diminishes the effects of loop 0 and loop 3.

*Loop 5 is extrinsic. This is the overall position feedback loop. The feedback comes from an angle encoder that produces a digital output. The output position is subtracted from the desired position by digital hardware which drives a DAC which drives KP. In the SFG, the conversion gain of the DAC is incorporated into KP.

See

This example is representative of a SFG (signal-flow graph) used to represent a servo control system and illustrates several features of SFGs. Some of the loops (loop 3, loop 4 and loop 5) are extrinsic intentionally designed feedback loops. These are shown with dotted lines. There are also intrinsic loops (loop 0, loop1, loop2) that are not intentional feedback loops, although they can be analyzed as though they were. These loops are shown with solid lines. Loop 3 and loop 4 are also known as minor loops because they are inside a larger loop.

*The forward path begins with θC, the desired position command. This is multiplied by KP which could be a constant or a function of frequency. KP incorporates the conversion gain of the DAC and any filtering on the DAC output. The output of KP is the velocity command VωC which is multiplied by KV which can be a constant or a function of frequency. The output of KV is the current command, VIC which is multiplied by KC which can be a constant or a function of frequency. The output of KC is the amplifier output voltage, VA. The current, IM, though the motor winding is the integral of the voltage applied to the inductance. The motor produces a torque, T, proportional to IM. Permanent magnet motors tend to have a linear current to torque function. The conversion constant of current to torque is KM. The torque, T, divided by the load moment of inertia, M, is the acceleration, α, which is integrated to give the load velocity ω which is integrated to produce the load position, θLC.

*The forward path of loop 0 asserts that acceleration is proportional to torque and the velocity is the time integral of acceleration. The backward path says that as the speed increases there is a friction or drag that counteracts the torque. Torque on the load decreases proportionately to the load velocity until the point is reached that all the torque is used to overcome friction and the acceleration drops to zero. Loop 0 is intrinsic.

*Loop1 represents the interaction of an inductor's current with its internal and external series resistance. The current through an inductance is the time integral of the voltage across the inductance. When a voltage is first applied, all of it appears across the inductor. This is shown by the forward path through . As the current increases, voltage is dropped across the inductor internal resistance RM and the external resistance RS. This reduces the voltage across the inductor and is represented by the feedback path -(RM + RS). The current continues to increase but at a steadily decreasing rate until the current reaches the point at which all the voltage is dropped across (RM + RS). Loop 1 is intrinsic.

*Loop2 expresses the effect of the motor back EMF. Whenever a permanent magnet motor rotates, it acts like a generator and produces a voltage in its windings. It does not matter whether the rotation is caused by a torque applied to the drive shaft or by current applied to the windings. This voltage is referred to as back EMF. The conversion gain of rotational velocity to back EMF is GM. The polarity of the back EMF is such that it diminishes the voltage across the winding inductance. Loop 2 is intrinsic.

*Loop 3 is extrinsic. The current in the motor winding passes through a sense resister. The voltage, VIM, developed across the sense resister is fed back to the negative terminal of the power amplifier KC. This feedback causes the voltage amplifier to act like a voltage controlled current source. Since the motor torque is proportional to motor current, the sub-system VIC to the output torque acts like a voltage controlled torque source. This sub-system may be referred to as the "current loop" or "torque loop". Loop 3 effectively diminishes the effects of loop 1 and loop 2.

*Loop 4 is extrinsic. A tachometer (actually a low power dc generator) produces an output voltage VωM that is proportional to is angular velocity. This voltage is fed to the negative input of KV. This feedback causes the sub-system from VωC to the load angular velocity to act like a voltage to velocity source. This sub-system may be referred to as the "velocity loop". Loop 4 effectively diminishes the effects of loop 0 and loop 3.

*Loop 5 is extrinsic. This is the overall position feedback loop. The feedback comes from an angle encoder that produces a digital output. The output position is subtracted from the desired position by digital hardware which drives a DAC which drives KP. In the SFG, the conversion gain of the DAC is incorporated into KP.

See Terminology and classification of signal-flow graphs

There is some confusion in literature about what a signal-flow graph is;Standards covering signal-flow graphs

* IEEE Std 155-1960, IEEE Standards on Circuits: Definitions of Terms for Linear Signal Flow Graphs, 1960. : This IEEE standard defines a signal-flow graph as a ''network'' of ''directed branches'' representing dependent and independent ''signals'' as ''nodes''. Incoming branches carry ''branch signals'' to the dependent node signals. A ''dependent node'' signal is the algebraic sum of the incoming branch signals at that node, i.e. nodes are summative.State transition signal-flow graph

Closed flowgraph

Nonlinear flow graphs

Mason introduced both nonlinear and linear flow graphs. To clarify this point, Mason wrote : "A linear flow graph is one whose associated equations are linear."Examples of nonlinear branch functions

It we denote by xj the signal at node j, the following are examples of node functions that do not pertain to a linear time-invariant system: :Examples of nonlinear signal-flow graph models

* Although they generally can't be transformed between time domain and frequency domain representations for classical control theory analysis, nonlinear signal-flow graphs can be found in electrical engineering literature. * Nonlinear signal-flow graphs can also be found in life sciences, for example, DrApplications of SFG techniques in various fields of science

*See also

*Notes

References

* Book almost entirely devoted to this topic. * * * * * * © Copyright by Khoman Phang 2001Further reading

* Chapter 3 for the essentials, but applications are scattered throughout the book. * * * Compares Mason and Coates graph approaches with Maxwell's k-tree approach. * A comparison of the utility of the Coates flow graph and the Mason flow graph.External links

M. L. Edwards: ''S-parameters, signal flow graphs, and other matrix representations''

All Rights Reserved

H Schmid: ''Signal-Flow Graphs in 12 Short Lessons''

* * {{commons category-inline, Signal flow graphs Classical control theory Signal processing Application-specific graphs Linear algebra