Energy-based model

An energy-based model (EBM) (also called Canonical Ensemble Learning, CEL, or Learning via Canonical Ensemble, LCE) is an application of the canonical ensemble formulation from statistical physics for learning from data. The approach prominently appears in generative artificial intelligence.

EBMs provide a unified framework for many probabilistic and non-probabilistic approaches to such learning, particularly for training graphical and other structured models.

An EBM learns the characteristics of a target dataset and generates a similar but larger dataset. EBMs detect the latent variables of a dataset and generate new datasets with a similar distribution.

Energy-based generative neural networks are a class of generative models that aim to learn explicit probability distributions of data in the form of energy-based models whose energy functions are parameterized by modern deep neural networks.

Boltzmann machines are a special form of energy-based models with a specific parametrization of the energy.

Description

For a given input $x$, the model describes an energy $E_\theta(x)$ such that the Boltzmann distribution

$$P_\theta(x) = \frac{\exp(-\beta E_\theta(x))}{Z(\theta)}$$

is a probability (density), and typically $\beta = 1$.

Since the normalization constant

$$Z(\theta) := \int_{x \in X} \exp(-\beta E_\theta(x)) \, dx$$

(also known as the partition function) depends on the Boltzmann factors of all possible inputs $x$, it cannot be easily computed or reliably estimated during training simply using standard maximum likelihood estimation.
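As a minimal sketch of the definitions above, the following Python snippet normalizes a handful of energies into a Boltzmann distribution. It assumes a toy state space of four states with made-up energies; the function name `boltzmann_distribution` and the energy values are illustrative and not part of any library. The partition function is computed by brute-force enumeration, which is only feasible because the state space is tiny.

```python
import math


def boltzmann_distribution(energies, beta=1.0):
    """Return (probabilities, Z) for a finite list of state energies."""
    # Boltzmann factor exp(-beta * E(x)) for every possible state x.
    factors = [math.exp(-beta * e) for e in energies]
    # Partition function: a sum over *all* states (an integral in the
    # continuous case), which is what makes it costly for real models.
    z = sum(factors)
    return [f / z for f in factors], z


# Hypothetical energies for a four-state toy system.
energies = [0.0, 1.0, 2.0, 5.0]
probs, z = boltzmann_distribution(energies)
for e, p in zip(energies, probs):
    print(f"E={e:.1f}  P={p:.4f}")
```

Lower-energy states receive higher probability. For a high-dimensional input such as an image, the same sum would range over every possible input, which is why the partition function is avoided during training.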

However, for maximizing the likelihood during training, the gradient of the log-likelihood of a single training example $x$ is given by using the chain rule (with $\beta = 1$):

$$\partial_\theta \log P_\theta(x) = \mathbb{E}_{x' \sim P_\theta}\left[\partial_\theta E_\theta(x')\right] - \partial_\theta E_\theta(x)$$

The expectation in the above formula for the gradient can be approximated by drawing samples $x'$ from the distribution $P_\theta$ using Markov chain Monte Carlo (MCMC).
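The gradient of the log-likelihood can be derived in a few lines. The sketch below assumes $\beta = 1$ and that differentiation may be exchanged with the integral defining the partition function $Z(\theta)$; the notation $E_\theta$, $P_\theta$ and $Z(\theta)$ follows the definitions of the energy, the Boltzmann distribution and the normalization constant.

$$
\begin{aligned}
\log P_\theta(x) &= -E_\theta(x) - \log Z(\theta), \\
\partial_\theta \log Z(\theta) &= \frac{1}{Z(\theta)} \int \partial_\theta \exp(-E_\theta(x')) \, dx' = -\int P_\theta(x') \, \partial_\theta E_\theta(x') \, dx' = -\mathbb{E}_{x' \sim P_\theta}\left[\partial_\theta E_\theta(x')\right], \\
\partial_\theta \log P_\theta(x) &= \mathbb{E}_{x' \sim P_\theta}\left[\partial_\theta E_\theta(x')\right] - \partial_\theta E_\theta(x).
\end{aligned}
$$

The partition function itself never has to be evaluated: only an expectation under the model remains, and that can be estimated from samples.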

Early energy-based models, such as the 2003 Boltzmann machine by Hinton, estimated this expectation via blocked Gibbs sampling. Newer approaches make use of more efficient Stochastic Gradient Langevin Dynamics (LD), drawing samples using:

$$x_0' \sim P_0, \qquad x_{i+1}' = x_i' - \frac{\alpha}{2} \frac{\partial E_\theta(x_i')}{\partial x_i'} + \epsilon,$$

where $\epsilon \sim \mathcal{N}(0, \alpha)$. A replay buffer of past values $x_i'$ is used with LD to initialize the optimization module.
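The Langevin update can be illustrated with a short, hedged Python sketch. It assumes a hypothetical one-dimensional energy $E(x) = x^2/2$ (so the target is a standard normal), a uniform initial distribution standing in for $P_0$, and a step size `alpha` chosen for illustration. No replay buffer is used here; every chain starts fresh.

```python
import math
import random


def grad_energy(x):
    # Hypothetical energy E(x) = x**2 / 2, whose Boltzmann distribution
    # (beta = 1) is a standard normal; its gradient is dE/dx = x.
    return x


def langevin_sample(rng, n_steps=200, alpha=0.1):
    """Run one Langevin chain and return its final state."""
    x = rng.uniform(-1.0, 1.0)  # x_0 drawn from a simple initial distribution
    for _ in range(n_steps):
        # epsilon ~ N(0, alpha): alpha is the variance, so std = sqrt(alpha).
        noise = rng.gauss(0.0, math.sqrt(alpha))
        x = x - 0.5 * alpha * grad_energy(x) + noise
    return x


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [langevin_sample(rng) for _ in range(2000)]
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"mean={mean:.3f} variance={variance:.3f}")
```

The sample mean and variance should land near 0 and 1. Because the update is applied without a correction step, the finite step size introduces a small bias that shrinks as `alpha` decreases.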

The parameters $\theta$ of the neural network are therefore trained in a generative manner via MCMC-based maximum likelihood estimation: the learning process follows an "analysis by synthesis" scheme, where within each learning iteration, the algorithm samples the synthesized examples from the current model by a gradient-based MCMC method (e.g., Langevin dynamics or Hybrid Monte Carlo), and then updates the parameters $\theta$ based on the difference between the training examples and the synthesized ones, as given by the gradient of the log-likelihood above. This process can be interpreted as an alternating mode seeking and mode shifting process, and also has an adversarial interpretation.

Essentially, the model learns a function that associates low energies to correct values, and higher energies to incorrect values.
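The "analysis by synthesis" loop can be sketched on a deliberately tiny problem. The example assumes a hypothetical one-parameter energy $E_\theta(x) = (x - \theta)^2/2$, synthetic training data drawn around 3.0, and persistent Langevin chains standing in for a replay buffer. All names and hyperparameters are illustrative, not taken from any published implementation.

```python
import math
import random


def d_energy_dx(x, theta):
    # Hypothetical energy E_theta(x) = (x - theta)**2 / 2.
    return x - theta


def d_energy_dtheta(x, theta):
    return theta - x


def langevin(x, theta, rng, n_steps=20, alpha=0.1):
    """Advance one chain by a few Langevin steps under the current model."""
    for _ in range(n_steps):
        noise = rng.gauss(0.0, math.sqrt(alpha))
        x = x - 0.5 * alpha * d_energy_dx(x, theta) + noise
    return x


def train(data, n_iters=300, lr=0.1, n_chains=64, seed=0):
    rng = random.Random(seed)
    theta = 0.0
    # Persistent chains: each iteration resumes from the previous samples.
    chains = [rng.uniform(-1.0, 1.0) for _ in range(n_chains)]
    for _ in range(n_iters):
        # Synthesis: sample from the current model.
        chains = [langevin(x, theta, rng) for x in chains]
        # Analysis: compare energy gradients on synthesized and real data.
        model_term = sum(d_energy_dtheta(x, theta) for x in chains) / n_chains
        data_term = sum(d_energy_dtheta(x, theta) for x in data) / len(data)
        # Gradient ascent on the log-likelihood estimate.
        theta += lr * (model_term - data_term)
    return theta


data_rng = random.Random(1)
data = [data_rng.gauss(3.0, 1.0) for _ in range(500)]  # synthetic training set
theta = train(data)
data_mean = sum(data) / len(data)
print(f"learned theta={theta:.3f}, data mean={data_mean:.3f}")
```

For this energy the update reduces to moving $\theta$ by the difference between the mean of the training examples and the mean of the synthesized ones, so learning stops once the two agree. A neural-network energy would follow the same loop with automatic differentiation supplying both gradients.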

After training, given a converged energy model $E_\theta$, the Metropolis–Hastings algorithm can be used to draw new samples. The acceptance probability is given by:

$$P_{acc}(x_i \to x^*) = \min\left(1, \frac{P_\theta(x^*)}{P_\theta(x_i)}\right).$$
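A minimal Metropolis–Hastings sketch follows, assuming a symmetric Gaussian random-walk proposal. In that case the acceptance probability is $\min(1, P_\theta(x^*)/P_\theta(x_i))$, and the ratio equals $\exp(E_\theta(x_i) - E_\theta(x^*))$ because the partition function cancels. The energy used here, $E(x) = x^2/2$, is a hypothetical stand-in for a trained model.

```python
import math
import random


def energy(x):
    # Hypothetical converged energy; its Boltzmann distribution is N(0, 1).
    return 0.5 * x * x


def metropolis_hastings(n_samples, step=1.0, seed=0):
    rng = random.Random(seed)
    x = 0.0
    samples = []
    for _ in range(n_samples):
        # Symmetric proposal: a Gaussian random walk around the current state.
        proposal = x + rng.gauss(0.0, step)
        # min(1, P(x*) / P(x_i)) written as exp(min(0, E(x_i) - E(x*)));
        # the partition function cancels in the ratio.
        accept_prob = math.exp(min(0.0, energy(x) - energy(proposal)))
        if rng.random() < accept_prob:
            x = proposal
        samples.append(x)  # a rejected proposal repeats the current state
    return samples


samples = metropolis_hastings(20000)
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"mean={mean:.3f} variance={variance:.3f}")
```

Only energy differences are needed, so sampling remains possible even though the normalization constant is unknown.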

History

The term "energy-based models" was first coined in a 2003 JMLR paper where the authors defined a generalisation of independent components analysis to the overcomplete setting using EBMs. Other early work on EBMs proposed models that represented energy as a composition of latent and observable variables.

Characteristics

EBMs demonstrate useful properties:

* Simplicity and stability: The EBM is the only object that needs to be designed and trained. Separate networks need not be trained to ensure balance.
* Adaptive computation time: An EBM can generate sharp, diverse samples or (more quickly) coarse, less diverse samples. Given infinite time, this procedure produces true samples.
* Flexibility: In variational autoencoders (VAEs) and flow-based models, the generator learns a map from a continuous space to a (possibly) discontinuous space containing different data modes. EBMs can learn to assign low energies to disjoint regions (multiple modes).
* Adaptive generation: EBM generators are implicitly defined by the probability distribution, and automatically adapt as the distribution changes (without training), allowing EBMs to address domains where generator training is impractical, as well as minimizing mode collapse and avoiding spurious modes from out-of-distribution samples.
* Compositionality: Individual models are unnormalized probability distributions, allowing models to be combined through product of experts or other hierarchical techniques.
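The compositionality property can be checked numerically. The sketch below assumes two hypothetical one-dimensional energies evaluated on a small grid. It shows that adding the energies gives the same distribution as multiplying the two experts' probabilities and renormalizing, since $\exp(-E_a)\exp(-E_b) = \exp(-(E_a + E_b))$.

```python
import math


def energy_a(x):
    return 0.5 * (x - 1.0) ** 2  # hypothetical expert preferring x near +1


def energy_b(x):
    return 0.5 * (x + 1.0) ** 2  # hypothetical expert preferring x near -1


def combined_energy(x):
    # Product of experts: multiplying densities means adding energies.
    return energy_a(x) + energy_b(x)


def normalize(energy_fn, grid):
    """Boltzmann probabilities (beta = 1) on a finite grid."""
    factors = [math.exp(-energy_fn(x)) for x in grid]
    z = sum(factors)
    return [f / z for f in factors]


grid = [i / 10 for i in range(-50, 51)]
p_a = normalize(energy_a, grid)
p_b = normalize(energy_b, grid)
p_sum_of_energies = normalize(combined_energy, grid)

# Multiply the two normalized experts pointwise, then renormalize.
product = [a * b for a, b in zip(p_a, p_b)]
total = sum(product)
p_product = [p / total for p in product]

max_gap = max(abs(u - v) for u, v in zip(p_sum_of_energies, p_product))
mode = grid[p_sum_of_energies.index(max(p_sum_of_energies))]
print(f"max gap={max_gap:.2e}, combined mode at x={mode}")
```

The individual normalization constants drop out of the product, which is why unnormalized models can be composed without computing any partition function first.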

Experimental results

On image datasets such as CIFAR-10 and ImageNet 32x32, an EBM model generated high-quality images relatively quickly. It supported combining features learned from one type of image for generating other types of images. It was able to generalize using out-of-distribution datasets, outperforming flow-based and autoregressive models. EBM was relatively resistant to adversarial perturbations, behaving better than models explicitly trained against them with training for classification.

Applications

Target applications include natural language processing, robotics and computer vision.

The first energy-based generative neural network is the generative ConvNet proposed in 2016 for image patterns, where the neural network is a convolutional neural network. The model has been generalized to various domains to learn distributions of videos and 3D voxels, and later variants have made it more effective. These models have proven useful for data generation (e.g., image synthesis, video synthesis, 3D shape synthesis), data recovery (e.g., recovering videos with missing pixels or image frames, 3D super-resolution), and data reconstruction (e.g., image reconstruction and linear interpolation).

Alternatives

EBMs compete with techniques such as variational autoencoders (VAEs), generative adversarial networks (GANs) or normalizing flows.

Extensions

Joint energy-based models

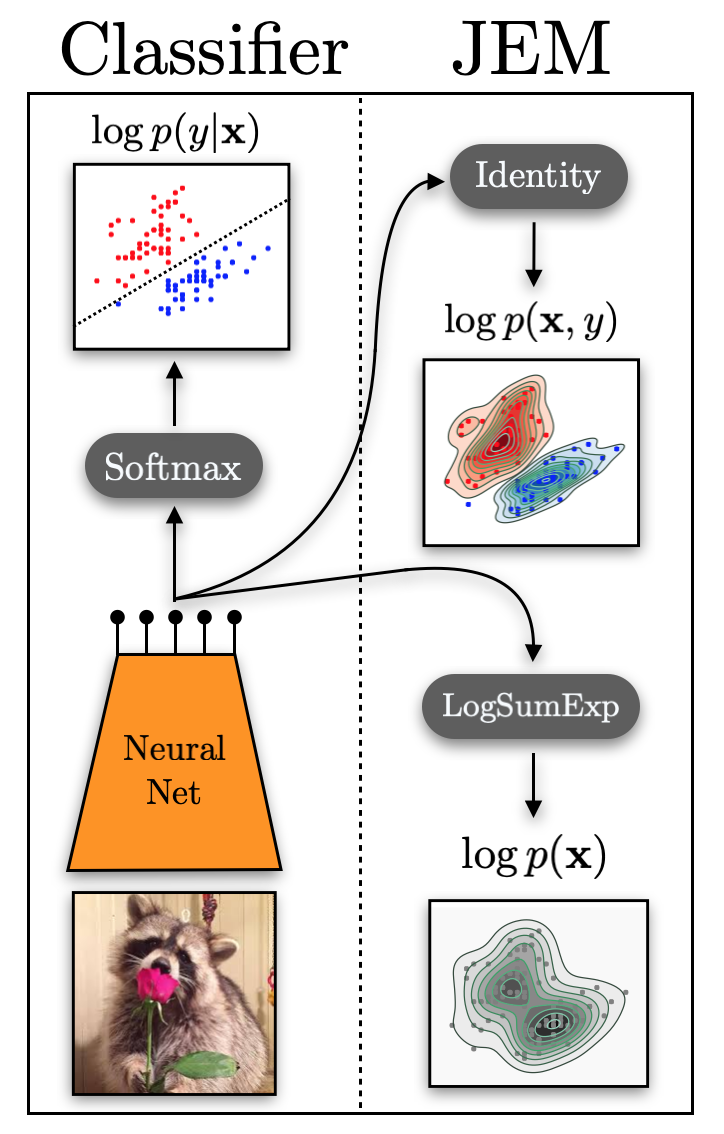

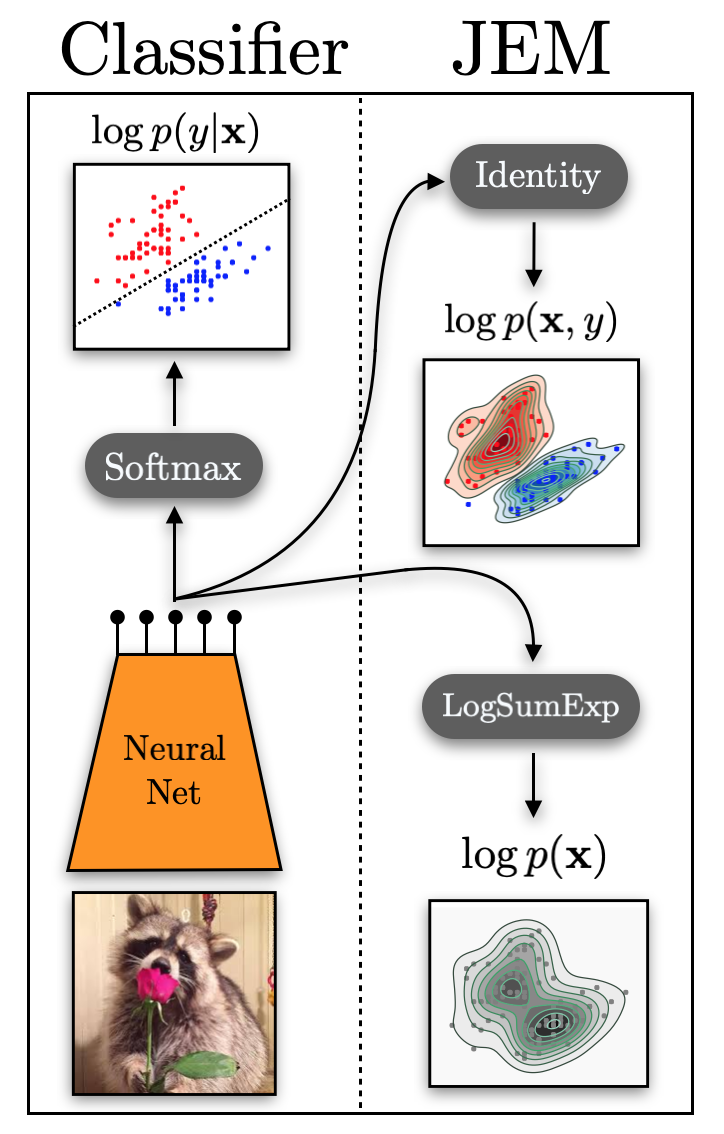

Joint energy-based models (JEM), proposed in 2020 by Grathwohl et al., allow any classifier with softmax output to be interpreted as an energy-based model. The key observation is that such a classifier is trained to predict the conditional probability

$$p_\theta(y \mid x) = \frac{\exp\left(\vec{f}_\theta(x)[y]\right)}{\sum_{j=1}^{K} \exp\left(\vec{f}_\theta(x)[j]\right)} \quad \text{for } y = 1, \dots, K \text{ and } \vec{f}_\theta = (f_1, \dots, f_K) \in \mathbb{R}^K,$$

where