
Universal Approximator

In the mathematical theory of artificial neural networks, universal approximation theorems are theorems of the following form: given a family of neural networks, for each function f from a certain function space, there exists a sequence of neural networks \phi_1, \phi_2, \dots from the family such that \phi_n \to f according to some criterion. That is, the family of neural networks is dense in the function space. The most popular version states that feedforward networks with non-polynomial activation functions are dense in the space of continuous functions between two Euclidean spaces, with respect to the compact convergence topology. Universal approximation theorems are existence theorems: they state only that there ''exists'' such a sequence \phi_1, \phi_2, \dots \to f, and do not provide any way to actually find it. Nor do they guarantee that any method, such as backpropagation, will actually find such a sequence. Any method for searching the space of neural ...
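The density statement can be made concrete in one dimension. The sketch below is only an illustration under stated assumptions, not the theorem's proof: it builds, by hand and without training, a one-hidden-layer ReLU network that linearly interpolates a continuous function at n + 1 equally spaced knots, and measures the sup-norm error on a grid. The helper names `build_relu_interpolant` and `sup_error` are hypothetical.

```python
import math

def relu(z):
    return max(0.0, z)

def build_relu_interpolant(f, a, b, n):
    """Return a one-hidden-layer ReLU network (as a closure) that
    linearly interpolates f at n + 1 equally spaced knots on [a, b]."""
    h = (b - a) / n
    knots = [a + i * h for i in range(n + 1)]
    values = [f(t) for t in knots]
    slopes = [(values[i + 1] - values[i]) / h for i in range(n)]
    # Output weights: the first slope, then the change in slope at each interior knot.
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n)]
    shifts = knots[:n]  # hidden unit i computes relu(x - knots[i])

    def network(x):
        return values[0] + sum(w * relu(x - t) for w, t in zip(weights, shifts))

    return network

def sup_error(f, g, a, b, samples=2001):
    """Largest |f - g| over an evenly spaced grid on [a, b]."""
    grid = (a + (b - a) * k / (samples - 1) for k in range(samples))
    return max(abs(f(x) - g(x)) for x in grid)

# Approximate sin on [0, pi] with networks of increasing width.
errors = [
    sup_error(math.sin, build_relu_interpolant(math.sin, 0.0, math.pi, n), 0.0, math.pi)
    for n in (4, 16, 64)
]
```

The entries of `errors` shrink as the width grows, which is the kind of convergence \phi_n \to f the theorems assert. Here the sequence is written down explicitly for a known f; the theorems themselves do not say how to find it in general.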

Mathematics

Mathematics is a field of study that discovers and organizes methods, theories and theorems that are developed and proved for the needs of empirical sciences and mathematics itself. There are many areas of mathematics, which include number theory (the study of numbers), algebra (the study of formulas and related structures), geometry (the study of shapes and spaces that contain them), analysis (the study of continuous changes), and set theory (presently used as a foundation for all mathematics). Mathematics involves the description and manipulation of abstract objects that consist of either abstractions from nature or, in modern mathematics, purely abstract entities that are stipulated to have certain properties, called axioms. Mathematics uses pure reason to prove properties of objects, a ''proof'' consisting of a succession of applications of in ...

Feed-forward Network

Feedforward refers to the recognition-inference architecture of neural networks. Artificial neural network architectures are based on inputs multiplied by weights to obtain outputs (inputs-to-output): feedforward. Recurrent neural networks, or neural networks with loops, allow information from later processing stages to feed back to earlier stages for sequence processing. However, at every stage of inference a feedforward multiplication remains the core, essential for backpropagation (Rumelhart, David E., Geoffrey E. Hinton, and R. J. Williams. "Learning Internal Representations by Error Propagation." In David E. Rumelhart, James L. McClelland, and the PDP Research Group (eds.), Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volume 1: Foundations. MIT Press, 1986) or backpropagation through time. Thus neural networks cannot contain feedback like negative feedback or positive feedback where the outputs feed back to the ''very same'' inputs and modify them, ...

Radial Basis Functions

In mathematics, a radial basis function (RBF) is a real-valued function \varphi whose value depends only on the distance between the input and some fixed point, either the origin, so that \varphi(\mathbf{x}) = \hat\varphi(\left\|\mathbf{x}\right\|), or some other fixed point \mathbf{c}, called a ''center'', so that \varphi(\mathbf{x}) = \hat\varphi(\left\|\mathbf{x}-\mathbf{c}\right\|). Any function \varphi that satisfies the property \varphi(\mathbf{x}) = \hat\varphi(\left\|\mathbf{x}\right\|) is a radial function. The distance is usually Euclidean distance, although other metrics are sometimes used. They are often used as a collection \{\varphi_k\}_k which forms a basis for some function space of interest, hence the name. Sums of radial basis functions are typically used to approximate given functions. This approximation process can also be interpreted as a simple kind of neural network; this was the context in which they were originally applied to machine learning, in work by David Broomhead and David Lowe i ...
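As a minimal sketch of the definition, the code below uses a Gaussian as the radial profile \hat\varphi; that choice, the shape parameter `epsilon`, and the function names are assumptions made for illustration. The value depends on the input only through its Euclidean distance to the center, and a weighted sum of such functions gives the kind of approximant described above.

```python
import math

def gaussian_rbf(x, center, epsilon=1.0):
    """Gaussian radial basis function: depends on x only through ||x - center||."""
    r = math.dist(x, center)  # Euclidean distance
    return math.exp(-((epsilon * r) ** 2))

def rbf_sum(x, centers, weights, epsilon=1.0):
    """Weighted sum of Gaussian RBFs, one per center."""
    return sum(w * gaussian_rbf(x, c, epsilon) for w, c in zip(weights, centers))

# Two different points at the same distance from the origin give the same value.
value_a = gaussian_rbf((3.0, 4.0), (0.0, 0.0), epsilon=0.1)
value_b = gaussian_rbf((5.0, 0.0), (0.0, 0.0), epsilon=0.1)
```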

Applied And Computational Harmonic Analysis

''Applied and Computational Harmonic Analysis'' is a bimonthly peer-reviewed scientific journal published by Elsevier. The journal covers studies on the applied and computational aspects of harmonic analysis. Its editors-in-chief are Ronald Coifman (Yale University) and David Donoho (Stanford University). Abstracting and indexing: the journal is abstracted and indexed in CompuMath Citation Index; Current Contents/Engineering, Computing & Technology; Current Contents/Physical, Chemical & Earth Sciences; Inspec; Scopus; Science Citation Index Expanded; and Zentralblatt MATH. According to the ''Journal Citation Reports'', the journal has a 2022 impact factor of 2.5.

Convolutional Neural Network

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep learning network has been applied to process and make predictions from many different types of data including text, images and audio. Convolution-based networks are the de facto standard in deep learning-based approaches to computer vision and image processing, and have only recently been replaced, in some cases, by newer deep learning architectures such as the transformer. Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from using shared weights over fewer connections. For example, for ''each'' neuron in the fully-connected layer, 10,000 weights would be required for processing an image sized 100 × 100 pixels. However, applying cascaded ''convolution'' (or cross-correlation) kernels, only 25 weights for each convolutio ...
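The weight counts quoted above follow from simple arithmetic. The sketch below assumes a single-channel image and a 5 × 5 kernel; the kernel size is an assumption, chosen because it is consistent with the figure of 25 weights, and the function names are hypothetical.

```python
def dense_weights_per_neuron(height, width, channels=1):
    """A fully connected neuron has one weight per input pixel value."""
    return height * width * channels

def conv_weights_per_kernel(kernel_height, kernel_width, channels=1):
    """A convolution kernel shares one small set of weights across all positions."""
    return kernel_height * kernel_width * channels

dense_count = dense_weights_per_neuron(100, 100)  # 100 x 100 image
conv_count = conv_weights_per_kernel(5, 5)        # assumed 5 x 5 kernel
```

Bias terms are left out of both counts.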

Non-Euclidean Space

In mathematics, non-Euclidean geometry consists of two geometries based on axioms closely related to those that specify Euclidean geometry. As Euclidean geometry lies at the intersection of metric geometry and affine geometry, non-Euclidean geometry arises by either replacing the parallel postulate with an alternative, or relaxing the metric requirement. In the former case, one obtains hyperbolic geometry and elliptic geometry, the traditional non-Euclidean geometries. When the metric requirement is relaxed, then there are affine planes associated with the planar algebras, which give rise to kinematic geometries that have also been called non-Euclidean geometry. Principles: the essential difference between the metric geometries is the nature of parallel lines. Euclid's fifth postulate, the parallel postulate, is equivalent to Playfair's postulate, which states that, within a two-dimensional plane, for any given line ''l'' and a point ''A'', which is not on ''l'', there is exactly one lin ...

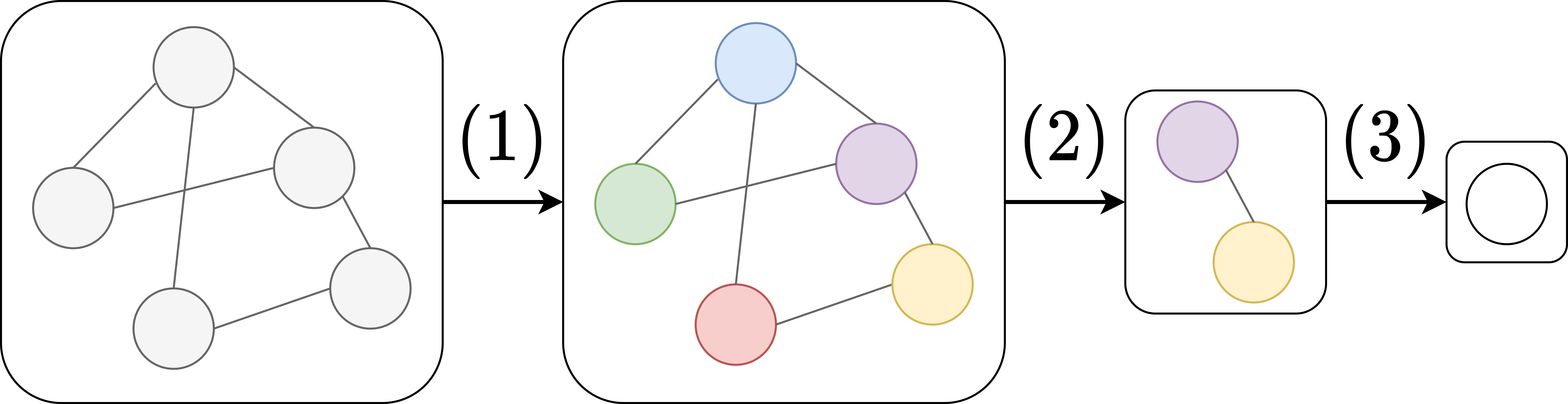

Graph Neural Network

Graph neural networks (GNNs) are specialized artificial neural networks that are designed for tasks whose inputs are graphs. One prominent example is molecular drug design. Each input sample is a graph representation of a molecule, where atoms form the nodes and chemical bonds between atoms form the edges. In addition to the graph representation, the input also includes known chemical properties for each of the atoms. Dataset samples may thus differ in length, reflecting the varying numbers of atoms in molecules, and the varying number of bonds between them. The task is to predict the efficacy of a given molecule for a specific medical application, like eliminating ''E. coli'' bacteria. The key design element of GNNs is the use of ''pairwise message passing'', such that graph nodes iteratively update their representations by exchanging information with their neighbors. Several GNN architectures have been proposed, which implement different flavors of message passing, started ...
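One round of pairwise message passing can be sketched as follows. This is a deliberately simplified illustration, not any particular published GNN layer: node representations are single numbers, the message is the neighbor's current value, and the update rule (own value plus the sum of incoming messages) is an assumption chosen for readability. Real architectures use learned, vector-valued message and update functions.

```python
def message_passing_step(features, edges):
    """One round of message passing on an undirected graph.

    features: dict mapping node -> number
    edges: iterable of (u, v) pairs
    Each node's new value is its own value plus the sum of its neighbors' values.
    """
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    return {
        node: features[node] + sum(features[m] for m in neighbors[node])
        for node in features
    }

# Path graph a - b - c with initial node values 1, 2, 3.
initial = {"a": 1, "b": 2, "c": 3}
after_one_round = message_passing_step(initial, [("a", "b"), ("b", "c")])
```

Repeating the step lets information travel further: after k rounds a node's value depends on nodes up to k edges away.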

Graph Isomorphism

In graph theory, an isomorphism of graphs ''G'' and ''H'' is a bijection between the vertex sets of ''G'' and ''H'' : f \colon V(G) \to V(H) such that any two vertices ''u'' and ''v'' of ''G'' are adjacent in ''G'' if and only if f(u) and f(v) are adjacent in ''H''. This kind of bijection is commonly described as "edge-preserving bijection", in accordance with the general notion of isomorphism being a structure-preserving bijection. If an isomorphism exists between two graphs, then the graphs are called isomorphic, often denoted by G\simeq H. In the case when the isomorphism is a mapping of a graph onto itself, i.e., when ''G'' and ''H'' are one and the same graph, the isomorphism is called an automorphism of ''G''. Graph isomorphism is an equivalence relation on graphs and as such it partitions the class of all graphs into equivalence classes. A set of graphs isomorphic to each other is called an isomorphism class of graphs. The question of whether graph isomorphism can be dete ...
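The definition translates directly into a brute-force search: try every bijection between the vertex sets and keep one that maps the edge set of ''G'' exactly onto the edge set of ''H''. The sketch below assumes simple undirected graphs without self-loops; the name `find_isomorphism` is hypothetical, and the factorial running time makes it suitable only for very small graphs.

```python
from itertools import permutations

def find_isomorphism(vertices_g, edges_g, vertices_h, edges_h):
    """Return a dict f: V(G) -> V(H) that is an isomorphism, or None if none exists."""
    set_g = {frozenset(e) for e in edges_g}
    set_h = {frozenset(e) for e in edges_h}
    if len(vertices_g) != len(vertices_h) or len(set_g) != len(set_h):
        return None
    for image in permutations(vertices_h):
        f = dict(zip(vertices_g, image))
        # f is a bijection, so equal edge sets mean adjacency is preserved both ways.
        if {frozenset((f[u], f[v])) for u, v in set_g} == set_h:
            return f
    return None

# A path on 4 vertices, a relabeled path, and a star with the same number of edges.
path = ([0, 1, 2, 3], [(0, 1), (1, 2), (2, 3)])
relabeled = (["a", "b", "c", "d"], [("c", "a"), ("a", "d"), ("d", "b")])
star = ([0, 1, 2, 3], [(0, 1), (0, 2), (0, 3)])

path_to_relabeled = find_isomorphism(*path, *relabeled)
path_to_star = find_isomorphism(*path, *star)
```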

Step Function

In mathematics, a function on the real numbers is called a step function if it can be written as a finite linear combination of indicator functions of intervals. Informally speaking, a step function is a piecewise constant function having only finitely many pieces. Definition and first consequences: a function f\colon \mathbb{R} \rightarrow \mathbb{R} is called a step function if it can be written as f(x) = \sum\limits_{i=0}^n \alpha_i \chi_{A_i}(x) for all real numbers x, where n\ge 0, \alpha_i are real numbers, A_i are intervals, and \chi_A is the indicator function of A: \chi_A(x) = \begin{cases} 1 & \text{if } x \in A \\ 0 & \text{if } x \notin A \end{cases}. In this definition, the intervals A_i can be assumed to have the following two properties: (1) the intervals are pairwise disjoint: A_i \cap A_j = \emptyset for i \neq j; (2) the union of the intervals is the entire real line: \bigcup_{i=0}^n A_i = \mathbb R. Indeed, if that is not the case to start with, a different set of intervals can be picked for ...
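A finite linear combination of indicator functions of intervals can be evaluated directly. In this sketch each piece is a triple (alpha, lo, hi) standing for the coefficient and a half-open interval [lo, hi); restricting to half-open intervals and the name `make_step_function` are simplifying assumptions, since the definition allows any kind of interval.

```python
def make_step_function(pieces):
    """Build f(x) = sum of alpha_i * indicator of [lo_i, hi_i) from (alpha, lo, hi) triples."""
    def f(x):
        return sum(alpha for alpha, lo, hi in pieces if lo <= x < hi)
    return f

inf = float("inf")

# Heaviside-style step: 0 on (-inf, 0), 1 on [0, inf).
# The two intervals are disjoint and together cover the real line.
heaviside = make_step_function([(0.0, -inf, 0.0), (1.0, 0.0, inf)])

# Overlapping intervals are allowed too; coefficients add where they overlap.
overlapping = make_step_function([(2.0, 0.0, 2.0), (3.0, 1.0, 3.0)])
```

The second example takes the values 2, 5 and 3 on [0, 1), [1, 2) and [2, 3), so the same function can also be written with pairwise disjoint intervals.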

Robert Hecht-Nielsen

Robert Hecht-Nielsen (July 18, 1947 – May 25, 2019) was an American computer scientist, neuroscientist, entrepreneur and professor of electrical and computer engineering at the University of California, San Diego. He co-founded HNC Software Inc. (NASDAQ: HNCS) in 1986, which went on to develop the pervasive card fraud detection system, Falcon®. He became a vice president of R&D at Fair Isaac Corporation when it acquired the company in 2002. Education and career: Hecht-Nielsen was born in San Francisco, California to a Danish immigrant father and an American mother. His family moved to Denver, Colorado when he was eight, and he lived there until his college years. Hecht-Nielsen studied at University of Colorado Denver before moving to Arizona State University, where he completed his bachelor's degree in mathematics with a minor in anthropology in 1971 and his doctoral degree in mathematics in 1974 with a thesis topic on functional analysis. He worked at Motorola from 1979 to 1983, and at TRW fro ...

Kolmogorov–Arnold Representation Theorem

In real analysis and approximation theory, the Kolmogorov–Arnold representation theorem (or superposition theorem) states that every multivariate continuous function f\colon [0,1]^n\to \R can be represented as a superposition of continuous single-variable functions. The works of Vladimir Arnold and Andrey Kolmogorov established that if ''f'' is a multivariate continuous function, then ''f'' can be written as a finite composition of continuous functions of a single variable and the binary operation of addition. More specifically, f(\mathbf{x}) = f(x_1,\ldots,x_n) = \sum_{q=0}^{2n} \Phi_q\left(\sum_{p=1}^{n} \phi_{q,p}(x_p)\right), where \phi_{q,p}\colon [0,1]\to \R and \Phi_q\colon \R \to \R. There are proofs with specific constructions. It solved a more constrained form of Hilbert's thirteenth problem, so the original Hilbert's thirteenth problem is a corollary. In a sense, they showed that the only true continuous multivariate function is the sum, since every other continuous function can be written using univariate continuous functions and summing. History: The Kolmogorov–Arnold repr ...

Control Theory

Control theory is a field of control engineering and applied mathematics that deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a desired state, while minimizing any ''delay'', ''overshoot'', or ''steady-state error'' and ensuring a level of control stability; often with the aim to achieve a degree of optimality. To do this, a controller with the requisite corrective behavior is required. This controller monitors the controlled process variable (PV), and compares it with the reference or set point (SP). The difference between actual and desired value of the process variable, called the ''error'' signal, or SP-PV error, is applied as feedback to generate a control action to bring the controlled process variable to the same value as the set point. Other aspects ...
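The feedback loop described above can be sketched with the simplest possible controller. Everything specific here is an assumption made for illustration: the controller is purely proportional (control action = gain × error), the process is a discrete-time integrator whose PV changes by the control action times the time step, and the function name is hypothetical.

```python
def simulate_proportional_control(set_point, initial_pv, gain, dt, steps):
    """Simulate a proportional controller driving an integrator process.

    Returns the list of process-variable values, starting with initial_pv.
    """
    pv = initial_pv
    history = [pv]
    for _ in range(steps):
        error = set_point - pv         # SP-PV error signal
        control_action = gain * error  # proportional feedback
        pv += control_action * dt      # assumed integrator process
        history.append(pv)
    return history

# With gain * dt = 0.5 the error halves on every step.
history = simulate_proportional_control(
    set_point=10.0, initial_pv=0.0, gain=0.5, dt=1.0, steps=20
)
```

In this toy model the error is multiplied by (1 - gain × dt) each step, so the PV approaches the set point without overshoot when that factor lies between 0 and 1; larger gains produce overshoot or instability.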