
Self-organizing Map

A self-organizing map (SOM) or self-organizing feature map (SOFM) is an unsupervised machine learning technique used to produce a low-dimensional (typically two-dimensional) representation of a higher-dimensional data set while preserving the topological structure of the data. For example, a data set with p variables measured in n observations could be represented as clusters of observations with similar values for the variables. These clusters then could be visualized as a two-dimensional "map" such that observations in proximal clusters have more similar values than observations in distal clusters. This can make high-dimensional data easier to visualize and analyze. An SOM is a type of artificial neural network but is trained using competitive learning rather than the error-correction learning (e.g., backpropagation with gradient descent) used by other artificial neural networks. The SOM was introduced by the Finnish professor Teuvo Kohonen in the 1980s and therefore is some ...
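As a rough illustration of competitive learning on a two-dimensional map, the sketch below trains a tiny SOM in plain Python. Everything in it is an assumption made for illustration and does not come from the excerpt: the function names (`train_som`, `best_matching_unit`), the rectangular grid, the Gaussian neighbourhood function, and the linear decay of learning rate and radius. It is a minimal sketch, not a reference implementation.

```python
import math
import random


def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def best_matching_unit(weights, x):
    """Grid coordinate of the node whose weight vector is closest to x."""
    return min(weights, key=lambda node: squared_distance(weights[node], x))


def train_som(data, rows, cols, n_steps=500, lr0=0.5, seed=0):
    """Train a rows x cols map on data (a list of equal-length vectors)."""
    rng = random.Random(seed)
    dim = len(data[0])
    radius0 = max(rows, cols) / 2
    # One weight vector per grid node, initialised at random in [0, 1).
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    for step in range(n_steps):
        decay = 1 - step / n_steps            # shrinks linearly from 1 towards 0
        lr = lr0 * decay                      # learning rate for this step
        radius = max(radius0 * decay, 0.5)    # neighbourhood radius, floored
        x = rng.choice(data)
        bmu = best_matching_unit(weights, x)  # competition: the closest node wins
        for (r, c), w in weights.items():
            grid_d2 = (r - bmu[0]) ** 2 + (c - bmu[1]) ** 2
            h = math.exp(-grid_d2 / (2 * radius ** 2))  # Gaussian neighbourhood
            for i in range(dim):
                # Pull the node towards the sample, scaled by lr and h.
                w[i] += lr * h * (x[i] - w[i])
    return weights


# Illustrative data: two small groups of points in the unit square.
data = [[0.0, 0.0], [0.1, 0.1], [0.9, 0.9], [1.0, 1.0]]
som = train_som(data, rows=3, cols=3)
```

After training, `best_matching_unit(som, point)` gives the grid cell a point maps to. Nodes close to the winner on the grid are pulled along with it, which is what lets nearby cells end up representing similar inputs.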

Unsupervised Learning

Unsupervised learning is a framework in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Other frameworks in the spectrum of supervision include weak- or semi-supervision, where a small portion of the data is tagged, and self-supervision. Some researchers consider self-supervised learning a form of unsupervised learning. Conceptually, unsupervised learning divides into the aspects of data, training, algorithm, and downstream applications. Typically, the dataset is harvested cheaply "in the wild", such as a massive text corpus obtained by web crawling, with only minor filtering (such as Common Crawl). This compares favorably to supervised learning, where the dataset (such as the ImageNet1000) is typically constructed manually, which is much more expensive. There were algorithms designed specifically for unsupervised learning, such as clustering algorithms like k-means, dimensionality reduction techniques l ...

Hexagonal

In geometry, a hexagon (from Greek ἕξ, hex, meaning "six", and γωνία, gonía, meaning "corner, angle") is a six-sided polygon. The total of the internal angles of any simple (non-self-intersecting) hexagon is 720°. Regular hexagon A regular hexagon is defined as a hexagon that is both equilateral and equiangular. In other words, a hexagon is said to be regular if the edges are all equal in length, and each of its internal angles is equal to 120°. The Schläfli symbol denotes this polygon as $\{6\}$. However, the regular hexagon can also be considered as an equilateral triangle with its vertices cut off, which can also be denoted as $\mathrm{t}\{3\}$. A regular hexagon is bicentric, meaning that it is both cyclic (has a circumscribed circle) and tangential (has an inscribed circle). The common length of the sides equals the radius of the circumscribed circle or circumcircle, which equals $\tfrac{2}{\sqrt{3}}$ times the apothem (radius of the inscribed circle). Measurement The longest diagonal ...

Jackknife Resampling

In statistics, the jackknife (jackknife cross-validation) is a cross-validation technique and, therefore, a form of resampling. It is especially useful for bias and variance estimation. The jackknife pre-dates other common resampling methods such as the bootstrap. Given a sample of size n, a jackknife estimator can be built by aggregating the parameter estimates from each subsample of size (n-1) obtained by omitting one observation. The jackknife is a linear approximation of the bootstrap. The jackknife technique was developed by Maurice Quenouille (1924–1973) from 1949 and refined in 1956. John Tukey expanded on the technique in 1958 and proposed the name "jackknife" because, like a physical jack-knife (a compact folding knife), it is a rough-and-ready tool that can improvise a solution for a variety of problems even though specific problems may be more efficiently solved with a purpose-designed tool. A simple example: mean estimation The jackknife estimator of a param ...
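The leave-one-out construction described above can be sketched in a few lines of Python. The function name `jackknife` and the sample values are hypothetical, chosen only for illustration. The bias and variance formulas are the standard jackknife ones, not something quoted from the excerpt.

```python
from statistics import mean


def jackknife(sample, estimator):
    """Return (bias-corrected estimate, bias estimate, variance estimate)."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    full = estimator(sample)
    # Leave-one-out replicates: the estimator on each subsample of size n - 1.
    replicates = [estimator(sample[:i] + sample[i + 1:]) for i in range(n)]
    rep_mean = mean(replicates)
    bias = (n - 1) * (rep_mean - full)
    variance = (n - 1) / n * sum((r - rep_mean) ** 2 for r in replicates)
    return full - bias, bias, variance


# Illustrative sample; the estimator here is the sample mean.
data = [2.0, 4.0, 6.0, 8.0]
estimate, bias, variance = jackknife(data, mean)
```

For the sample mean, the jackknife bias estimate is zero, and the variance estimate equals the usual s²/n, which makes the mean a convenient sanity check before trying other estimators.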

Bootstrap Sampling

Bootstrapping is a procedure for estimating the distribution of an estimator by resampling (often with replacement) one's data or a model estimated from the data. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice ...
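A minimal sketch of resampling with replacement, assuming the statistic of interest is the sample mean. The function name `bootstrap_replicates`, the resample count, and the fixed seed are illustrative choices, not part of the excerpt.

```python
import random
from statistics import mean, stdev


def bootstrap_replicates(sample, estimator, n_resamples=1000, seed=0):
    """Evaluate estimator on n_resamples resamples drawn with replacement."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(sample)
    return [estimator(rng.choices(sample, k=n)) for _ in range(n_resamples)]


# Illustrative sample.
data = [2.0, 4.0, 6.0, 8.0, 10.0]
replicates = bootstrap_replicates(data, mean)
# The spread of the replicates approximates the standard error of the mean.
standard_error = stdev(replicates)
```

The list of replicates stands in for the sampling distribution of the estimator, so other accuracy measures (for example a percentile interval) can be read off the same list.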

Neighbourhood (mathematics)

In topology and related areas of mathematics, a neighbourhood (or neighborhood) is one of the basic concepts in a topological space. It is closely related to the concepts of open set and interior. Intuitively speaking, a neighbourhood of a point is a set of points containing that point where one can move some amount in any direction away from that point without leaving the set. Definitions Neighbourhood of a point If $X$ is a topological space and $p$ is a point in $X$, then a neighbourhood of $p$ is a subset $V$ of $X$ that includes an open set $U$ containing $p$, $p \in U \subseteq V \subseteq X$. This is equivalent to the point $p \in X$ belonging to the topological interior of $V$ in $X$. The neighbourhood $V$ need not be an open subset of $X$. When $V$ is open (resp. closed, compact, etc.) in $X$, it is called an open neighbourhood (resp. closed neighbourhood, compact neighbourhood, etc.). Some authors require neighbourhoods to be open, so i ...

Monotonically Decreasing

In mathematics, a monotonic function (or monotone function) is a function between ordered sets that preserves or reverses the given order. This concept first arose in calculus, and was later generalized to the more abstract setting of order theory. In calculus and analysis In calculus, a function $f$ defined on a subset of the real numbers with real values is called *monotonic* if it is either entirely non-decreasing, or entirely non-increasing. That is, as per Fig. 1, a function that increases monotonically does not exclusively have to increase, it simply must not decrease. A function is termed *monotonically increasing* (also *increasing* or *non-decreasing*) if for all $x$ and $y$ such that $x \leq y$ one has $f(x) \leq f(y)$, so $f$ preserves the order (see Figure 1). Likewise, a function is called *monotonically decreasing* (also *decreasing* or *non-increasing*) if, whenever $x \leq y$, then $f(x) \geq f(y)$, so i ...
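The two definitions can be checked on a finite list of function values sampled at increasing arguments. This is only a sketch: the function names are hypothetical, and checking finitely many samples cannot prove monotonicity over a whole interval.

```python
def is_monotonically_increasing(values):
    """True if each value is <= the next (non-decreasing)."""
    return all(a <= b for a, b in zip(values, values[1:]))


def is_monotonically_decreasing(values):
    """True if each value is >= the next (non-increasing)."""
    return all(a >= b for a, b in zip(values, values[1:]))


# Equal neighbours are allowed: the sequence must not go the wrong way.
plateau_then_rise = [1, 2, 2, 3]
constant = [5, 5, 5]
```

A constant sequence satisfies both checks, which matches the definitions: it is simultaneously non-decreasing and non-increasing.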

Eigenvectors

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged (or reversed) by a given linear transformation. More precisely, an eigenvector $\mathbf{v}$ of a linear transformation $T$ is scaled by a constant factor $\lambda$ when the linear transformation is applied to it: $T\mathbf{v} = \lambda \mathbf{v}$. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor $\lambda$ (possibly a negative or complex number). Geometrically, vectors are multi-dimensional quantities with magnitude and direction, often pictured as arrows. A linear transformation rotates, stretches, or shears the vectors upon which it acts. A linear transformation's eigenvectors are those vectors that are only stretched or shrunk, with nei ...
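The defining relation Tv = λv can be verified numerically for a small matrix. In the sketch below the matrix `T`, the helper names, and the tolerance are illustrative assumptions. The code only checks a candidate pair (v, λ); it does not compute eigenvectors.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def is_eigenvector(matrix, vector, eigenvalue, tol=1e-9):
    """Check T v = lambda v componentwise; the zero vector is excluded."""
    if all(abs(x) <= tol for x in vector):
        return False
    image = mat_vec(matrix, vector)
    return all(abs(t - eigenvalue * x) <= tol for t, x in zip(image, vector))


# Illustrative symmetric matrix with eigenvalues 3 and 1.
T = [[2.0, 1.0],
     [1.0, 2.0]]
stretched = is_eigenvector(T, [1.0, 1.0], 3.0)    # T maps (1, 1) to (3, 3)
unchanged = is_eigenvector(T, [1.0, -1.0], 1.0)   # T maps (1, -1) to itself
rotated = is_eigenvector(T, [1.0, 0.0], 2.0)      # T maps (1, 0) to (2, 1)
```

The third vector changes direction under T, so it fails the check for any scalar, while the first two are only scaled.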

Principal Component

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization and data preprocessing. The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the i-th vector is the direction of a line that best fits the data while being orthogonal to the first i-1 vectors. Here, a best-fitting line is defined as one that minimizes the average squared perpendicular distance from the points to the line. These directions (i.e., principal components) constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identi ...

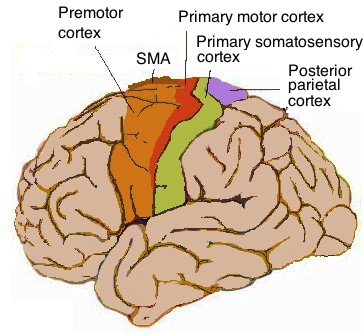

Cerebral Cortex

The cerebral cortex, also known as the cerebral mantle, is the outer layer of neural tissue of the cerebrum of the brain in humans and other mammals. It is the largest site of neural integration in the central nervous system, and plays a key role in attention, perception, awareness, thought, memory, language, and consciousness. The six-layered neocortex makes up approximately 90% of the cortex, with the allocortex making up the remainder. The cortex is divided into left and right parts by the longitudinal fissure, which separates the two cerebral hemispheres that are joined beneath the cortex by the corpus callosum and other commissural fibers. In most mammals, apart from small mammals that have small brains, the cerebral cortex is folded, providing a greater surface area in the confined volume of the cranium. Apart from minimising brain and cranial volume, cortical folding is crucial for the brain circuitry ...

Sense

A sense is a biological system used by an organism for sensation, the process of gathering information about the surroundings through the detection of stimuli. Although, in some cultures, five human senses were traditionally identified as such (namely sight, smell, touch, taste, and hearing), many more are now recognized. Senses used by non-human organisms are even greater in variety and number. During sensation, sense organs collect various stimuli (such as a sound or smell) for transduction, meaning transformation into a form that can be understood by the brain. Sensation and perception are fundamental to nearly every aspect of an organism's cognition, behavior and thought. In organisms, a sensory organ consists of a group of interrelated sensory cells that respond to a specific type of physical stimulus. Via cranial and spinal nerves (nerves ...

Euclidean Distance

In mathematics, the Euclidean distance between two points in Euclidean space is the length of the line segment between them. It can be calculated from the Cartesian coordinates of the points using the Pythagorean theorem, and therefore is occasionally called the Pythagorean distance. These names come from the ancient Greek mathematicians Euclid and Pythagoras. In the Greek deductive geometry exemplified by Euclid's *Elements*, distances were not represented as numbers but line segments of the same length, which were considered "equal". The notion of distance is inherent in the compass tool used to draw a circle, whose points all have the same distance from a common center point. The connection from the Pythagorean theorem to distance calculation was not made until the 18th century. The distance between two objects that are not points is usually defined to be the smallest distance among pairs of points from the two objects. Formulas are known for computing distances b ...
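The calculation from Cartesian coordinates via the Pythagorean theorem can be written out directly. The function name `euclidean_distance` is a hypothetical helper for illustration. Python 3.8 and later also provide `math.dist`, which computes the same quantity.

```python
import math


def euclidean_distance(p, q):
    """Length of the segment between points p and q (same dimension)."""
    if len(p) != len(q):
        raise ValueError("points must have the same number of coordinates")
    # Square root of the sum of squared coordinate differences.
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# The 3-4-5 right triangle: legs 3 and 4 give a hypotenuse of 5.
d = euclidean_distance((0.0, 0.0), (3.0, 4.0))
```

The same function works in any number of dimensions, since each extra coordinate contributes one more squared difference to the sum.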