
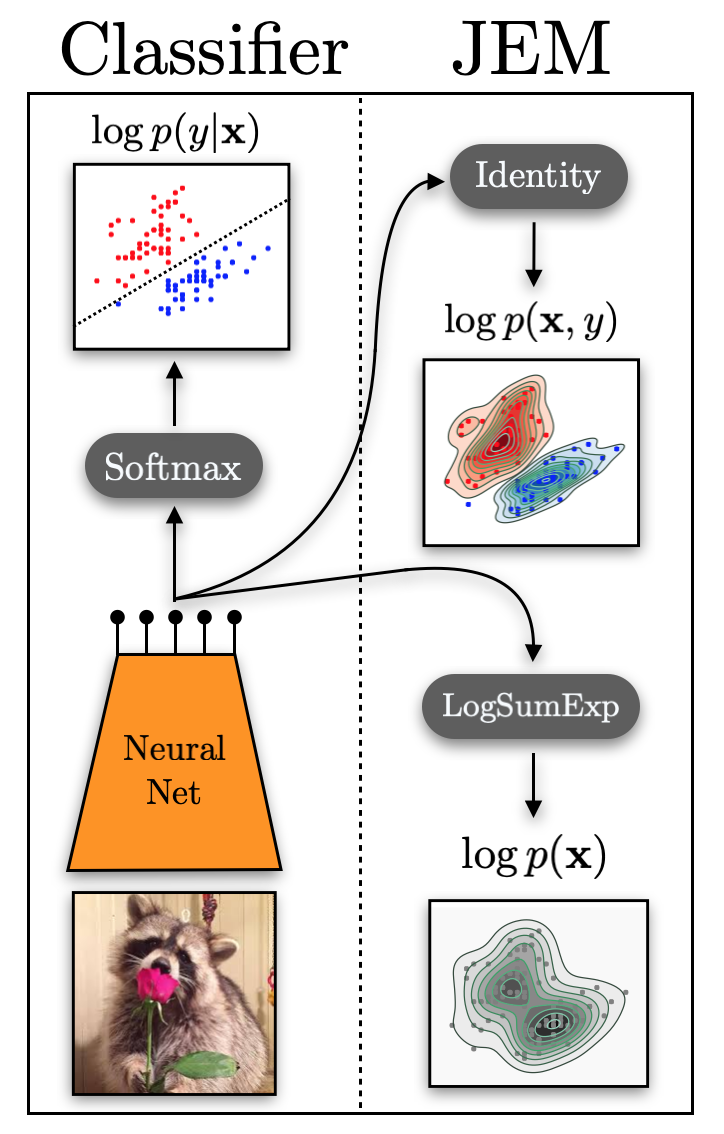

Energy-based Model

An energy-based model (EBM) (also called Canonical Ensemble Learning or Learning via Canonical Ensemble – CEL and LCE, respectively) is an application of the canonical ensemble formulation from statistical physics to learning from data. The approach prominently appears in generative artificial intelligence. EBMs provide a unified framework for many probabilistic and non-probabilistic approaches to such learning, particularly for training graphical and other structured models. An EBM learns the characteristics of a target dataset and generates a similar but larger dataset. EBMs detect the latent variables of a dataset and generate new datasets with a similar distribution. Energy-based generative neural networks are a class of generative models that aim to learn explicit probability distributions of data in the form of energy-based models whose energy functions are parameterized by modern deep neural networks. Boltzmann machines are a special form of energy-based models ...

Canonical Ensemble

In statistical mechanics, a canonical ensemble is the statistical ensemble that represents the possible states of a mechanical system in thermal equilibrium with a heat bath at a fixed temperature. The system can exchange energy with the heat bath, so that the states of the system will differ in total energy. The principal thermodynamic variable of the canonical ensemble, determining the probability distribution of states, is the absolute temperature (symbol: T). The ensemble typically also depends on mechanical variables such as the number of particles in the system (symbol: N) and the system's volume (symbol: V), each of which influences the nature of the system's internal states. An ensemble with these three parameters, which are assumed constant for the ensemble to be considered canonical, is sometimes called the NVT ensemble. The canonical ensemble assigns a probability P to each distinct microstate given by the following exponential: P = e^{(F - E)/(kT)}, where E is the total energy of t ...
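As a minimal sketch of the canonical distribution, the snippet below computes microstate probabilities for a toy system with a handful of discrete energy levels and checks them against the standard form P = e^{(F - E)/(kT)}, with F = -kT ln Z the Helmholtz free energy. The function names, the three energy levels, and the choice kT = 1 are illustrative assumptions, not part of the source text.

```python
import math


def canonical_probabilities(energies, kT):
    """Return P_i = exp(-E_i / kT) / Z for a list of microstate energies."""
    # Subtract the minimum energy before exponentiating for numerical
    # stability; the shift cancels in the normalized ratio.
    e_min = min(energies)
    weights = [math.exp(-(e - e_min) / kT) for e in energies]
    z_shifted = sum(weights)
    return [w / z_shifted for w in weights]


def free_energy(energies, kT):
    """Return the Helmholtz free energy F = -kT * ln Z."""
    e_min = min(energies)
    z_shifted = sum(math.exp(-(e - e_min) / kT) for e in energies)
    # ln Z = -e_min / kT + ln z_shifted, hence F = e_min - kT * ln z_shifted.
    return e_min - kT * math.log(z_shifted)


# Hypothetical three-level system (energies in units where kT = 1).
energies = [0.0, 1.0, 2.0]
kT = 1.0
probs = canonical_probabilities(energies, kT)
F = free_energy(energies, kT)

# The same probabilities written as exp((F - E) / kT).
probs_from_F = [math.exp((F - e) / kT) for e in energies]
```

Lower-energy microstates receive exponentially more probability, and raising kT flattens the distribution toward uniform.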

Geoffrey Hinton

Geoffrey Everest Hinton (born 1947) is a British-Canadian computer scientist, cognitive scientist, and cognitive psychologist known for his work on artificial neural networks, which earned him the title "the Godfather of AI". Hinton is University Professor Emeritus at the University of Toronto. From 2013 to 2023, he divided his time working for Google (Google Brain) and the University of Toronto before publicly announcing his departure from Google in May 2023, citing concerns about the many risks of artificial intelligence (AI) technology. In 2017, he co-founded and became the chief scientific advisor of the Vector Institute in Toronto. With David Rumelhart and Ronald J. Williams, Hinton was co-author of a highly cited paper published in 1986 that popularised the backpropagation algorithm for training multi-layer neural networks, although they were not the first to propose the approach. Hinton is viewed as a leading figure in the deep learning community. The image-recognitio ...

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural language understanding, and natural language generation. History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ...

Autoregressive Model

In statistics, econometrics, and signal processing, an autoregressive (AR) model is a representation of a type of random process; as such, it can be used to describe certain time-varying processes in nature, economics, behavior, etc. The autoregressive model specifies that the output variable depends linearly on its own previous values and on a stochastic term (an imperfectly predictable term); thus the model is in the form of a stochastic difference equation (or recurrence relation) which should not be confused with a differential equation. Together with the moving-average (MA) model, it is a special case and key component of the more general autoregressive–moving-average (ARMA) and autoregressive integrated moving average (ARIMA) models of time series, which have a more complicated stochastic structure; it is also a special case of the vector autoregressive model (VAR), which consists of a system of more than one interlocking stochastic difference equation in more than one e ...
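The linear dependence on previous values can be illustrated with a short simulation of an AR(p) process, x_t = c + phi_1 x_{t-1} + ... + phi_p x_{t-p} + eps_t, with Gaussian noise eps_t. This is only a sketch: the function name `simulate_ar`, the zero initial values, and the parameter choices are assumptions made for illustration.

```python
import random


def simulate_ar(phi, c=0.0, sigma=1.0, n=100, seed=0):
    """Simulate n steps of an AR(p) process with coefficients phi.

    phi[0] multiplies the most recent value, phi[1] the one before, etc.
    The first p values are initialised to zero (an arbitrary assumption).
    """
    rng = random.Random(seed)
    p = len(phi)
    x = [0.0] * p
    for _ in range(n):
        # Linear combination of the p most recent values.
        past = sum(phi[i] * x[-1 - i] for i in range(p))
        # Stochastic term: zero-mean Gaussian noise with std sigma.
        x.append(c + past + rng.gauss(0.0, sigma))
    return x[p:]


# With the noise switched off, an AR(1) process with |phi| < 1 settles at
# the fixed point c / (1 - phi) of the difference equation.
noiseless = simulate_ar([0.5], c=1.0, sigma=0.0, n=50)

# A noisy AR(1) path fluctuating around the same level.
noisy = simulate_ar([0.5], c=1.0, sigma=0.3, n=200, seed=42)
```

Setting `sigma=0.0` reduces the stochastic difference equation to a deterministic recurrence, which makes the role of the coefficients easy to inspect.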

ImageNet

The ImageNet project is a large visual database designed for use in visual object recognition software research. More than 14 million images have been hand-annotated by the project to indicate what objects are pictured, and in at least one million of the images, bounding boxes are also provided. ImageNet contains more than 20,000 categories, with a typical category, such as "balloon" or "strawberry", consisting of several hundred images. The database of annotations of third-party image URLs is freely available directly from ImageNet, though the actual images are not owned by ImageNet. Since 2010, the ImageNet project has run an annual software contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), where software programs compete to correctly classify and detect objects and scenes. The challenge uses a "trimmed" list of one thousand non-overlapping classes. History: AI researcher Fei-Fei Li began working ...

CIFAR-10

The CIFAR-10 dataset (Canadian Institute For Advanced Research) is a collection of images that are commonly used to train machine learning and computer vision algorithms. It is one of the most widely used datasets for machine learning research. The CIFAR-10 dataset contains 60,000 32x32 color images in 10 different classes. The 10 different classes represent airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks. There are 6,000 images of each class. Computer algorithms for recognizing objects in photos often learn by example. CIFAR-10 is a set of images that can be used to teach a computer how to recognize objects. Since the images in CIFAR-10 are low-resolution (32x32), this dataset can allow researchers to quickly try different algorithms to see what works. CIFAR-10 is a labeled subset of the 80 Million Tiny Images dataset from 2008, published in 2009. When the dataset was created, students were paid to label all of the images. Various kinds of convolution ...

Product Of Experts

Product of experts (PoE) is a machine learning technique. It models a probability distribution by combining the output from several simpler distributions. It was proposed by Geoffrey Hinton in 1999, along with an algorithm for training the parameters of such a system. The core idea is to combine several probability distributions ("experts") by multiplying their density functions, making the PoE classification similar to an "and" operation. This allows each expert to make decisions on the basis of a few dimensions without having to cover the full dimensionality of a problem: P(y|\{\theta_j\})=\frac{1}{Z}\prod_{j=1}^M f_j(y|\theta_j) where f_j are unnormalized expert densities and Z=\int\mathrm{d}y \prod_{j=1}^M f_j(y|\theta_j) is a normalization constant (see partition function (statistical mechanics)). This is related to (but quite different from) a mixture model, where several probability distributions p_j(y|\theta_j) are combined via an "or" operation, which is a weighted sum of their density functions: P(y|\{\theta_j\})=\sum_{j=1}^M \a ...
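The contrast between the "and" behaviour of a product of experts and the "or" behaviour of a mixture can be sketched on a small discrete outcome space, where the normalization constant Z is a plain sum rather than an integral. The function names and the two toy experts below are illustrative assumptions, and the mixture uses a generic weighted sum of normalized experts.

```python
def product_of_experts(experts):
    """Multiply unnormalized expert densities pointwise, then normalize.

    `experts` is a list of equal-length lists, one value per outcome.
    """
    unnormalized = [1.0] * len(experts[0])
    for f in experts:
        unnormalized = [u * v for u, v in zip(unnormalized, f)]
    z = sum(unnormalized)  # discrete analogue of the normalization constant Z
    return [u / z for u in unnormalized]


def mixture(experts, weights):
    """Weighted sum of the experts after normalizing each one separately."""
    normalized = [[v / sum(f) for v in f] for f in experts]
    n_outcomes = len(experts[0])
    return [
        sum(w * p[i] for w, p in zip(weights, normalized))
        for i in range(n_outcomes)
    ]


# Two hypothetical experts over three outcomes. Each rules out one outcome.
expert_a = [0.5, 0.5, 0.0]
expert_b = [0.0, 0.5, 0.5]

poe = product_of_experts([expert_a, expert_b])   # [0.0, 1.0, 0.0]
mix = mixture([expert_a, expert_b], [0.5, 0.5])  # [0.25, 0.5, 0.25]
```

In the product, an outcome survives only if every expert assigns it mass, so either expert can veto; in the mixture, an outcome keeps mass if any expert supports it.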

Mode Collapse

In machine learning, mode collapse is a failure mode observed in generative models, originally noted in Generative Adversarial Networks (GANs). It occurs when the model produces outputs that are less diverse than expected, effectively "collapsing" to generate only a few modes of the data distribution while ignoring others. This phenomenon undermines the goal of generative models to capture the full diversity of the training data. There are typically two times at which a model can collapse: either during training or during post-training finetuning. Mode collapse reduces the utility of generative models in applications such as image synthesis (repetitive or near-identical images), data augmentation (limited diversity in synthetic data), and scientific simulations (failure to explore all plausible scenarios). Distinctions: Mode collapse is distinct from overfitting, where a model learns detailed patterns in the training data that do not generalize to the test data, and un ...

Normalizing Flow

A flow-based generative model is a generative model used in machine learning that explicitly models a probability distribution by leveraging normalizing flow, which is a statistical method using the change-of-variable law of probabilities to transform a simple distribution into a complex one. The direct modeling of likelihood provides many advantages. For example, the negative log-likelihood can be directly computed and minimized as the loss function. Additionally, novel samples can be generated by sampling from the initial distribution and applying the flow transformation. In contrast, many alternative generative modeling methods such as variational autoencoder (VAE) and generative adversarial network do not explicitly represent the likelihood function. Method: Let z_0 be a (possibly multivariate) random variable with distribution ...
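To make the change-of-variable idea concrete, here is a minimal sketch of the simplest possible flow: a one-dimensional affine map x = scale * z + shift applied to a standard normal base variable. The class name `AffineFlow` and its methods are hypothetical and chosen for illustration; practical flows stack many learnable invertible layers, which this example does not attempt.

```python
import math
import random


def standard_normal_logpdf(z):
    """Log density of the base distribution N(0, 1)."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))


class AffineFlow:
    """Invertible map x = scale * z + shift with an exact log-likelihood."""

    def __init__(self, scale, shift):
        if scale == 0:
            raise ValueError("scale must be non-zero for the map to be invertible")
        self.scale = scale
        self.shift = shift

    def forward(self, z):
        return self.scale * z + self.shift

    def inverse(self, x):
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        # Change of variables: log p_x(x) = log p_z(f^{-1}(x)) - log|df/dz|.
        z = self.inverse(x)
        return standard_normal_logpdf(z) - math.log(abs(self.scale))

    def sample(self, rng):
        # Draw from the base distribution, then apply the flow.
        return self.forward(rng.gauss(0.0, 1.0))


flow = AffineFlow(scale=2.0, shift=1.0)
rng = random.Random(0)
data = [flow.sample(rng) for _ in range(1000)]

# Negative log-likelihood of the samples, directly usable as a loss.
nll = -sum(flow.log_prob(x) for x in data) / len(data)
```

Because the transformation is invertible and its Jacobian is known, both sampling and exact likelihood evaluation are available, which is the property the paragraph above highlights.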

Autoencoder

An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data (unsupervised learning). An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction, to generate lower-dimensional embeddings for subsequent use by other machine learning algorithms. Variants exist which aim to make the learned representations assume useful properties. Examples are regularized autoencoders (*sparse*, *denoising* and *contractive* autoencoders), which are effective in learning representations for subsequent classification tasks, and *variational* autoencoders, which can be used as generative models. Autoencoders are applied to many problems, including facial recognition, feature detection, anomaly detection, and l ...

JMLR

The *Journal of Machine Learning Research* is a peer-reviewed open access scientific journal covering machine learning. It was established in 2000 and the first editor-in-chief was Leslie Kaelbling. The current editors-in-chief are Francis Bach (Inria) and David Blei (Columbia University). History: The journal was established as an open-access alternative to the journal *Machine Learning*. In 2001, forty editorial board members of *Machine Learning* resigned, saying that in the era of the Internet, it was detrimental for researchers to continue publishing their papers in expensive journals with pay-access archives. The open access model employed by the *Journal of Machine Learning Research* allows authors to publish articles for free and retain copyright, while archives are freely available online. Print editions of the journal were published by MIT Press until 2004 and by Microtome Publishing thereafter. From its inception, the journal received no revenue from the pri ...

Metropolis–Hastings Algorithm

In statistics and statistical physics, the Metropolis–Hastings algorithm is a Markov chain Monte Carlo (MCMC) method for obtaining a sequence of random samples from a probability distribution from which direct sampling is difficult. New samples are added to the sequence in two steps: first a new sample is proposed based on the previous sample, then the proposed sample is either added to the sequence or rejected depending on the value of the probability distribution at that point. The resulting sequence can be used to approximate the distribution (e.g. to generate a histogram) or to compute an integral (e.g. an expected value). Metropolis–Hastings and other MCMC algorithms are generally used for sampling from multi-dimensional distributions, especially when the number of dimensions is high. For single-dimensional distributions, there are usually other methods (e.g. adaptive rejection sampling) that can directly return independent samples from the distribution, and these are ...
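The propose-then-accept-or-reject loop can be sketched with a random-walk variant, in which the proposal is a symmetric Gaussian step so the acceptance probability depends only on the ratio of target densities. The function name, step size, and the one-dimensional standard normal target are assumptions chosen to keep the example small; as the text notes, one-dimensional targets usually have better direct samplers.

```python
import math
import random


def metropolis_hastings(log_target, x0, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis sampler for an unnormalized log density."""
    rng = random.Random(seed)
    x = x0
    log_p = log_target(x)
    samples = []
    for _ in range(n_samples):
        # Step 1: propose a new point based on the current one.
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_target(proposal)
        # Step 2: accept with probability min(1, p(proposal) / p(x)).
        # The Gaussian proposal is symmetric, so no correction term is needed.
        accept_prob = math.exp(min(0.0, log_p_new - log_p))
        if rng.random() < accept_prob:
            x, log_p = proposal, log_p_new
        # A rejected proposal repeats the current state in the chain.
        samples.append(x)
    return samples


# Target: standard normal, known only up to its normalizing constant.
samples = metropolis_hastings(lambda x: -0.5 * x * x, x0=0.0, n_samples=20000)

# Monte Carlo estimates of the mean and variance from the chain.
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
```

Only differences of log densities enter the acceptance step, so the normalizing constant of the target never has to be computed.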