
Wolfe Duality

In mathematical optimization, Wolfe duality, named after Philip Wolfe, is a type of dual problem in which the objective function and constraints are all differentiable functions. Using this concept, a lower bound for a minimization problem can be found because of the weak duality principle.

Mathematical formulation

For a minimization problem with inequality constraints,

: \begin{align} &\underset{x}{\operatorname{minimize}} & & f(x) \\ &\operatorname{subject\;to} & & g_i(x) \leq 0, \quad i = 1,\dots,m \end{align}

the Lagrangian dual problem is

: \begin{align} &\underset{u}{\operatorname{maximize}} & & \inf_x \left(f(x) + \sum_{j=1}^m u_j g_j(x)\right) \\ &\operatorname{subject\;to} & & u_i \geq 0, \quad i = 1,\dots,m \end{align}

where the objective function is the Lagrange dual function. Provided that the functions f and g_1, \ldots, g_m are convex and continuously differentiable, the infimum ...
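As a hedged illustration of the Lagrange dual function and the lower bound it provides, the sketch below uses a toy problem that is not from the source: minimize x^2 subject to 1 - x <= 0. The names `f`, `g`, `lagrangian`, and `dual_function` are hypothetical, and the inner minimizer x = u/2 is derived by hand for this specific problem.

```python
# Toy problem (an assumption for illustration, not taken from the source):
#   minimize f(x) = x^2   subject to   g(x) = 1 - x <= 0
# The primal optimum is attained at x = 1 with f(1) = 1.

def f(x):
    return x * x

def g(x):
    return 1.0 - x

def lagrangian(x, u):
    # L(x, u) = f(x) + u * g(x) for a single constraint
    return f(x) + u * g(x)

def dual_function(u):
    """Lagrange dual function q(u) = inf_x L(x, u).

    For this toy problem dL/dx = 2x - u = 0 gives the minimizer x = u / 2.
    """
    x_min = u / 2.0
    return lagrangian(x_min, u)

primal_optimum = f(1.0)

# Evaluate the dual function on a grid of multipliers u >= 0.
multipliers = [k / 10.0 for k in range(0, 51)]
dual_values = [dual_function(u) for u in multipliers]
best_dual = max(dual_values)

# Weak duality: every dual value is a lower bound on the primal optimum.
lower_bound_holds = all(q <= primal_optimum + 1e-12 for q in dual_values)
print(best_dual, primal_optimum, lower_bound_holds)
```

In this convex toy problem the best lower bound on the grid coincides with the primal optimum; in general only the inequality is guaranteed.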

Mathematical Optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics.

Optimization problems

Opti ...

Philip Wolfe (mathematician)

Philip Starr "Phil" Wolfe (August 11, 1927 – December 29, 2016) was an American mathematician and one of the founders of convex optimization theory and mathematical programming.

Life

Wolfe received his bachelor's, master's, and Ph.D. degrees from the University of California, Berkeley. He and his wife, Hallie, lived in Ossining, New York.

Career

In 1954, he was offered an instructorship at Princeton, where he worked on generalizations of linear programming, such as quadratic programming and general non-linear programming, leading to the Frank–Wolfe algorithm in joint work with Marguerite Frank, then a visitor at Princeton. When Maurice Sion was on sabbatical at the Institute for Advanced Study, Sion and Wolfe published in 1957 an example of a zero-sum game without a minimax value. Wolfe joined the RAND Corporation in 1957, where he worked with George Dantzig, resulting in the now well-known Dantzig–Wolfe decomposition method. In 1965, he moved to IBM Int ...

Duality (optimization)

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). Any feasible solution to the primal (minimization) problem is at least as large as any feasible solution to the dual (maximization) problem. Therefore, the solution to the primal is an upper bound to the solution of the dual, and the solution of the dual is a lower bound to the solution of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality.

Dual problem

Usually the term "dual problem" refers to the ''Lagrangian dual problem'' but o ...

Objective Function

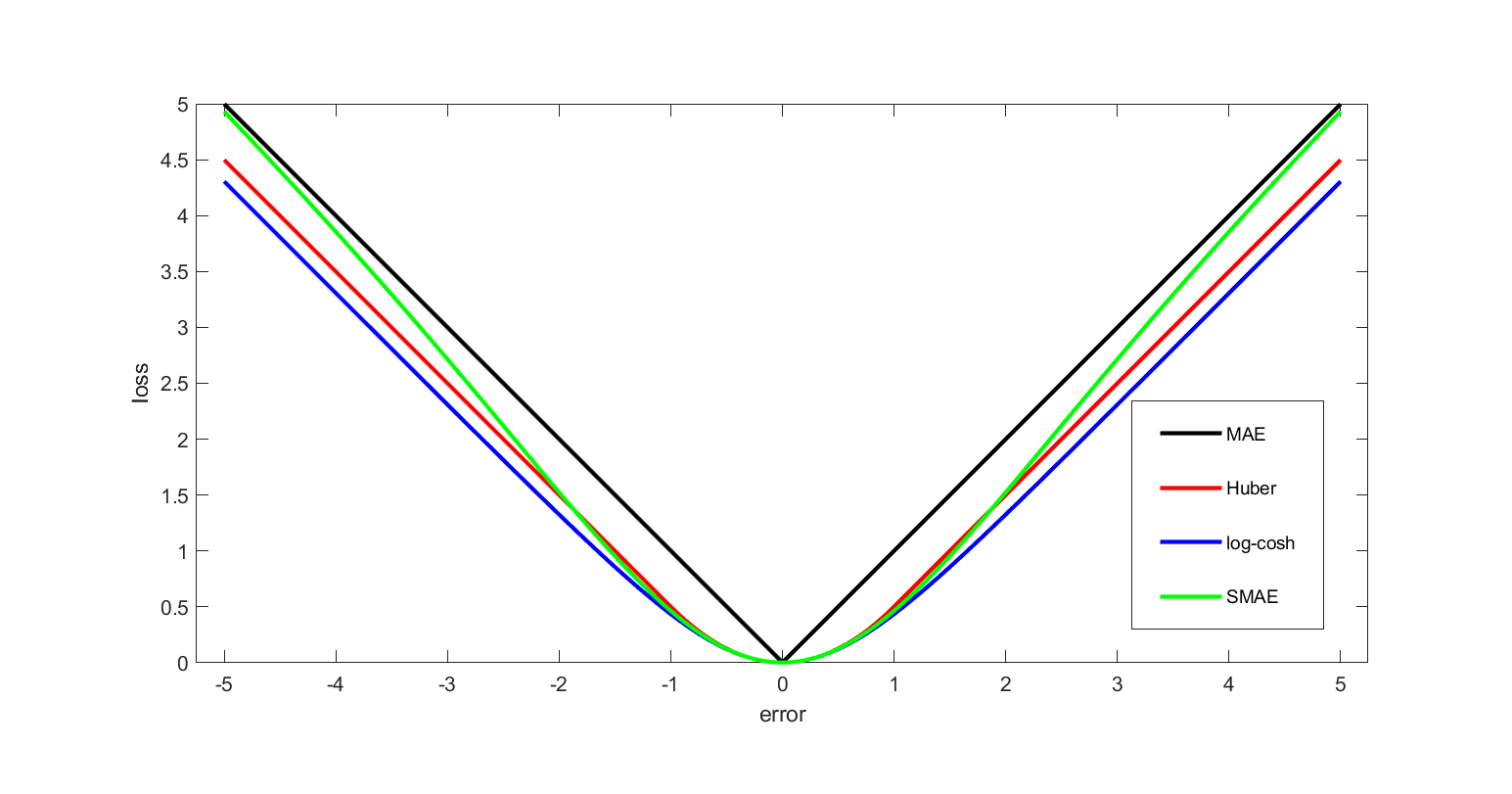

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, ...

Differentiable Function

In mathematics, a differentiable function of one real variable is a function whose derivative exists at each point in its domain. In other words, the graph of a differentiable function has a non-vertical tangent line at each interior point in its domain. A differentiable function is smooth (the function is locally well approximated as a linear function at each interior point) and does not contain any break, angle, or cusp. If x_0 is an interior point in the domain of a function f, then f is said to be ''differentiable at'' x_0 if the derivative f'(x_0) exists. In other words, the graph of f has a non-vertical tangent line at the point (x_0, f(x_0)). f is said to be differentiable on U if it is differentiable at every point of U. f is said to be ''continuously differentiable'' if its derivative is also a continuous function over the domain of the function f. Generally speaking, f is said to be of class C^k if its first k derivatives f'(x), f''(x), \ldots, f^{(k)}(x) exist and are continuous over the domain of t ...

Weak Duality

In applied mathematics, weak duality is a concept in optimization which states that the duality gap is always greater than or equal to 0. This means that for any minimization problem, called the ''primal problem'', the solution to the primal problem is always greater than or equal to the solution to the dual maximization problem. Alternatively, the solution to a primal maximization problem is always less than or equal to the solution to the dual minimization problem. So, in short: weak duality states that any feasible solution of a dual minimization problem gives an upper bound on the optimal value of the primal maximization problem; symmetrically, any feasible solution of a dual maximization problem gives a lower bound on the optimal value of the primal minimization problem. Weak duality is in contrast to strong duality, which states that the primal optimal objective and the dual optimal objective are ''equal''. Strong duality only holds in certain cases.

Uses

Many primal-dual approximation algorithms are based on the principle of weak duality.

Weak duality theorem

Consider a linear programming problem, whe ...
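The source's statement of the theorem is cut off, so the following is only a sketch under an assumed standard form: primal "maximize c^T x subject to Ax <= b, x >= 0" and dual "minimize b^T y subject to A^T y >= c, y >= 0". The data `A`, `b`, `c` and the sample points are made up for illustration, and the helper names are hypothetical.

```python
# Assumed standard-form pair (not confirmed by the truncated source text):
#   primal: maximize c^T x  subject to  A x <= b,   x >= 0
#   dual:   minimize b^T y  subject to  A^T y >= c, y >= 0

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def primal_feasible(A, b, x):
    # x >= 0 and every row constraint A_i . x <= b_i holds
    return all(xi >= 0 for xi in x) and all(
        dot(row, x) <= bi for row, bi in zip(A, b)
    )

def dual_feasible(A, c, y):
    # y >= 0 and every column constraint (A^T y)_j >= c_j holds
    columns = list(zip(*A))
    return all(yi >= 0 for yi in y) and all(
        dot(col, y) >= cj for col, cj in zip(columns, c)
    )

# Illustrative data (hypothetical).
A = [[1, 1], [1, 0]]
b = [4, 2]
c = [3, 2]

primal_points = [(0, 0), (2, 0), (1, 2), (2, 2), (0, 4)]
dual_points = [(2, 1), (3, 0), (2, 3), (5, 5)]

assert all(primal_feasible(A, b, x) for x in primal_points)
assert all(dual_feasible(A, c, y) for y in dual_points)

# Weak duality: c^T x <= b^T y for every feasible pair (x, y).
weak_duality_holds = all(
    dot(c, x) <= dot(b, y) for x in primal_points for y in dual_points
)
best_primal = max(dot(c, x) for x in primal_points)
best_dual = min(dot(b, y) for y in dual_points)
print(weak_duality_holds, best_primal, best_dual)
```

For this data the best sampled primal and dual values happen to coincide, which is the strong duality case; weak duality alone only guarantees the inequality.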

Lagrangian Duality

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). Any feasible solution to the primal (minimization) problem is at least as large as any feasible solution to the dual (maximization) problem. Therefore, the solution to the primal is an upper bound to the solution of the dual, and the solution of the dual is a lower bound to the solution of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality.

Dual problem

Usually the term "dual problem" refers to the ''Lagrangian dual problem'' but othe ...

Infimum And Supremum

In mathematics, the infimum (abbreviated inf; plural: infima) of a subset S of a partially ordered set P is the greatest element in P that is less than or equal to each element of S, if such an element exists. If the infimum of S exists, it is unique, and if ''b'' is a lower bound of S, then ''b'' is less than or equal to the infimum of S. Consequently, the term ''greatest lower bound'' (abbreviated as GLB) is also commonly used. The supremum (abbreviated sup; plural: suprema) of a subset S of a partially ordered set P is the least element in P that is greater than or equal to each element of S, if such an element exists. If the supremum of S exists, it is unique, and if ''b'' is an upper bound of S, then the supremum of S is less than or equal to ''b''. Consequently, the supremum is also referred to as the ''least upper bound'' (or LUB). The infimum is, in a precise sense, dual to the concept of a supremum. Infima and suprema of real numbers are common special cases that are important in analys ...
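The definitions above can be tried out on a small finite poset. The sketch below is an illustration rather than anything from the source: it assumes the positive integers 1 to 200 ordered by divisibility, where the infimum of a subset is its greatest common divisor and the supremum is its least common multiple, provided that element lies in the poset. The function names are hypothetical.

```python
from functools import reduce
from math import gcd

def divides(a, b):
    """Partial order used here: a <= b means a divides b."""
    return b % a == 0

def infimum(subset, poset, leq):
    # Collect all lower bounds, then look for the greatest one.
    lower_bounds = [p for p in poset if all(leq(p, s) for s in subset)]
    for candidate in lower_bounds:
        if all(leq(other, candidate) for other in lower_bounds):
            return candidate
    return None  # no infimum exists in this poset

def supremum(subset, poset, leq):
    # Collect all upper bounds, then look for the least one.
    upper_bounds = [p for p in poset if all(leq(s, p) for s in subset)]
    for candidate in upper_bounds:
        if all(leq(candidate, other) for other in upper_bounds):
            return candidate
    return None  # no supremum exists in this poset

poset = range(1, 201)   # assumed finite poset for the illustration
subset = [12, 18, 30]

glb = infimum(subset, poset, divides)
lub = supremum(subset, poset, divides)
print(glb, lub)

# Cross-check the infimum against the greatest common divisor.
assert glb == reduce(gcd, subset)
```

Shrinking the poset to `range(1, 61)` makes `supremum` return `None`, since no element of that smaller set is divisible by 12, 18, and 30 at once; this is the "if such an element exists" caveat in the definition.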

KKT Conditions

KKT may refer to:

* Karush–Kuhn–Tucker conditions, in mathematical optimization of nonlinear programming
* kkt, a type of general partnership in Hungary
* Koi language, of Nepal, by ISO 639-3 code
* Kappa Kappa Tau, a fictional sorority in the television series ''Scream Queens''
* Kumamoto Kenmin Televisions, a Japanese TV station

Fenchel Duality

In mathematics, Fenchel's duality theorem is a result in the theory of convex functions named after Werner Fenchel. Let ''ƒ'' be a proper convex function on R''n'' and let ''g'' be a proper concave function on R''n''. Then, if regularity conditions are satisfied,

:\inf_x ( f(x)-g(x) ) = \sup_p ( g_*(p)-f^*(p)),

where ''ƒ''* is the convex conjugate of ''ƒ'' (also referred to as the Fenchel–Legendre transform) and ''g''* is the concave conjugate of ''g''. That is,

:f^*\left( x^* \right) := \sup \left\{ \left\langle x^*, x \right\rangle - f(x) : x \in \mathbb{R}^n \right\}

:g_*\left( x^* \right) := \inf \left\{ \left\langle x^*, x \right\rangle - g(x) : x \in \mathbb{R}^n \right\}

Mathematical theorem

Let ''X'' and ''Y'' be Banach spaces, f: X \to \mathbb{R} \cup \{+\infty\} and g: Y \to \mathbb{R} \cup \{+\infty\} be convex functions and A: X \to Y be a bounded linear map. Then the Fenchel problems:

:p^* = \inf_{x \in X} \{ f(x) + g(Ax) \}

:d^* = \sup_{y^* \in Y^*} \{ -f^*(A^* y^*) - g^*(-y^*) \}

satisfy weak duality, i.e. p^* \geq d^*. Note that f^*,g^* are the convex conjugates of ''f'',''g'' respectively, and A^* is the adjoint operator. The perturbation function for this ...
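The first identity, \inf_x ( f(x)-g(x) ) = \sup_p ( g_*(p)-f^*(p)), can be checked numerically in one dimension. The sketch below is an assumption-laden illustration, not part of the source: it picks f(x) = x^2/2 (convex) and g(x) = -(x-2)^2/2 (concave) and approximates every infimum and supremum by a search over a finite grid, so the grid bounds and step sizes are arbitrary choices.

```python
# Illustrative one-dimensional instance (hypothetical choice of f and g).

def f(x):
    return 0.5 * x * x             # convex

def g(x):
    return -0.5 * (x - 2.0) ** 2   # concave

# Finite grids standing in for the real line (an approximation).
xs = [i / 100.0 for i in range(-600, 601)]   # x in [-6, 6]
ps = [j / 20.0 for j in range(-60, 61)]      # p in [-3, 3]

def convex_conjugate(p):
    """f^*(p) = sup_x { p*x - f(x) }, approximated on the grid xs."""
    return max(p * x - f(x) for x in xs)

def concave_conjugate(p):
    """g_*(p) = inf_x { p*x - g(x) }, approximated on the grid xs."""
    return min(p * x - g(x) for x in xs)

# Left-hand side: inf_x ( f(x) - g(x) ).
primal_value = min(f(x) - g(x) for x in xs)

# Right-hand side: sup_p ( g_*(p) - f^*(p) ).
dual_value = max(concave_conjugate(p) - convex_conjugate(p) for p in ps)

print(primal_value, dual_value)
```

By hand, f^*(p) = p^2/2 and g_*(p) = 2p - p^2/2 for this choice, so both sides equal 1 (at x = 1 and p = 1), which the grid search should reproduce up to rounding.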