Weight Initialization

In deep learning, weight initialization or parameter initialization describes the initial step in creating a neural network. A neural network contains trainable parameters that are modified during training: weight initialization is the pre-training step of assigning initial values to these parameters. The choice of weight initialization method affects the speed of convergence, the scale of neural activation within the network, the scale of gradient signals during backpropagation, and the quality of the final model. Proper initialization is necessary for avoiding issues such as vanishing and exploding gradients and activation function saturation. Note that even though this article is titled "weight initialization", both weights and biases are used in a neural network as trainable parameters, so this article describes how both of these are initialized. Similarly, trainable parameters in convolutional neural networks (CNNs) are called kernels and biases, and this article also de ...
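The effect of initialization on activation scale can be illustrated with a small experiment. The sketch below is not taken from the article: it assumes a stack of bias-free linear layers with Gaussian weights, and the names `random_layer` and `output_rms` are hypothetical. It compares a standard deviation of 1/sqrt(fan_in), which roughly preserves the signal scale in this linear setting, against values half and twice as large.

```python
import math
import random


def random_layer(fan_in, fan_out, std, rng):
    """Return a fan_out x fan_in weight matrix with entries drawn from N(0, std^2)."""
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def output_rms(width, depth, std, seed=0):
    """Pass a random input through `depth` bias-free linear layers; return the output RMS."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        weights = random_layer(width, width, std, rng)
        x = [sum(w * v for w, v in zip(row, x)) for row in weights]
    return math.sqrt(sum(v * v for v in x) / width)


WIDTH, DEPTH = 64, 10
# Assumption: with std = 1/sqrt(fan_in), a linear layer keeps the output
# variance close to the input variance.
base = 1.0 / math.sqrt(WIDTH)

rms_small = output_rms(WIDTH, DEPTH, 0.5 * base)   # signal shrinks layer by layer
rms_balanced = output_rms(WIDTH, DEPTH, base)      # signal scale roughly preserved
rms_large = output_rms(WIDTH, DEPTH, 2.0 * base)   # signal grows layer by layer

print(f"small init:    {rms_small:.3e}")
print(f"balanced init: {rms_balanced:.3e}")
print(f"large init:    {rms_large:.3e}")
```

In this toy setting the too-small and too-large scales shrink or amplify the signal by several orders of magnitude after ten layers, which is the forward-pass analogue of vanishing and exploding gradients. Real networks add nonlinearities and biases, so the appropriate scale differs from this linear case.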

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ...

Kaiming He

Kaiming He is a Chinese computer scientist who primarily researches computer vision and deep learning. He is an associate professor at Massachusetts Institute of Technology and is known as one of the creators of the residual neural network (ResNet). Early life and education: He attended the public Guangzhou Zhixin High School in Guangzhou, Guangdong, China. He scored first place for the total scores in the 2003 Guangdong provincial undergraduate admissions exam. He went to Tsinghua University for undergraduate education and received a Bachelor of Science degree in 2007. From 2007 to 2011, he pursued doctoral studies in information engineering at the Chinese University of Hong Kong at its Multimedia Laboratory, receiving a PhD degree in 2011. His doctoral dissertation was titled ''Single image haze removal using dark channel prior'' (2011), and his doctoral adviser was Tang Xiao'ou. Career: He worked at Microsoft Research Asia from 2011 to 2016 and at Facebook Artificial Inte ...

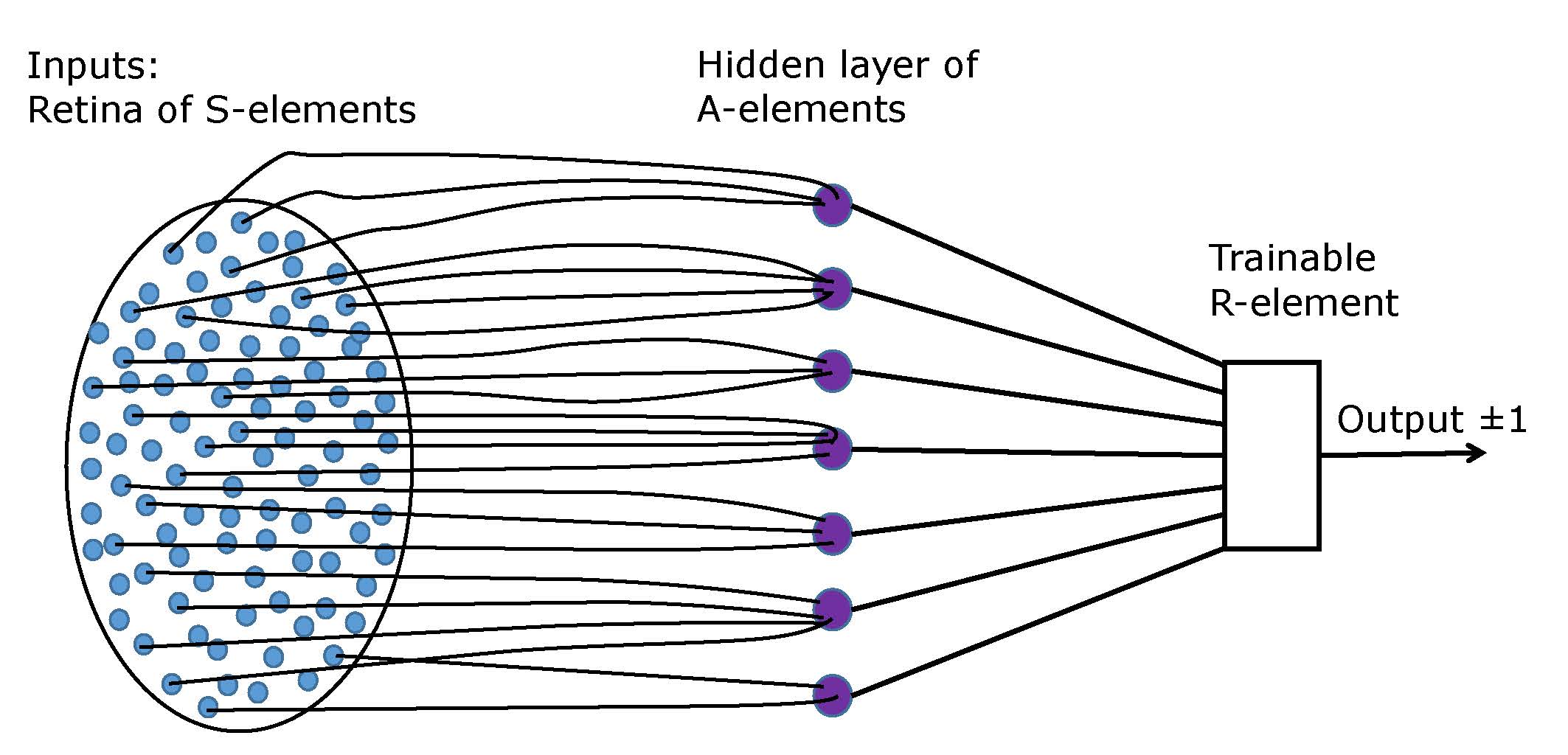

Frank Rosenblatt

Frank Rosenblatt (July 11, 1928 – July 11, 1971) was an American psychologist notable in the field of artificial intelligence. He is sometimes called the father of deep learning for his pioneering work on artificial neural networks. Life and career: Rosenblatt was born into a Jewish family in New Rochelle, New York as the son of Dr. Frank and Katherine Rosenblatt. After graduating from The Bronx High School of Science in 1946, he attended Cornell University, where he obtained his A.B. in 1950 and his Ph.D. in 1956. For his PhD thesis he built a custom-made computer, the Electronic Profile Analyzing Computer (EPAC), to perform multidimensional analysis for psychometrics. He used it between 1951 and 1953 to analyze psychometric data collected for his PhD thesis. The data were collected from a paid, 600-item survey of more than 200 Cornell undergraduates. The total computational cost was 2.5 million arithmetic operations, necessitating the use of ...

Yann LeCun

Yann André Le Cun (usually spelled LeCun; born 8 July 1960) is a French-American computer scientist working primarily in the fields of machine learning, computer vision, mobile robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at New York University and Vice President, Chief AI Scientist at Meta. He is well known for his work on optical character recognition and computer vision using convolutional neural networks (CNNs). He is also one of the main creators of the DjVu image compression technology (together with Léon Bottou and Patrick Haffner). He co-developed the Lush programming language with Léon Bottou. In 2018, LeCun, Yoshua Bengio, and Geoffrey Hinton received the Turing Award for their work on deep learning. The three are sometimes referred to as the "Godfathers of AI" and "Godfathers of Deep Learning". Early life and education: LeCun was born on 8 July 1960, at Soisy-sous-Montmorency ...

Hyperbolic Functions

In mathematics, hyperbolic functions are analogues of the ordinary trigonometric functions, but defined using the hyperbola rather than the circle. Just as the points $(\cos t, \sin t)$ form a circle with a unit radius, the points $(\cosh t, \sinh t)$ form the right half of the unit hyperbola. Also, similarly to how the derivatives of $\sin(t)$ and $\cos(t)$ are $\cos(t)$ and $-\sin(t)$ respectively, the derivatives of $\sinh(t)$ and $\cosh(t)$ are $\cosh(t)$ and $\sinh(t)$ respectively. Hyperbolic functions are used to express the angle of parallelism in hyperbolic geometry. They are used to express Lorentz boosts as hyperbolic rotations in special relativity. They also occur in the solutions of many linear differential equations (such as the equation defining a catenary), cubic equations, and Laplace's equation in Cartesian coordinates. Laplace's equations are important in many areas of physics, including electromagnetic theory, heat transfer, and fluid dynamics. The basic hyperbolic functions are: * hyperbolic sine "sinh", * hyperbolic cosine "cosh", ''Collins Concise Dictionary'', p. ...
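The unit-hyperbola and derivative properties of the hyperbolic sine and cosine can be checked numerically. The sketch below assumes the standard exponential definitions, sinh(t) = (e^t - e^-t)/2 and cosh(t) = (e^t + e^-t)/2, which the excerpt above does not spell out. The helper `derivative` is a hypothetical central-difference approximation.

```python
import math


def sinh(t):
    """Hyperbolic sine from its exponential definition."""
    return (math.exp(t) - math.exp(-t)) / 2.0


def cosh(t):
    """Hyperbolic cosine from its exponential definition."""
    return (math.exp(t) + math.exp(-t)) / 2.0


def derivative(f, t, h=1e-6):
    """Central-difference approximation of f'(t)."""
    return (f(t + h) - f(t - h)) / (2.0 * h)


for t in (-1.5, 0.0, 0.7, 2.0):
    x, y = cosh(t), sinh(t)
    # The point (cosh t, sinh t) lies on the right branch of x^2 - y^2 = 1.
    on_hyperbola = abs(x * x - y * y - 1.0) < 1e-9 and x > 0
    # d/dt sinh = cosh and d/dt cosh = sinh, up to finite-difference error.
    d_sinh_ok = abs(derivative(sinh, t) - cosh(t)) < 1e-6
    d_cosh_ok = abs(derivative(cosh, t) - sinh(t)) < 1e-6
    print(t, on_hyperbola, d_sinh_ok, d_cosh_ok)
```

Note the sign difference from the trigonometric case: the derivative of cosh is sinh, with no minus sign.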

Sparse Matrix

In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-value elements for a matrix to qualify as sparse but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements (e.g., ''m'' × ''n'' for an ''m'' × ''n'' matrix) is sometimes referred to as the sparsity of the matrix. Conceptually, sparsity corresponds to systems with few pairwise interactions. For example, consider a line of balls connected by springs from one to the next: this is a sparse system, as only adjacent balls are coupled. By contrast, if the same line of balls were to have springs connecting each ball to all other balls, the system would correspond to a dense matrix. ...
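The balls-and-springs example can be made concrete with a minimal sketch. The function names `coupling_matrix` and `sparsity` are hypothetical, and the choice to mark each ball as coupled to itself (a non-zero diagonal) is an assumption made for illustration.

```python
def coupling_matrix(n, all_to_all=False):
    """Build an n x n 0/1 matrix marking which balls are coupled.

    Assumption: each ball is coupled to itself (diagonal entry) and, in the
    chain case, only to its immediate neighbours.
    """
    return [
        [1 if all_to_all or abs(i - j) <= 1 else 0 for j in range(n)]
        for i in range(n)
    ]


def sparsity(matrix):
    """Number of zero-valued elements divided by the total number of elements."""
    total = sum(len(row) for row in matrix)
    zeros = sum(1 for row in matrix for value in row if value == 0)
    return zeros / total


chain = coupling_matrix(10)                   # springs between adjacent balls only
dense = coupling_matrix(10, all_to_all=True)  # springs between every pair of balls

print(sparsity(chain))  # 28 non-zero entries out of 100, so 0.72
print(sparsity(dense))  # no zero entries, so 0.0
```

For the chain, the number of non-zero entries grows linearly with the number of balls, while the total number of entries grows quadratically, so the sparsity approaches 1 as the line gets longer.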

Layer Normalization

In machine learning, normalization is a statistical technique with various applications. There are two main forms of normalization, namely ''data normalization'' and ''activation normalization''. Data normalization (or feature scaling) includes methods that rescale input data so that the features have the same range, mean, variance, or other statistical properties. For instance, a popular choice of feature scaling method is min-max normalization, where each feature is transformed to have the same range (typically $[0,1]$ or $[-1,1]$). This solves the problem of different features having vastly different scales, for example if one feature is measured in kilometers and another in nanometers. Activation normalization, on the other hand, is specific to deep learning, and includes methods that rescale the activation of hidden neurons inside neural networks. Normalization is often used to: * increase the speed of training convergence, * reduce sensitivity to variations and feat ...
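As one instance of activation normalization, layer normalization rescales the activations of a single layer, for a single input, to zero mean and unit variance. The sketch below is a simplified illustration, not a full implementation: it omits the learnable gain and bias that practical versions usually add, and the function name `layer_norm` and the constant `eps` are assumptions.

```python
import math


def layer_norm(activations, eps=1e-5):
    """Normalize one activation vector to zero mean and (nearly) unit variance.

    `eps` is a small assumed constant that avoids division by zero when all
    activations are equal.
    """
    n = len(activations)
    mean = sum(activations) / n
    variance = sum((a - mean) ** 2 for a in activations) / n
    scale = math.sqrt(variance + eps)
    return [(a - mean) / scale for a in activations]


hidden = [1.0, 2.0, 3.0, 4.0]  # hypothetical hidden-layer activations
normalized = layer_norm(hidden)
print(normalized)
```

Because the statistics are computed per input across the features of one layer, the result does not depend on the other examples in a batch.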

Transformer (Deep Learning Architecture)

The transformer is a deep learning architecture based on the multi-head attention mechanism, in which text is converted to numerical representations called tokens, and each token is converted into a vector via lookup from a word embedding table. At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signal for key tokens to be amplified and less important tokens to be diminished. Transformers have the advantage of having no recurrent units, therefore requiring less training time than earlier recurrent neural architectures (RNNs) such as long short-term memory (LSTM). Later variations have been widely adopted for training large language models (LLMs) on large (language) datasets. The modern version of the transformer was proposed in the 2017 paper "Attention Is All You Need" by researchers at Google. Transformers were first developed as an improvement ov ...
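The token-to-vector lookup and the contextualization step can be sketched in a few lines. This is a heavily simplified illustration under stated assumptions: a single attention head, no learned query/key/value projections (the embeddings are used directly), no masking, and a hypothetical three-word vocabulary with a made-up embedding table.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Single-head scaled dot-product self-attention without learned projections."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        )
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical toy vocabulary and embedding table (values are made up).
vocab = {"the": 0, "cat": 1, "sat": 2}
embedding_table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

token_ids = [vocab[word] for word in "the cat sat".split()]
embedded = [embedding_table[i] for i in token_ids]
contextualized, attention = self_attention(embedded)

for word, weights in zip(vocab, attention):
    print(word, [round(w, 3) for w in weights])
```

Each row of `attention` sums to 1, so tokens with higher similarity scores contribute more to the contextualized vector, which is the amplification and diminishing effect described above. Multi-head attention runs several such computations in parallel on different learned projections.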

Normalization (Machine Learning)

In machine learning, normalization is a statistical technique with various applications. There are two main forms of normalization, namely ''data normalization'' and ''activation normalization''. Data normalization (or feature scaling) includes methods that rescale input data so that the features have the same range, mean, variance, or other statistical properties. For instance, a popular choice of feature scaling method is min-max normalization, where each feature is transformed to have the same range (typically $[0,1]$ or $[-1,1]$). This solves the problem of different features having vastly different scales, for example if one feature is measured in kilometers and another in nanometers. Activation normalization, on the other hand, is specific to deep learning, and includes methods that rescale the activation of hidden neurons inside neural networks. Normalization is often used to: * increase the speed of training convergence, * reduce sensitivity to variations and feat ...
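Min-max normalization can be sketched as follows. The function name `min_max_normalize`, the sample values, and the handling of a constant feature (mapped to the lower bound) are assumptions made for illustration.

```python
def min_max_normalize(values, low=0.0, high=1.0):
    """Linearly rescale one feature so its minimum maps to `low` and its maximum to `high`."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # Assumed convention: a constant feature maps to the lower bound.
        return [low for _ in values]
    return [low + (v - v_min) * (high - low) / (v_max - v_min) for v in values]


# Hypothetical features on very different scales.
distance_km = [2.0, 4.0, 6.0]
thickness_nm = [100.0, 300.0, 500.0]

print(min_max_normalize(distance_km))              # [0.0, 0.5, 1.0]
print(min_max_normalize(thickness_nm))             # [0.0, 0.5, 1.0]
print(min_max_normalize(distance_km, -1.0, 1.0))   # [-1.0, 0.0, 1.0]
```

After rescaling, both features occupy the same range regardless of their original units, so neither dominates purely because of its scale.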

VGGNet

The VGGNets are a series of convolutional neural networks (CNNs) developed by the Visual Geometry Group (VGG) at the University of Oxford. The VGG family includes various configurations with different depths, denoted by the letters "VGG" followed by the number of weight layers. The most common ones are VGG-16 (13 convolutional layers + 3 fully connected layers, 138M parameters) and VGG-19 (16 + 3, 144M parameters). The VGG family was widely applied in various computer vision areas. An ensemble model of VGGNets achieved state-of-the-art results in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2014. It was used as a baseline comparison in the ResNet paper for image classification, as the network in the Fast Region-based CNN for object detection, and as a base network in neural style transfer. The series was historically important as an early influential model designed by co ...
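The 138M figure for VGG-16 can be reproduced by counting weights and biases layer by layer. The layer widths below are not given in the excerpt: they are assumed from the commonly published VGG-16 configuration (3×3 convolutions, a 224×224 RGB input reduced to a 7×7×512 feature map by five pooling stages, and a 1000-class output). The helper names are hypothetical.

```python
# Assumed output channels of the 13 convolutional layers (3x3 kernels).
CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
# Assumed widths of the 3 fully connected layers (1000 ImageNet classes).
FC_SIZES = [4096, 4096, 1000]


def conv_params(c_in, c_out, kernel=3):
    """Weights plus biases of a kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out + c_out


def fc_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def vgg16_param_count():
    total = 0
    c_in = 3  # RGB input
    for c_out in CONV_CHANNELS:
        total += conv_params(c_in, c_out)
        c_in = c_out
    n_in = 512 * 7 * 7  # flattened feature map after the last pooling stage
    for n_out in FC_SIZES:
        total += fc_params(n_in, n_out)
        n_in = n_out
    return total


print(vgg16_param_count())  # 138357544, i.e. about 138M
```

Under these assumptions, roughly 124M of the parameters sit in the three fully connected layers, and the 13 convolutional layers account for under 15M.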