Unstructured Data

Unstructured data (or unstructured information) is information that either does not have a pre-defined data model or is not organized in a pre-defined manner. Unstructured information is typically text-heavy, but may contain data such as dates, numbers, and facts as well. This results in irregularities and ambiguities that make it difficult to understand using traditional programs as compared to data stored in fielded form in databases or annotated (semantically tagged) in documents. In 1998, Merrill Lynch said "unstructured data comprises the vast majority of data found in an organization, some estimates run as high as 80%." The source of this number is unclear, but it is nonetheless accepted by some. Other sources have reported similar or higher percentages of unstructured data. IDC and Dell EMC project that data will grow to 40 zettabytes by 2020, resulting in a 50-fold growth from the beginning of 2010. More recently, IDC and Seagate predict that the global data ...

Data Model

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner. The term data model can refer to two distinct but closely related concepts. Sometimes it refers to an abstract formalization of the objects and relationships found in a particular application domain: for example, the customers, products, and orders found in a manufacturing organization. At other times it refers to the set of concepts used in defining such formalizations: for example, concepts such as entities, attributes, relations, or tables. So the "data model" of a banking application may be defined using the entity-relationship "data model". This article uses the term in both senses. A data model explicitly determines th ...
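
The car example above can be sketched in code. This is only an illustrative sketch: the class names, the choice of length in metres as the measure of "size", and the sample values are assumptions, not part of any particular data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Owner:
    # Hypothetical element defining who owns the car.
    name: str


@dataclass(frozen=True)
class Car:
    # The car element is composed of other elements: color, size, owner.
    color: str
    length_m: float  # "size" modeled as length in metres (an assumption)
    owner: Owner


car = Car(color="red", length_m=4.2, owner=Owner(name="Alice"))
print(car.owner.name)
```

The model fixes which elements a car record has and how they relate, independent of any single car's values.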

Dimensional Analysis

In engineering and science, dimensional analysis is the analysis of the relationships between different physical quantities by identifying their base quantities (such as length, mass, time, and electric current) and units of measure (such as miles vs. kilometres, or pounds vs. kilograms) and tracking these dimensions as calculations or comparisons are performed. The conversion of units from one dimensional unit to another is often easier within the metric system or the SI than in others, due to the regular base 10 used in all units. *Commensurable* physical quantities are of the same kind and have the same dimension, and can be directly compared to each other, even if they are expressed in differing units of measure, e.g. yards and metres, pounds (mass) and kilograms, seconds and years. *Incommensurable* physical quantities are of different kinds and have different dimensions, and cannot be directly compared to each other, no matter what units they are expressed in, e.g. metres and ...
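
The distinction between commensurable and incommensurable quantities can be illustrated with a small sketch. The `UNITS` table, the `Quantity` class, and the `is_larger` helper are hypothetical names introduced here for illustration; the conversion factors are the standard definitions of the yard and the avoirdupois pound.

```python
from dataclasses import dataclass

# Each unit maps to (dimension, factor to the base unit of that dimension).
# Base units assumed here: metre, kilogram, second.
UNITS = {
    "m": ("length", 1.0),
    "yd": ("length", 0.9144),
    "kg": ("mass", 1.0),
    "lb": ("mass", 0.45359237),
    "s": ("time", 1.0),
}


@dataclass(frozen=True)
class Quantity:
    value: float
    unit: str

    @property
    def dimension(self) -> str:
        return UNITS[self.unit][0]

    def in_base(self) -> float:
        # Express the value in the base unit of its dimension.
        return self.value * UNITS[self.unit][1]


def is_larger(a: Quantity, b: Quantity) -> bool:
    # Only commensurable quantities (same dimension) may be compared.
    if a.dimension != b.dimension:
        raise ValueError(f"cannot compare {a.dimension} with {b.dimension}")
    return a.in_base() > b.in_base()


print(is_larger(Quantity(1, "m"), Quantity(1, "yd")))
```

Comparing a length with a mass, such as `is_larger(Quantity(1, "m"), Quantity(1, "kg"))`, raises `ValueError` regardless of the units chosen.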

Pattern Recognition

Pattern recognition is the automated recognition of patterns and regularities in data. It has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power. These activities can be viewed as two facets of the same field of application, and they have undergone substantial development over the past few decades. Pattern recognition systems are commonly trained from labeled "training" data. When no labeled data are available, other algorithms can be used to discover previously unknown patterns. KDD and data mining have a larger focus on unsupervised methods and stronger connection to business use. Pattern recognition focuses more on the si ...

Text Analytics

Text mining, also referred to as *text data mining*, similar to text analytics, is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005) we can distinguish between three different perspectives of text mining: information extraction, data mining, and a KDD (Knowledge Discovery in Databases) process. Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation a ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, ...

Semi-structured Data

Semi-structured data is a form of structured data that does not obey the tabular structure of data models associated with relational databases or other forms of data tables, but nonetheless contains tags or other markers to separate semantic elements and enforce hierarchies of records and fields within the data. Therefore, it is also known as self-describing structure. In semi-structured data, the entities belonging to the same class may have different attributes even though they are grouped together, and the attributes' order is not important. Semi-structured data are increasingly occurring since the advent of the Internet where full-text documents and databases are not the only forms of data anymore, and different applications need a medium for exchanging information. In object-oriented databases, one often finds semi-structured data. Types: XML, other markup languages, email, and EDI are all forms of semi-structured data. OEM (Object Exchange Model) was created prior ...
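
A minimal sketch of the "different attributes, order not important" property, using JSON as the self-describing format. The records, field names, and the `get_attr` helper are invented for illustration.

```python
import json

# Two entities of the same class ("person") with different attributes,
# listed in a different order. The keys act as the self-describing markers.
raw = """[
  {"type": "person", "name": "Ada", "email": "ada@example.org"},
  {"type": "person", "phone": "555-0100", "name": "Grace"}
]"""

people = json.loads(raw)

# The union of attributes is discovered from the data, not from a fixed schema.
all_attributes = sorted({key for person in people for key in person})


def get_attr(record, key, default=None):
    # A missing attribute is normal in semi-structured data, so fall back.
    return record.get(key, default)


print(all_attributes)
print([get_attr(p, "email", "n/a") for p in people])
```

A relational table would need a nullable column for every attribute; here each record simply carries the fields it has.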

Structure

A structure is an arrangement and organization of interrelated elements in a material object or system, or the object or system so organized. Material structures include man-made objects such as buildings and machines and natural objects such as biological organisms, minerals and chemicals. Abstract structures include data structures in computer science and musical form. Types of structure include a hierarchy (a cascade of one-to-many relationships), a network featuring many-to-many links, or a lattice featuring connections between components that are neighbors in space. Load-bearing: Buildings, aircraft, skeletons, anthills, beaver dams, bridges and salt domes are all examples of load-bearing structures. The results of construction are divided into buildings and non-building structures, and make up the infrastructure of a human society. Built structures are broadly divided by their varying design approaches and standards, into categories including building st ...

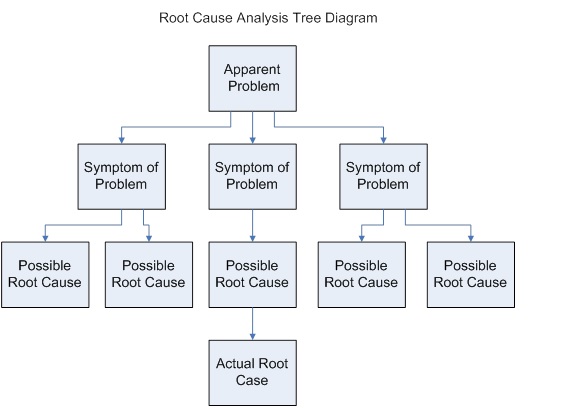

Root Cause Analysis

In science and engineering, root cause analysis (RCA) is a method of problem solving used for identifying the root causes of faults or problems. It is widely used in IT operations, manufacturing, telecommunications, industrial process control, accident analysis (e.g., in aviation, rail transport, or nuclear plants), medicine (for medical diagnosis), the healthcare industry (e.g., for epidemiology), etc. Root cause analysis is a form of deductive inference since it requires an understanding of the underlying causal mechanisms of the potential root causes and the problem. RCA can be decomposed into four steps:

* Identify and describe the problem clearly
* Establish a timeline from the normal situation until the problem occurs
* Distinguish between the root cause and other causal factors (e.g., using event correlation)
* Establish a causal graph between the root cause and the problem

RCA generally serves as input to a remediation process whereby corrective actions are taken to p ...
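
The last step, a causal graph linking the root cause to the problem, can be sketched as a simple backward walk. This is an illustrative sketch, not a standard RCA algorithm: the `root_causes` function, the `causes_of` mapping, and the incident data are all hypothetical.

```python
def root_causes(causes_of, problem):
    """Walk the causal graph backward from the problem and return every
    node that has no recorded cause of its own."""
    roots, seen, stack = set(), set(), [problem]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        parents = causes_of.get(node, [])
        if not parents and node != problem:
            roots.add(node)  # nothing upstream: treat as a root cause
        stack.extend(parents)
    return roots


# Hypothetical incident: each effect maps to its direct causes.
causes_of = {
    "service outage": ["database unreachable"],
    "database unreachable": ["disk full"],
    "disk full": ["log rotation disabled"],
}

print(root_causes(causes_of, "service outage"))
```

Intermediate nodes such as "disk full" are causal factors but not roots, because the graph records something upstream of them.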

Predictive Analytics

Predictive analytics encompasses a variety of statistical techniques from data mining, predictive modeling, and machine learning that analyze current and historical facts to make predictions about future or otherwise unknown events. In business, predictive models exploit patterns found in historical and transactional data to identify risks and opportunities. Models capture relationships among many factors to allow assessment of risk or potential associated with a particular set of conditions, guiding decision-making for candidate transactions. The defining functional effect of these technical approaches is that predictive analytics provides a predictive score (probability) for each individual (customer, employee, healthcare patient, product SKU, vehicle, component, machine, or other organizational unit) in order to determine, inform, or influence organizational processes that pertain across large numbers of individuals, such as in marketing, credit risk assessment, fraud det ...

Big Data

Though the term is sometimes used loosely, partly because it lacks a formal definition, the interpretation that seems to best describe big data is a large body of information that could not be comprehended when used only in smaller amounts. In its primary definition, though, big data refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: *volume*, *variety*, and *velocity*. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampl ...

Voice Of The Customer

In marketing, the voice of the customer (VOC) summarizes customers' expectations, preferences and aversions. A widely used form of VOC market research produces a detailed set of customer wants and needs, organized into a hierarchical structure, and then prioritized in terms of relative importance and satisfaction with current alternatives. VOC studies typically consist of both qualitative and quantitative research steps and are generally conducted at the start of any new product, process, or service design initiative in order to better understand the customer's wants and needs, and as the key input for new product definition, Quality Function Deployment (QFD), and the setting of detailed design specifications. Much has been written about this process, and there are many possible ways to gather the information – focus groups, individual interviews, contextual inquiry, ethnographic techniques, conjoint analysis, etc. All involve a series of structured in-depth interviews, whi ...