
Transfer Entropy

Transfer entropy is a non-parametric statistic measuring the amount of directed (time-asymmetric) transfer of information between two random processes. Transfer entropy from a process $X$ to another process $Y$ is the reduction in uncertainty about future values of $Y$ obtained by knowing the past values of $X$, given the past values of $Y$. More specifically, if $X_t$ and $Y_t$ for $t \in \mathbb{N}$ denote two random processes and the amount of information is measured using Shannon's entropy, the transfer entropy can be written as:

$$T_{X \to Y} = H\left( Y_t \mid Y_{t-1:t-L} \right) - H\left( Y_t \mid Y_{t-1:t-L}, X_{t-1:t-L} \right),$$

where $H(X)$ is Shannon's entropy of $X$ and $L$ is the number of past time steps (the history length) taken into account. The above definition of transfer entropy has been extended to other types of entropy measures, such as Rényi entropy. Transfer entropy is conditional mutual information, with the history of the influenced variable $Y_{t-1:t-L}$ in the condition:

$$T_{X \to Y} = I\left( Y_t ; X_{t-1:t-L} \mid Y_{t-1:t-L} \right).$$

Transfer entropy reduces to Granger causality for vector auto-regre ...
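As a rough illustration of the definition above, the sketch below computes a plug-in (frequency-count) estimate of $T_{X \to Y}$ in bits for discrete-valued series, assuming a history length of $L = 1$. The function name `transfer_entropy` and the choice of estimator are illustrative assumptions; plug-in estimates are biased for short series, and practical work typically uses longer histories, bias correction, or significance testing.

```python
import random
from collections import Counter
from math import log2


def transfer_entropy(source, target):
    """Plug-in estimate of T_{source -> target} in bits, history length L = 1.

    Uses T = sum p(y_t, y_{t-1}, x_{t-1})
             * log2[ p(y_t | y_{t-1}, x_{t-1}) / p(y_t | y_{t-1}) ],
    which equals H(Y_t | Y_{t-1}) - H(Y_t | Y_{t-1}, X_{t-1}).
    """
    if len(source) != len(target):
        raise ValueError("series must have equal length")
    # Each sample is (y_t, y_{t-1}, x_{t-1}).
    samples = list(zip(target[1:], target[:-1], source[:-1]))
    n = len(samples)
    c_full = Counter(samples)
    c_past_both = Counter((yp, xp) for _, yp, xp in samples)
    c_self = Counter((yt, yp) for yt, yp, _ in samples)
    c_past_self = Counter(yp for _, yp, _ in samples)

    te = 0.0
    for (yt, yp, xp), count in c_full.items():
        p_joint = count / n
        p_given_both = count / c_past_both[(yp, xp)]
        p_given_self = c_self[(yt, yp)] / c_past_self[yp]
        te += p_joint * log2(p_given_both / p_given_self)
    return te


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.randint(0, 1) for _ in range(10_000)]
    y = [0] + x[:-1]  # y copies x with a one-step delay
    print(transfer_entropy(x, y))  # X -> Y
    print(transfer_entropy(y, x))  # Y -> X
```

When `y` is a one-step-delayed copy of a random binary `x`, the estimate of $T_{X \to Y}$ should be close to 1 bit, while $T_{Y \to X}$ should be close to 0, showing the asymmetry the definition is meant to capture.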

Non-parametric Statistics

Nonparametric statistics is a type of statistical analysis that makes minimal assumptions about the underlying distribution of the data being studied. Often these models are infinite-dimensional, rather than finite-dimensional as in parametric statistics. Nonparametric statistics can be used for descriptive statistics or statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are evidently violated.

Definitions

The term "nonparametric statistics" has been defined imprecisely in the following two ways, among others.

The first meaning of nonparametric involves techniques that do not rely on data belonging to any particular parametric family of probability distributions. These include, among others:

* Methods which are distribution-free, which do not rely on assumptions that the data are drawn from a given parametric family of probability distributions.
* Statistics defined to be a function on a sample, without dependency on ...
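One widely used distribution-free technique is the permutation test. The sketch below is a Monte Carlo two-sample permutation test for a difference in means; it assumes only that group labels are exchangeable under the null hypothesis, not that the data come from any parametric family. The function name and the defaults for `n_resamples` and `seed` are illustrative choices.

```python
import random
from statistics import mean


def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided Monte Carlo permutation test for a difference in means.

    Under the null hypothesis the group labels are exchangeable, so the
    observed statistic is compared with statistics from shuffled labels.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (extreme + 1) / (n_resamples + 1)
```

With two clearly separated groups such as `[1, 2, 3, 4, 5]` and `[101, 102, 103, 104, 105]`, the returned p-value is small; with identical groups every shuffle is at least as extreme as the observed difference of zero, so the p-value is 1.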

Association for Computing Machinery

The Association for Computing Machinery (ACM) is a US-based international learned society for computing. It was founded in 1947 and is the world's largest scientific and educational computing society. The ACM is a non-profit professional membership group, reporting nearly 110,000 student and professional members. Its headquarters are in New York City. The ACM is an umbrella organization for academic and scholarly interests in computer science (informatics). Its motto is "Advancing Computing as a Science & Profession".

History

In 1947, a notice was sent to various people:

> On January 10, 1947, at the Symposium on Large-Scale Digital Calculating Machinery at the Harvard Computation Laboratory, Professor Samuel H. Caldwell of Massachusetts Institute of Technology spoke of the need for an association of those interested in computing machinery, and of the need for communication between them. ... After making some inquiries during May and June, we believe there is ample interest to ...

Google Code

Google Developers (previously Google Code) is Google's site for software development tools, application programming interfaces (APIs), and technical resources. The site contains documentation on using Google developer tools and APIs, including discussion groups and blogs for developers using Google's developer products. There are APIs offered for almost all of Google's popular consumer products, like Google Maps, YouTube, Google Apps, and others. The site also features a variety of developer products and tools built specifically for developers. Google App Engine is a hosting service for web apps. Project Hosting gives users version control for open source code. Google Web Toolkit (GWT) allows developers to create Ajax applications in the Java programming language. The site contains reference information for community-based developer products that Google is involved with, like Android from the Open Handset Alliance and OpenSocial from the OpenSocial Foundation.

Google APIs

Google offers a variety of API ...

Rubin Causal Model

The Rubin causal model (RCM), also known as the Neyman–Rubin causal model, is an approach to the statistical analysis of cause and effect based on the framework of potential outcomes, named after Donald Rubin. The name "Rubin causal model" was first coined by Paul W. Holland. The potential outcomes framework was first proposed by Jerzy Neyman in his 1923 Master's thesis (Neyman, Jerzy. Sur les applications de la théorie des probabilités aux expériences agricoles: Essai des principes. Master's thesis, 1923; excerpts reprinted in English in Statistical Science, Vol. 5, pp. 463–472, translated by D. M. Dabrowska and T. P. Speed), though he discussed it only in the context of completely randomized experiments. Rubin extended it into a general framework for thinking about causation in both observational and experimental studies.

Introduction

The Rubin causal model is based on the idea of potential outcomes. For example, a person would have a particular income at age 4 ...
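To make the potential-outcomes idea concrete, the simulation below gives every unit two potential outcomes, $Y(0)$ without treatment and $Y(1)$ with treatment, so the true average treatment effect can be computed directly; in a real study only one of the two is ever observed for a given unit. A completely randomized assignment then reveals one outcome per unit, and the difference in observed group means estimates the average effect. The outcome distributions, the effect of about 5, and the function name are hypothetical values chosen only for illustration.

```python
import random
from statistics import mean


def simulate_experiment(n=10_000, seed=1):
    """Return (true_ate, estimated_ate) for a simulated randomized experiment."""
    rng = random.Random(seed)
    # Hypothetical potential outcomes: y0 without treatment, y1 with treatment.
    units = []
    for _ in range(n):
        y0 = rng.gauss(50, 10)
        y1 = y0 + 5 + rng.gauss(0, 2)  # unit-level effect varies around 5
        units.append((y0, y1))

    # Only computable in simulation, where both potential outcomes are known.
    true_ate = mean(y1 - y0 for y0, y1 in units)

    # Completely randomized experiment: half the units are treated, and each
    # unit reveals only the potential outcome for the arm it was assigned to.
    treated = set(rng.sample(range(n), n // 2))
    observed_treated = [units[i][1] for i in range(n) if i in treated]
    observed_control = [units[i][0] for i in range(n) if i not in treated]
    estimated_ate = mean(observed_treated) - mean(observed_control)
    return true_ate, estimated_ate
```

Because assignment is random, the difference in means is an unbiased estimate of the average treatment effect, even though no individual unit's effect $Y(1) - Y(0)$ is ever observed.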

Structural Equation Modeling

Structural equation modeling (SEM) is a diverse set of methods used by scientists for both observational and experimental research. SEM is used mostly in the social and behavioral science fields, but it is also used in epidemiology, business, and other fields. A common definition of SEM is, "...a class of methodologies that seeks to represent hypotheses about the means, variances, and covariances of observed data in terms of a smaller number of 'structural' parameters defined by a hypothesized underlying conceptual or theoretical model". SEM involves a model representing how various aspects of some phenomenon are thought to causally connect to one another. Structural equation models often contain postulated causal connections among some latent variables (variables thought to exist but which cannot be directly observed). Additional causal connections link those latent variables to observed variables whose values appear in a data set. The causal connections are represented using ...
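The quoted definition describes covariances of observed data expressed through a smaller set of structural parameters. As a minimal sketch, assuming a single latent variable $\eta$ measured by indicators $x_j = \lambda_j \eta + e_j$, with errors uncorrelated with each other and with $\eta$, the model-implied covariance is $\Sigma_{jk} = \lambda_j \lambda_k \phi$ for $j \ne k$ and $\Sigma_{jj} = \lambda_j^2 \phi + \theta_j$, where $\phi$ is the latent variance and $\theta_j$ the error variances. The function name and the parameter values in the usage example are hypothetical; fitting such a model to data (for example by maximum likelihood) is not shown.

```python
def one_factor_implied_cov(loadings, factor_var, error_vars):
    """Model-implied covariance matrix for a one-factor model.

    Assumes x_j = loadings[j] * eta + e_j, Var(eta) = factor_var,
    Var(e_j) = error_vars[j], with errors uncorrelated with each other
    and with eta.
    """
    p = len(loadings)
    if len(error_vars) != p:
        raise ValueError("need one error variance per indicator")
    return [
        [
            loadings[j] * loadings[k] * factor_var + (error_vars[j] if j == k else 0.0)
            for k in range(p)
        ]
        for j in range(p)
    ]


if __name__ == "__main__":
    # Four indicators give 10 distinct variances/covariances. With the first
    # loading fixed to 1 for scaling, the model has 8 free parameters:
    # 3 loadings, 1 factor variance, and 4 error variances.
    sigma = one_factor_implied_cov([1.0, 0.8, 0.6, 0.4], 2.0, [0.5, 0.5, 0.5, 0.5])
    for row in sigma:
        print([round(v, 3) for v in row])
```

Fixing one loading to 1 is a common scaling convention that makes the latent variable's units identifiable; with four indicators the model then describes 10 observed moments using 8 parameters.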

Causality (physics)

Causality is the relationship between causes and effects. While causality is also a topic studied from the perspectives of philosophy and physics, it is operationalized so that causes of an event must be in the past light cone of the event and ultimately reducible to fundamental interactions. Similarly, a cause cannot have an effect outside its future light cone.

Macroscopic vs microscopic causality

Causality can be defined macroscopically, at the level of human observers, or microscopically, for fundamental events at the atomic level. The strong causality principle forbids information transfer faster than the speed of light; the weak causality principle operates at the microscopic level and need not lead to information transfer. Physical models can obey the weak principle without obeying the strong version.

Macroscopic causality

In classical physics, an effect cannot occur before its cause, which is why solutions such as the advanced time solutions of the Liénard–W ...
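The light-cone condition can be written as a simple check: event $a$ can causally influence event $b$ only if $b$ is later and $c^2 \Delta t^2 \ge |\Delta \mathbf{x}|^2$, so that a signal travelling no faster than light could cover the spatial separation in the available time. The sketch below assumes flat (Minkowski) spacetime and events given as `(t, x, y, z)` tuples in seconds and metres; the function name is illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by definition of the metre)


def in_past_light_cone(a, b, c=SPEED_OF_LIGHT):
    """True if event a = (t, x, y, z) lies in or on the past light cone of event b.

    Assumes flat spacetime; times in seconds, positions in metres.
    """
    dt = b[0] - a[0]
    dist_sq = sum((bi - ai) ** 2 for ai, bi in zip(a[1:], b[1:]))
    # b must be strictly later, and the separation must be timelike or lightlike.
    return dt > 0 and (c * dt) ** 2 >= dist_sq
```

For example, an event at the origin can influence an event 1 second later and $10^8$ m away, but not one 1 second later and $4 \times 10^8$ m away, since light covers only about $3 \times 10^8$ m in that time.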

Causality

Causality is an influence by which one event, process, state, or object (a cause) contributes to the production of another event, process, state, or object (an effect), where the cause is at least partly responsible for the effect, and the effect is at least partly dependent on the cause. The cause of something may also be described as the reason for the event or process. In general, a process can have multiple causes, which are also said to be causal factors for it, and all lie in its past. An effect can in turn be a cause of, or causal factor for, many other effects, which all lie in its future. Some writers have held that causality is metaphysically prior to notions of time and space. Causality is an abstraction that indicates how the world progresses. As such it is a basic concept; it is more apt to be an explanation of other concepts of progression than something to be explained by other more fun ...

Conditional Mutual Information

Conditional (if then) may refer to:

* Causal conditional, if X then Y, where X is a cause of Y
* Conditional probability, the probability of an event A given that another event B has occurred
* Conditional proof, in logic: a proof that asserts a conditional, and proves that the antecedent leads to the consequent
* Material conditional, in propositional calculus, or logical calculus in mathematics
* Relevance conditional, in relevance logic
* Conditional (computer programming), a statement or expression in computer programming languages
* A conditional expression in computer programming languages such as ?:
* Conditions in a contract

Grammar and linguistics

* Conditional mood (or conditional tense), a verb form in many languages
* Conditional sentence, a sentence type used to refer to hypothetical situations and their consequences
  * Indicative conditional, a conditional sentence expressing "if A then B" in a natural language
  * Counterfactual conditional, a conditional sentence indicating what would b ...

Mutual Information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable. Not limited to real-valued random variables and linear dependence like the correlation coefficient, MI is more general and determines how different the joint distribution of the pair $(X, Y)$ is from the product of the marginal distributions of $X$ and ...
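For discrete variables the definition can be computed directly as $I(X;Y) = \sum_{x,y} p(x,y) \log_2 \frac{p(x,y)}{p(x)\,p(y)}$, which is zero exactly when the joint distribution equals the product of the marginals. The sketch assumes the joint probability mass function is supplied as a dictionary keyed by `(x, y)` pairs; that input format and the function name are illustrative choices. Base-2 logarithms give the result in shannons (bits).

```python
from math import log2


def mutual_information(joint):
    """I(X;Y) in bits from a joint pmf given as a dict {(x, y): p}."""
    # Marginal distributions p(x) and p(y).
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p
        py[y] = py.get(y, 0.0) + p
    # Sum over outcomes with nonzero probability (0 * log 0 is taken as 0).
    return sum(
        p * log2(p / (px[x] * py[y]))
        for (x, y), p in joint.items()
        if p > 0
    )
```

Two independent fair bits give 0 bits of mutual information, while a fair bit and an exact copy of it give 1 bit.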

Directed Information

Directed information is an information theory measure that quantifies the information flow from the random string $X^n = (X_1, X_2, \dots, X_n)$ to the random string $Y^n = (Y_1, Y_2, \dots, Y_n)$. The term directed information was coined by James Massey and is defined as

$$I(X^n \to Y^n) \triangleq \sum_{i=1}^n I(X^i; Y_i \mid Y^{i-1}),$$

where $I(X^i; Y_i \mid Y^{i-1})$ is the conditional mutual information $I(X_1, X_2, \dots, X_i; Y_i \mid Y_1, Y_2, \dots, Y_{i-1})$. Directed information has applications to problems where causality plays an important role, such as the capacity of channels with feedback, capacity of discrete memoryless networks, capacity of networks with in-block memory, gambling with causal side information, compression with causal side information, real-time control communication settings, and statistical physics.

Causal conditioning

The essence of directed information is causal conditioning. The probability of $x^n$ causally conditioned on $y^n$ is defined as

$$P(x^n \| y^n) \triangleq \prod_{i=1}^n P(x_i \mid x^{i-1}, y^i).$$

This is s ...
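For short sequences, Massey's definition can be evaluated by brute force from a joint probability mass function of $(X^n, Y^n)$, summing the conditional mutual information terms $I(X^i; Y_i \mid Y^{i-1})$. The sketch below assumes the joint pmf is given as a dictionary mapping `(x_tuple, y_tuple)` pairs to probabilities; that representation and the helper names `_marginal` and `directed_information` are hypothetical. Its cost grows exponentially with $n$, so it is meant only to illustrate the formula.

```python
from collections import defaultdict
from math import log2


def _marginal(joint, key):
    """Marginalize a joint pmf {outcome: p} onto key(outcome)."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[key(outcome)] += p
    return out


def directed_information(joint, n):
    """I(X^n -> Y^n) in bits, computed as sum_{i=1}^n I(X^i; Y_i | Y^{i-1}).

    joint maps (x_tuple, y_tuple), each of length n, to a probability.
    """
    total = 0.0
    for i in range(1, n + 1):
        # A = X^i (inputs up to time i), B = Y_i, C = Y^{i-1} (past outputs).
        p_abc = _marginal(joint, lambda o: (o[0][:i], o[1][i - 1], o[1][: i - 1]))
        p_ac = _marginal(joint, lambda o: (o[0][:i], o[1][: i - 1]))
        p_bc = _marginal(joint, lambda o: (o[1][i - 1], o[1][: i - 1]))
        p_c = _marginal(joint, lambda o: o[1][: i - 1])
        # I(A; B | C) = sum p(a,b,c) log2[ p(a,b,c) p(c) / (p(a,c) p(b,c)) ]
        for (a, b, c), p in p_abc.items():
            if p > 0:
                total += p * log2(p * p_c[c] / (p_ac[(a, c)] * p_bc[(b, c)]))
    return total
```

For a noiseless channel $Y_i = X_i$ with independent, uniformly distributed input bits and $n = 2$, each term contributes 1 bit, giving $I(X^2 \to Y^2) = 2$ bits; if $Y^n$ is independent of $X^n$, every term is zero.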

Gambling

Gambling (also known as betting or gaming) is the wagering of something of value ("the stakes") on a random event with the intent of winning something else of value, where instances of strategy are discounted. Gambling thus requires three elements to be present: consideration (an amount wagered), risk (chance), and a prize. The outcome of the wager is often immediate, such as a single roll of dice, a spin of a roulette wheel, or a horse crossing the finish line, but longer time frames are also common, allowing wagers on the outcome of a future sports contest or even an entire sports season. The term "gaming" in this context typically refers to instances in which the activity has been specifically permitted by law. The two words are not mutually exclusive; i.e., a "gaming" company offers (legal) "gambling" activities to the public and may be regulated by one of many gaming control boards, for example, the ...

Channel Capacity

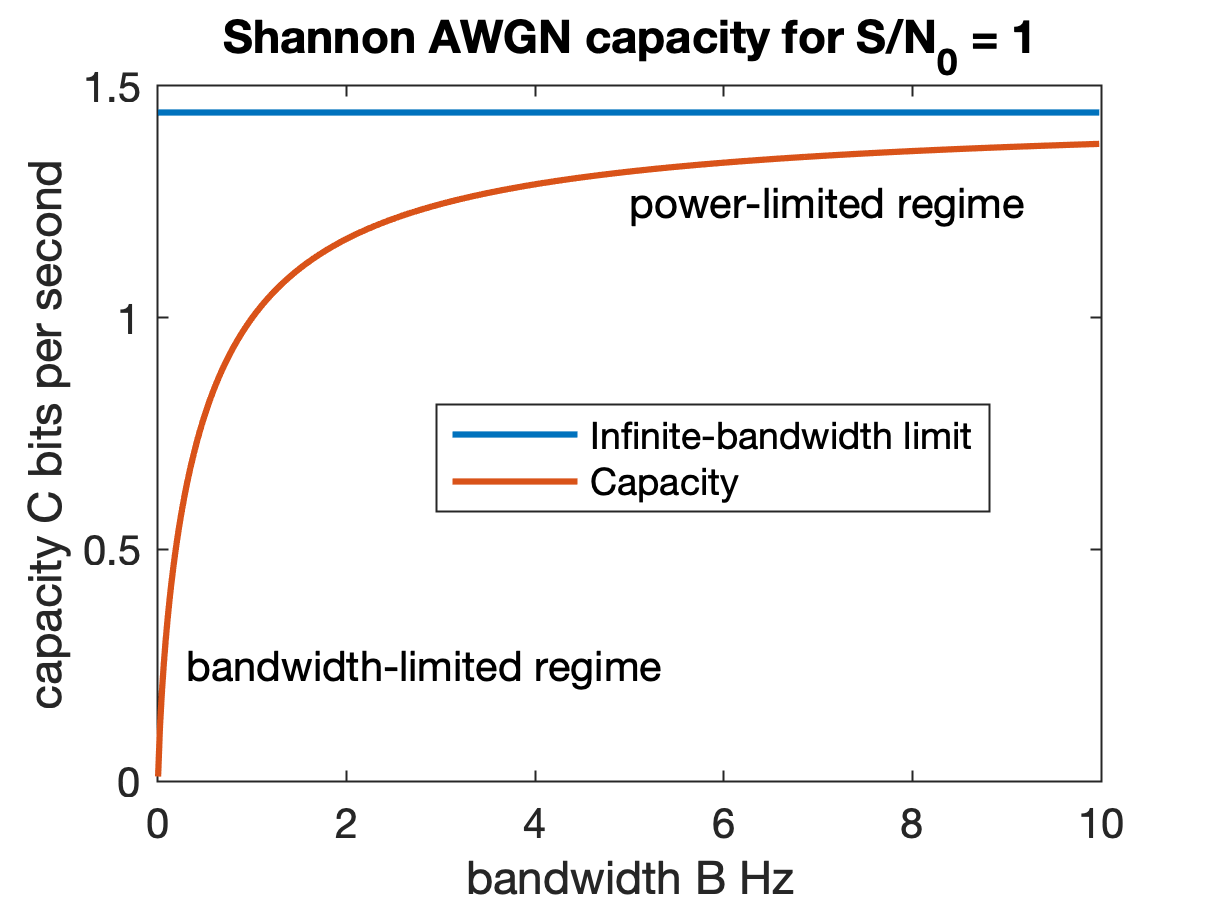

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel. Following the terms of the noisy-channel coding theorem, the channel capacity of a given channel is the highest information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability. Information theory, developed by Claude E. Shannon in 1948, defines the notion of channel capacity and provides a mathematical model by which it may be computed. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution. The notion of channel capacity has been central to the development of modern wireline and wireless communication system ...
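As a small numerical check of the statement that capacity is the maximum of the mutual information over input distributions, the sketch below uses a binary symmetric channel with crossover probability $\varepsilon$, for which the maximum is known in closed form: $C = 1 - h_2(\varepsilon)$ bits per channel use, where $h_2$ is the binary entropy function. It simply evaluates $I(X;Y) = h_2(P(Y=1)) - h_2(\varepsilon)$ on a grid of input distributions; this brute-force search is an illustrative choice, and general discrete channels are usually handled with an iterative method such as the Blahut–Arimoto algorithm. The function names are hypothetical.

```python
from math import log2


def h2(p):
    """Binary entropy function in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)


def bsc_mutual_information(q, eps):
    """I(X;Y) for a binary symmetric channel with crossover probability eps
    and input distribution P(X = 1) = q, using I = H(Y) - H(Y | X)."""
    p_y1 = q * (1 - eps) + (1 - q) * eps
    return h2(p_y1) - h2(eps)


def bsc_capacity_grid(eps, steps=1000):
    """Approximate C = max_q I(X;Y) by evaluating q on a uniform grid."""
    return max(bsc_mutual_information(k / steps, eps) for k in range(steps + 1))
```

The grid maximum is attained at the uniform input $q = 1/2$, matching the closed form: about 0.531 bits per use for $\varepsilon = 0.1$, 1 bit for a noiseless channel, and 0 bits for $\varepsilon = 0.5$, where the output is independent of the input.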