|

Text Analytics

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from plain text, text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as pattern recognition, statistical pattern learning. According to Hotho et al. (2005), there are three perspectives of text mining: information extraction, data mining, and knowledge discovery in databases (KDD). Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and interpretation of the output. 'High qu ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Information

Information is an Abstraction, abstract concept that refers to something which has the power Communication, to inform. At the most fundamental level, it pertains to the Interpretation (philosophy), interpretation (perhaps Interpretation (logic), formally) of that which may be sensed, or their abstractions. Any natural process that is not completely random and any observable pattern in any Media (communication), medium can be said to convey some amount of information. Whereas digital signals and other data use discrete Sign (semiotics), signs to convey information, other phenomena and artifacts such as analog signals, analogue signals, poems, pictures, music or other sounds, and current (fluid), currents convey information in a more continuous form. Information is not knowledge itself, but the meaning (philosophy), meaning that may be derived from a representation (mathematics), representation through interpretation. The concept of ''information'' is relevant or connected t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Sentiment Analysis

Sentiment analysis (also known as opinion mining or emotion AI) is the use of natural language processing, text analysis, computational linguistics, and biometrics to systematically identify, extract, quantify, and study affective states and subjective information. Sentiment analysis is widely applied to voice of the customer materials such as reviews and survey responses, online and social media, and healthcare materials for applications that range from marketing to customer service to clinical medicine. With the rise of deep language models, such as RoBERTa, also more difficult data domains can be analyzed, e.g., news texts where authors typically express their opinion/sentiment less explicitly.Hamborg, Felix; Donnay, Karsten (2021)"NewsMTSC: A Dataset for (Multi-)Target-dependent Sentiment Classification in Political News Articles" "Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume" Simple cases * "Coron ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Document

A document is a writing, written, drawing, drawn, presented, or memorialized representation of thought, often the manifestation of nonfiction, non-fictional, as well as fictional, content. The word originates from the Latin ', which denotes a "teaching" or "lesson": the verb ' denotes "to teach". In the past, the word was usually used to denote written proof useful as evidence of a truth or fact. In the computer age, Computer Age, "document" usually denotes a primarily textual computer file, including its structure and format, e.g. fonts, colors, and Computer-generated imagery, images. Contemporarily, "document" is not defined by its transmission medium, e.g., paper, given the existence of electronic documents. "Documentation" is distinct because it has more denotations than "document". Documents are also distinguished from "Realia (library science), realia", which are three-dimensional objects that would otherwise satisfy the definition of "document" because they memorialize ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Natural Language

A natural language or ordinary language is a language that occurs naturally in a human community by a process of use, repetition, and change. It can take different forms, typically either a spoken language or a sign language. Natural languages are distinguished from constructed and formal languages such as those used to program computers or to study logic. Defining natural language Natural languages include ones that are associated with linguistic prescriptivism or language regulation. ( Nonstandard dialects can be viewed as a wild type in comparison with standard languages.) An official language with a regulating academy such as Standard French, overseen by the , is classified as a natural language (e.g. in the field of natural language processing), as its prescriptive aspects do not make it constructed enough to be a constructed language or controlled enough to be a controlled natural language. Natural language are different from: * artificial and constructed la ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of Rigour#Mathematics, mathematically rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use Conditional (computer programming), conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a Heuristic (computer science), heuristic is an approach to solving problems without well-defined correct or optimal results.David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004, For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural-language understanding, natural language understanding, and natural language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Predictive Analytics

Predictive analytics encompasses a variety of Statistics, statistical techniques from data mining, Predictive modelling, predictive modeling, and machine learning that analyze current and historical facts to make predictions about future or otherwise unknown events. In business, predictive models exploit Pattern detection, patterns found in historical and transactional data to identify risks and opportunities. Models capture relationships among many factors to allow assessment of risk or potential associated with a particular set of conditions, guiding decision-making for candidate transactions. The defining functional effect of these technical approaches is that predictive analytics provides a predictive score (probability) for each individual (customer, employee, healthcare patient, product SKU, vehicle, component, machine, or other organizational unit) in order to determine, inform, or influence organizational processes that pertain across large numbers of individuals, such as ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

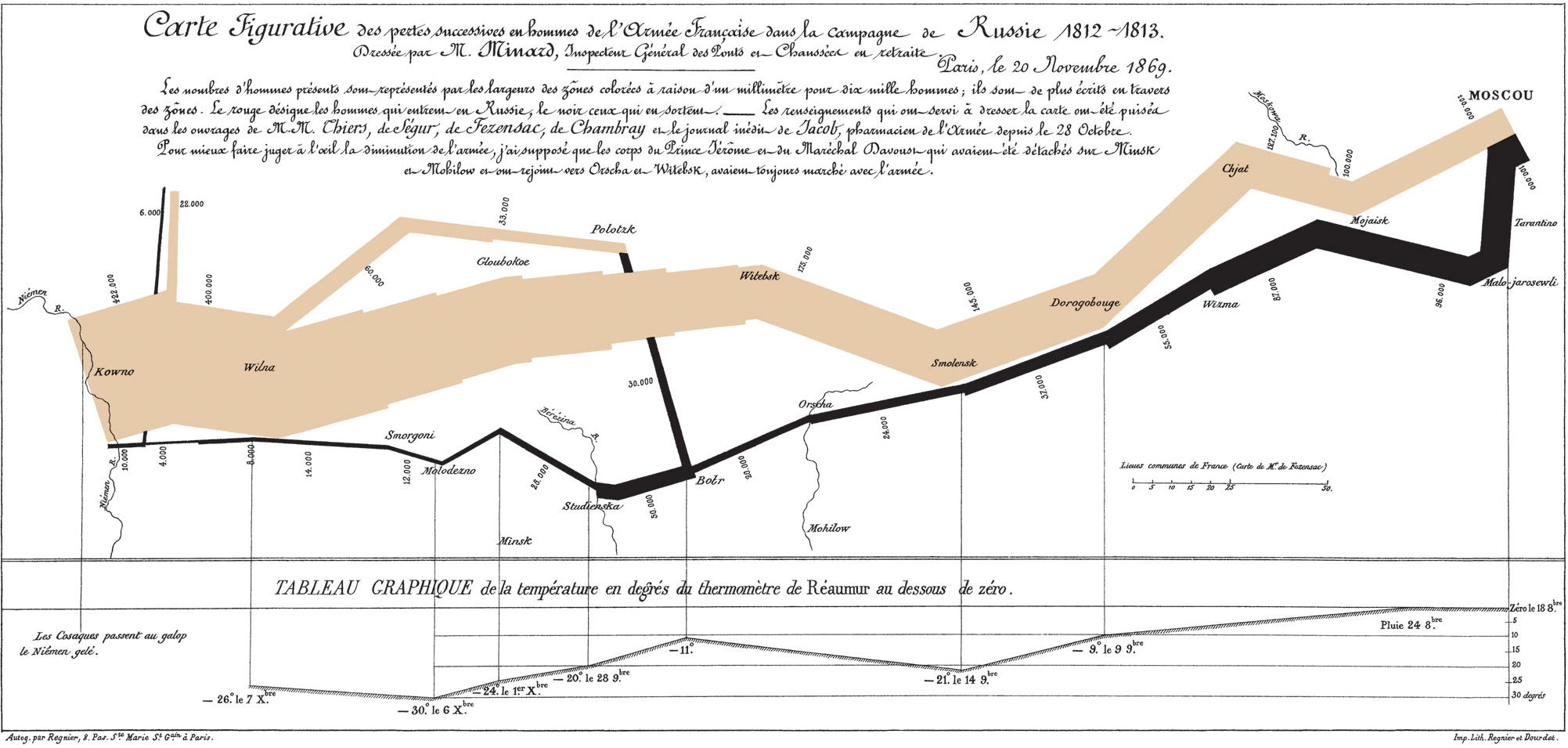

Information Visualization

Data and information visualization (data viz/vis or info viz/vis) is the practice of designing and creating Graphics, graphic or visual Representation (arts), representations of a large amount of complex quantitative and qualitative data and information with the help of static, dynamic or interactive visual items. Typically based on data and information collected from a certain domain of expertise, these visualizations are intended for a broader audience to help them visually explore and discover, quickly understand, interpret and gain important insights into otherwise difficult-to-identify structures, relationships, correlations, local and global patterns, trends, variations, constancy, clusters, outliers and unusual groupings within data (''exploratory visualization''). When intended for the general public (mass communication) to convey a concise version of known, specific information in a clear and engaging manner (''presentational'' or ''explanatory visualization''), it is t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Annotation

An annotation is extra information associated with a particular point in a document or other piece of information. It can be a note that includes a comment or explanation. Annotations are sometimes presented Marginalia, in the margin of book pages. For annotations of different digital media, see web annotation and text annotation. Literature, grammar and educational purposes Practising visually Annotation Practices are highlighting a phrase or sentence and including a comment, circling a word that needs defining, posing a question when something is not fully understood and writing a short summary of a key section. It also invites students to "(re)construct a history through material engagement and exciting DIY (Do-It-Yourself) annotation practices." Annotation practices that are available today offer a remarkable set of tools for students to begin to work, and in a more collaborative, connected way than has been previously possible. Text and film annotation Text and Film A ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Tag (metadata)

In information systems, a tag is a keyword or term assigned to a piece of information (such as an Internet bookmark, multimedia, database record, or computer file). This kind of metadata helps describe an item and allows it to be found again by browsing or searching. Tags are generally chosen informally and personally by the item's creator or by its viewer, depending on the system, although they may also be chosen from a controlled vocabulary. Tagging was popularized by websites associated with Web 2.0 and is an important feature of many Web 2.0 services. It is now also part of other database systems, desktop applications, and operating systems. Overview People use tags to aid classification, mark ownership, note boundaries, and indicate online identity. Tags may take the form of words, images, or other identifying marks. An analogous example of tags in the physical world is museum object tagging. People were using textual keywords to classify information and objec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Lexical Analysis

Lexical tokenization is conversion of a text into (semantically or syntactically) meaningful ''lexical tokens'' belonging to categories defined by a "lexer" program. In case of a natural language, those categories include nouns, verbs, adjectives, punctuations etc. In case of a programming language, the categories include identifiers, operators, grouping symbols, data types and language keywords. Lexical tokenization is related to the type of tokenization used in large language models (LLMs) but with two differences. First, lexical tokenization is usually based on a lexical grammar, whereas LLM tokenizers are usually probability-based. Second, LLM tokenizers perform a second step that converts the tokens into numerical values. Rule-based programs A rule-based program, performing lexical tokenization, is called ''tokenizer'', or ''scanner'', although ''scanner'' is also a term for the first stage of a lexer. A lexer forms the first phase of a compiler frontend in processing. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

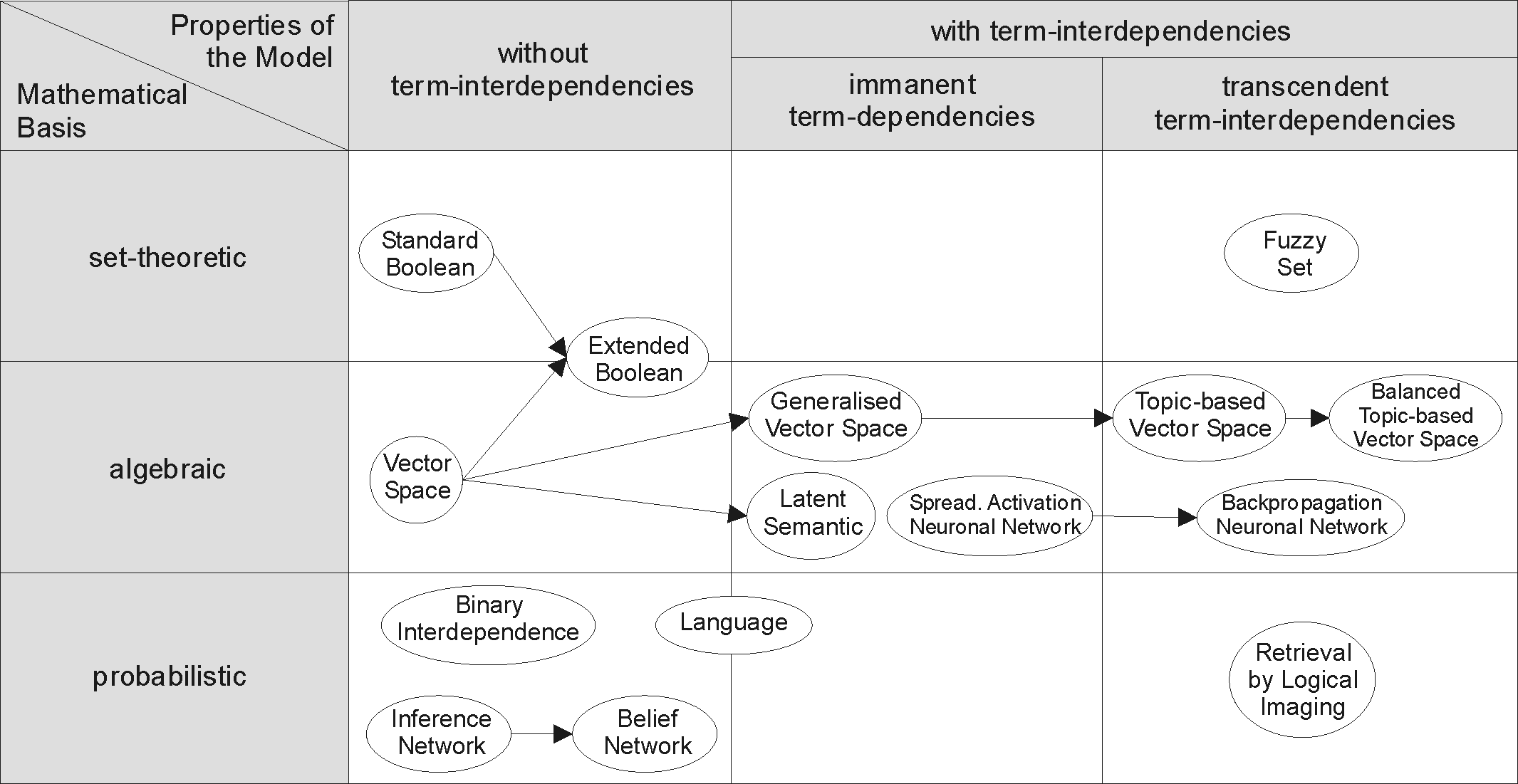

Information Retrieval

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an Information needs, information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text search, full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications. Overview An information retrieval process begins when a user enters a query into the sys ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |