Slowly Changing Dimension

In data management and data warehousing, a slowly changing dimension (SCD) is a dimension that stores data which, while generally stable, may change over time, often in an unpredictable manner. This contrasts with a rapidly changing dimension, such as transactional parameters like customer ID, product ID, quantity, and price, which undergo frequent updates. Common examples of SCDs include geographical locations, customer details, or product attributes. Various methodologies address the complexities of SCD management. The Kimball Toolkit has popularized a categorization of techniques for handling SCD attributes as Types 1 through 6. These range from simple overwrites (Type 1), to creating new rows for each change (Type 2), adding new attributes (Type 3), maintaining separate history tables (Type 4), or employing hybrid approaches (Type 6 and 7). Type 0 is available to model an attribute as not really changing at all. Each type offers a trade-off between historical accuracy, data ...
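The difference between overwriting (Type 1) and adding a new row per change (Type 2) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `CustomerRow` class, its fields, and the two helper functions are hypothetical names, not part of any toolkit, and a real warehouse would typically do this in SQL with surrogate keys.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CustomerRow:
    customer_id: int                  # natural key (hypothetical)
    city: str                         # the slowly changing attribute
    valid_from: date
    valid_to: Optional[date] = None   # None marks the current row


def apply_type1(rows: List[CustomerRow], customer_id: int, new_city: str) -> None:
    """Type 1: overwrite the attribute in place, keeping no history."""
    for row in rows:
        if row.customer_id == customer_id:
            row.city = new_city


def apply_type2(rows: List[CustomerRow], customer_id: int,
                new_city: str, change_date: date) -> None:
    """Type 2: close the current row and append a new current row."""
    for row in rows:
        if row.customer_id == customer_id and row.valid_to is None:
            if row.city == new_city:
                return                # nothing changed, keep the row open
            row.valid_to = change_date
    rows.append(CustomerRow(customer_id, new_city, change_date))


type1_rows = [CustomerRow(1, "Lyon", date(2020, 1, 1))]
apply_type1(type1_rows, 1, "Paris")

type2_rows = [CustomerRow(1, "Lyon", date(2020, 1, 1))]
apply_type2(type2_rows, 1, "Paris", date(2024, 6, 1))
```

After these calls, `type1_rows` still holds a single row (the old city is lost), while `type2_rows` holds two rows: the closed "Lyon" row and the open "Paris" row.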

Data Management

Data management comprises all disciplines related to handling data as a valuable resource; it is the practice of managing an organization's data so it can be analyzed for decision making.

Concept

The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ...

Data Element

In metadata, the term data element is an atomic unit of data that has precise meaning or precise semantics. A data element has:

1. An identification such as a data element name
2. A clear data element definition
3. One or more representation terms
4. Optional enumerated values (codes)
5. A list of synonyms to data elements in other metadata registries (a synonym ring)

Data element usage can be discovered by inspection of software applications or application data files through a process of manual or automated application discovery and understanding. Once data elements are discovered they can be registered in a metadata registry.

In telecommunications, the term data element has the following components:

1. A named unit of data that, in some contexts, is considered indivisible and in other contexts may consist of data items.
2. A named identifier of each of the entities and their attributes that are represented in a database.
3. A basic unit of information built on standard stru ...

Business Process Management

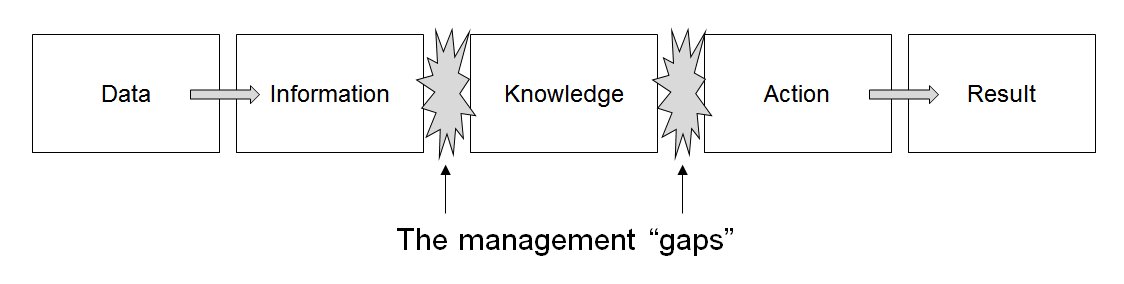

Business process management (BPM) is the discipline in which people use various methods to discover, model, analyze, measure, improve, optimize, and automate business processes. Any combination of methods used to manage a company's business processes is BPM. Processes can be structured and repeatable or unstructured and variable. Though not required, enabling technologies are often used with BPM. As an approach, BPM sees processes as important assets of an organization that must be understood, managed, and developed to announce and deliver value-added products and services to clients or customers. This approach closely resembles other total quality management or continual improvement process methodologies. ISO 9000:2015 promotes the process approach to managing an organization. ...promotes the adoption of a process approach when developing, implementing and improving the effe ...

Operational Planning

Operational planning (OP) is the process of implementing strategic plans and objectives to reach specific goals. In *Introduction to Management and Organizational Behavior*, Barbara Carlin and Marina Sebastijanovic suggest that operational planning is one of the four basic types of planning involved in organizational management (Barbara Carlin and Marina Sebastijanovic, "1.2 Planning", in *Introduction to Management and Organizational Behavior*, OpenStax, subject to Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, accessed on 6 January 2025).

Function and preparation

An operational plan (or operations plan) describes the specific steps in any given strategic planning model and explains how and what portion of resources will be put into operation during a given operational period: in the case of a commercial or government budget, a fiscal year. An operational plan is the basis for, and justification of, an annual operating budget needed to ...

Multitenancy

Software multitenancy is a software architecture in which a single instance of software runs on a server and serves multiple tenants. Systems designed in such manner are "shared" (rather than "dedicated" or "isolated"). A tenant is a group of users who share a common access with specific privileges to the software instance. With a multitenant architecture, a software application is designed to provide every tenant a dedicated share of the instance, including its data, configuration, user management, tenant individual functionality and non-functional properties. Multitenancy contrasts with multi-instance architectures, where separate software instances operate on behalf of different tenants. Some commentators regard multitenancy as an important feature of cloud computing.

Adoption

History of multitenant applications

Multitenant applications have evolved from, and combine some characteristics of, three types of services:

1. Timesharing: From the 1960s companies rented spac ...

Entity–attribute–value Model

An entity–attribute–value model (EAV) is a data model optimized for the space-efficient storage of sparse (or *ad-hoc*) property or data values, intended for situations where runtime usage patterns are arbitrary, subject to user variation, or otherwise unforeseeable using a fixed design. The use-case targets applications which offer a large or rich system of defined property types, which are in turn appropriate to a wide set of entities, but where typically only a small, specific selection of these are instantiated (or persisted) for a given entity. Therefore, this type of data model relates to the mathematical notion of a sparse matrix. EAV is also known as object–attribute–value model, vertical database model, and open schema.

Data structure

This data representation is analogous to space-efficient methods of storing a sparse matrix, where only non-empty values are stored. In an EAV data model, each attribute–value pair is a fact describing an entity, and a row in ...
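As a rough illustration of the idea that only non-empty values are stored, the sketch below keeps one tuple per fact and pivots the tuples back into one record per entity. The entity identifiers, attribute names, and the `pivot` helper are hypothetical and chosen only for this example.

```python
from collections import defaultdict

# One tuple per fact: (entity, attribute, value).
# Attributes an entity does not have are simply absent, as in a sparse matrix.
eav_rows = [
    ("patient:1", "blood_pressure", "120/80"),
    ("patient:1", "allergy", "penicillin"),
    ("patient:2", "heart_rate", 72),
]


def pivot(rows):
    """Group attribute-value pairs by entity into one dict per entity."""
    entities = defaultdict(dict)
    for entity, attribute, value in rows:
        entities[entity][attribute] = value
    return dict(entities)


records = pivot(eav_rows)
```

Here `records["patient:2"]` contains only `heart_rate`; no placeholder is stored for the attributes that entity lacks.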

Log Trigger

In relational databases, the log trigger or history trigger is a mechanism for automatically recording information about changes (inserting, updating, and/or deleting rows) in a database table. It is a particular technique for change data capture, and in data warehousing for dealing with slowly changing dimensions.

Definition

Suppose there is a table which we want to audit. This table contains the following columns:

    Column1, Column2, ..., Columnn

The column Column1 is assumed to be the primary key. These columns are defined to have the following types:

    Type1, Type2, ..., Typen

The log trigger works by writing the changes (INSERT, UPDATE and DELETE operations) on the table to another table, the history table, defined as follows:

    CREATE TABLE HistoryTable (
        Column1   Type1,
        Column2   Type2,
        :         :
        Columnn   Typen,
        StartDate DATETIME,
        EndDate   DATETIME
    )

As shown above, this new table contains the same columns as the original table, and additionally ...
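A minimal sketch of this mechanism, using Python's built-in `sqlite3` module, is shown below. SQLite is an assumption made for the sake of a runnable example (the description above names no particular DBMS), and the `product` table, the `product_history` table, and the trigger names are hypothetical. Only the `StartDate` and `EndDate` columns follow the history table definition above; treating a NULL `EndDate` as "current version" is also an assumption of this sketch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE product (id INTEGER PRIMARY KEY, price REAL);

CREATE TABLE product_history (
    id INTEGER, price REAL,
    StartDate TEXT,
    EndDate   TEXT      -- NULL marks the current version (assumption)
);

-- INSERT: open a new history row.
CREATE TRIGGER product_ins AFTER INSERT ON product BEGIN
    INSERT INTO product_history VALUES (NEW.id, NEW.price, datetime('now'), NULL);
END;

-- UPDATE: close the open row, then open a row with the new values.
CREATE TRIGGER product_upd AFTER UPDATE ON product BEGIN
    UPDATE product_history SET EndDate = datetime('now')
     WHERE id = OLD.id AND EndDate IS NULL;
    INSERT INTO product_history VALUES (NEW.id, NEW.price, datetime('now'), NULL);
END;

-- DELETE: close the open row without opening a new one.
CREATE TRIGGER product_del AFTER DELETE ON product BEGIN
    UPDATE product_history SET EndDate = datetime('now')
     WHERE id = OLD.id AND EndDate IS NULL;
END;
""")

conn.execute("INSERT INTO product VALUES (1, 9.99)")
conn.execute("UPDATE product SET price = 12.50 WHERE id = 1")
conn.execute("DELETE FROM product WHERE id = 1")

# Each tuple: (id, price, 1 if the version is still open else 0)
history = conn.execute(
    "SELECT id, price, EndDate IS NULL FROM product_history ORDER BY rowid"
).fetchall()
remaining = conn.execute("SELECT COUNT(*) FROM product").fetchone()[0]
```

After the three statements the audited table is empty, while the history table keeps both price versions, each with an `EndDate` filled in.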

Change Data Capture

In databases, change data capture (CDC) is a set of software design patterns used to determine and track the data that has changed (the "deltas") so that action can be taken using the changed data. The result is a delta-driven dataset. CDC is an approach to data integration that is based on the identification, capture and delivery of the changes made to enterprise data sources. For instance, it can be used for incremental update of data loading. CDC occurs often in data warehouse environments since capturing and preserving the state of data across time is one of the core functions of a data warehouse, but CDC can be utilized in any database or data repository system.

Methodology

System developers can set up CDC mechanisms in a number of ways and in any one or a combination of system layers from application logic down to physical storage. In a simplified CDC context, one computer system has data believed to have changed from a previous point in time, and a second computer syste ...
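One simple way to obtain the deltas is to compare two snapshots of the same table. The sketch below assumes each snapshot is a dict keyed by primary key; the `capture_changes` function and the sample data are hypothetical, and snapshot comparison is only one of several possible CDC mechanisms.

```python
def capture_changes(previous, current):
    """Compare two snapshots keyed by primary key and return the deltas."""
    deltas = []
    for key, row in current.items():
        if key not in previous:
            deltas.append(("insert", key, row))
        elif previous[key] != row:
            deltas.append(("update", key, row))
    for key in previous:
        if key not in current:
            deltas.append(("delete", key, None))
    return deltas


before = {1: {"name": "Ada"}, 2: {"name": "Bob"}}
after = {1: {"name": "Ada L."}, 3: {"name": "Cy"}}
deltas = capture_changes(before, after)
```

A downstream system would then apply only `deltas` instead of reloading the full table.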

Extract, Transform, Load

Extract, transform, load (ETL) is a three-phase computing process where data is *extracted* from an input source, *transformed* (including cleaning), and *loaded* into an output data container. The data can be collected from one or more sources and it can also be output to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems, enforces data type and data validity standards, and ensures the data conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process is often used in data warehousing. ETL sys ...
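The three phases can be illustrated with a small in-memory pipeline. This is a sketch only: the CSV text, the `id` and `amount` columns, and the use of a plain list as the output container are assumptions made for the example.

```python
import csv
import io

# Hypothetical input source: a small CSV with one invalid row.
RAW = "id,amount\n1, 10.5 \n2,not-a-number\n3,7\n"


def extract(text):
    """Extract: read rows from the input source as dicts of strings."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: enforce data types and drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            clean.append({"id": int(row["id"]),
                          "amount": float(row["amount"].strip())})
        except ValueError:
            continue  # invalid row is skipped rather than loaded
    return clean


def load(rows, target):
    """Load: append the cleaned rows to the output container."""
    target.extend(rows)
    return target


warehouse = load(transform(extract(RAW)), [])
```

Only the two valid rows reach `warehouse`; the row with a non-numeric amount is rejected during the transform phase.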

Temporal Database

A temporal database stores data relating to time instances. It offers temporal data types and stores information relating to past, present and future time. Temporal databases can be uni-temporal, bi-temporal or tri-temporal. More specifically the temporal aspects usually include valid time, transaction time and/or decision time.

* Valid time is the time period during or event time at which a fact is true in the real world.
* Transaction time is the time at which a fact was recorded in the database.
* Decision time is the time at which the decision was made about the fact. Used to keep a history of decisions about valid times.

Types

Uni-temporal

A uni-temporal database has one axis of time, either the validity range or the system time range.

Bi-temporal

A bi-temporal database has two axes of time:

* Valid time
* Transaction time or decision time

Tri-temporal

A tri-temporal database has three axes of time:

* Valid time
* Transaction time
* Decision time

This approach intro ...
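The two axes of a bi-temporal model can be sketched with a record that carries both a valid-time range and a transaction time. The `Fact` class, its field names, the far-future end date used for open ranges, and the `as_of` query are all hypothetical; a real temporal database would expose this through its own data types and query syntax.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Fact:
    value: str
    valid_from: date    # valid time: start of the period (inclusive)
    valid_to: date      # valid time: end of the period (exclusive)
    recorded_on: date   # transaction time: when the fact was recorded


def as_of(facts, valid_on, known_on):
    """Return the value valid on `valid_on` as recorded by `known_on`."""
    candidates = [
        f for f in facts
        if f.valid_from <= valid_on < f.valid_to and f.recorded_on <= known_on
    ]
    if not candidates:
        return None
    # The most recently recorded matching fact wins.
    return max(candidates, key=lambda f: f.recorded_on).value


OPEN_END = date(9999, 12, 31)  # stand-in for "no known end" (assumption)
facts = [
    Fact("Berlin", date(2020, 1, 1), OPEN_END, recorded_on=date(2020, 1, 5)),
    # A move that took effect in March but was only recorded in June.
    Fact("Munich", date(2020, 3, 1), OPEN_END, recorded_on=date(2020, 6, 1)),
]

known_in_may = as_of(facts, date(2020, 4, 1), known_on=date(2020, 5, 1))
known_in_july = as_of(facts, date(2020, 4, 1), known_on=date(2020, 7, 1))
```

Asking about the same valid date gives different answers depending on the transaction time: the database "knew" Berlin in May and Munich in July.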