Replication Crisis

The replication crisis, also known as the reproducibility or replicability crisis, refers to the growing number of published scientific results that other researchers have been unable to reproduce or verify. Because the reproducibility of empirical results is an essential part of the scientific method, such failures undermine the credibility of theories that build on them and can call into question substantial parts of scientific knowledge. The replication crisis is frequently discussed in relation to psychology and medicine, where considerable efforts have been undertaken to reinvestigate the results of classic studies to determine whether they are reliable and, if they turn out not to be, the reasons for the failure. Data strongly indicate that other natural and social sciences are also affected. The phrase "replication crisis" was coined in the early 2010s as part of a growing awareness of the problem. Considerations of causes and remedies have given rise ...

Ioannidis (2005) Why Most Published Research Findings Are False

Ioannidis or Ioannides is a Greek surname. The female version of the name is Ioannidou or Ioannides. ''Ioannidis'' or ''Ioannides'' is a patronymic surname which literally means "the son of Ioannis (Yiannis)", thus making it equivalent to English Johnson. Notable people with the surname Ioannidis or Ioannides include:

Men

* Alkinoos Ioannidis (born 1969), Cypriot composer and singer
* Andreas Ioannides (footballer) (born 1975), Cypriot football player
* Dimitrios Ioannides (1923–2010), military officer involved in the Greek military junta of 1967–1974
* Evgenios Ioannidis (born 2001), Greek chess player
* Fotis Ioannidis (born 2000), Greek footballer
* Georgios Ioannidis (born 1988), Greek footballer
* Giannis Ioannidis (1945–2023), former basketball coach and politician
* John Ioannidis (born 1965), medical researcher
* Matt Ioannidis (born 1994), American football player
* Nikolaos Ioannidis (born 1994), Greek footballer
* Paul Ioannidis (1 ...

Paul Meehl

Paul Everett Meehl (3 January 1920 – 14 February 2003) was an American clinical psychologist. He was the Hathaway and Regents' Professor of Psychology at the University of Minnesota, and past president of the American Psychological Association. A ''Review of General Psychology'' survey, published in 2002, ranked Meehl as the 74th most cited psychologist of the 20th century, in a tie with Eleanor J. Gibson. Throughout his nearly 60-year career, Meehl made seminal contributions to psychology, including empirical studies and theoretical accounts of construct validity, schizophrenia etiology, psychological assessment, behavioral prediction, metascience, and philosophy of science.

Biography: Childhood

Paul Meehl was born January 3, 1920, in Minneapolis, Minnesota, to Otto and Blanche Swedal. His family name "Meehl" was his stepfather's. When he was age 16, his mother died as the result of poor medical care which, according to Meehl, greatly affected his faith in the expertise o ...

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when a result at least as "extreme" would be very infrequent if the null hypothesis were true. More precisely, a study's defined significance level, denoted by $\alpha$, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is said to be ''statistically significant'', by the standards of the study, when $p \le \alpha$. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error al ...
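As a rough illustration of the decision rule that compares a p-value with a significance level, the sketch below computes a two-sided p-value for a z statistic under a standard normal null distribution. The function names `two_sided_p_value` and `is_significant`, and the choice of a z-test, are assumptions made for this example only.

```python
from statistics import NormalDist

def two_sided_p_value(z: float) -> float:
    """Probability, under a standard normal null, of a statistic at least as extreme as z."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))

def is_significant(p: float, alpha: float = 0.05) -> bool:
    """Apply the rule p <= alpha; alpha is meant to be fixed before data collection."""
    return p <= alpha

# Hypothetical observed z statistic, chosen only for illustration.
p = two_sided_p_value(2.5)
print(f"p = {p:.4f}, significant at the 5% level: {is_significant(p)}")
```

A smaller `alpha` makes the rule stricter: the same p-value can pass at 5% and fail at 1%.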

Distributions Of The Observed Signal Strength V2

Distribution may refer to:

Mathematics

* Distribution (mathematics), generalized functions used to formulate solutions of partial differential equations
* Probability distribution, the probability of a particular value or value range of a variable
  * Cumulative distribution function, in which the probability of being no greater than a particular value is a function of that value
* Frequency distribution, a list of the values recorded in a sample
* Inner distribution, and outer distribution, in coding theory
* Distribution (differential geometry), a subset of the tangent bundle of a manifold
* Distributed parameter system, systems that have an infinite-dimensional state-space
* Distribution of terms, a situation in which all members of a category are accounted for
* Distributivity, a property of binary operations that generalises the distributive law from elementary algebra
* Distribution (number theory)
* Distribution problems, a common type of problems in combinatorics where the goal ...

Cramér–Rao Bound

In estimation theory and statistics, the Cramér–Rao bound (CRB) relates to estimation of a deterministic (fixed, though unknown) parameter. The result is named in honor of Harald Cramér and Calyampudi Radhakrishna Rao, but has also been derived independently by Maurice Fréchet, Georges Darmois, and by Alexander Aitken and Harold Silverstone. It is also known as the Fréchet–Cramér–Rao or Fréchet–Darmois–Cramér–Rao lower bound. It states that the precision of any unbiased estimator is at most the Fisher information; or (equivalently) the reciprocal of the Fisher information is a lower bound on its variance. An unbiased estimator that achieves this bound is said to be (fully) ''efficient''. Such a solution achieves the lowest possible mean squared error among all unbiased methods, and is, therefore, the minimum variance unbiased (MVU) estimator. However, in some cases, no unbiased technique exists which achieves the bound. This may occur either if for any unbiased ...
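Written as a formula for a scalar parameter, and assuming the usual regularity conditions, the bound can be sketched as follows. The symbols $\hat{\theta}$ (an unbiased estimator), $f$ (the density of the full sample $X$) and $I(\theta)$ (the Fisher information of that sample) are notation introduced here for illustration. The second line gives a standard case in which the bound is attained.

```latex
\operatorname{Var}(\hat{\theta}) \;\ge\; \frac{1}{I(\theta)},
\qquad
I(\theta) = \operatorname{E}\!\left[\left(\frac{\partial}{\partial\theta}\log f(X;\theta)\right)^{2}\right].

% Example: X_1, \dots, X_n i.i.d. N(\mu, \sigma^2) with \sigma^2 known.
I(\mu) = \frac{n}{\sigma^{2}},
\qquad
\operatorname{Var}(\bar{X}) = \frac{\sigma^{2}}{n} = \frac{1}{I(\mu)}.
```

In this example the sample mean is unbiased and its variance equals the reciprocal of the Fisher information, so it is an efficient estimator of $\mu$.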

Bias Of An Estimator

In statistics, the bias of an estimator (or bias function) is the difference between this estimator's expected value and the true value of the parameter being estimated. An estimator or decision rule with zero bias is called ''unbiased''. In statistics, "bias" is a property of an estimator. Bias is a distinct concept from consistency: consistent estimators converge in probability to the true value of the parameter, but may be biased or unbiased (see bias versus consistency for more). All else being equal, an unbiased estimator is preferable to a biased estimator, although in practice, biased estimators (with generally small bias) are frequently used. When a biased estimator is used, bounds of the bias are calculated. A biased estimator may be used for various reasons: because an unbiased estimator does not exist without further assumptions about a population; because an estimator is difficult to compute (as in unbiased estimation of standard deviation); because a biased esti ...
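To make the definition concrete, the sketch below computes the bias of two variance estimators exactly, by enumerating every equally likely sample of size 2 drawn with replacement from a small population. The population (the faces of a die), the sample size, and the helper name `expected_variance_estimate` are assumptions chosen for illustration.

```python
from fractions import Fraction
from itertools import product

def expected_variance_estimate(population, n, ddof):
    """Exact expected value of the sample variance with divisor (n - ddof),
    taken over all equally likely samples of size n drawn with replacement."""
    total = Fraction(0)
    count = 0
    for sample in product(population, repeat=n):
        mean = Fraction(sum(sample), n)
        squared_dev = sum((Fraction(x) - mean) ** 2 for x in sample)
        total += squared_dev / (n - ddof)
        count += 1
    return total / count

population = [1, 2, 3, 4, 5, 6]  # illustrative population: one fair die
mu = Fraction(sum(population), len(population))
true_var = sum((x - mu) ** 2 for x in population) / len(population)

# Bias = expected value of the estimator minus the true parameter value.
bias_divisor_n = expected_variance_estimate(population, 2, ddof=0) - true_var
bias_divisor_n_minus_1 = expected_variance_estimate(population, 2, ddof=1) - true_var
print(bias_divisor_n, bias_divisor_n_minus_1)
```

Dividing by n underestimates the variance on average, while dividing by n - 1 gives zero bias in this setting.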

Efficiency (statistics)

In statistics, efficiency is a measure of quality of an estimator, of an experimental design, or of a hypothesis testing procedure. Essentially, a more efficient estimator needs fewer input data or observations than a less efficient one to achieve the Cramér–Rao bound. An ''efficient estimator'' is characterized by having the smallest possible variance, indicating that there is a small deviance between the estimated value and the "true" value in the L2 norm sense. The relative efficiency of two procedures is the ratio of their efficiencies, although often this concept is used where the comparison is made between a given procedure and a notional "best possible" procedure. The efficiencies and the relative efficiency of two procedures theoretically depend on the sample size available for the given procedure, but it is often possible to use the asymptotic relative efficiency (defined as the limit of the relative efficiencies as the sample size grows) as the principal comparison ...

Cohen's D

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of one parameter for a hypothetical population, or to the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event (such as a heart attack) happening. Effect sizes are a complementary tool for statistical hypothesis testing, and play an important role in power analyses to assess the sample size required for new experiments. Effect sizes are fundamental in meta-analyses which aim to provide the combined effect size based on data from multiple studies. The cluster of data-analysis methods concerning effect sizes is referred to as estimat ...
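The excerpt does not spell out a formula for Cohen's d. One common definition, assumed here, is the difference between two group means divided by the pooled sample standard deviation. The function name `cohens_d` and the toy data are illustrative.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)

# Toy data: means 4 and 3, both sample variances equal to 4.
d = cohens_d([2, 4, 6], [1, 3, 5])
print(d)
```

Because d is expressed in units of standard deviation, it can be compared across studies that measure outcomes on different scales.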

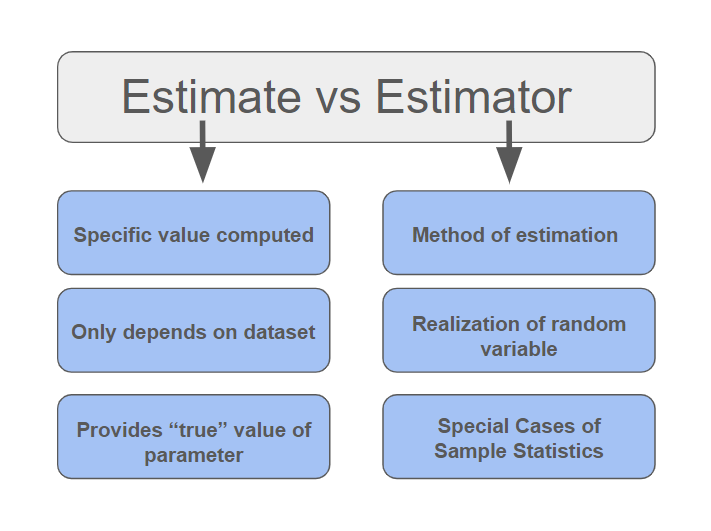

Estimator

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. The point estimators yield single-valued results. This is in contrast to an interval estimator, where the result would be a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector valued or function valued estimators. ''Estimation theory'' is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. Howeve ...
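The contrast between a point estimator and an interval estimator can be sketched with the sample mean. The interval below uses a normal approximation (mean plus or minus z times the standard error), which is an assumption of this example and only one of several possible interval estimators. The function names and data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_point_estimate(data):
    """Point estimator: a single value for the population mean."""
    return mean(data)

def mean_interval_estimate(data, confidence=0.95):
    """Interval estimator: a range of plausible values (normal approximation)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    half_width = z * stdev(data) / sqrt(len(data))
    centre = mean(data)
    return centre - half_width, centre + half_width

data = [2, 4, 6, 8]  # illustrative observations
point = mean_point_estimate(data)
low, high = mean_interval_estimate(data)
print(point, (low, high))
```

Both functions are rules applied to the same data; the first returns one number and the second returns a pair of endpoints.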

Effect Size

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of one parameter for a hypothetical population, or to the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event (such as a heart attack) happening. Effect sizes are a complementary tool for statistical hypothesis testing, and play an important role in power analyses to assess the sample size required for new experiments. Effect sizes are fundamental in meta-analyses which aim to provide the combined effect size based on data from multiple studies. The cluster of data-analysis methods concerning effect sizes is referred to as estima ...

Independence (probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. M ...
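The difference between pairwise and mutual independence can be checked exactly on a small sample space. The sketch below uses two fair coin flips and three events chosen for illustration (first flip heads, second flip heads, both flips agree); the helper names `prob` and `independent` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product

outcomes = list(product("HT", repeat=2))  # four equally likely outcomes

def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))

def independent(*events):
    """True if the probability of the joint event equals the product of the marginals."""
    joint = prob(lambda o: all(e(o) for e in events))
    expected = Fraction(1)
    for e in events:
        expected *= prob(e)
    return joint == expected

first_heads = lambda o: o[0] == "H"
second_heads = lambda o: o[1] == "H"
flips_agree = lambda o: o[0] == o[1]
events = [first_heads, second_heads, flips_agree]

pairwise = all(independent(a, b) for a, b in combinations(events, 2))
# For three events, mutual independence needs every pair and the triple to factor.
mutual = pairwise and independent(*events)
print(pairwise, mutual)
```

Every pair of these events is independent, yet the three together are not: knowing any two of them determines the third.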