
Register Renaming

In computer architecture, register renaming is a technique that abstracts logical processor registers from physical registers. Every logical register has a set of physical registers associated with it. When a machine language instruction refers to a particular logical register, the processor maps this name to one specific physical register on the fly. The physical registers are opaque and cannot be referenced directly, only via the canonical names. This technique is used to eliminate false data dependencies arising from the reuse of registers by successive instructions that do not have any real data dependencies between them. Eliminating these false data dependencies reveals more instruction-level parallelism in an instruction stream, which can be exploited by complementary techniques such as superscalar and out-of-order execution for better performance. ...
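The mapping from logical to physical names can be sketched in a few lines of Python. This is an illustrative model only, not how any particular processor implements renaming: the `rename` function, the three-operand instruction tuples, and the unbounded supply of physical names `p0, p1, ...` are all assumptions made for the example.

```python
from itertools import count


def rename(instructions):
    """Rewrite (dest, src1, src2) tuples so every write gets a fresh register.

    Hypothetical model: physical registers are named p0, p1, ... and the
    supply is unbounded (real hardware recycles a fixed pool).
    """
    fresh = count()   # generator of unused physical register numbers
    mapping = {}      # logical name -> physical name holding its latest value

    def physical(logical):
        # A source operand reads the most recent physical register for that name.
        if logical not in mapping:
            mapping[logical] = f"p{next(fresh)}"
        return mapping[logical]

    renamed = []
    for dest, src1, src2 in instructions:
        s1, s2 = physical(src1), physical(src2)   # look up sources first
        mapping[dest] = f"p{next(fresh)}"         # each write allocates a new register
        renamed.append((mapping[dest], s1, s2))
    return renamed


program = [
    ("r1", "r2", "r3"),  # r1 = r2 op r3
    ("r4", "r1", "r2"),  # r4 = r1 op r2  (true dependency on r1)
    ("r1", "r5", "r6"),  # r1 = r5 op r6  (reuses r1: false dependency)
]
renamed = rename(program)
for instruction in renamed:
    print(instruction)
```

After renaming, the first and third instructions write different physical registers, so the third no longer has to wait for the second to finish reading the old value of `r1`, while the true dependency between the first and second instruction is preserved.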

Minicomputer

A minicomputer, or colloquially mini, is a type of general-purpose computer mostly developed from the mid-1960s, built significantly smaller and sold at a much lower price than mainframe computers. By 21st-century standards, however, a mini is an exceptionally large machine. Minicomputers in the traditional technical sense covered here are small only relative to the generally earlier and much bigger machines. The class formed a distinct group with its own software architectures and operating systems. Minis were designed for control, instrumentation, human interaction, and communication switching, as distinct from calculation and record keeping. Many were sold indirectly to original equipment manufacturers (OEMs) for final end-use application. During the two-decade lifetime of the minicomputer class (1965–1985), almost 100 minicomputer vendor companies formed. Only a half-dozen remained by the mid-1980s. When single-chip CPU microprocessors appeared in the 1970s, the defi ...

Content-addressable Memory

Content-addressable memory (CAM) is a special type of computer memory used in certain very-high-speed searching applications. It is also known as associative memory or associative storage. It compares input search data against a table of stored data and returns the address of matching data. CAM is frequently used in networking devices, where it speeds up forwarding information base and routing table operations. This kind of associative memory is also used in cache memory. In associative cache memory, both address and content are stored side by side. When the address matches, the corresponding content is fetched from cache memory. History: Dudley Allen Buck invented the concept of content-addressable memory in 1955. Buck is credited with the idea of the *recognition unit*. Hardware associative array: Unlike standard computer memory, random-access memory (RAM), in which the user supplies a memory address and the RAM returns the data word stored at that address, a CAM is designed suc ...
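The contrast between address-based and content-based lookup can be illustrated with a small software model. This is only a sketch: the class name `ContentAddressableMemory` and its methods are hypothetical, and the sequential loop stands in for the comparison that the description above attributes to the memory itself.

```python
class ContentAddressableMemory:
    """Toy software model of a CAM (names and interface are illustrative)."""

    def __init__(self, size):
        self.cells = [None] * size   # None marks an empty cell

    def write(self, address, word):
        # RAM-style write: the caller chooses the address.
        self.cells[address] = word

    def read(self, address):
        # RAM-style read: address in, stored word out.
        return self.cells[address]

    def search(self, word):
        # CAM-style lookup: data in, matching addresses out.
        # A software loop is used here purely for illustration.
        return [
            address
            for address, stored in enumerate(self.cells)
            if stored is not None and stored == word
        ]


cam = ContentAddressableMemory(size=8)
cam.write(2, "10.0.0.1")
cam.write(5, "10.0.0.7")
cam.write(6, "10.0.0.1")

matches = cam.search("10.0.0.1")
print(matches)
```

Here `read(2)` behaves like ordinary RAM, while `search("10.0.0.1")` returns every address holding that word, which is the operation a routing-table lookup relies on.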

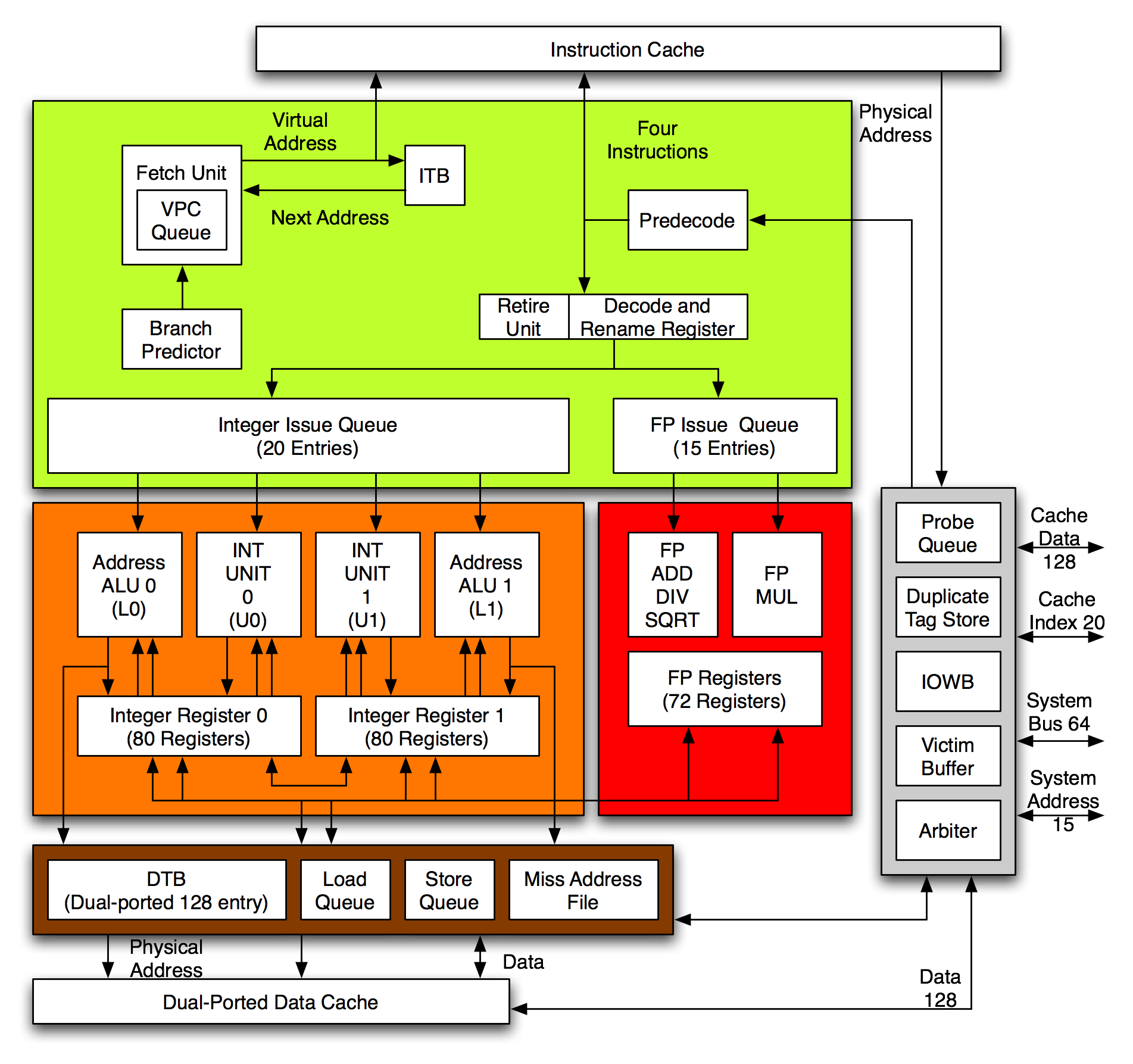

Alpha 21264

The Alpha 21264, also known by its code name, EV6, is a RISC microprocessor developed by Digital Equipment Corporation and launched on 19 October 1998. The 21264 implemented the Alpha instruction set architecture (ISA). Description: The Alpha 21264 is a four-issue superscalar microprocessor with out-of-order execution and speculative execution. It has a peak execution rate of six instructions per cycle and can sustain four instructions per cycle. It has a seven-stage instruction pipeline. Out-of-order execution: At any given stage, the microprocessor could have up to 80 instructions in various stages of execution, surpassing any other contemporary microprocessor. Decoded instructions are held in instruction queues and are issued when their operands are available. The integer queue contained 20 entries and the floating-point queue 15. Each queue could issue as many instructions as there were pipelines. Ebox: The Ebox executes integer, load and store instructions. It has tw ...

DEC Alpha

Alpha (original name Alpha AXP) is a 64-bit reduced instruction set computer (RISC) instruction set architecture (ISA) developed by Digital Equipment Corporation (DEC). Alpha was designed to replace 32-bit VAX complex instruction set computers (CISC) and to be a highly competitive RISC processor for Unix workstations and similar markets. Alpha was implemented in a series of microprocessors originally developed and fabricated by DEC. These microprocessors were most prominently used in a variety of DEC workstations and servers, which eventually formed the basis for almost all of their mid-to-upper-scale lineup. Several third-party vendors also produced Alpha systems, including PC form factor motherboards. Operating systems that supported Alpha included OpenVMS (formerly named OpenVMS AXP), Tru64 UNIX (formerly named DEC OSF/1 AXP and Digital UNIX), Windows NT (discontinued after NT 4.0 and the prerelease Windows 2000 RC2), Linux (Debian, SUSE, Gentoo and Red Hat), BSD UNIX (NetBS ...

Instruction Set Architecture

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an *implementation* of that ISA. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as memory consistency, addressing modes, and virtual memory), and the input/output model of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but t ...

Self-modifying Code

In computer science, self-modifying code (SMC or SMoC) is code that alters its own instructions while it is executing, usually to reduce the instruction path length and improve performance, or simply to reduce otherwise repetitively similar code, thus simplifying maintenance. The term is usually applied only to code where the self-modification is intentional, not to situations where code accidentally modifies itself due to an error such as a buffer overflow. Self-modifying code can involve overwriting existing instructions or generating new code at run time and transferring control to that code. Self-modification can be used as an alternative to the method of "flag setting" and conditional program branching, primarily to reduce the number of times a condition needs to be tested. The method is frequently used for conditionally invoking test/deb ...

Loop Unrolling

Loop unrolling, also known as loop unwinding, is a loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, an approach known as a space–time tradeoff. The transformation can be undertaken manually by the programmer or by an optimizing compiler. On modern processors, loop unrolling is often counterproductive, as the increased code size can cause more cache misses; *cf.* Duff's device. The goal of loop unwinding is to increase a program's speed by reducing or eliminating instructions that control the loop, such as pointer arithmetic and "end of loop" tests on each iteration; reducing branch penalties; and hiding latencies, including the delay in reading data from memory. To eliminate this computational overhead, loops can be rewritten as a repeated sequence of similar independent statements. Loop unrolling is also part of certain formal verification techniques, in particular bounded model chec ...
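A manual unrolling by a factor of four might look like the following sketch. The function names are made up for this example, and Python is used only to show the shape of the transformation; any speed benefit depends on the language, compiler, and hardware, and none is claimed here.

```python
def sum_rolled(values):
    """Baseline loop: one addition and one end-of-loop test per element."""
    total = 0
    i = 0
    while i < len(values):
        total += values[i]
        i += 1
    return total


def sum_unrolled_by_4(values):
    """Same computation with the loop body repeated four times per iteration."""
    total = 0
    n = len(values)
    limit = n - n % 4          # largest multiple of 4 not exceeding n
    i = 0
    while i < limit:           # one loop test per four elements
        total += values[i]
        total += values[i + 1]
        total += values[i + 2]
        total += values[i + 3]
        i += 4
    while i < n:               # remainder loop for the last n % 4 elements
        total += values[i]
        i += 1
    return total


data = list(range(10))
print(sum_rolled(data), sum_unrolled_by_4(data))
```

The unrolled version performs the loop-control work once per four elements and needs a short remainder loop for lengths that are not a multiple of four, which is where the extra code size comes from.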

Transmeta Crusoe

The Transmeta Crusoe is a family of x86-compatible microprocessors developed by Transmeta and introduced in 2000. Instead of the instruction set architecture being implemented in hardware, or translated by specialized hardware, the Crusoe runs a software abstraction layer, or a virtual machine, known as the Code Morphing Software (CMS). The CMS translates machine code instructions received from programs into native instructions for the microprocessor. In this way, the Crusoe can emulate other instruction set architectures (ISAs). This is used to allow the microprocessors to emulate the Intel x86 instruction set. Design: The Crusoe is notable for its method of achieving x86 compatibility. ...

Code Generation (compiler)

In computing, code generation is part of the process chain of a compiler, in which an intermediate representation of source code is converted into a form (e.g., machine code) that can be readily executed by the target system. Sophisticated compilers typically perform multiple passes over various intermediate forms. This multi-stage process is used because many algorithms for code optimization are easier to apply one at a time, or because the input to one optimization relies on the completed processing performed by another optimization. This organization also facilitates the creation of a single compiler that can target multiple architectures, as only the last of the code generation stages (the *backend*) needs to change from target to target. (For more information on compiler design, see Compiler.) The input to the code generator typically consists of a parse tree or an abstract syntax tree. The tree is converted into a linear sequence of instructions, usually in ...
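The step from tree to linear instruction sequence can be sketched for arithmetic expressions. Everything specific here is an assumption for illustration: the tuple-based tree shape, the stack-machine opcodes `PUSH`, `ADD`, `SUB`, and `MUL`, and the tiny interpreter `run` used to check the output are not taken from any real compiler.

```python
OPCODES = {"+": "ADD", "-": "SUB", "*": "MUL"}   # hypothetical target opcodes


def generate(node):
    """Convert an expression tree into a linear list of stack-machine instructions.

    A node is either a number (a leaf) or a tuple (operator, left, right).
    """
    if isinstance(node, (int, float)):
        return [("PUSH", node)]
    operator, left, right = node
    # Post-order walk: operands first, then the operation that consumes them.
    return generate(left) + generate(right) + [(OPCODES[operator],)]


def run(code):
    """Minimal interpreter for the hypothetical instruction set above."""
    stack = []
    for instruction in code:
        if instruction[0] == "PUSH":
            stack.append(instruction[1])
            continue
        right = stack.pop()
        left = stack.pop()
        if instruction[0] == "ADD":
            stack.append(left + right)
        elif instruction[0] == "SUB":
            stack.append(left - right)
        else:  # "MUL"
            stack.append(left * right)
    return stack.pop()


tree = ("+", 1, ("*", 2, 3))        # represents 1 + 2 * 3
code = generate(tree)
for instruction in code:
    print(instruction)
print(run(code))
```

Because only `generate` and `OPCODES` know about the target, swapping in a different instruction set would leave the tree-building stages untouched, which mirrors the point about the backend being the only part that changes per target.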

Register Allocation

In compiler optimization, register allocation is the process of assigning local automatic variables and expression results to a limited number of processor registers. Register allocation can happen over a basic block (*local register allocation*), over a whole function/procedure (*global register allocation*), or across function boundaries traversed via the call graph (*interprocedural register allocation*). When done per function/procedure, the calling convention may require insertion of save/restore around each call site.

Different number of general-purpose registers in the most common architectures:

| Architecture | 32 bit | 64 bit |
| --- | --- | --- |
| ARM | 15 | 31 |
| Intel x86 | 8 | 16 |
| MIPS | 32 | 32 |
| POWER/PowerPC | 32 | 32 |

...
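One simple way to picture the assignment problem is a greedy pass over live intervals, loosely in the style of linear scan. The text above does not name a specific algorithm, so this is a swapped-in, simplified illustration: the `allocate` function, the inclusive `(start, end)` interval format, and the policy of spilling the newest variable when no register is free are all assumptions.

```python
def allocate(intervals, num_registers):
    """Assign each variable a register index, or "spill" if none is free.

    intervals: dict mapping variable name -> (start, end), both inclusive,
               giving the range of program points where the variable is live.
    Simplification: when registers run out, the incoming variable is spilled;
    production allocators choose spill candidates more carefully.
    """
    assignment = {}
    active = []                          # (end, variable) pairs holding a register
    free = list(range(num_registers))    # register indices not currently in use

    for variable, (start, end) in sorted(intervals.items(), key=lambda kv: kv[1][0]):
        # Release registers whose variables are dead before this one starts.
        for old_end, old_variable in list(active):
            if old_end < start:
                active.remove((old_end, old_variable))
                free.append(assignment[old_variable])
        if free:
            assignment[variable] = free.pop(0)
            active.append((end, variable))
        else:
            assignment[variable] = "spill"   # would live in memory instead
    return assignment


live = {"a": (0, 3), "b": (1, 2), "c": (2, 5), "d": (4, 6)}
result = allocate(live, num_registers=2)
print(result)
```

With two registers, `a` and `b` occupy both while `c` becomes live, so `c` is spilled; by the time `d` starts, both earlier intervals have ended and a register is reused.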

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor, or just processor, is the primary processor in a given computer. Its electronic circuitry executes instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic and logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the fetching (from memory), decoding and ...