Reasoning Language Model

Reasoning language models are artificial intelligence systems that combine natural language processing with structured reasoning capabilities. These models are usually constructed by prompting, supervised finetuning (SFT), and reinforcement learning (RL), starting from pretrained language models.

Prompting: A language model is a generative model of a training dataset of texts. Prompting means constructing a text prompt such that, conditional on the prompt, the language model generates a solution to the task. Prompting can be applied to a pretrained model ("base model") or to a base model that has undergone SFT, RL, or both.

Chain of thought: Chain-of-thought (CoT) prompting asks the model to answer a question by first generating a "chain of thought", i.e. steps of reasoning that mimic a train of thought. It was published in 2022 by the Brain team of Google, using the PaLM-540B model. In CoT prompting, the prompt consists of the task input followed by "Let's think step by step", and the mod ...
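As a rough illustration of the prompt form described above, the following Python sketch appends the trigger phrase to a task input. The function name `build_cot_prompt`, the constant `COT_TRIGGER`, and the sample question are hypothetical, and no model is called; the sketch only shows how such a prompt string might be assembled.

```python
# Trigger phrase quoted in the text; everything else here is illustrative.
COT_TRIGGER = "Let's think step by step"


def build_cot_prompt(task_input: str) -> str:
    """Return the task input followed by the chain-of-thought trigger phrase."""
    return f"{task_input.strip()} {COT_TRIGGER}"


# Hypothetical example question, used only to show the resulting prompt.
prompt = build_cot_prompt("A farmer has 3 pens with 4 sheep each. How many sheep are there?")
print(prompt)
```

The resulting string would then be passed to a language model, which is expected to continue it with intermediate reasoning before stating an answer.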

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The Oxford English Dictionary of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Cross-entropy

In information theory, the cross-entropy between two probability distributions $p$ and $q$ over the same underlying set of events measures the average number of bits needed to identify an event drawn from the set if the coding scheme used for the set is optimized for an estimated probability distribution $q$, rather than the true distribution $p$.

Definition: The cross-entropy of the distribution $q$ relative to a distribution $p$ over a given set is defined as

$$H(p, q) = -\operatorname{E}_p[\log q],$$

where $\operatorname{E}_p[\cdot]$ is the expected value operator with respect to the distribution $p$. The definition may also be formulated using the Kullback–Leibler divergence $D_{\mathrm{KL}}(p \parallel q)$, the divergence of $p$ from $q$ (also known as the relative entropy of $p$ with respect to $q$):

$$H(p, q) = H(p) + D_{\mathrm{KL}}(p \parallel q),$$

where $H(p)$ is the entropy of $p$. For discrete probability distributions $p$ and $q$ with the same support $\mathcal{X}$ this means

$$H(p, q) = -\sum_{x \in \mathcal{X}} p(x) \log q(x).$$

The situation for continuous distributions is analogous. We ...
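The relationship between cross-entropy, entropy, and Kullback–Leibler divergence can be checked numerically for discrete distributions. The sketch below is a minimal illustration, assuming base-2 logarithms (so results are in bits) and that q is nonzero wherever p is nonzero; the function names and the two example distributions are made up for this purpose.

```python
import math


def entropy(p):
    """H(p) = -sum_x p(x) log2 p(x), skipping zero-probability events."""
    return -sum(px * math.log2(px) for px in p if px > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum_x p(x) log2 q(x); assumes q(x) > 0 wherever p(x) > 0."""
    return -sum(px * math.log2(qx) for px, qx in zip(p, q) if px > 0)


def kl_divergence(p, q):
    """D_KL(p || q) = sum_x p(x) log2(p(x) / q(x))."""
    return sum(px * math.log2(px / qx) for px, qx in zip(p, q) if px > 0)


# Illustrative distributions over three events (not taken from any dataset).
p = [0.5, 0.25, 0.25]
q = [0.25, 0.25, 0.5]

h_pq = cross_entropy(p, q)
decomposed = entropy(p) + kl_divergence(p, q)
print(h_pq, decomposed)  # both are 1.75 bits for these inputs
```

Coding events drawn from p with a code optimized for q costs 1.75 bits on average here, against 1.5 bits for a code optimized for p itself; the 0.25-bit gap is the divergence term.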

Large Language Model

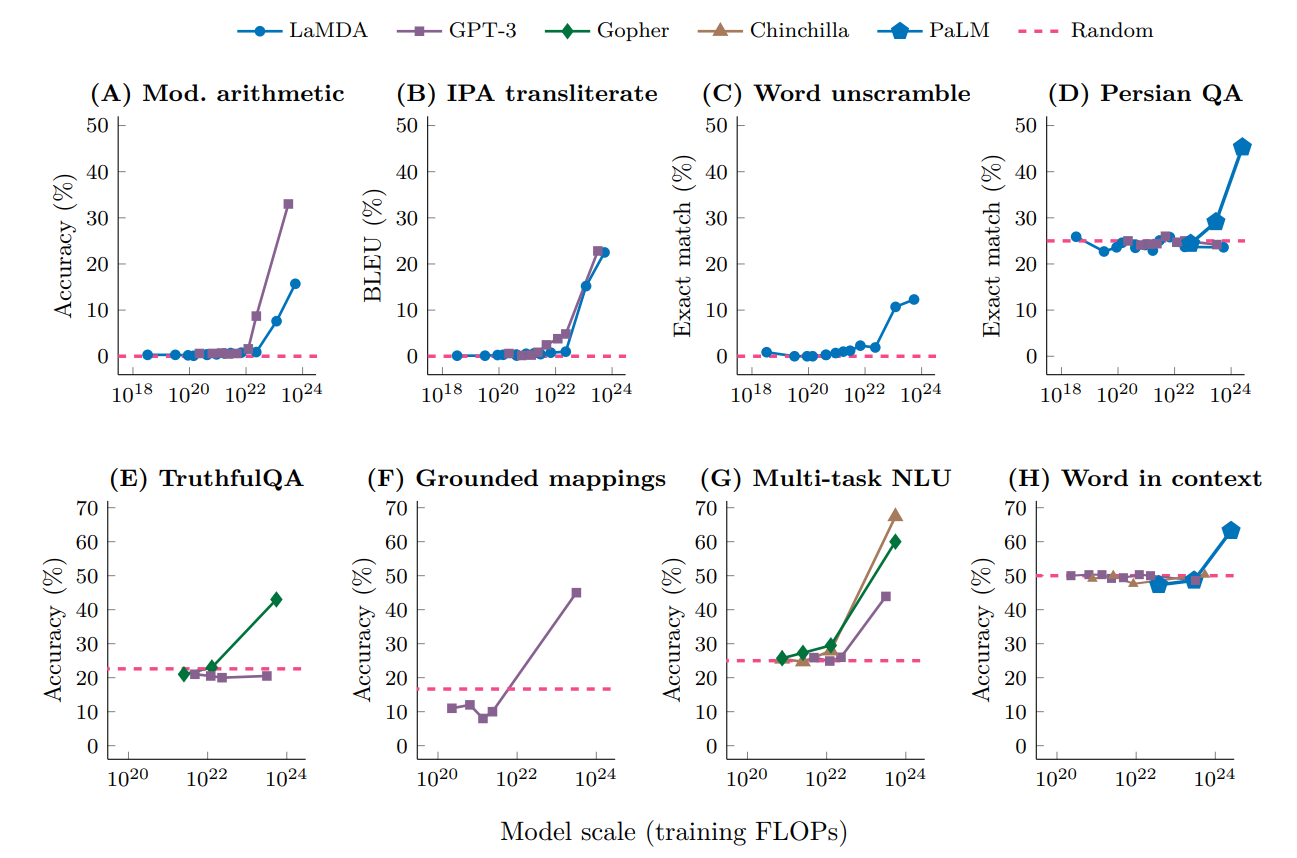

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties: Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seems to be a function of the amount of resources (data, parameter-si ...

Reflection (Artificial Intelligence)

Reflection in artificial intelligence, notably used in large language models and specifically in reasoning language models (RLMs), is the ability of an artificial neural network to provide top-down feedback to its input or previous layers, based on their outputs or subsequent layers. This process involves self-assessment and internal deliberation, aiming to enhance reasoning accuracy, minimize errors (such as hallucinations), and increase interpretability. Reflection is a form of "test-time compute", where additional computational resources are used during inference.

Introduction: Traditional neural networks process inputs in a feedforward manner, generating outputs in a single pass. However, their limitations in handling complex tasks, and especially compositional ones, have led to the developmen ...

Automated Reasoning

In computer science, in particular in knowledge representation and reasoning and metalogic, the area of automated reasoning is dedicated to understanding different aspects of reasoning. The study of automated reasoning helps produce computer programs that allow computers to reason completely, or nearly completely, automatically. Although automated reasoning is considered a sub-field of artificial intelligence, it also has connections with theoretical computer science and philosophy. The most developed subareas of automated reasoning are automated theorem proving (and the less automated but more pragmatic subfield of interactive theorem proving) and automated proof checking (viewed as guaranteed correct reasoning under fixed assumptions). Extensive work has also been done in reasoning by analogy using induction and abduction. Other important topics include reasoning under uncertainty and non-monotonic reasoning. An important part of the uncertainty field is that of argumentati ...

MMLU

In artificial intelligence, Measuring Massive Multitask Language Understanding (MMLU) is a benchmark for evaluating the capabilities of large language models.

Benchmark: It consists of about 16,000 multiple-choice questions spanning 57 academic subjects including mathematics, philosophy, law, and medicine. It is one of the most commonly used benchmarks for comparing the capabilities of large language models, with over 100 million downloads as of July 2024. The MMLU was released by Dan Hendrycks and a team of researchers in 2020 and was designed to be more challenging than then-existing benchmarks such as General Language Understanding Evaluation (GLUE), on which new language models were achieving better-than-human accuracy. At the time of the MMLU's release, most existing language models performed around the level of random chance (25%), with the best-performing GPT-3 model achieving 43.9% accuracy. The developers of the MMLU estimate that human domain-experts achieve around 89.8% ac ...

Primary School

A primary school (in Ireland, the United Kingdom, Australia, Trinidad and Tobago, Jamaica, and South Africa), junior school (in Australia), elementary school or grade school (in North America and the Philippines) is a school for primary education of children who are four to eleven years of age. Primary schooling follows pre-school and precedes secondary schooling. The International Standard Classification of Education considers primary education as a single phase where programmes are typically designed to provide fundamental skills in reading, writing, and mathematics and to establish a solid foundation for learning. This is ISCED Level 1: Primary education or first stage of basic education.

Unit Testing

In computer programming, unit testing is a software testing method by which individual units of source code (sets of one or more computer program modules together with associated control data, usage procedures, and operating procedures) are tested to determine whether they are fit for use.

History: Before unit testing, capture and replay testing tools were the norm. In 1997, Kent Beck and Erich Gamma developed and released JUnit, a unit test framework that became popular with Java developers. Google embraced automated testing around 2005–2006.

Description: Unit tests are typically automated tests written and run by software developers to ensure that a section of an application (known as the "unit") meets its design and behaves as intended. In procedural programming, a unit could be an entire module, but it is more commonly an individual function or procedure. In object-oriented programming, a unit is often an entire interface, such as a class, or an individu ...
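To make the idea of a "unit" concrete, here is a minimal sketch using Python's standard `unittest` module, where the unit is a single function. The function `clamp`, the test class `ClampTest`, and the helper `run_suite` are hypothetical names chosen for the example.

```python
import unittest


def clamp(value, low, high):
    """Unit under test: restrict value to the closed interval [low, high]."""
    return max(low, min(value, high))


class ClampTest(unittest.TestCase):
    """Each method checks one expected behaviour of the unit in isolation."""

    def test_value_inside_range_is_unchanged(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_value_below_range_is_raised_to_low(self):
        self.assertEqual(clamp(-3, 0, 10), 0)

    def test_value_above_range_is_lowered_to_high(self):
        self.assertEqual(clamp(42, 0, 10), 10)


def run_suite():
    """Load and run the tests programmatically, returning the TestResult."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` executes the three tests and returns a result object whose `wasSuccessful()` method reports whether all of them passed; when the code is saved as a module, the tests can also be run from the command line with `python -m unittest`.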

Competitive Programming

Competitive programming is a mind sport usually held over the Internet or a local network, involving participants trying to program according to provided specifications. Contestants are referred to as sport programmers. Competitive programming is recognized and supported by several multinational software and Internet companies, such as Google and Facebook. A programming competition generally involves the host presenting a set of logical or mathematical problems, also known as puzzles, to the contestants (who can vary in number from tens or even hundreds to several thousands), and contestants are required to write computer programs capable of solving each problem. Judging is based mostly upon the number of problems solved and the time spent writing successful solutions, but may also include other factors (quality of output produced, execution time, memory usage, program size, etc.).

History: One of the oldest contests known is the International Collegiate Programming Contest ...

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. Given an initial text as prompt, it will produce text that continues the prompt. The architecture is a standard transformer network (with a few engineering tweaks) of then-unprecedented size: a 2048-token context and 175 billion parameters (requiring 800 GB of storage). The training method is "generative pretraining", meaning that it is trained to predict what the next token is. The model demonstrated strong few-shot learning on many text-based tasks. It is the third-generation language prediction model in the GPT-n series (and the successor to GPT-2) created by OpenAI, a San Francisco-based artificial intelligence research laboratory. GPT-3, which was introduced in May 2020 and was in beta testing as of July 2020, is part of a trend in natural language processing (NLP) systems of pre-trained language representations. The quality of t ...

Tree Traversal

In computer science, tree traversal (also known as tree search and walking the tree) is a form of graph traversal and refers to the process of visiting (e.g. retrieving, updating, or deleting) each node in a tree data structure exactly once. Such traversals are classified by the order in which the nodes are visited. The following algorithms are described for a binary tree, but they may be generalized to other trees as well.

Types: Unlike linked lists, one-dimensional arrays and other linear data structures, which are canonically traversed in linear order, trees may be traversed in multiple ways. They may be traversed in depth-first or breadth-first order. There are three common ways to traverse them in depth-first order: in-order, pre-order and post-order. Beyond these basic traversals, various more complex or hybrid schemes are possible, such as depth-limited searches like iterative deepening depth-first search. The latter, as well as breadth-first search, can also be ...
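The three depth-first orders and the breadth-first order named above can be sketched for a binary tree as follows. This is an illustrative Python version only; the `Node` class, the function names, and the five-node example tree are assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def pre_order(node):
    """Visit the node first, then its left subtree, then its right subtree."""
    if node is None:
        return []
    return [node.value] + pre_order(node.left) + pre_order(node.right)


def in_order(node):
    """Visit the left subtree, then the node, then the right subtree."""
    if node is None:
        return []
    return in_order(node.left) + [node.value] + in_order(node.right)


def post_order(node):
    """Visit both subtrees (left, then right) before the node itself."""
    if node is None:
        return []
    return post_order(node.left) + post_order(node.right) + [node.value]


def breadth_first(root):
    """Visit nodes level by level, using a FIFO queue of pending nodes."""
    order = []
    queue = deque([root] if root is not None else [])
    while queue:
        node = queue.popleft()
        order.append(node.value)
        for child in (node.left, node.right):
            if child is not None:
                queue.append(child)
    return order


# Example tree: 1 has children 2 and 3; 2 has children 4 and 5.
tree = Node(1, Node(2, Node(4), Node(5)), Node(3))
print(pre_order(tree))      # [1, 2, 4, 5, 3]
print(in_order(tree))       # [4, 2, 5, 1, 3]
print(post_order(tree))     # [4, 5, 2, 3, 1]
print(breadth_first(tree))  # [1, 2, 3, 4, 5]
```

Each function visits every node exactly once; only the relative position of the node's own visit among its subtrees (or, for breadth-first, the queue discipline) differs.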

Neural Scaling Law

In machine learning, a neural scaling law is an empirical scaling law that describes how neural network performance changes as key factors are scaled up or down. These factors typically include the number of parameters, training dataset size, and training cost.

Introduction: In general, a neural model can be characterized by four variables: size of the model, size of the training dataset, cost of training, and error rate after training. Each of these four variables can be precisely defined as a real number, and they are empirically found to be related by simple statistical laws, called "scaling laws". These are usually written as N, D, C, L (number of parameters, dataset size, computing cost, loss).

Size of the model: In most cases, the size of the model is simply the number of parameters. However, one complication arises with the use of sparse models, such as mixture-of-experts models. In sparse models, during every inference, only a fraction of the parameters are used. In compariso ...
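The excerpt does not state the functional form of these laws. As a hedged illustration, the sketch below assumes the commonly used power-law form L = a * N^(-alpha) relating loss L to parameter count N, and recovers a and alpha by a least-squares line fit in log-log space. The function name `fit_power_law` and all numbers are synthetic, chosen only to show the procedure; they are not measurements from any model.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = a * x**(-alpha) by ordinary least squares on (log x, log y)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    slope = sum((lx - mean_x) * (ly - mean_y) for lx, ly in zip(log_x, log_y)) / sum(
        (lx - mean_x) ** 2 for lx in log_x
    )
    intercept = mean_y - slope * mean_x
    # In log space the model is log y = log a - alpha * log x.
    return math.exp(intercept), -slope


# Synthetic (N, L) pairs generated from an assumed law with a = 5.0, alpha = 0.1.
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.1 for n in sizes]

a, alpha = fit_power_law(sizes, losses)
print(a, alpha)  # approximately 5.0 and 0.1 for this noise-free data
```

With real measurements the points would scatter around the fitted line, and the same procedure could be applied to D or C in place of N under the same power-law assumption.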