|

Privatization (computer Programming)

Privatization is a technique used in shared-memory programming to enable parallelism, by removing dependencies that occur across different threads in a parallel program. Dependencies between threads arise from two or more threads reading or writing a variable at the same time. Privatization gives each thread a private copy, so it can read and write it independently and thus, simultaneously. Each parallel algorithm specifies whether a variable is shared or private. Many errors in implementation can arise if the variable is declared to be shared but the algorithm requires it to be private, or vice versa. Traditionally, parallelizing compilers could apply privatization to scalar elements only. To exploit parallelism that occurs across iterations within a parallel program ( loop-level parallelism), the need grew for compilers that can also perform array variable privatization. Most of today's compilers can performing array privatization with more features and functions to enhance t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Synchronization (computer Science)

In computer science, synchronization is the task of coordinating multiple processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action. Motivation The need for synchronization does not arise merely in multi-processor systems but for any kind of concurrent processes; even in single processor systems. Mentioned below are some of the main needs for synchronization: '' Forks and Joins:'' When a job arrives at a fork point, it is split into N sub-jobs which are then serviced by n tasks. After being serviced, each sub-job waits until all other sub-jobs are done processing. Then, they are joined again and leave the system. Thus, parallel programming requires synchronization as all the parallel processes wait for several other processes to occur. '' Producer-Consumer:'' In a producer-consumer relationship, the consumer process is dependent on the producer process until the necessary data has been produced. ''Exclusiv ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computing

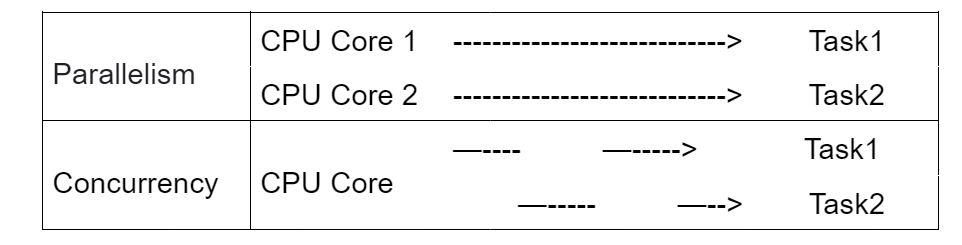

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Morgan Kaufmann

Morgan Kaufmann Publishers is a Burlington, Massachusetts (San Francisco, California until 2008) based publisher specializing in computer science and engineering content. Since 1984, Morgan Kaufmann has been publishing contents on information technology, computer architecture, data management, computer networking, computer systems, human computer interaction, computer graphics, multimedia information and systems, artificial intelligence, computer security, and software engineering. Morgan Kaufmann's audience includes the research and development communities, information technology (IS/IT) managers, and students in professional degree programs. The company was founded in 1984 by publishers Michael B. Morgan and William Kaufmann and computer scientist Nils Nilsson. It was held privately until 1998, when it was acquired by Harcourt General and became an imprint of the Academic Press, a subsidiary of Harcourt. Harcourt was acquired by Reed Elsevier in 2001; Morgan Kaufmann is now ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenMP

OpenMP is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++, and Fortran, on many platforms, instruction-set architectures and operating systems, including Solaris, AIX, FreeBSD, HP-UX, Linux, macOS, Windows and OpenHarmony. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior. OpenMP is managed by the nonprofit technology consortium ''OpenMP Architecture Review Board'' (or ''OpenMP ARB''), jointly defined by a broad swath of leading computer hardware and software vendors, including Arm, AMD, IBM, Intel, Cray, HP, Fujitsu, Nvidia, NEC, Red Hat, Texas Instruments, and Oracle Corporation. OpenMP uses a portable, scalable model that gives programmers a simple and flexible interface for developing parallel applications for platforms ranging from the standard desktop computer to the supercomputer. An application built with ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Commutativity

In mathematics, a binary operation is commutative if changing the order of the operands does not change the result. It is a fundamental property of many binary operations, and many mathematical proofs depend on it. Perhaps most familiar as a property of arithmetic, e.g. or , the property can also be used in more advanced settings. The name is needed because there are operations, such as division and subtraction, that do not have it (for example, ); such operations are ''not'' commutative, and so are referred to as noncommutative operations. The idea that simple operations, such as the multiplication and addition of numbers, are commutative was for many centuries implicitly assumed. Thus, this property was not named until the 19th century, when new algebraic structures started to be studied. Definition A binary operation * on a set ''S'' is ''commutative'' if x * y = y * x for all x,y \in S. An operation that is not commutative is said to be ''noncommutative''. One says ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loop Unrolling

Loop unrolling, also known as loop unwinding, is a loop transformation technique that attempts to optimize a program's execution speed at the expense of its binary size, which is an approach known as space–time tradeoff. The transformation can be undertaken manually by the programmer or by an optimizing compiler. On modern processors, loop unrolling is often counterproductive, as the increased code size can cause more cache misses; ''cf.'' Duff's device. The goal of loop unwinding is to increase a program's speed by reducing or eliminating instructions that control the loop, such as pointer arithmetic and "end of loop" tests on each iteration; reducing branch penalties; as well as hiding latencies, including the delay in reading data from memory. To eliminate this computational overhead, loops can be re-written as a repeated sequence of similar independent statements. Loop unrolling is also part of certain formal verification techniques, in particular bounded model chec ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Symbolic Computation

In mathematics and computer science, computer algebra, also called symbolic computation or algebraic computation, is a scientific area that refers to the study and development of algorithms and software for manipulating mathematical expressions and other mathematical objects. Although computer algebra could be considered a subfield of scientific computing, they are generally considered as distinct fields because scientific computing is usually based on numerical computation with approximate floating point numbers, while symbolic computation emphasizes ''exact'' computation with expressions containing variables that have no given value and are manipulated as symbols. Software applications that perform symbolic calculations are called '' computer algebra systems'', with the term ''system'' alluding to the complexity of the main applications that include, at least, a method to represent mathematical data in a computer, a user programming language (usually different from the la ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Mutual Exclusion

In computer science, mutual exclusion is a property of concurrency control, which is instituted for the purpose of preventing race conditions. It is the requirement that one thread of execution never enters a critical section while a concurrent thread of execution is already accessing said critical section, which refers to an interval of time during which a thread of execution accesses a shared resource or shared memory. The shared resource is a data object, which two or more concurrent threads are trying to modify (where two concurrent read operations are permitted but, no two concurrent write operations or one read and one write are permitted, since it leads to data inconsistency). Mutual exclusion algorithms ensure that if a process is already performing write operation on a data object ritical sectionno other process/thread is allowed to access/modify the same object until the first process has finished writing upon the data object ritical sectionand released the obj ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reduction (complexity)

In computability theory and computational complexity theory, a reduction is an algorithm for transforming one problem into another problem. A sufficiently efficient reduction from one problem to another may be used to show that the second problem is at least as difficult as the first. Intuitively, problem ''A'' is reducible to problem ''B'', if an algorithm for solving problem ''B'' efficiently (if it exists) could also be used as a subroutine to solve problem ''A'' efficiently. When this is true, solving ''A'' cannot be harder than solving ''B''. "Harder" means having a higher estimate of the required computational resources in a given context (e.g., higher time complexity, greater memory requirement, expensive need for extra hardware processor cores for a parallel solution compared to a single-threaded solution, etc.). The existence of a reduction from ''A'' to ''B'' can be written in the shorthand notation ''A'' ≤m ''B'', usually with a subscript on the ≤ to indicate the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Automatic Parallelization

Automatic parallelization, also auto parallelization, or autoparallelization refers to converting sequential code into multi-threaded and/or vectorized code in order to use multiple processors simultaneously in a shared-memory multiprocessor ( SMP) machine. Fully automatic parallelization of sequential programs is a challenge because it requires complex program analysis and the best approach may depend upon parameter values that are not known at compilation time. The programming control structures on which autoparallelization places the most focus are loops, because, in general, most of the execution time of a program takes place inside some form of loop. There are two main approaches to parallelization of loops: pipelined multi-threading and cyclic multi-threading. For example, consider a loop that on each iteration applies a hundred operations, and runs for a thousand iterations. This can be thought of as a grid of 100 columns by 1000 rows, a total of 100,000 operations. Cyc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |