
Princeton Sound Lab

The Princeton Sound Lab is a research laboratory in the Department of Computer Science at Princeton University, in collaboration with the Department of Music. The Sound Lab conducts research in a variety of areas in computer music, including physical modeling, audio analysis, audio synthesis, programming languages for audio and multimedia, interactive controller design, psychoacoustics, and real-time systems for composition and performance.

Princeton University

Princeton University is a private research university in Princeton, New Jersey. Founded in 1746 in Elizabeth as the College of New Jersey, Princeton is the fourth-oldest institution of higher education in the United States and one of the nine colonial colleges chartered before the American Revolution. It is one of the highest-ranked universities in the world. The institution moved to Newark in 1747, and then to the current site nine years later. It officially became a university in 1896 and was subsequently renamed Princeton University. It is a member of the Ivy League. The university is governed by the Trustees of Princeton University and has an endowment of $37.7 billion, the largest endowment per student in the United States. Princeton provides undergraduate and graduate instruction in the humanities, social sciences, natural sciences, and engineering to approximately 8,500 students on its main campus. It offers postgraduate degrees through the Princeton Schoo ...

Computer Music

Computer music is the application of computing technology in music composition, either to help human composers create new music or to have computers create music independently, as with algorithmic composition programs. It includes the theory and application of new and existing computer software technologies and basic aspects of music, such as sound synthesis, digital signal processing, sound design, sonic diffusion, acoustics, electrical engineering, and psychoacoustics. The field of computer music traces its roots to the origins of electronic music and to the first experiments and innovations with electronic instruments at the turn of the 20th century.

Much of the work on computer music has drawn on the relationship between music and mathematics, a relationship noted since the Ancient Greeks described the "harmony of the spheres". Musical melodies were first generated by the computer originally named the CSIR Mark 1 (later renamed CSIRAC) in Au ...
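As a minimal illustration of algorithmic composition and of the link between music and mathematics, the following Python sketch generates a melody as a random walk over the C major scale and converts MIDI note numbers to frequencies using twelve-tone equal temperament with A4 (MIDI note 69) tuned to 440 Hz. The function names, the choice of scale, and the step sizes are hypothetical choices made for illustration, not a method taken from any particular composition program.

```python
import random

# Semitone offsets of the C major scale within one octave.
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]

def midi_to_hz(note):
    """Twelve-tone equal temperament: each semitone multiplies frequency by 2**(1/12)."""
    return 440.0 * 2.0 ** ((note - 69) / 12)

def random_walk_melody(length, start_degree=0, base_note=60, seed=None):
    """Return `length` MIDI note numbers produced by a random walk over scale degrees.

    Degree 0 is `base_note` (60 = middle C); negative degrees go below it.
    """
    rng = random.Random(seed)
    degree = start_degree
    notes = []
    for _ in range(length):
        # Split the scale degree into an octave shift and a position in the scale.
        octave, step = divmod(degree, len(C_MAJOR))
        notes.append(base_note + 12 * octave + C_MAJOR[step])
        # Move by one or two scale steps up or down (an illustrative rule).
        degree += rng.choice([-2, -1, 1, 2])
    return notes
```

For example, `[midi_to_hz(n) for n in random_walk_melody(8, seed=1)]` yields eight frequencies that could drive an oscillator. Restricting moves to small scale steps keeps the line stepwise and in key; richer generators replace the uniform choice with learned or rule-based transition probabilities.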

Physical Modeling

Physical modelling synthesis refers to sound synthesis methods in which the waveform of the sound to be generated is computed using a mathematical model: a set of equations and algorithms that simulates a physical source of sound, usually a musical instrument.

Modelling attempts to replicate the laws of physics that govern sound production. A model typically has several parameters: some are constants that describe the physical materials and dimensions of the instrument, while others are time-dependent functions that describe the player's interaction with the instrument, such as plucking a string or covering toneholes. For example, to model the sound of a drum, there would be a mathematical model of how striking the drumhead injects energy into a two-dimensional membrane. Incorporating this, a larger model would simulate the properties of the membrane (mass density, stiffness, etc.), its coupling with the resonance of the cylindrical body of the drum, a ...
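The drum example involves a two-dimensional membrane. A far simpler case that follows the same pattern (an excitation injects energy into a resonating system that gradually loses it) is the Karplus-Strong plucked-string algorithm, often treated as a simple precursor of waveguide-based physical modelling. The Python sketch below makes these assumptions: a delay line whose length sets the pitch stands in for the string, a burst of white noise stands in for the pluck, and averaging adjacent samples acts as a low-pass filter that models energy loss. The `decay` parameter and its default value are illustrative choices.

```python
import random
from collections import deque

def karplus_strong(frequency_hz, duration_s, sample_rate=44100, decay=0.996, seed=0):
    """Plucked-string tone via Karplus-Strong; returns a list of floats in [-1, 1]."""
    # The delay-line length in samples sets the pitch (rounded, so pitch is approximate).
    period = max(2, int(round(sample_rate / frequency_hz)))
    rng = random.Random(seed)
    # The "pluck": fill the string with white noise.
    line = deque(rng.uniform(-1.0, 1.0) for _ in range(period))
    out = []
    for _ in range(int(duration_s * sample_rate)):
        current = line.popleft()
        # Average with the next sample (a simple low-pass filter modelling losses),
        # scale by `decay` for extra damping, and feed the result back into the line.
        line.append(decay * 0.5 * (current + line[0]))
        out.append(current)
    return out
```

Calling `karplus_strong(440.0, 1.0)` returns one second of samples near A4 that start bright and noisy and then settle into a decaying tone. Because the delay length is rounded to a whole number of samples, the pitch is only approximate; fuller physical models add fractional delays and more detailed loss filters.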

Audio Analysis

Audio analysis refers to the extraction of information and meaning from audio signals for analysis, classification, storage, retrieval, synthesis, and similar purposes. The observation media and interpretation methods vary: audio analysis can refer to the human ear and how people interpret an audible sound source, or to the use of technology such as an audio analyzer to evaluate other qualities of a sound source, such as amplitude, distortion, and frequency response. Once an audio source's information has been observed, it can be processed for logical, emotional, descriptive, or otherwise relevant interpretation by the user.

The most prevalent form of audio analysis is derived from the sense of hearing. Hearing, a type of sensory perception that occurs in much of the planet's fauna, makes audio analysis a fundamental process of many living beings. Sounds made by the surrounding environment or by other living beings provide input to the hearin ...
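To make the analyzer measurements mentioned above more concrete, the following Python sketch reports two amplitude measures (peak and RMS level) and a crude frequency estimate (the strongest bin of a discrete Fourier transform). It uses a naive O(n²) DFT for readability; practical tools would use an FFT and a window function. The function name `describe_signal` is hypothetical.

```python
import math

def describe_signal(samples, sample_rate):
    """Return (peak amplitude, RMS level, frequency in Hz of the strongest DFT bin)."""
    n = len(samples)
    peak = max(abs(x) for x in samples)
    rms = math.sqrt(sum(x * x for x in samples) / n)
    # Naive DFT magnitude for bins 1..n/2 (bin 0 is the DC offset, so it is skipped).
    best_bin, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    # Bin k corresponds to k * sample_rate / n hertz.
    return peak, rms, best_bin * sample_rate / n
```

The frequency estimate can only land on multiples of `sample_rate / n` (20 Hz for 400 samples at 8 kHz), so longer analysis windows give finer frequency resolution at the cost of time resolution.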

Audio Synthesis

A synthesizer (also spelled synthesiser) is an electronic musical instrument that generates audio signals. Synthesizers typically create sounds by generating waveforms through methods including subtractive synthesis, additive synthesis and frequency modulation synthesis. These sounds may be altered by components such as filters, which cut or boost frequencies; envelopes, which control articulation, or how notes begin and end; and low-frequency oscillators, which modulate parameters such as pitch, volume, or filter characteristics affecting timbre. Synthesizers are typically played with keyboards or controlled by sequencers, software or other instruments, and may be synchronized to other equipment via MIDI. Synthesizer-like instruments emerged in the United States in the mid-20th century with instruments such as the RCA Mark II, which was controlled with punch cards and used hundreds of vacuum tubes. The Moog synthesizer, developed by Robert Moog and first sold in ...
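As a minimal sketch of frequency modulation synthesis combined with an envelope, the Python function below computes a carrier sine wave whose phase is modulated by a second sine wave, y(t) = sin(2π·f_c·t + I·sin(2π·f_m·t)), where f_c is the carrier frequency, f_m the modulator frequency, and I the modulation index. It then applies a linear attack and release so the note begins and ends smoothly. This is the simple two-oscillator form of FM; the function name, parameter names, and default envelope times are illustrative assumptions, not taken from any particular synthesizer.

```python
import math

def fm_tone(carrier_hz, modulator_hz, index, duration_s, sample_rate=44100,
            attack_s=0.01, release_s=0.1):
    """Two-oscillator FM tone with a linear attack/release envelope."""
    n = int(duration_s * sample_rate)
    attack_n = max(1, int(attack_s * sample_rate))
    release_n = max(1, int(release_s * sample_rate))
    out = []
    for i in range(n):
        t = i / sample_rate
        # The modulator adds index * sin(...) to the carrier's phase; index 0 gives a pure sine.
        sample = math.sin(2 * math.pi * carrier_hz * t
                          + index * math.sin(2 * math.pi * modulator_hz * t))
        # Envelope: ramp up over attack_n samples, hold at 1, ramp down over release_n.
        env = min(1.0, i / attack_n, (n - 1 - i) / release_n)
        out.append(env * sample)
    return out
```

Raising `index` adds sidebands spaced at multiples of `modulator_hz` around the carrier, so a single parameter moves the timbre from a pure tone toward a bright, complex one.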

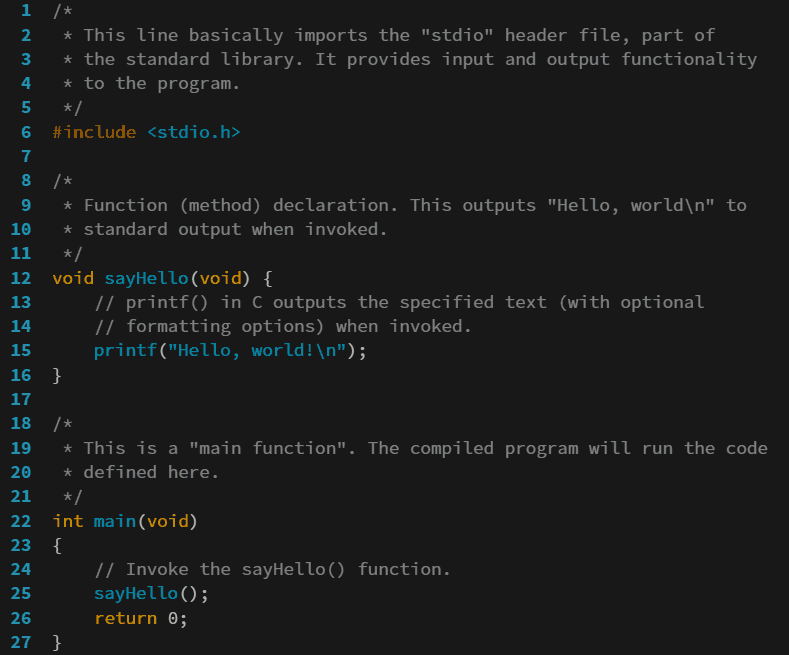

Programming Languages

A programming language is a system of notation for writing computer programs. Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an International Organization for Standardization (ISO) standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being commo ...
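The split between syntax and semantics can be illustrated with a toy language of arithmetic expressions. In this Python sketch, `tokenize` and `parse` deal only with form: they check that the text is well formed and build a tree. `evaluate` then gives that tree its meaning as a number. The grammar and every name here are hypothetical, chosen only for illustration.

```python
import operator
import re
from dataclasses import dataclass
from typing import Union

@dataclass
class Num:
    value: float

@dataclass
class BinOp:
    op: str
    left: "Expr"
    right: "Expr"

Expr = Union[Num, BinOp]

TOKEN = re.compile(r"\s*(\d+(?:\.\d+)?|[-+*/()])")
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def tokenize(src):
    """Syntax, lexical level: split text into numbers, operators, and parentheses."""
    src, pos, tokens = src.rstrip(), 0, []
    while pos < len(src):
        m = TOKEN.match(src, pos)
        if not m:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens

def parse(tokens):
    """Syntax, grammar level: expr := term (('+'|'-') term)*, term := atom (('*'|'/') atom)*."""
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        tok = peek()
        pos += 1
        return tok

    def expr():
        node = term()
        while peek() in ("+", "-"):
            op = take()
            node = BinOp(op, node, term())
        return node

    def term():
        node = atom()
        while peek() in ("*", "/"):
            op = take()
            node = BinOp(op, node, atom())
        return node

    def atom():
        tok = take()
        if tok == "(":
            node = expr()
            if take() != ")":
                raise SyntaxError("expected ')'")
            return node
        # Unary minus is not part of this toy grammar.
        if tok is None or tok in {"+", "-", "*", "/", ")"}:
            raise SyntaxError(f"unexpected token {tok!r}")
        return Num(float(tok))

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected trailing token {peek()!r}")
    return tree

def evaluate(node):
    """Semantics: map each syntax tree to the number it denotes."""
    if isinstance(node, Num):
        return node.value
    return OPS[node.op](evaluate(node.left), evaluate(node.right))
```

Here `parse(tokenize("2 + 3 * (4 - 1)"))` succeeds or fails purely on form, and only `evaluate` decides that the result is 11.0. A different `evaluate` (for example, one that prints the tree) would give the same syntax a different semantics.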

Psychoacoustics

Psychoacoustics is the branch of psychophysics involving the scientific study of sound perception and audiology: how humans perceive various sounds. More specifically, it is the branch of science studying the psychological responses associated with sound (including noise, speech, and music). Psychoacoustics is an interdisciplinary field drawing on many areas, including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.

Hearing is not a purely mechanical phenomenon of wave propagation but also a sensory and perceptual event. When a person hears something, it arrives at the ear as a mechanical sound wave traveling through the air, but within the ear it is transformed into neural action potentials. The outer hair cells (OHC) of a mammalian cochlea give rise to enhanced sensitivity and better frequency resolution of the mechanical response of the cochlear partition. These nerve pulses then trave ...
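One well-known psychoacoustic observation is that perceived pitch does not grow linearly with frequency. The mel scale models this, and a commonly used formula is m = 2595·log10(1 + f/700), which maps 1000 Hz to roughly 1000 mel. The Python sketch below implements that formula, its inverse, and a helper that picks frequencies equally spaced in mel. Several mel formulas are in use, so this variant is an assumption, and the helper name `mel_spaced` is hypothetical.

```python
import math

def hz_to_mel(f_hz):
    """Frequency in Hz to mels, using the 2595 * log10(1 + f/700) variant."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_spaced(f_lo_hz, f_hi_hz, count):
    """Return `count` (>= 2) frequencies in Hz, equally spaced on the mel scale."""
    lo, hi = hz_to_mel(f_lo_hz), hz_to_mel(f_hi_hz)
    step = (hi - lo) / (count - 1)
    return [mel_to_hz(lo + i * step) for i in range(count)]
```

Equal perceptual steps come out increasingly far apart in hertz: in `mel_spaced(100.0, 8000.0, 10)` the gap between neighbouring frequencies grows toward the top of the range, mirroring the ear's finer frequency resolution at low frequencies.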