Lexical Chain

A lexical chain is a sequence of semantically related words in a text, spanning short distances (adjacent words or sentences) or long distances (the entire text). A chain is independent of the grammatical structure of the text; in effect, it is a list of words that captures a portion of the cohesive structure of the text. A lexical chain can provide a context for the resolution of an ambiguous term and enable identification of the concept that the term represents.

* Rome → capital → city → inhabitant
* Wikipedia → resource → web

About

Morris and Hirst introduce the term "lexical chain" as an expansion of "lexical cohesion." A text in which many sentences are semantically connected often produces a certain degree of continuity in its ideas, providing good cohesion among its sentences. The definition used for lexical cohesion states that coherence is a result of cohesion, not the other way around. C ...
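As a rough illustration of how the two example chains (Rome → capital → city → inhabitant and Wikipedia → resource → web) could be recovered from a stream of words, here is a minimal sketch. The `RELATED` table is a hand-written assumption standing in for a real lexical resource, and the greedy strategy (attach each word to the first chain whose last word is related to it) is only one simple possibility, not the method of any particular paper.

```python
# Hypothetical relatedness table; a real system would consult a lexical
# resource such as a thesaurus instead of a hard-coded set of pairs.
RELATED = {
    frozenset(("Rome", "capital")),
    frozenset(("capital", "city")),
    frozenset(("city", "inhabitant")),
    frozenset(("Wikipedia", "resource")),
    frozenset(("resource", "web")),
}


def related(a, b):
    """Return True if the two words are listed as related (order-independent)."""
    return frozenset((a, b)) in RELATED


def build_chains(words):
    """Greedily group words into chains, in order of appearance."""
    chains = []
    for word in words:
        for chain in chains:
            # Extend the first chain whose most recent word is related.
            if related(chain[-1], word):
                chain.append(word)
                break
        else:
            # No existing chain accepts the word, so it starts a new one.
            chains.append([word])
    return chains


# The words of the two chains, interleaved as they might occur in a text.
words = ["Rome", "Wikipedia", "capital", "resource", "city", "web", "inhabitant"]
chains = build_chains(words)
```

Because the chains are built from word relatedness alone, the result does not depend on sentence boundaries or grammar, which matches the description of a chain as independent of grammatical structure.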

Word

A word is a basic element of language that carries an objective or practical meaning, can be used on its own, and is uninterruptible. Although speakers often have an intuitive grasp of what a word is, there is no consensus among linguists on its definition, and numerous attempts to find specific criteria for the concept remain controversial. Different standards have been proposed, depending on the theoretical background and descriptive context; these do not converge on a single definition. Some specific definitions of the term "word" are employed to convey its different meanings at different levels of description, for example on a phonological, grammatical or orthographic basis. Others suggest that the concept is simply a convention used in everyday situations. The concept of "word" is distinguished from that of a morpheme, which is the smallest unit of language that has a meaning, even if it cannot stand on its own. Words are made out of at leas ...

Document Clustering

Document clustering (or text clustering) is the application of cluster analysis to textual documents. It has applications in automatic document organization, topic extraction and fast information retrieval or filtering.

Overview

Document clustering involves the use of descriptors and descriptor extraction. Descriptors are sets of words that describe the contents within the cluster. Document clustering is generally considered to be a centralized process. Examples of document clustering include web document clustering for search users. Applications of document clustering can be divided into two types, online and offline. Online applications are usually constrained by efficiency problems when compared to offline applications. Text clustering may be used for different tasks, such as grouping similar documents (news, tweets, etc.), analyzing customer/employee feedback, and discovering meaningful implicit subjects across all documents. In general, there are two common algori ...

Lexical Resource

In digital lexicography, natural language processing, and digital humanities, a lexical resource is a language resource consisting of data regarding the lexemes of the lexicon of one or more languages, e.g., in the form of a database.

Characteristics

Different standards for the machine-readable edition of lexical resources exist, e.g., Lexical Markup Framework (LMF), an ISO standard for encoding lexical resources, comprising an abstract data model and an XML serialization, and OntoLex-Lemon, an RDF vocabulary for publishing lexical resources as knowledge graphs on the web, e.g., as Linguistic Linked Open Data. Depending on the type of languages that are addressed, a lexical resource may be qualified as monolingual, bilingual or multilingual. For bilingual and multilingual lexical resources, the words may be connected or not connected from one language to another. When connected, the equivalence from one language to another is performed through a bilingual link (for bilingual le ...

Word Embedding

In natural language processing (NLP), word embedding is a term used for the representation of words for text analysis, typically in the form of a real-valued vector that encodes the meaning of the word such that words that are closer in the vector space are expected to be similar in meaning. Word embeddings can be obtained using a set of language modeling and feature learning techniques where words or phrases from the vocabulary are mapped to vectors of real numbers. Methods to generate this mapping include neural networks, dimensionality reduction on the word co-occurrence matrix, probabilistic models, explainable knowledge base methods, and explicit representation in terms of the context in which words appear. Word and phrase embeddings, when used as the underlying input representation, have been shown to boost performance in NLP tasks such as syntactic parsing and sentiment analysis.

Development and history of the approach

In distributional semantics, a quantitative ...
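The idea that words closer in the vector space are expected to be similar in meaning can be made concrete with cosine similarity. The sketch below is only illustrative: the three-dimensional vectors are invented for the example (real embeddings are learned and usually have hundreds of dimensions), and cosine similarity is one common closeness measure, assumed here rather than taken from the text.

```python
import math

# Toy vectors made up for illustration; they are not learned embeddings.
EMBEDDINGS = {
    "king": [0.90, 0.80, 0.10],
    "queen": [0.85, 0.82, 0.15],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


sim_king_queen = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
sim_king_apple = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"])
```

With these toy values, `sim_king_queen` comes out larger than `sim_king_apple`, which is the behaviour one would hope for from vectors that encode meaning.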

Bag-of-words Model

The bag-of-words model is a simplifying representation used in natural language processing and information retrieval (IR). In this model, a text (such as a sentence or a document) is represented as the bag (multiset) of its words, disregarding grammar and even word order but keeping multiplicity. The bag-of-words model has also been used for computer vision. The bag-of-words model is commonly used in methods of document classification where the (frequency of) occurrence of each word is used as a feature for training a classifier. An early reference to "bag of words" in a linguistic context can be found in Zellig Harris's 1954 article on "Distributional Structure". The bag-of-words model is one example of a vector space model.

Example implementation

The following models a text document using bag-of-words. Here are two simple text documents:

(1) John likes to watch movies. Mary likes movies too.
(2) Mary also likes to watch football games.

Based on these two text ...
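One way to see what "bag (multiset) of words" means for the two documents above is to count tokens with Python's `collections.Counter`. This is a minimal sketch: the tokenization rule (runs of ASCII letters, case preserved) is an assumption made for the example, not something the source specifies.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Return the multiset of words in text, ignoring order and punctuation."""
    # Assumed tokenizer: every run of ASCII letters is one word.
    tokens = re.findall(r"[A-Za-z]+", text)
    return Counter(tokens)


doc1 = "John likes to watch movies. Mary likes movies too."
doc2 = "Mary also likes to watch football games."

bow1 = bag_of_words(doc1)
bow2 = bag_of_words(doc2)

# Adding two Counters sums the per-word counts across both documents.
combined = bow1 + bow2
```

Word order is lost (shuffling the words of `doc1` gives the same `Counter`), but multiplicity is kept: "likes" and "movies" each appear twice in the first bag.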

Synset

In metadata, a synonym ring, or synset, is a group of data elements that are considered semantically equivalent for the purposes of information retrieval. These data elements are frequently found in different metadata registries. Although a group of terms can be considered equivalent, metadata registries store the synonyms at a central location called the preferred data element. According to WordNet, a "synset" or synonym set is defined as a set of one or more synonyms that are interchangeable in some context without changing the truth value of the proposition in which they are embedded.

Example

The following are considered semantically equivalent and form a synonym ring:

* foaf:person
* gjxdm:Person
* niem:Person
* sumo:Human
* cyc:Person
* umbel:Person

Note that each data element has two components:

1. Namespace prefix, which is a shorthand for the name of the metadata registry
2. Data element name, which is the name of the object in each of the distinct metadata registry ...
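The synonym ring above can be modelled as a plain set of prefixed names. The sketch below is an assumption-laden illustration: the function names `split_element` and `are_equivalent` are hypothetical, and treating membership in the same ring as equivalence is a simplification of how a metadata registry would resolve synonyms.

```python
# The six data elements listed in the example, treated as one synonym ring.
SYNONYM_RING = frozenset({
    "foaf:person",
    "gjxdm:Person",
    "niem:Person",
    "sumo:Human",
    "cyc:Person",
    "umbel:Person",
})


def split_element(element):
    """Split 'prefix:name' into (namespace prefix, data element name)."""
    prefix, _, name = element.partition(":")
    return prefix, name


def are_equivalent(a, b, ring=SYNONYM_RING):
    """Two data elements are equivalent if both belong to the same ring."""
    return a in ring and b in ring


prefix, name = split_element("sumo:Human")
```

A retrieval system could use `are_equivalent` to expand a query for one element into a query for every member of its ring.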

Question Answering

Question answering (QA) is a computer science discipline within the fields of information retrieval and natural language processing (NLP), which is concerned with building systems that automatically answer questions posed by humans in a natural language.

Overview

A question answering implementation, usually a computer program, may construct its answers by querying a structured database of knowledge or information, usually a knowledge base. More commonly, question answering systems can pull answers from an unstructured collection of natural language documents. Some examples of natural language document collections used for question answering systems include:

* a local collection of reference texts
* internal organization documents and web pages
* compiled newswire reports
* a set of Wikipedia pages
* a subset of World Wide Web pages

Types of question answering

Question answering research attempts to deal with a wide range of question types including: fact, list, definition, ' ...

WordNet

WordNet is a lexical database of semantic relations between words in more than 200 languages. WordNet links words into semantic relations including synonyms, hyponyms, and meronyms. The synonyms are grouped into "synsets" with short definitions and usage examples. WordNet can thus be seen as a combination and extension of a dictionary and thesaurus. While it is accessible to human users via a web browser, its primary use is in automatic text analysis and artificial intelligence applications. WordNet was first created in the English language, and the English WordNet database and software tools have been released under a BSD style license and are freely available for download from the WordNet website.

History and team members

WordNet was first created in English only in the Cognitive Science Laboratory of Princeton University under the direction of psychology professor George Armitage Miller starting in 1985 and was later directed by Christiane Fellbaum. The project wa ...

Automatic Summarization

Automatic summarization is the process of shortening a set of data computationally, to create a subset (a summary) that represents the most important or relevant information within the original content. Artificial intelligence algorithms are commonly developed and employed to achieve this, specialized for different types of data. Text summarization is usually implemented by natural language processing methods, designed to locate the most informative sentences in a given document. On the other hand, visual content can be summarized using computer vision algorithms. Image summarization is the subject of ongoing research; existing approaches typically attempt to display the most representative images from a given image collection, or generate a video that only includes the most important content from the entire collection. Video summarization algorithms identify and extract from the original video content the most important frames ("key-frames"), and/or the most important video se ...

Word-sense Disambiguation

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious/automatic but can often come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other computer-related writing, such as discourse, improving relevance of search engines, anaphora resolution, coherence, and inference. Given that natural language requires reflection of neurological reality, as shaped by the abilities provided by the brain's neural networks, computer science has had a long-term challenge in developing the ability in computers to do natural language processing and machine learning. Many techniques have been researched, including dictionary-based methods that use the knowledge encoded in lexical resources, supervised machine le ...

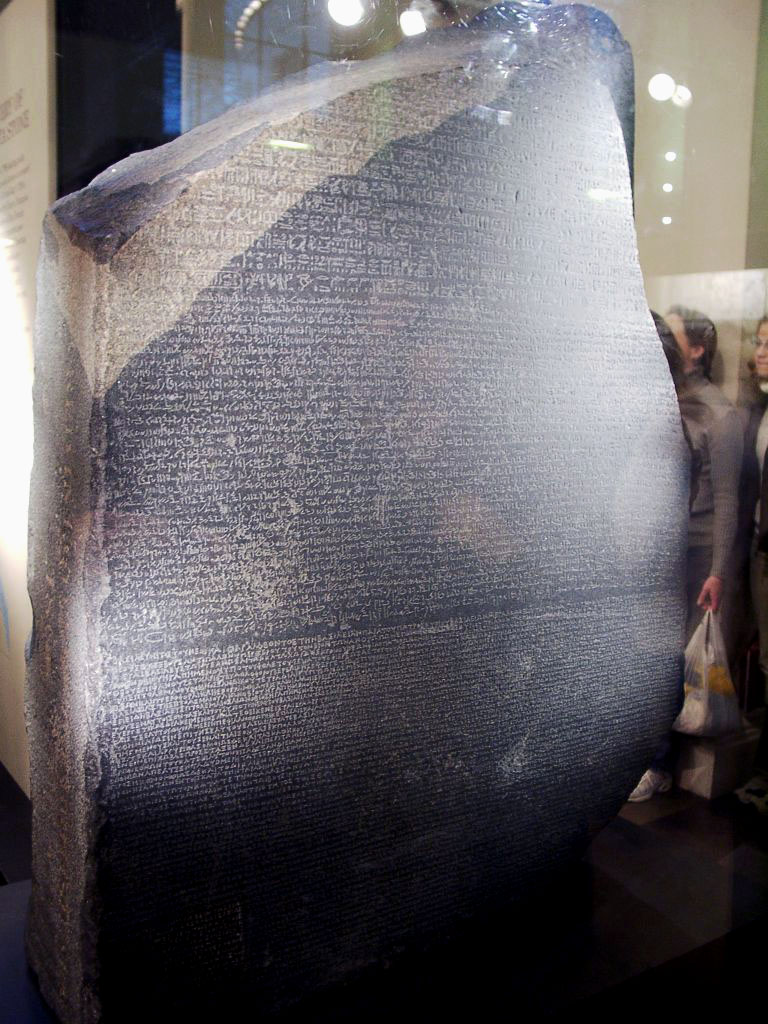

Writing

Writing is a medium of human communication which involves the representation of a language through a system of physically inscribed, mechanically transferred, or digitally represented symbols. Writing systems do not themselves constitute human languages (with the debatable exception of computer languages); they are a means of rendering language into a form that can be reconstructed by other humans separated by time and/or space. While not all languages use a writing system, those that do can complement and extend capacities of spoken language by creating durable forms of language that can be transmitted across space (e.g. written correspondence) and stored over time (e.g. libraries or other public records). It has also been observed that the activity of writing itself can have knowledge-transforming effects, since it allows humans to externalize their thinking in forms that are easier to reflect on, elaborate, reconsider, and revise. A system of writing relies on many of ...

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History

Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, ...
