Learning To Rank

Learning to rank, or machine-learned ranking (MLR), is the application of machine learning, typically supervised, semi-supervised or reinforcement learning, in the construction of ranking models for information retrieval systems. (Slides from Tie-Yan Liu's talk at the WWW 2009 conference are available online.) Training data may, for example, consist of lists of items with some partial order specified between items in each list. This order is typically induced by giving a numerical or ordinal score or a binary judgment (e.g. "relevant" or "not relevant") for each item. The goal of constructing the ranking model is to rank new, unseen lists in a similar way to rankings in the training data. Applications, in information retrieval: Ranking is a central part of many information retrieval problems, such as document retrieval, collaborative filtering, sentiment analysis, and online advertising. A possi ...
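The training data described in the Learning To Rank entry (lists of items whose per-item judgments induce a partial order) can be illustrated with a minimal sketch. The function name `pairwise_preferences`, the document ids, and the graded labels are hypothetical, chosen only for illustration; the excerpt does not prescribe any particular representation.

```python
from itertools import combinations


def pairwise_preferences(items):
    """Turn per-item relevance labels into (preferred, other) pairs.

    `items` is a list of (item_id, label) tuples for ONE list (e.g. one query).
    Items with equal labels produce no pair, so the resulting order is partial.
    """
    pairs = []
    for (item_a, label_a), (item_b, label_b) in combinations(items, 2):
        if label_a > label_b:
            pairs.append((item_a, item_b))
        elif label_b > label_a:
            pairs.append((item_b, item_a))
        # Equal labels: no preference is recorded between the two items.
    return pairs


# Hypothetical judged list: higher label means more relevant.
judged_list = [("doc1", 2), ("doc2", 0), ("doc3", 2), ("doc4", 1)]
preferences = pairwise_preferences(judged_list)
```

Here `doc1` and `doc3` share a label, so neither is preferred over the other, which is what makes the induced order partial rather than total.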

World Wide Web Conference

The ACM Web Conference (formerly known as International World Wide Web Conference, abbreviated as WWW) is a yearly international academic conference on the topic of the future direction of the World Wide Web. The first conference was organized by Robert Cailliau and held in 1994 at CERN in Geneva, Switzerland. The conference has been organized every year since by the International World Wide Web Conference Committee (IW3C2), also founded by Robert Cailliau and colleague Joseph Hardin. In 2020, the Web Conference series became affiliated with the Association for Computing Machinery (ACM), where it is supported by ACM SIGWEB. The conference's location rotates among North America, Europe, and Asia, and its events usually span a period of five days. The conference aims to provide a forum in which "key influencers, decision makers, technologists, businesses and standards bodies" can present their ongoing work, research, and opinions and receive feedback from some ...

Google SearchWiki

SearchWiki was a Google Search feature that allowed logged-in users to annotate and re-order search results. The annotations and modified order applied only to the user's own searches, but it was possible to view other users' annotations for a given search query. SearchWiki was released on November 20, 2008 and discontinued on March 3, 2010. All previously created SearchWiki edits are preserved on the logged-in user's SearchWiki notes page. Google Stars replaced SearchWiki. Users no longer have the option to annotate or re-order search results. Instead, a user clicks on a "star marker", which makes the entry appear in a starred results list at the top of a new search.

Language Modeling

A language model is a model of the human brain's ability to produce natural language. Language models are useful for a variety of tasks, including speech recognition, machine translation (Jacob Andreas, Andreas Vlachos, and Stephen Clark (2013), "Semantic parsing as machine translation", Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Volume 2: Short Papers), natural language generation (generating more human-like text), optical character recognition, route optimization, handwriting recognition, grammar induction, and information retrieval. Large language models (LLMs), currently their most advanced form, are predominantly based on transformers trained on larger datasets (frequently using words scraped from the public internet). They have superseded recurrent neural network-based models, which had previously superseded purely statistical models such as the word n-gram language model. History: Noam Chomsky did pioneering work on lang ...

Query-level Feature

A query-level feature (QLF) is a ranking feature used in a machine-learned ranking algorithm. Example QLFs:

* How many times has this query been run in the last month?
* How many words are in the query?
* What is the sum/average/min/max/median of the BM25F values for the query?
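The example QLFs listed above can be computed in a few lines. This is only a sketch under stated assumptions: `query_level_features` is a hypothetical helper, `query_log` is assumed to already contain only last month's queries, and `bm25f_scores` is assumed to be a non-empty list of precomputed BM25F values for the query's candidate documents (computing BM25F itself is out of scope here).

```python
from statistics import mean, median


def query_level_features(query, query_log, bm25f_scores):
    """Compute the example query-level features for one query.

    query_log    -- queries issued in the last month (assumed pre-filtered)
    bm25f_scores -- precomputed BM25F values for this query (assumed non-empty)
    """
    return {
        # How many times has this query been run in the last month?
        "run_count": sum(1 for logged in query_log if logged == query),
        # How many words are in the query? (whitespace tokenization)
        "word_count": len(query.split()),
        # Aggregates of the BM25F values for the query.
        "bm25f_sum": sum(bm25f_scores),
        "bm25f_mean": mean(bm25f_scores),
        "bm25f_min": min(bm25f_scores),
        "bm25f_max": max(bm25f_scores),
        "bm25f_median": median(bm25f_scores),
    }


# Hypothetical inputs for illustration only.
features = query_level_features(
    "learning to rank",
    ["learning to rank", "pagerank", "learning to rank"],
    [1.0, 3.0, 2.0],
)
```

Every document retrieved for the same query shares these values, which is what distinguishes a query-level feature from a per-document one.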

PageRank

PageRank (PR) is an algorithm used by Google Search to rank web pages in its search engine results. It is named after both the term "web page" and co-founder Larry Page. PageRank is a way of measuring the importance of website pages. PageRank is not the only algorithm Google uses to order search results, but it was the first the company used and it is the best known. As of September 24, 2019, all patents associated with PageRank have expired. Description: PageRank is a link analysis algorithm that assigns a numerical weighting to each element of a hyperlinked set of documents, such as the World Wide Web, with the purpose of "measuring" its relative importance within the set. The algorithm may be applied to any collection of entities with reciprocal quotations and references. The numerical weight that it assigns to any given element E is referred to as the PageRank of E and denoted by PR(E). A PageRank resu ...
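To make the idea of a numerical weight PR(E) per element concrete, here is a minimal sketch of the commonly taught damped, iterative formulation of PageRank. The excerpt above does not give the formula, so the damping factor of 0.85, the fixed iteration count, the handling of pages without outgoing links, and the toy graph are all assumptions made for illustration, not a description of Google's implementation.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Iteratively estimate PR(E) for every page E.

    links -- dict mapping each page to the list of pages it links to;
             every link target is assumed to also appear as a key.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start from a uniform weighting

    for _ in range(iterations):
        # Every page receives a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its damped rank evenly among its outlinks.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Assumption: a page with no outlinks spreads rank to all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page link graph.
graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
scores = pagerank(graph)
```

In this toy graph, page C is linked from three pages and ends up with the largest weight, while page D, which nothing links to, keeps only the baseline share.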

Feature (Machine Learning)

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a data set. Choosing informative, discriminating, and independent features is crucial to produce effective algorithms for pattern recognition, classification, and regression tasks. Features are usually numeric, but other types such as strings and graphs are used in syntactic pattern recognition, after some pre-processing step such as one-hot encoding. The concept of "features" is related to that of explanatory variables used in statistical techniques such as linear regression. Feature types: In feature engineering, two types of features are commonly used: numerical and categorical. Numerical features are continuous values that can be measured on a scale. Examples of numerical features include age, height, weight, and income. Numerical features can be used in machine learning algorithms directly. Categorical features are discrete values that can be grouped into ...
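The one-hot encoding step mentioned above can be sketched as follows. The helper `one_hot` and the colour values are hypothetical, and sorting the categories is just one way to get a stable column order.

```python
def one_hot(values):
    """Encode a list of categorical values as 0/1 vectors.

    Returns the sorted category list (one column per category)
    and one vector per input value.
    """
    categories = sorted(set(values))
    column = {category: i for i, category in enumerate(categories)}
    vectors = []
    for value in values:
        row = [0] * len(categories)
        row[column[value]] = 1  # exactly one position is set per value
        vectors.append(row)
    return categories, vectors


# Hypothetical categorical feature.
colors = ["red", "green", "red", "blue"]
categories, encoded = one_hot(colors)
```

After this step a categorical feature is represented numerically, so it can be placed alongside numerical features such as age or income.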

Bag Of Words

The bag-of-words (BoW) model is a model of text which uses an unordered collection (a "bag") of words. It is used in natural language processing and information retrieval (IR). It disregards word order (and thus most of syntax or grammar) but captures multiplicity. The bag-of-words model is commonly used in methods of document classification where, for example, the (frequency of) occurrence of each word is used as a feature for training a classifier. It has also been used for computer vision. An early reference to "bag of words" in a linguistic context can be found in Zellig Harris's 1954 article on Distributional Structure. Definition: The following models a text document using bag-of-words. Here are two simple text documents: (1) John likes to watch movies. Mary likes movies too. (2) Mary also likes to watch football games. Based on these two text documents, a list is constructed as follows for each document: "John","likes","to","watch","movies","Mary","likes ...
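The two example documents above can be turned into bags of words with a short sketch. The tokenizer here (runs of ASCII letters, case preserved) is an assumption made for this example; the excerpt does not specify one.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Count word occurrences, ignoring order and punctuation."""
    tokens = re.findall(r"[A-Za-z]+", text)  # assumed tokenizer: letter runs
    return Counter(tokens)


doc1 = "John likes to watch movies. Mary likes movies too."
doc2 = "Mary also likes to watch football games."

bow1 = bag_of_words(doc1)
bow2 = bag_of_words(doc2)
```

The counts keep multiplicity ("likes" and "movies" each occur twice in the first document) while discarding word order, which matches the definition given in the entry.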

Feature Vector

In machine learning and pattern recognition, a feature is an individual measurable property or characteristic of a data set. Choosing informative, discriminating, and independent features is crucial to produce effective algorithms for pattern recognition, classification, and regression tasks. Features are usually numeric, but other types such as strings and graphs are used in syntactic pattern recognition, after some pre-processing step such as one-hot encoding. The concept of "features" is related to that of explanatory variables used in statistical techniques such as linear regression. Feature types: In feature engineering, two types of features are commonly used: numerical and categorical. Numerical features are continuous values that can be measured on a scale. Examples of numerical features include age, height, weight, and income. Numerical features can be used in machine learning algorithms directly. Categorical features are discrete values that can be grouped into c ...

Recommender System

A recommender system (RecSys), or recommendation system (sometimes with "system" replaced by terms such as "platform", "engine", or "algorithm", and sometimes called simply "the algorithm"), is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer. Modern recommendation systems, such as those used on large social media sites, make extensive use of AI, machine learning and related techniques to learn the behavior and preferences of each user and categorize content to tailor their feed individually. Typically, the suggestions refer to various decision-making processes, such as what product to purchase, what music to listen to, or what online news to read. Recommender systems are used in a variety of areas, with commonly recognised ex ...

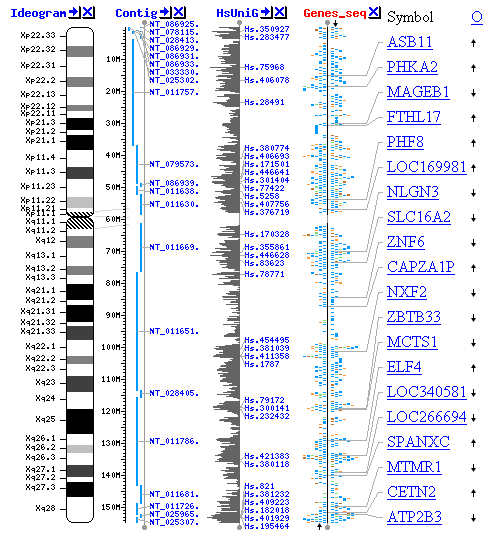

Computational Biology

Computational biology refers to the use of techniques in computer science, data analysis, mathematical modeling and computational simulations to understand biological systems and relationships. An intersection of computer science, biology, and data science, the field also has foundations in applied mathematics, molecular biology, cell biology, chemistry, and genetics. History: Bioinformatics, the analysis of informatics processes in biological systems, began in the early 1970s. At this time, research in artificial intelligence was using network models of the human brain in order to generate new algorithms. This use of biological data pushed biological researchers to use computers to evaluate and compare large data sets in their own field. By 1982, researchers shared information via punch cards. The amount of data grew exponentially by the end of the 1980s, requiring new computational methods for quickly interpreting relevant information. Per ...

Machine Translation

Machine translation is the use of computational techniques to translate text or speech from one language to another, including the contextual, idiomatic and pragmatic nuances of both languages. Early approaches were mostly rule-based or statistical. These methods have since been superseded by neural machine translation and large language models. History, origins: The origins of machine translation can be traced back to the work of Al-Kindi, a ninth-century Arabic cryptographer who developed techniques for systemic language translation, including cryptanalysis, frequency analysis, and probability and statistics, which are used in modern machine translation. The idea of machine translation later appeared in the 17th century. In 1629, René Descartes proposed a universal language, with equivalent ideas in different tongues sharing one symbol. The idea of using digital computers for translation of natural languages was proposed as early as 1947 by England's A. D. Booth and Warr ...