
Jaime Carbonell

Jaime Guillermo Carbonell (July 29, 1953 – February 28, 2020) was a computer scientist who made seminal contributions to the development of natural language processing tools and technologies. His extensive research in machine translation resulted in the development of several state-of-the-art language translation and artificial intelligence systems. He earned B.S. degrees in Physics and in Mathematics from MIT in 1975 and completed his Ph.D. under Dr. Roger Schank at Yale University in 1979. He joined Carnegie Mellon University as an assistant professor of computer science in 1979 and lived in Pittsburgh from then on. He was affiliated with the Language Technologies Institute, Computer Science Department, Machine Learning Department, and Computational Biology Department at Carnegie Mellon. His interests spanned several areas of artificial intelligence, language technologies and machine learning. In particular, his research focused on areas such as text mining (extraction, ca ...

Yale University

Yale University is a private Ivy League research university in New Haven, Connecticut, United States. Founded in 1701, Yale is the third-oldest institution of higher education in the United States, and one of the nine colonial colleges chartered before the American Revolution. Yale was established as the Collegiate School in 1701 by Congregationalist clergy of the Connecticut Colony. Originally restricted to instructing ministers in theology and sacred languages, the school's curriculum expanded, incorporating humanities and sciences by the time of the American Revolution. In the 19th century, the college expanded into graduate and professional instruction, awarding the first PhD in the United States in 1861 and organizing as a university in 1887. Yale's faculty and student populations grew rapidly after 1890 due to the expansion of the physical campus and its scientif ...

Lycos

Lycos, Inc. (stylized as LYCOS), is a web search engine and web portal established in 1994, spun out of Carnegie Mellon University. Lycos also encompasses a network of email, web hosting, social networking, and entertainment websites. The company is based in Waltham, Massachusetts, and is a subsidiary of Ybrant Digital. Etymology: The word "Lycos" is short for "Lycosidae", which is Latin for "wolf spider". History: Lycos is a university spin-off that began in May 1994 as a research project by Michael Loren Mauldin of Carnegie Mellon University in Pittsburgh. Lycos Inc. was formed with approximately US$2 million in venture capital funding from CMGI. Bob Davis became the CEO and first employee of the new company in 1995, and concentrated on building the company into an advertising-supported web portal, led by Bill Townsend, who served as Vice President, Advertising. Lycos enjoyed several years of growth during the 1990s and became the most visited online destination in the w ...

Case-based Reasoning

Case-based reasoning (CBR), broadly construed, is the process of solving new problems based on the solutions of similar past problems. In everyday life, an auto mechanic who fixes an engine by recalling another car that exhibited similar symptoms is using case-based reasoning. A lawyer who advocates a particular outcome in a trial based on legal precedents or a judge who creates case law is using case-based reasoning. So, too, an engineer copying working elements of nature (practicing biomimicry) is treating nature as a database of solutions to problems. Case-based reasoning is a prominent type of analogy solution making. It has been argued that case-based reasoning is not only a powerful method for computer reasoning, but also a pervasive behavior in everyday human problem solving; or, more radically, that all reasoning is based on past cases personally experienced. This view is related to prototype theory, which is most deeply explored in cognitive science. Process: Case ...
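
The auto-mechanic example above can be sketched as a retrieval step over stored cases. This is a minimal illustration only, not a full CBR system: the `Case` class, the `retrieve` function, the Jaccard similarity measure, and the sample case base are all hypothetical choices made for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """A past problem (set of symptoms) and the solution that worked (hypothetical)."""
    symptoms: frozenset
    solution: str


def jaccard(a, b):
    """Overlap between two symptom sets: |a & b| / |a | b|, or 0.0 if both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve(case_base, symptoms):
    """Return the stored case whose symptoms are most similar to the new problem."""
    query = frozenset(symptoms)
    return max(case_base, key=lambda case: jaccard(case.symptoms, query))


# Illustrative case base; the entries are made up for this example.
CASE_BASE = [
    Case(frozenset({"engine stalls", "rough idle"}), "clean the fuel injectors"),
    Case(frozenset({"no crank", "dim lights"}), "replace the battery"),
    Case(frozenset({"overheating", "coolant loss"}), "replace the water pump"),
]

best = retrieve(CASE_BASE, {"dim lights", "no crank", "clicking noise"})
print(best.solution)
```

Set overlap is only one possible similarity measure; a real system would likely weight features and adapt the retrieved solution to the new problem rather than reuse it unchanged.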

Example-based Machine Translation

Example-based machine translation (EBMT) is a method of machine translation often characterized by its use of a bilingual corpus with parallel texts as its main knowledge base at run-time. It is essentially a translation by analogy and can be viewed as an implementation of a case-based reasoning approach to machine learning. Translation by analogy: At the foundation of example-based machine translation is the idea of translation by analogy. When applied to the process of human translation, the idea that translation takes place by analogy is a rejection of the idea that people translate sentences by doing deep linguistic analysis. Instead, it is founded on the belief that people translate by first decomposing a sentence into certain phrases, then by translating these phrases, and finally by properly composing these fragments into one long sentence. Phrasal translations are translated by analogy to previous translations. The principle of translation by analogy is encoded to example-b ...

Statistical Machine Translation

Statistical machine translation (SMT) is a machine translation approach where translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with rule-based approaches to machine translation as well as with example-based machine translation. It superseded the earlier rule-based approach, which required an explicit description of each and every linguistic rule, was costly, and often did not generalize to other languages. The first ideas of statistical machine translation were introduced by Warren Weaver in 1949, including the idea of applying Claude Shannon's information theory. Statistical machine translation was re-introduced in the late 1980s and early 1990s by researchers at IBM's Thomas J. Watson Research Center. Before the introduction of neural machine translation, it was by far the most widely studied machine translation method. Basis: The idea b ...

Question Answering

Question answering (QA) is a computer science discipline within the fields of information retrieval and natural language processing (NLP) that is concerned with building systems that automatically answer questions that are posed by humans in a natural language. Overview: A question-answering implementation, usually a computer program, may construct its answers by querying a structured database of knowledge or information, usually a knowledge base. More commonly, question-answering systems can pull answers from an unstructured collection of natural language documents. Some examples of natural language document collections used for question answering systems include:

* a collection of reference texts
* internal organization documents and web pages
* compiled newswire reports
* a set of Wikipedia pages
* a subset of World Wide Web pages

Types of question answering: Question-answering research attempts to develop ways of answering a wide range of question types, including fact, li ...

Automatic Summarization

Automatic summarization is the process of shortening a set of data computationally, to create a subset (a summary) that represents the most important or relevant information within the original content. Artificial intelligence algorithms are commonly developed and employed to achieve this, specialized for different types of data. Text summarization is usually implemented by natural language processing methods, designed to locate the most informative sentences in a given document. On the other hand, visual content can be summarized using computer vision algorithms. Image summarization is the subject of ongoing research; existing approaches typically attempt to display the most representative images from a given image collection, or generate a video that only includes the most important content from the entire collection. Video summarization algorithms identify and extract from the original video content the most important frames (key-frames), and/or the most important video seg ...
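
As a rough illustration of locating the most informative sentences in a document, the sketch below scores each sentence by the average corpus frequency of its words and keeps the top-scoring ones. The function names and the scoring rule are assumptions made for this example; it does no stop-word removal and is not how any particular summarizer works.

```python
import re
from collections import Counter


def split_sentences(text):
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def words(sentence):
    """Lowercased alphabetic tokens of a sentence."""
    return re.findall(r"[a-z']+", sentence.lower())


def summarize(text, n=1):
    """Return the n highest-scoring sentences, in their original order."""
    sentences = split_sentences(text)
    # Word frequencies over the whole document (hypothetical scoring basis).
    freq = Counter(w for s in sentences for w in words(s))

    def score(sentence):
        tokens = words(sentence)
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    top = sorted(range(len(sentences)),
                 key=lambda i: score(sentences[i]),
                 reverse=True)[:n]
    return [sentences[i] for i in sorted(top)]


print(summarize("Cats sleep. Cats eat fish. Dogs bark.", n=2))
```

Averaging by sentence length keeps long sentences from winning on word count alone; that is a design choice of this sketch, not a requirement of extractive summarization.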

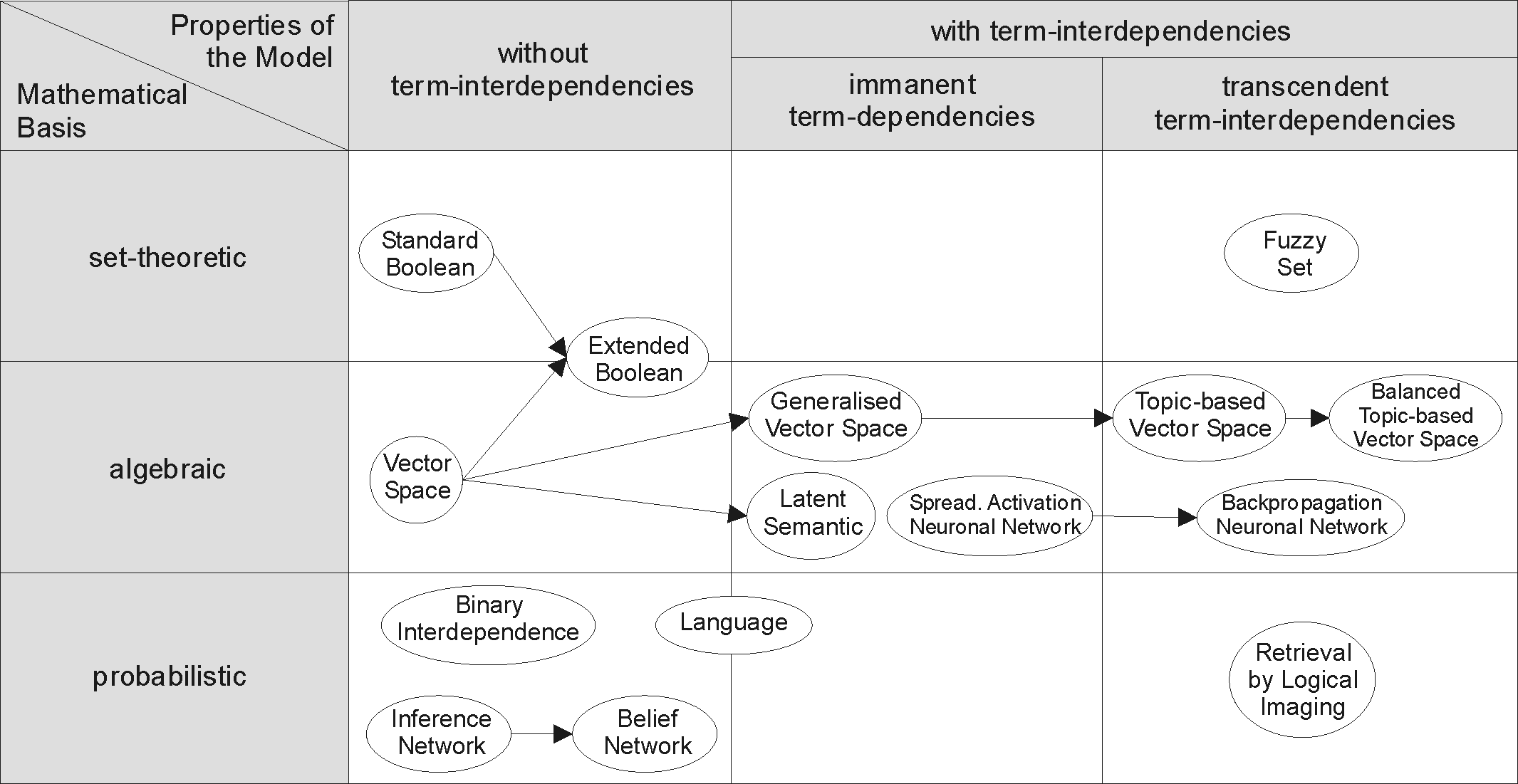

Information Retrieval

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications. Overview: An information retrieval process begins when a user enters a query into the sys ...
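
To make the idea of matching a search query against full-text documents concrete, here is a minimal ranking sketch. It uses TF-IDF weighting, which is one common scheme chosen for this example rather than anything the entry above prescribes; the `rank` function and the sample documents are hypothetical.

```python
import math
from collections import Counter


def tokens(text):
    """Whitespace tokenizer with lowercasing (deliberately simplistic)."""
    return text.lower().split()


def rank(query, docs):
    """Return indices of docs matching the query, best match first.

    Score = sum over query terms of tf(term, doc) * log(N / df(term)).
    Documents with a score of zero are left out.
    """
    tokenized = [tokens(d) for d in docs]
    n = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(t for toks in tokenized for t in set(toks))

    scored = []
    for i, toks in enumerate(tokenized):
        tf = Counter(toks)
        score = sum(tf[t] * math.log(n / df[t]) for t in tokens(query) if t in tf)
        scored.append((score, i))

    # Sort by descending score, breaking ties by document order.
    return [i for score, i in sorted(scored, key=lambda p: (-p[0], p[1])) if score > 0]


DOCS = [
    "wolf spider habitat",
    "web search engine",
    "search engine ranking for web pages",
]
print(rank("spider web", DOCS))
```

A production IR system would build an inverted index instead of scanning every document per query; the linear scan here only keeps the example short.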

Text Mining

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005), there are three perspectives of text mining: information extraction, data mining, and knowledge discovery in databases (KDD). Text mining usually involves the process of structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluation and interpretation of the output. 'High quality' in text mining usually ...

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Language Technologies

Language technology, often called human language technology (HLT), studies methods of how computer programs or electronic devices can analyze, produce, modify or respond to human texts and speech. Working with language technology often requires broad knowledge not only about linguistics but also about computer science. It consists of natural language processing (NLP) and computational linguistics (CL) on the one hand, many application oriented aspects of these, and more low-level aspects such as encoding and speech technology on the other hand. Note that these elementary aspects are normally not considered to be within the scope of related terms such as natural language processing and (applied) computational linguistics, which are o ...