
Iteratively Reweighted Least Squares

The method of iteratively reweighted least squares (IRLS) is used to solve certain optimization problems with objective functions of the form of a ''p''-norm: $\underset{\boldsymbol\beta}{\operatorname{arg\,min}} \sum_{i=1}^n \big| y_i - f_i (\boldsymbol\beta) \big|^p$, by an iterative method in which each step involves solving a weighted least squares problem of the form (C. Sidney Burrus, ''Iterative Reweighted Least Squares''): $\boldsymbol\beta^{(t+1)} = \underset{\boldsymbol\beta}{\operatorname{arg\,min}} \sum_{i=1}^n w_i (\boldsymbol\beta^{(t)}) \big| y_i - f_i (\boldsymbol\beta) \big|^2$. IRLS is used to find the maximum likelihood estimates of a generalized linear model, and in robust regression to find an M-estimator, as a way of mitigating the influence of outliers in an otherwise normally distributed data set, for example by minimizing the least absolute errors rather than the least square errors. One of the advantages of IRLS over linear programming and convex programming is that it can be used with Gauss–Newton and Levenberg–Marquardt numerical algorithms. E ...
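The reweighting step described above can be sketched for the linear case, where $f_i(\boldsymbol\beta)$ is the ''i''th row of a design matrix times $\boldsymbol\beta$. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `irls_lp`, the weight choice $w_i = |r_i|^{p-2}$, the floor `eps` that guards against division by zero, and the fixed iteration count are all choices made here and are not taken from the text.

```python
import numpy as np

def irls_lp(X, y, p=1.0, n_iter=50, eps=1e-8):
    """Approximately minimize sum_i |y_i - X[i] @ beta|**p by IRLS.

    Assumed weights: w_i = max(|r_i|, eps)**(p - 2), where r_i is the
    residual at the current iterate. eps is a hypothetical safeguard.
    """
    # Start from the ordinary least squares solution.
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    for _ in range(n_iter):
        r = np.abs(y - X @ beta)             # residual magnitudes
        w = np.maximum(r, eps) ** (p - 2)    # reweighting step
        sw = np.sqrt(w)
        # Weighted least squares solved as OLS on rows scaled by sqrt(w).
        beta = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)[0]
    return beta

# Usage: a straight line with one gross outlier, fitted with p = 1.
x = np.arange(10.0)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x
y[9] = 100.0
print(irls_lp(X, y, p=1.0))
```

With `p=2` every weight equals 1, so each iteration reduces to ordinary least squares; with `p=1` the large residual of the outlier receives a small weight, which is the robustness effect the paragraph refers to.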

Objective Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, this ...

Lp Quasi-norm

In mathematics, the $L^p$ spaces are function spaces defined using a natural generalization of the $p$-norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue, although according to the Bourbaki group they were first introduced by Frigyes Riesz. $L^p$ spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are used also in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines. Applications (statistics): In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of $L^p$ metrics, and measures of central tendency can be characterized as solutions to variational problems. In penalized regression, "L1 penalty" and "L2 penalty" refer ...

Weiszfeld's Algorithm

In geometry, the geometric median of a discrete set of sample points in a Euclidean space is the point minimizing the sum of distances to the sample points. This generalizes the median, which has the property of minimizing the sum of distances for one-dimensional data, and provides a central tendency in higher dimensions. It is also known as the 1-median, spatial median, Euclidean minisum point, or Torricelli point. The geometric median is an important estimator of location in statistics, where it is also known as the $L_1$ estimator. It is also a standard problem in facility location, where it models the problem of locating a facility to minimize the cost of transportation. The special case of the problem for three points in the plane (that is, three sample points in two dimensions) is sometimes also known as Fermat's problem; it arises in the construction of minimal Steiner trees, and was originally posed as a problem by Pierre de Fermat and solved by Evangelista Torr ...
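The snippet above defines the geometric median but does not show the iteration named in the heading. As a hedged sketch: Weiszfeld's algorithm is commonly stated as repeatedly replacing the current estimate by the average of the sample points weighted by the inverse of their distance to that estimate. The function name `geometric_median`, the centroid starting point, the distance floor `eps`, and the stopping tolerance are assumptions made for this illustration.

```python
import numpy as np

def geometric_median(points, n_iter=200, eps=1e-12, tol=1e-12):
    """Estimate the point minimizing the sum of Euclidean distances.

    Weiszfeld-style update: inverse-distance weighted mean of the points.
    eps (distance floor) and tol (step tolerance) are hypothetical knobs.
    """
    pts = np.asarray(points, dtype=float)
    m = pts.mean(axis=0)                      # start at the centroid
    for _ in range(n_iter):
        d = np.maximum(np.linalg.norm(pts - m, axis=1), eps)
        w = 1.0 / d                           # inverse-distance weights
        m_new = (w[:, None] * pts).sum(axis=0) / w.sum()
        if np.linalg.norm(m_new - m) < tol:   # negligible movement: stop
            m = m_new
            break
        m = m_new
    return m

# Usage: three points in the plane (Fermat's problem).
print(geometric_median([(-1.0, 0.0), (1.0, 0.0), (0.0, 3.0)]))
```

Each update is a weighted least squares step with weights depending on the previous iterate, which is why the method is usually presented as a special case of iteratively reweighted least squares.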

Feasible Generalized Least Squares

In statistics, generalized least squares (GLS) is a technique for estimating the unknown parameters in a linear regression model when there is a certain degree of correlation between the residuals in a regression model. In these cases, ordinary least squares and weighted least squares can be statistically inefficient, or even give misleading inferences. GLS was first described by Alexander Aitken in 1936. Method outline: In standard linear regression models we observe data $\{y_i, x_{ij}\}_{i=1,\dots,n,\ j=2,\dots,k}$ on ''n'' statistical units. The response values are placed in a vector $\mathbf{y} = \left( y_1, \dots, y_n \right)^\mathsf{T}$, and the predictor values are placed in the design matrix $\mathbf{X} = \left( \mathbf{x}_1^\mathsf{T}, \dots, \mathbf{x}_n^\mathsf{T} \right)^\mathsf{T}$, where $\mathbf{x}_i = \left( 1, x_{i2}, \dots, x_{ik} \right)$ is a vector of the ''k'' predictor variables (including a constant) for the ''i''th unit. The model forces the conditional mean of $\mathbf{y}$ given $\mathbf{X}$ to be a linear function of $\mathbf{X}$, and assumes the conditional variance o ...
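For orientation, the standard GLS estimate can be sketched as follows, assuming the error covariance matrix is known. The symbol `omega` for that matrix and the function name `gls` are introduced here for illustration and do not appear in the truncated text above; a feasible GLS procedure would first have to estimate `omega` from the data, which this sketch does not attempt.

```python
import numpy as np

def gls(X, y, omega):
    """GLS estimate (X' omega^-1 X)^-1 X' omega^-1 y for a known omega.

    omega is assumed symmetric positive definite. The data are whitened
    with its Cholesky factor, then ordinary least squares is applied.
    """
    L = np.linalg.cholesky(omega)     # omega = L @ L.T
    Xw = np.linalg.solve(L, X)        # whitened design matrix
    yw = np.linalg.solve(L, y)        # whitened response
    return np.linalg.lstsq(Xw, yw, rcond=None)[0]

# Usage: with omega equal to the identity, GLS coincides with OLS.
X = np.column_stack([np.ones(5), np.arange(5.0)])
y = np.array([1.0, 2.9, 5.2, 7.1, 8.8])
print(gls(X, y, np.eye(5)))
```

Whitening is used instead of forming the inverse of `omega` explicitly, which is a common numerical choice rather than a requirement of the method.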

Huber Loss

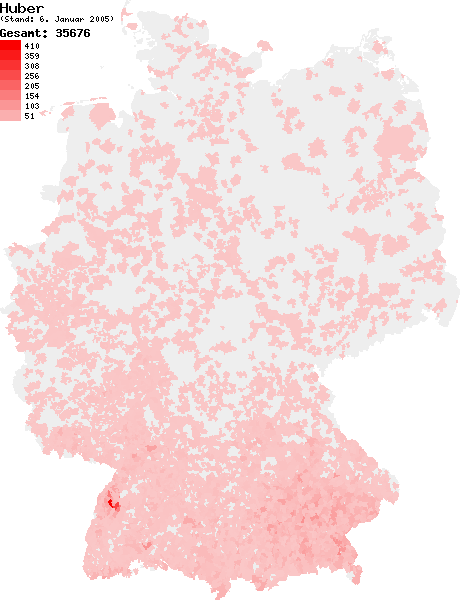

Huber is a German-language surname. It derives from the German word ''Hube'' meaning hide, a unit of land a farmer might possess, granting them the status of a free tenant. It is in the top ten most common surnames in the German-speaking world, especially in Austria and Switzerland where it is the surname of approximately 0.3% of the population. Variants arising from varying dialectal pronunciation of the surname include Hueber, Hüber, Huemer, Humer, Haumer, Huebner and (anglicized) Hoover. People with the surname Huber (A):
* Adam Huber (born 1987), American actor and model
* Alexander Huber (born 1968), German climber and mountaineer
* Alexander Huber (football) (born 1985), German football player
* Alyson Huber (born 1972), Californian legislator elected to the State Assembly in 2008
* Anja Huber (born 1983), German skeleton racer
* Anke Huber (born 1974), German tennis player
* Anthony Huber (born 1994), killed ...

Regularization (mathematics)

In mathematics, statistics, finance, computer science, particularly in machine learning and inverse problems, regularization is a process that changes the resulting answer to be "simpler". It is often used to obtain results for ill-posed problems or to prevent overfitting. Although regularization procedures can be divided in many ways, the following delineation is particularly helpful:
* Explicit regularization is regularization whenever one explicitly adds a term to the optimization problem. These terms could be priors, penalties, or constraints. Explicit regularization is commonly employed with ill-posed optimization problems. The regularization term, or penalty, imposes a cost on the optimization function to make the optimal solution unique.
* Implicit regularization is all other forms of regularization. This includes, for example, early stopping, using a robust loss function, and discarding outliers. Implicit regularization is essentially ubiquitous in modern machine learning app ...

Worcester Polytechnic Institute

Worcester Polytechnic Institute (WPI) is a private research university in Worcester, Massachusetts. Founded in 1865 in Worcester, WPI was one of the United States' first engineering and technology universities and now has 14 academic departments with over 50 undergraduate and graduate degree programs in science, engineering, technology, management, the social sciences, and the humanities and arts, leading to bachelor's, master's and PhD degrees. WPI's faculty works with students in a number of research areas, including biotechnology, fuel cells, information security, surface metrology, materials processing, and nanotechnology. It is classified among "R2: Doctoral Universities – High research activity". History: Worcester Polytechnic Institute was founded by self-made tinware manufacturer John Boynton and Ichabod Washburn, owner of the world's larges ...

Least Absolute Deviation

Least absolute deviations (LAD), also known as least absolute errors (LAE), least absolute residuals (LAR), or least absolute values (LAV), is a statistical optimality criterion and a statistical optimization technique based on minimizing the ''sum of absolute deviations'' (sum of absolute residuals or sum of absolute errors) or the $L_1$ norm of such values. It is analogous to the least squares technique, except that it is based on ''absolute values'' instead of squared values. It attempts to find a function which closely approximates a set of data by minimizing residuals between points generated by the function and corresponding data points. The LAD estimate also arises as the maximum likelihood estimate if the errors have a Laplace distribution. It was introduced in 1757 by Roger Joseph Boscovich. Formulation: Suppose that the data set consists of the points $(x_i, y_i)$ with $i = 1, 2, \ldots, n$. We want to find a function $f$ such that $f(x_i)\approx y_i$. ...

Diagonal Matrix

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can either be zero or nonzero. An example of a 2×2 diagonal matrix is $\left[\begin{smallmatrix} 3 & 0 \\ 0 & 2 \end{smallmatrix}\right]$, while an example of a 3×3 diagonal matrix is $\left[\begin{smallmatrix} 6 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{smallmatrix}\right]$. An identity matrix of any size, or any multiple of it (a scalar matrix), is a diagonal matrix. A diagonal matrix is sometimes called a scaling matrix, since matrix multiplication with it results in changing scale (size). Its determinant is the product of its diagonal values. Definition: As stated above, a diagonal matrix is a matrix in which all off-diagonal entries are zero. That is, the matrix $D = (d_{i,j})$ with ''n'' columns and ''n'' rows is diagonal if $\forall i,j \in \{1, 2, \ldots, n\},\ i \ne j \implies d_{i,j} = 0$. However, the main diagonal entries are unrestricted. The term ''diagonal matrix'' may ...

Linear Least Squares (mathematics)

Linear least squares (LLS) is the least squares approximation of linear functions to data. It is a set of formulations for solving statistical problems involved in linear regression, including variants for ordinary (unweighted), weighted, and generalized (correlated) residuals. Numerical methods for linear least squares include inverting the matrix of the normal equations and orthogonal decomposition methods. Main formulations: The three main linear least squares formulations are:
* Ordinary least squares (OLS) is the most common estimator. OLS estimates are commonly used to analyze both experimental and observational data. The OLS method minimizes the sum of squared residuals, and leads to a closed-form expression for the estimated value of the unknown parameter vector ''β'': $\hat{\boldsymbol\beta} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1} \mathbf{X}^\mathsf{T} \mathbf{y}$, where $\mathbf{y}$ is a vector whose ''i''th element is the ''i''th observation of the dependent variable, and $\mathbf{X}$ is a matrix whose '' ...
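The two numerical routes mentioned above, the normal equations and an orthogonal decomposition, can be compared in a short sketch. The function names `ols_normal` and `ols_qr` are hypothetical, and the example assumes a design matrix with full column rank.

```python
import numpy as np

def ols_normal(X, y):
    """OLS via the normal equations: solve (X'X) beta = X'y."""
    return np.linalg.solve(X.T @ X, X.T @ y)

def ols_qr(X, y):
    """OLS via a reduced QR decomposition: solve R beta = Q'y."""
    Q, R = np.linalg.qr(X)            # X = Q @ R, with R upper triangular
    return np.linalg.solve(R, Q.T @ y)

# Usage: noise-free data on the line y = 1 + 2x.
x = np.arange(5.0)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x
print(ols_normal(X, y), ols_qr(X, y))
```

Both routes give the same estimate in exact arithmetic; the QR route avoids forming $\mathbf{X}^\mathsf{T}\mathbf{X}$, which is generally considered better conditioned numerically.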

Linear Regression

In statistics, linear regression is a linear approach for modelling the relationship between a scalar response and one or more explanatory variables (also known as dependent and independent variables). The case of one explanatory variable is called ''simple linear regression''; for more than one, the process is called multiple linear regression. This term is distinct from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable. In linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, the conditional mean of the response given the values of the explanatory variables (or predictors) is assumed to be an affine function of those values; less commonly, the conditional median or some other quantile is used. Like all forms of regression analysis, linear regression focuse ...

Lp Space

In mathematics, the $L^p$ spaces are function spaces defined using a natural generalization of the $p$-norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue, although according to the Bourbaki group they were first introduced by Frigyes Riesz. $L^p$ spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are used also in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines. Applications (statistics): In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of $L^p$ metrics, and measures of central tendency can be characterized as solutions to variational problems. In penalized regression, "L1 penalty" and "L2 penalty" refer ...