Interval (statistics)

In statistics, interval estimation is the use of sample data to estimate an ''interval'' of plausible values of a parameter of interest. This is in contrast to point estimation, which gives a single value. The most prevalent forms of interval estimation are ''confidence intervals'' (a frequentist method) and ''credible intervals'' (a Bayesian method); less common forms include ''likelihood intervals'' and ''fiducial intervals''. Other forms of statistical intervals include ''tolerance intervals'' (covering a proportion of a sampled population) and ''prediction intervals'' (an estimate of a future observation, used mainly in regression analysis). Non-statistical methods that can lead to interval estimates include fuzzy logic. Discussion: The scientific problems associated with interval estimation may be summarised as follows: when interval estimates are reported, they should have a commonly held interpretation in the scientific community and more widely. In this regard, cr ...

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ...

Non-parametric Statistics

Nonparametric statistics is the branch of statistics that is not based solely on parametrized families of probability distributions (common examples of parameters are the mean and variance). Nonparametric statistics is based on either being distribution-free or having a specified distribution but with the distribution's parameters unspecified. Nonparametric statistics includes both descriptive statistics and statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are violated. Definitions: The term "nonparametric statistics" has been defined imprecisely in at least two ways. Applications and purpose: Non-parametric methods are widely used for studying populations that take on a ranked order (such as movie reviews receiving one to four stars). The use of non-parametric methods may be necessary when data have a ranking but no clear numerical interpretation, such as when assessing preferences. In terms of levels o ...

Behrens–Fisher Problem

In statistics, the Behrens–Fisher problem, named after Walter Behrens and Ronald Fisher, is the problem of interval estimation and hypothesis testing concerning the difference between the means of two normally distributed populations when the variances of the two populations are not assumed to be equal, based on two independent samples. Specification: One difficulty in discussing the Behrens–Fisher problem and its proposed solutions is that there are many different interpretations of what is meant by "the Behrens–Fisher problem". These differences involve not only what is counted as being a relevant solution, but even the basic statement of the context being considered. Context: Let $X_1, \ldots, X_n$ and $Y_1, \ldots, Y_m$ be i.i.d. samples from two populations which both come from the same location–scale family of distributions. The scale parameters are assumed to be unknown and not necessarily equal, and the problem is to assess whether ...
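As a rough illustration of the setting described above (two independent samples, variances not assumed equal), the sketch below computes Welch's t statistic and the Welch–Satterthwaite degrees of freedom. Welch's method is a widely used approximate approach and is an assumption here, since the excerpt does not name any particular solution; the function name `welch_t` and the sample data are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(x, y):
    """Return Welch's t statistic and approximate degrees of freedom.

    Assumes two independent samples, each with at least two observations
    and non-zero sample variance. This is an approximation, not an exact
    solution to the Behrens-Fisher problem.
    """
    nx, ny = len(x), len(y)
    # Estimated variance of each sample mean (sample variance / sample size).
    vx = variance(x) / nx
    vy = variance(y) / ny
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (vx + vy) ** 2 / (vx ** 2 / (nx - 1) + vy ** 2 / (ny - 1))
    return t, df


# Hypothetical data: the second sample is visibly more spread out.
t_stat, dof = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
print(t_stat, dof)
```

Turning the statistic into an interval or a p-value additionally requires quantiles of the t distribution, which the Python standard library does not provide.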

Predictive Inference

Statistical inference is the process of using data analysis to infer properties of an underlying distribution of probability (Upton, G., Cook, I. (2008), ''Oxford Dictionary of Statistics'', OUP). Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population. Inferential statistics can be contrasted with descriptive statistics. Descriptive statistics is solely concerned with properties of the observed data, and it does not rest on the assumption that the data come from a larger population. In machine learning, the term ''inference'' is sometimes used instead to mean "make a prediction, by evaluating an already trained model"; in this context inferring properties of the model is referred to as ''training'' or ''learning'' (rather than ''inference''), and using a model for prediction is referred to as ''inference'' (instead of ''prediction'') ...

Philosophy of Statistics

The philosophy of statistics involves the meaning, justification, utility, use and abuse of statistics and its methodology, and ethical and epistemological issues involved in the consideration of choice and interpretation of data and methods of statistics. Topics of interest: Foundations of statistics involves issues in theoretical statistics, its goals and optimization methods to meet these goals, parametric assumptions or lack thereof considered in nonparametric statistics, model selection for the underlying probability distribution, and interpretation of the meaning of inferences made using statistics, related to the philosophy of probability and the philosophy of science. Discussion of the selection of the goals and the meaning of optimization, in foundations of statistics, are the subject of the philosophy of statistics. Selection of distribution models, and of the means of selection, is the subject of the philosophy of statistics, whereas the mathematics of optimizat ...

Multiple Comparisons

In statistics, the multiple comparisons, multiplicity or multiple testing problem occurs when one considers a set of statistical inferences simultaneously or infers a subset of parameters selected based on the observed values. The more inferences are made, the more likely erroneous inferences become. Several statistical techniques have been developed to address that problem, typically by requiring a stricter significance threshold for individual comparisons, so as to compensate for the number of inferences being made. History: The problem of multiple comparisons received increased attention in the 1950s with the work of statisticians such as Tukey and Scheffé. Over the ensuing decades, many procedures were developed to address the problem. In 1996, the first international conference on multiple comparison procedures took place in Israel. Definition: Multiple comparisons arise when a statistical analysis involves multiple simultaneous statistical tests, each of which has a poten ...
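The claim that erroneous inferences become more likely as more inferences are made can be checked numerically. The sketch below assumes independent tests that all share one significance level, and uses the Bonferroni correction as one example of a "stricter significance threshold"; the excerpt does not name a specific procedure, and all function names are hypothetical.

```python
def familywise_error_rate(alpha: float, m: int) -> float:
    """Probability of at least one false positive among m independent
    tests of true null hypotheses, each run at level alpha."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-test threshold that keeps the familywise error rate at or
    below alpha (Bonferroni correction)."""
    return alpha / m


def bonferroni_reject(p_values, alpha: float = 0.05):
    """Return, for each p-value, whether it falls at or below the
    Bonferroni-corrected threshold."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return [p <= threshold for p in p_values]


# With 20 independent tests at the 5% level, a false positive somewhere
# is more likely than not.
print(familywise_error_rate(0.05, 20))
print(bonferroni_reject([0.001, 0.01, 0.04]))
```

Under these assumptions, 20 tests at the 5% level give a familywise error rate of roughly 0.64, which is why the per-test threshold is tightened.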

Margin of Error

The margin of error is a statistic expressing the amount of random sampling error in the results of a survey. The larger the margin of error, the less confidence one should have that a poll result would reflect the result of a census of the entire population. The margin of error will be positive whenever a population is incompletely sampled and the outcome measure has positive variance, which is to say, the measure ''varies''. The term ''margin of error'' is often used in non-survey contexts to indicate observational error in reporting measured quantities. Concept: Consider a simple ''yes/no'' poll $P$ as a sample of $n$ respondents drawn from a population $N$ (with $n \ll N$), reporting the percentage $p$ of ''yes'' responses. We would like to know how close $p$ is to the true result of a survey of the entire population $N$, without having to conduct one. If, hypothetically, we were to conduct poll $P$ over subsequent samples of $n$ respondents (newly drawn from $N$), we would expect those su ...
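For the yes/no poll described above, a common way to put a number on the margin of error is the normal approximation to the sampling distribution of a proportion. The sketch below assumes simple random sampling, a sample much smaller than the population, and a 95% confidence level (z = 1.96); the excerpt is cut off before it gives a formula, so the function name `margin_of_error` and its defaults are hypothetical.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a sample proportion.

    p: observed proportion of 'yes' responses, between 0 and 1.
    n: number of respondents.
    z: standard normal quantile; 1.96 corresponds to roughly 95% confidence.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n <= 0:
        raise ValueError("n must be positive")
    # Standard error of a proportion under the normal approximation.
    standard_error = math.sqrt(p * (1.0 - p) / n)
    return z * standard_error


# A poll of 1000 respondents split evenly between yes and no.
print(margin_of_error(0.5, 1000))
```

For 1000 respondents and p = 0.5 this comes to about 3.1 percentage points, and the margin shrinks in proportion to the square root of the sample size.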

Induction (philosophy)

Inductive reasoning is a method of reasoning in which a general principle is derived from a body of observations. It consists of making broad generalizations based on specific observations. Inductive reasoning is distinct from ''deductive'' reasoning. If the premises are correct, the conclusion of a deductive argument is ''certain''; in contrast, the truth of the conclusion of an inductive argument is ''probable'', based upon the evidence given. Types: The types of inductive reasoning include generalization, prediction, statistical syllogism, argument from analogy, and causal inference. Inductive generalization: A generalization (more accurately, an ''inductive generalization'') proceeds from a premise about a sample to a conclusion about the population. The observation obtained from this sample is projected onto the broader population: "The proportion Q of the sample has attribute A. Therefore, the proportion Q of the population has attribute A." For example, say ther ...

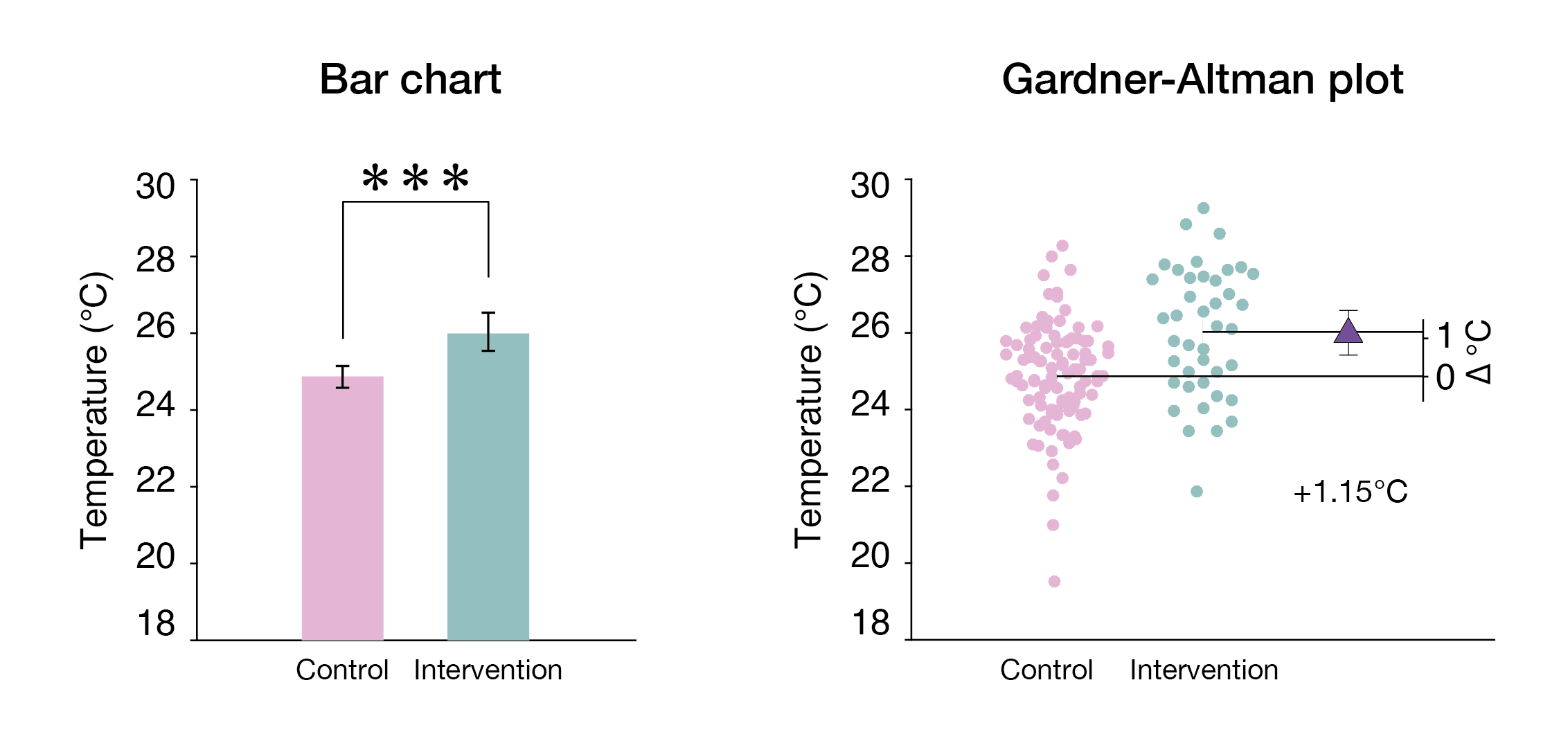

Estimation Statistics

Estimation statistics, or simply estimation, is a data analysis framework that uses a combination of effect sizes, confidence intervals, precision planning, and meta-analysis to plan experiments, analyze data and interpret results. It complements hypothesis testing approaches such as null hypothesis significance testing (NHST) by going beyond the question of whether an effect is present and providing information about how large the effect is. Estimation statistics is sometimes referred to as ''the new statistics''. The primary aim of estimation methods is to report an effect size (a point estimate) along with its confidence interval, the latter of which is related to the precision of the estimate. The confidence interval summarizes a range of likely values of the underlying population effect. Proponents of estimation see reporting a ''P'' value as an unhelpful distraction from the important business of reporting an effect size with its confidence intervals, and believe that estimat ...

Algorithmic Inference

Algorithmic inference gathers new developments in the statistical inference methods made feasible by the powerful computing devices widely available to any data analyst. Cornerstones in this field are computational learning theory, granular computing, bioinformatics, and, long ago, structural probability. The main focus is on the algorithms which compute statistics rooting the study of a random phenomenon, along with the amount of data they must feed on to produce reliable results. This shifts the interest of mathematicians from the study of the distribution laws to the functional properties of the statistics, and the interest of computer scientists from the algorithms for processing data to the information they process. The Fisher parametric inference problem: Concerning the identification of the parameters of a distribution law, the mature reader may recall lengthy disputes in the mid 20th century about the interpretation of their variability in terms of fiducial distributio ...

Bayes Action

In estimation theory and decision theory, a Bayes estimator or a Bayes action is an estimator or decision rule that minimizes the posterior expected value of a loss function (i.e., the posterior expected loss). Equivalently, it maximizes the posterior expectation of a utility function. An alternative way of formulating an estimator within Bayesian statistics is maximum a posteriori estimation. Definition: Suppose an unknown parameter $\theta$ is known to have a prior distribution $\pi$. Let $\widehat{\theta} = \widehat{\theta}(x)$ be an estimator of $\theta$ (based on some measurements $x$), and let $L(\theta, \widehat{\theta})$ be a loss function, such as squared error. The Bayes risk of $\widehat{\theta}$ is defined as $E_\pi(L(\theta, \widehat{\theta}))$, where the expectation is taken over the probability distribution of $\theta$: this defines the risk function as a function of $\widehat{\theta}$. An estimator $\widehat{\theta}$ is said to be a ''Bayes estimator'' if it minimizes the Bayes risk among all estimators. Equivalently, the estimator ...
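The idea of choosing the action that minimizes posterior expected loss can be illustrated with a small discrete example. The sketch below assumes a hypothetical three-point posterior over the parameter and squared-error loss, and searches a grid of candidate actions; under squared error the minimizer is known to be the posterior mean, which the example checks numerically. All names and numbers are illustrative and not taken from the excerpt.

```python
def posterior_expected_loss(action, thetas, weights, loss):
    """Expected loss of an action under a discrete posterior distribution."""
    return sum(w * loss(theta, action) for theta, w in zip(thetas, weights))


def bayes_action(candidates, thetas, weights, loss):
    """Return the candidate action with the smallest posterior expected loss."""
    return min(
        candidates,
        key=lambda a: posterior_expected_loss(a, thetas, weights, loss),
    )


def squared_error(theta, action):
    return (theta - action) ** 2


# Hypothetical posterior: parameter values and their posterior probabilities.
thetas = [0.0, 1.0, 2.0]
weights = [0.2, 0.5, 0.3]

# Candidate actions on a grid from 0.00 to 2.00 in steps of 0.01.
candidates = [i / 100 for i in range(201)]

best = bayes_action(candidates, thetas, weights, squared_error)
posterior_mean = sum(theta * w for theta, w in zip(thetas, weights))
print(best, posterior_mean)
```

Swapping `squared_error` for another loss function changes the minimizer; with absolute-error loss, for instance, the search would settle on a posterior median instead.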