
Intensity Of Counting Processes

The intensity $\lambda$ of a counting process is a measure of the rate of change of its predictable part. If a stochastic process $\{N(t), t \ge 0\}$ is a counting process, then it is a submartingale, and in particular its Doob-Meyer decomposition is

$$N(t) = M(t) + \Lambda(t),$$

where $M(t)$ is a martingale and $\Lambda(t)$ is a predictable increasing process. $\Lambda(t)$ is called the cumulative intensity of $N(t)$, and it is related to $\lambda$ by

$$\Lambda(t) = \int_0^t \lambda(s)\,ds.$$

Definition

Given a probability space $(\Omega, \mathcal{F}, \mathbb{P})$ and a counting process $\{N(t), t \ge 0\}$ adapted to the filtration $\{\mathcal{F}_t, t \ge 0\}$, the intensity of $N$ is the process $\{\lambda(t), t \ge 0\}$ defined by the limit

$$\lambda(t) = \lim_{h \downarrow 0} \frac{1}{h}\, \mathbb{E}\left[N(t+h) - N(t) \mid \mathcal{F}_t\right].$$

The right-continuity of counting processes allows this limit to be taken from the right.

Estimation

In statistical learning, the variation between $\lambda$ and its estimator ...
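The decomposition and the limit definition can be checked numerically on the simplest example. The sketch below assumes a homogeneous Poisson process with constant rate $r$ (an illustrative choice, not one made in the text), for which the intensity is $\lambda(t) = r$, the cumulative intensity is $\Lambda(t) = rt$, and the martingale part $M(t) = N(t) - rt$ should have mean zero. All function names are hypothetical.

```python
import random


def poisson_arrivals(rate, horizon, rng):
    """Arrival times of a homogeneous Poisson process on (0, horizon].

    Inter-arrival gaps are i.i.d. exponential with mean 1 / rate.
    """
    times = []
    t = rng.expovariate(rate)
    while t <= horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times


def count(times, t):
    """N(t): number of arrivals in (0, t]."""
    return sum(1 for s in times if s <= t)


def estimate_intensity(rate, t, h, n_paths, seed=0):
    """Monte Carlo estimate of (1/h) E[N(t+h) - N(t)] for a fixed small h.

    Poisson increments are independent of the past, so conditioning on
    F_t does not change the expectation; the limit h -> 0 is replaced by
    a finite h (exact for this process, an approximation in general).
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(n_paths):
        times = poisson_arrivals(rate, t + h, rng)
        total += count(times, t + h) - count(times, t)
    return total / (n_paths * h)


def mean_compensated(rate, t, n_paths, seed=1):
    """Monte Carlo mean of M(t) = N(t) - Lambda(t), with Lambda(t) = rate * t."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        total += count(poisson_arrivals(rate, t, rng), t) - rate * t
    return total / n_paths


print(estimate_intensity(rate=2.0, t=1.0, h=0.1, n_paths=50_000))  # close to 2.0
print(mean_compensated(rate=2.0, t=5.0, n_paths=20_000))  # close to 0.0
```

For a general counting process the increment does depend on $\mathcal{F}_t$, so a faithful estimate would have to condition on the observed history; the Poisson case sidesteps this by construction.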

Counting Process

A counting process is a stochastic process $\{N(t), t \ge 0\}$ with values that are non-negative, integer, and non-decreasing:

1. $N(t) \ge 0$.
2. $N(t)$ is an integer.
3. If $s \le t$, then $N(s) \le N(t)$.

If $s$ ...
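The three properties can be checked mechanically on a sampled path. In this sketch a path is built from a list of event times (a hypothetical input format), with $N(t)$ counting the events at or before $t$; the helper names are illustrative only.

```python
def path_from_events(event_times, grid):
    """Sample N(t) = number of events at or before t, for each t in grid."""
    return [sum(1 for e in event_times if e <= t) for t in grid]


def is_counting_path(values):
    """Check properties 1-3 on values N(t_0), N(t_1), ... with t_0 < t_1 < ..."""
    for i, v in enumerate(values):
        # Properties 1 and 2: a non-negative integer (bool excluded explicitly).
        if isinstance(v, bool) or not isinstance(v, int) or v < 0:
            return False
        # Property 3: non-decreasing in t.
        if i > 0 and v < values[i - 1]:
            return False
    return True


path = path_from_events([0.5, 1.2, 1.2, 3.0], grid=[0, 1, 2, 3])
print(path)                         # [0, 1, 3, 4]
print(is_counting_path(path))       # True
print(is_counting_path([0, 2, 1]))  # False: the path decreases
```

Two events at the same time (1.2 above) make the path jump by 2, which the three properties still allow.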

Stochastic Process

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables in a probability space, where the index of the family often has the interpretation of time. Stochastic processes are widely used as mathematical models of systems and phenomena that appear to vary in a random manner. Examples include the growth of a bacterial population, an electrical current fluctuating due to thermal noise, or the movement of a gas molecule. Stochastic processes have applications in many disciplines such as biology, chemistry, ecology, neuroscience, physics, image processing, signal processing, stochastic control, control theory, information theory, computer science ...
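As a concrete instance of a family of random variables indexed by time, the sketch below draws one sample path of a simple symmetric random walk, an example chosen here for illustration rather than taken from the text: $X_0 = 0$, and each step adds $+1$ or $-1$ with equal probability. The function name is hypothetical.

```python
import random


def random_walk(n_steps, seed=None):
    """One sample path X_0, X_1, ..., X_n of a simple symmetric random walk.

    The list index n plays the role of time; rerunning with a different
    seed draws a different realisation of the same process.
    """
    rng = random.Random(seed)
    path = [0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.choice((-1, 1)))
    return path


print(random_walk(10, seed=7))
```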

Submartingale

In probability theory, a martingale is a stochastic process in which the expected value of the next observation, given all prior observations, is equal to the most recent value. In other words, the conditional expectation of the next value, given the past, is equal to the present value: $\mathbb{E}[X_{n+1} \mid X_1, \dots, X_n] = X_n$. A submartingale satisfies the same relation with $\ge$ in place of $=$. Martingales are used to model fair games, where future expected winnings are equal to the current amount regardless of past outcomes.

History

Originally, "martingale" referred to a class of betting strategies that was popular in 18th-century France. The simplest of these strategies was designed for a game in which the gambler wins their stake if a coin comes up heads and loses it if the coin comes up tails. The strategy had the gambler double their bet after every loss so that the first win would recover all previous losses plus win a profit equal to the original stake. As the gambler's wealth and available time jointly approach infinity, their probability of eventually flipping heads approaches ...
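The doubling strategy can be simulated directly. This sketch assumes a fair coin and adds a hypothetical cap `max_rounds` on the number of bets, standing in for finite wealth and time. Every run that ends in a win nets exactly the original stake, yet the exact expected profit over all outcomes is zero, which is the fair-game (martingale) property at work. Function names are illustrative.

```python
import random
from fractions import Fraction


def play_doubling(stake, max_rounds, rng):
    """Net profit of one run: double the bet after each loss, stop at the first win."""
    bet, profit = stake, 0
    for _ in range(max_rounds):
        if rng.random() < 0.5:   # heads: win the current bet and stop
            return profit + bet
        profit -= bet            # tails: lose the bet, then double it
        bet *= 2
    return profit                # every toss lost: -stake * (2**max_rounds - 1)


def exact_expected_profit(stake, max_rounds):
    """Exact expectation: win stake with prob 1 - 2**-n, else lose stake*(2**n - 1)."""
    p_all_lose = Fraction(1, 2 ** max_rounds)
    return (1 - p_all_lose) * stake - p_all_lose * stake * (2 ** max_rounds - 1)


print(play_doubling(stake=1, max_rounds=10, rng=random.Random(0)))
print(exact_expected_profit(stake=1, max_rounds=10))  # 0
```

The rare total loss is large enough to cancel the frequent small wins exactly, so no finite cap turns the strategy into a winning one.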

Statistical Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within machine learning, advances in the subfield of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysis ...

Estimator

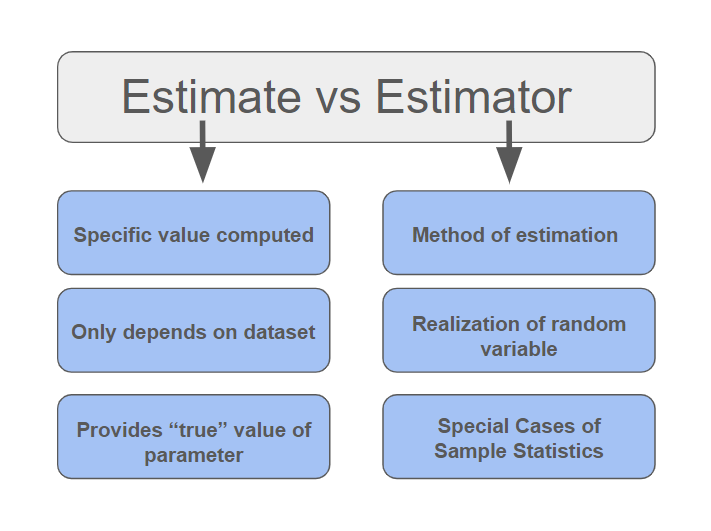

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean. There are point and interval estimators. Point estimators yield single-valued results, in contrast to interval estimators, whose result is a range of plausible values. "Single value" does not necessarily mean "single number", but includes vector-valued or function-valued estimators. Estimation theory is concerned with the properties of estimators; that is, with defining properties that can be used to compare different estimators (different rules for creating estimates) for the same quantity, based on the same data. Such properties can be used to determine the best rules to use under given circumstances. However ...
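The three roles (estimator, estimand, estimate) can be kept apart in code. This sketch uses the sample mean from the text as a point estimator, adds a normal-approximation 95% interval (mean ± 1.96 standard errors, an illustrative choice) as an interval estimator, and draws synthetic Gaussian data with a known population mean of 3.0 purely so the estimate can be compared against the estimand. Function names are hypothetical.

```python
import math
import random
import statistics


def sample_mean(data):
    """Point estimator: the rule mapping observed data to a single value."""
    return sum(data) / len(data)


def mean_interval(data, z=1.96):
    """Interval estimator: approximate 95% interval for the population mean.

    Uses the normal approximation mean +/- z * s / sqrt(n); reasonable for
    large samples, only approximate otherwise.
    """
    m = sample_mean(data)
    half_width = z * statistics.stdev(data) / math.sqrt(len(data))
    return m - half_width, m + half_width


rng = random.Random(42)
estimand = 3.0  # population mean, known here only because the data are synthetic
data = [rng.gauss(estimand, 1.0) for _ in range(10_000)]
estimate = sample_mean(data)
low, high = mean_interval(data)
print(estimate, (low, high))
```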

Independent And Identically Distributed Random Variables

In probability theory and statistics, a collection of random variables is independent and identically distributed (i.i.d., iid, or IID) if each random variable has the same probability distribution as the others and all are mutually independent. IID was first defined in statistics and finds application in many fields, such as data mining and signal processing.

Introduction

Statistics commonly deals with random samples. A random sample can be thought of as a set of objects that are chosen randomly. More formally, it is "a sequence of independent, identically distributed (IID) random data points." In other words, the terms random sample and IID are synonymous. In statistics, "random sample" is the typical terminology, but in probability, it is more common to say "IID."

* Identically distributed means that there are no overall trends: the distribution does not fluctuate and all items in the sample are taken from the same ...
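Both conditions can be checked exactly on a small discrete example. The sketch below assumes two fair six-sided dice (an illustration not in the text), represents a joint distribution as a dictionary of exact probabilities, and tests that the two marginals are identical and that the joint probability factorises into the product of the marginals for every pair of outcomes. Function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def marginal(joint, axis):
    """Marginal distribution of coordinate `axis` of a joint pmf {(a, b): p}."""
    m = {}
    for pair, p in joint.items():
        m[pair[axis]] = m.get(pair[axis], 0) + p
    return m


def is_iid_pair(joint):
    """True if the two coordinates are identically distributed and independent."""
    m0, m1 = marginal(joint, 0), marginal(joint, 1)
    identical = m0 == m1
    # Independence: P(X = a, Y = b) = P(X = a) P(Y = b) for every pair,
    # including pairs missing from `joint` (probability 0).
    independent = all(joint.get((a, b), 0) == m0[a] * m1[b] for a in m0 for b in m1)
    return identical and independent


faces = range(1, 7)
two_dice = {(a, b): Fraction(1, 36) for a, b in product(faces, faces)}
same_die_twice = {(a, a): Fraction(1, 6) for a in faces}  # second value copies the first

print(is_iid_pair(two_dice))        # True
print(is_iid_pair(same_die_twice))  # False: identical but not independent
```

The second example shows why both conditions are needed: the two values share one distribution, but knowing the first fixes the second.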