|

Heterogenous Unified Memory Access

Uniform memory access (UMA) is a shared memory architecture used in parallel computers. All the processors in the UMA model share the physical memory uniformly. In an UMA architecture, access time to a memory location is independent of which processor makes the request or which memory chip contains the transferred data. Uniform memory access computer architectures are often contrasted with non-uniform memory access (NUMA) architectures. In the NUMA architecture, each processor may use a private cache. Peripherals are also shared in some fashion. The UMA model is suitable for general purpose and time sharing applications by multiple users. It can be used to speed up the execution of a single large program in time-critical applications. Types of architectures There are three types of UMA architectures: * UMA using bus-based symmetric multiprocessing (SMP) architectures; * UMA using crossbar switches; * UMA using multistage interconnection networks. hUMA In April 2013, the term hUM ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unified Memory Architecture

{{disambiguation ...

Unified may refer to: * The Unified, a wine symposium held in Sacramento, California, USA * ''Unified'', the official student newspaper of Canterbury Christ Church University * UNFD, an Australian record label * ''Unified'' (Sweet & Lynch album), 2017 * ''Unified'' (Super8 & Tab album), 2014 Unify may refer to: * ''Unify'', an album by Electric Universe * Unify Corporation, former name of Daegis Inc. * Unify Gathering, an Australian music festival * Unify GmbH & Co. KG, formerly Siemens Enterprise Communications See also * * * * Unification (other) * United (other) * Unity (other) Unity may refer to: Buildings * Unity Building, Oregon, Illinois, US; a historic building * Unity Building (Chicago), Illinois, US; a skyscraper * Unity Buildings, Liverpool, UK; two buildings in England * Unity Chapel, Wyoming, Wisconsin, US; ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Shared Memory Architecture

In computer science, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an efficient means of passing data between programs. Depending on context, programs may run on a single processor or on multiple separate processors. Using memory for communication inside a single program, e.g. among its multiple threads, is also referred to as shared memory. In hardware In computer hardware, ''shared memory'' refers to a (typically large) block of random access memory (RAM) that can be accessed by several different central processing units (CPUs) in a multiprocessor computer system. Shared memory systems may use: * uniform memory access (UMA): all the processors share the physical memory uniformly; * non-uniform memory access (NUMA): memory access time depends on the memory location relative to a processor; * cache-only memory architecture (COMA): the local memor ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computer

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Non-uniform Memory Access

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies), and Digital (later Compaq, then HP, now HPE). Techniques developed by these companies later featur ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Time Sharing

In computing, time-sharing is the sharing of a computing resource among many users at the same time by means of multiprogramming and multi-tasking.DEC Timesharing (1965), by Peter Clark, The DEC Professional, Volume 1, Number 1 Its emergence as the prominent model of computing in the 1970s represented a major technological shift in the history of computing. By allowing many users to interact concurrently with a single computer, time-sharing dramatically lowered the cost of providing computing capability, made it possible for individuals and organizations to use a computer without owning one, and promoted the interactive use of computers and the development of new interactive applications. History Batch processing The earliest computers were extremely expensive devices, and very slow in comparison to later models. Machines were typically dedicated to a particular set of tasks and operated by control panels, the operator manually entering small programs via switches in order ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Real-time Computing

Real-time computing (RTC) is the computer science term for hardware and software systems subject to a "real-time constraint", for example from event to system response. Real-time programs must guarantee response within specified time constraints, often referred to as "deadlines". Ben-Ari, Mordechai; "Principles of Concurrent and Distributed Programming", ch. 16, Prentice Hall, 1990, , page 164 Real-time responses are often understood to be in the order of milliseconds, and sometimes microseconds. A system not specified as operating in real time cannot usually ''guarantee'' a response within any timeframe, although ''typical'' or ''expected'' response times may be given. Real-time processing ''fails'' if not completed within a specified deadline relative to an event; deadlines must always be met, regardless of system load. A real-time system has been described as one which "controls an environment by receiving data, processing them, and returning the results sufficiently qui ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Symmetric Multiprocessing

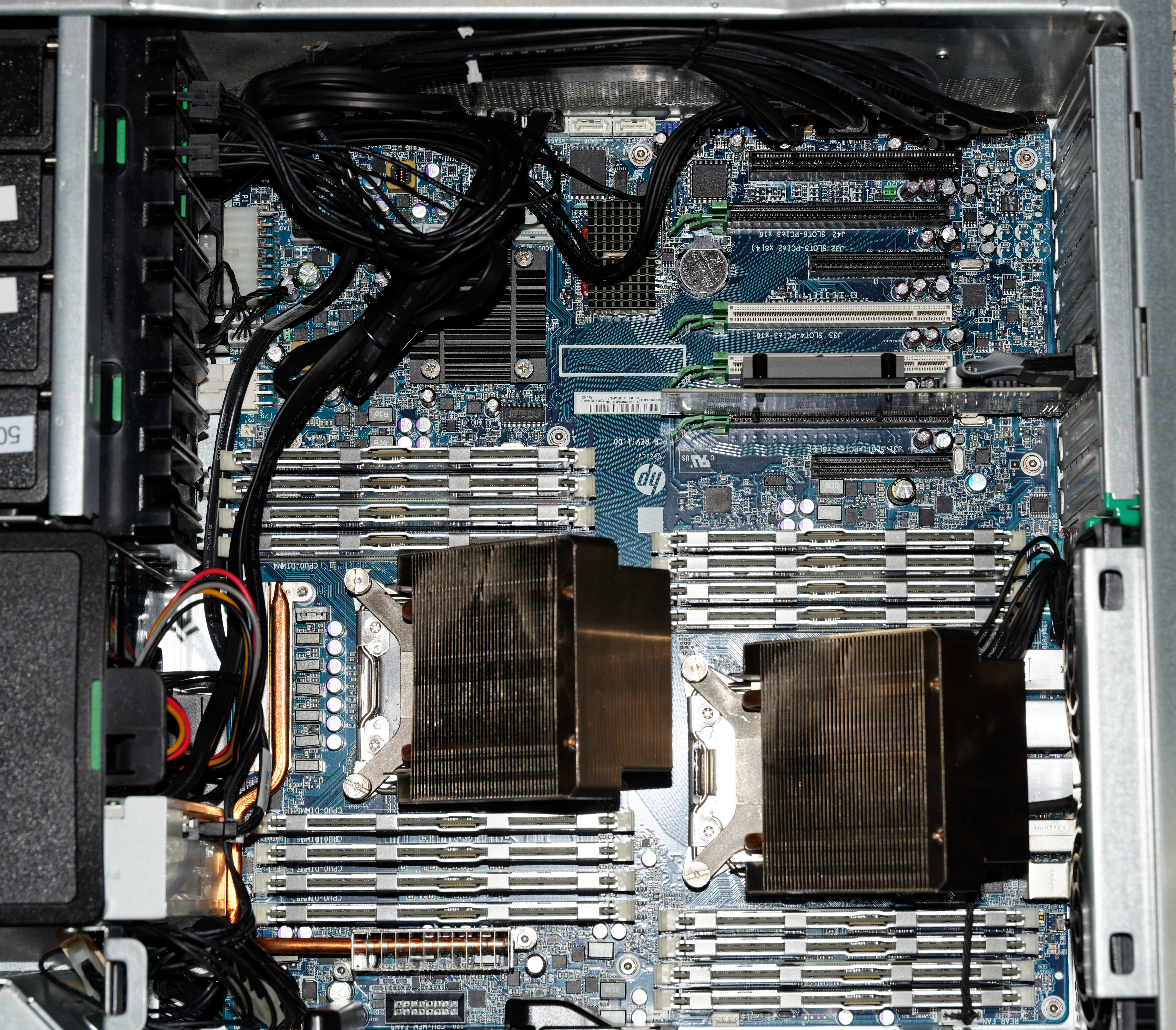

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors. Professor John D. Kubiatowicz considers traditionally SMP systems to contain processors without caches. Culler and Pal-Singh in their 1998 book "Parallel Computer Architecture: A Hardware/Software Approach" mention: "The term SMP is widely used but causes a bit of confusion. ..The more precise description of what is intended by SMP is a shared memory multiprocessor where the cost of accessing a memory locatio ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Crossbar Switch

In electronics and telecommunications, a crossbar switch (cross-point switch, matrix switch) is a collection of switches arranged in a matrix configuration. A crossbar switch has multiple input and output lines that form a crossed pattern of interconnecting lines between which a connection may be established by closing a switch located at each intersection, the elements of the matrix. Originally, a crossbar switch consisted literally of crossing metal bars that provided the input and output paths. Later implementations achieved the same switching topology in solid-state electronics. The crossbar switch is one of the principal telephone exchange architectures, together with a rotary switch, memory switch, and a crossover switch. General properties A crossbar switch is an assembly of individual switches between a set of inputs and a set of outputs. The switches are arranged in a matrix. If the crossbar switch has M inputs and N outputs, then a crossbar has a matrix with ''M'' × ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multistage Interconnection Networks

Multistage interconnection networks (MINs) are a class of high-speed computer networks usually composed of processing elements (PEs) on one end of the network and memory elements (MEs) on the other end, connected by switching elements (SEs). The switching elements themselves are usually connected to each other in stages, hence the name. MINs are typically used in high-performance or parallel computing as a low- latency interconnection (as opposed to traditional packet switching In telecommunications, packet switching is a method of grouping data into '' packets'' that are transmitted over a digital network. Packets are made of a header and a payload. Data in the header is used by networking hardware to direct the p ... networks), though they could be implemented on top of a packet switching network. Though the network is typically used for routing purposes, it could also be used as a co-processor to the actual processors for such uses as sorting; cyclic shifting, as in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache Coherence

In computer architecture, cache coherence is the uniformity of shared resource data that ends up stored in multiple local caches. When clients in a system maintain caches of a common memory resource, problems may arise with incoherent data, which is particularly the case with CPUs in a multiprocessing system. In the illustration on the right, consider both the clients have a cached copy of a particular memory block from a previous read. Suppose the client on the bottom updates/changes that memory block, the client on the top could be left with an invalid cache of memory without any notification of the change. Cache coherence is intended to manage such conflicts by maintaining a coherent view of the data values in multiple caches. Overview In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When on ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Non-uniform Memory Access

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies), and Digital (later Compaq, then HP, now HPE). Techniques developed by these companies later featur ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cache-only Memory Architecture

Cache only memory architecture (COMA) is a computer memory organization for use in multiprocessors in which the local memories (typically DRAM) at each node are used as cache. This is in contrast to using the local memories as actual main memory, as in NUMA organizations. In NUMA, each address in the global address space is typically assigned a fixed home node. When processors access some data, a copy is made in their local cache, but space remains allocated in the home node. Instead, with COMA, there is no home. An access from a remote node may cause that data to migrate. Compared to NUMA, this reduces the number of redundant copies and may allow more efficient use of the memory resources. On the other hand, it raises problems of how to find a particular data (there is no longer a home node) and what to do if a local memory fills up (migrating some data into the local memory then needs to evict some other data, which doesn't have a home to go to). Hardware memory coherence ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

.jpg)