HPCC Systems

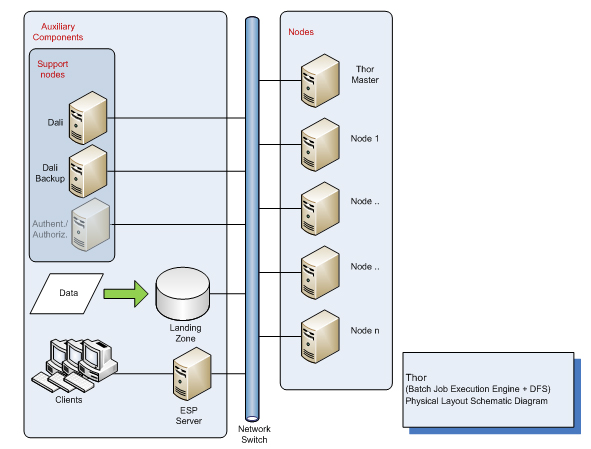

HPCC (High-Performance Computing Cluster), also known as DAS (Data Analytics Supercomputer), is an open source, data-intensive computing system platform developed by LexisNexis Risk Solutions. The HPCC platform incorporates a software architecture implemented on commodity computing clusters to provide high-performance, data-parallel processing for applications utilizing big data. The HPCC platform includes system configurations to support both parallel batch data processing (Thor) and high-performance online query applications using indexed data files (Roxie). The HPCC platform also includes a data-centric declarative programming language for parallel data processing called ECL. The public release of HPCC was announced in 2011, after ten years of in-house development (according to LexisNexis). It is an alternative to Hadoop and other big data platforms.

System architecture: The HPCC system architecture includes two distinct cluster processing environments, Thor and Roxie, ...

LexisNexis Risk Solutions

LexisNexis Risk Solutions is a global data and analytics company that provides data and technology services, analytics, predictive insights and fraud prevention for a wide range of industries. It is headquartered in Alpharetta, Georgia (part of the Atlanta metropolitan area) and has offices throughout the U.S. and in Australia, Brazil, China, France, Hong Kong SAR, India, Ireland, Israel, Philippines and the U.K. The company's customers include businesses within the insurance, financial services, healthcare and corporate sectors as well as the local, state and federal government, law enforcement and public safety. LexisNexis Risk Solutions operates within the Risk & Business Analytics market segment of RELX, a multinational information and analytics company based in London.

Overview, Market Segments: LexisNexis Risk Solutions operates in four market segments:

* Insurance Services
* Business Services
* Health Care Services
* Government Services

Technology: LexisNexis Risk Solut ...

Elasticsearch

Elasticsearch is a search engine based on the Lucene library. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. Elasticsearch is developed in Java and is dual-licensed under the source-available Server Side Public License and the Elastic License, while other parts fall under the proprietary (source-available) Elastic License. Official clients are available in Java, .NET (C#), PHP, Python, Ruby and many other languages. According to the DB-Engines ranking, Elasticsearch is the most popular enterprise search engine.

History: Shay Banon created the precursor to Elasticsearch, called Compass, in 2004. While thinking about the third version of Compass he realized that it would be necessary to rewrite big parts of Compass to "create a scalable search solution". So he created "a solution built from the ground up to be distributed" and used a common interface, JSON over HTTP, suitable f ...
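As a rough illustration of the "schema-free JSON documents" and "JSON over HTTP" interface described above, the sketch below only builds the pieces of two requests and does not contact a server. The host `localhost:9200`, the index name `articles`, and the document fields are hypothetical; the `_doc` and `_search` paths and the `match` query reflect Elasticsearch's REST conventions, but treat the details as assumptions to check against the official documentation.

```python
import json

BASE_URL = "http://localhost:9200"  # assumed local node (hypothetical)
INDEX = "articles"                  # hypothetical index name


def index_request(doc_id, document):
    """Return (method, url, body) for storing one JSON document."""
    url = f"{BASE_URL}/{INDEX}/_doc/{doc_id}"
    return "PUT", url, json.dumps(document)


def search_request(field, text):
    """Return (method, url, body) for a full-text match query."""
    url = f"{BASE_URL}/{INDEX}/_search"
    body = {"query": {"match": {field: text}}}
    return "POST", url, json.dumps(body)


if __name__ == "__main__":
    # No schema is declared up front: the document is just a JSON object.
    doc = {"title": "Distributed search", "tags": ["search", "json"]}
    for method, url, body in (index_request(1, doc),
                              search_request("title", "search")):
        print(method, url)
        print(body)
```

Any HTTP client could send these requests; keeping the example to plain strings avoids depending on a particular client library.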

Declarative Programming Languages

Declarative may refer to:

* Declarative learning, acquiring information that one can speak about
* Declarative memory, one of two types of long-term human memory
* Declarative programming, a computer programming paradigm
* Declarative sentence, a type of sentence that makes a statement
* Declarative mood, a grammatical verb form used in declarative sentences

See also:

* Declaration (other)

Declaration may refer to:

Arts, entertainment, and media, Literature:

* Declaration (book), a self-published electronic pamphlet by Michael Hardt and Antonio Negri
* The Declaration (novel), a 2008 children's novel by Gemma Malley

Music ...

Distributed Computing

A distributed system is a system whose components are located on different networked computers, which communicate and coordinate their actions by passing messages to one another from any system. Distributed computing is a field of computer science that studies distributed systems. The components of a distributed system interact with one another in order to achieve a common goal. Three significant challenges of distributed systems are: maintaining concurrency of components, overcoming the lack of a global clock, and managing the independent failure of components. When a component of one system fails, the entire system does not fail. Examples of distributed systems vary from SOA-based systems to massively multiplayer online games to peer-to-peer applications. A computer program that runs within a distributed system is called a distributed program, and distributed programming is the process of writing such programs. There are many different types of implementations for ...
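One well-known way to order events when components have no global clock is a Lamport logical clock. It is not named in the text above and is used here only to illustrate the challenge. The class and method names are hypothetical, and message passing is simulated by handing a timestamp from one object to another.

```python
class Process:
    """A component that keeps a Lamport logical clock."""

    def __init__(self, name):
        self.name = name
        self.clock = 0

    def local_event(self):
        """Tick the clock for an event that involves no other component."""
        self.clock += 1
        return self.clock

    def send(self):
        """Tick, then return the timestamp to attach to the outgoing message."""
        self.clock += 1
        return self.clock

    def receive(self, timestamp):
        """Move past both the local clock and the message's timestamp."""
        self.clock = max(self.clock, timestamp) + 1
        return self.clock


if __name__ == "__main__":
    a, b = Process("a"), Process("b")
    a.local_event()
    stamp = a.send()      # timestamp travels with the message
    b.receive(stamp)      # b's clock jumps ahead of the send event
    print(a.clock, b.clock)
```

The receive rule guarantees that a message's receipt is always stamped later than its send, which gives a consistent ordering without synchronized wall clocks.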

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. (S.V. Adve et al. (November 2008). "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather tha ...)
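The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched as a simple data-parallel sum. This is a minimal illustration with hypothetical function names. It uses a thread pool to keep the example portable; in CPython, threads do not speed up CPU-bound work, so `ProcessPoolExecutor` from the same module would be the usual substitute when real speedup matters.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split values into at most n_chunks contiguous slices."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """The per-chunk subproblem: independent of every other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, workers=4):
    """Solve each chunk concurrently, then combine the partial results."""
    chunks = chunked(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1, 101))
    print(parallel_sum_of_squares(data))
```

Because each chunk is independent, the combine step is just a sum of partial results; problems with dependencies between chunks need more coordination than this sketch shows.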

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks. (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020.) A subset of machine learning is closely related to computational statistics, which focuses on making pred ...
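Building a model from training data and then using it to predict can be shown with one of the simplest possible cases, a straight line fitted by ordinary least squares. The function names and the training data below are made up for illustration; real applications would typically use a dedicated library and far richer models.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted (slope, intercept) pair to a new input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Made-up training data that follows y = 2x + 1 exactly.
    train_x = [1.0, 2.0, 3.0, 4.0]
    train_y = [3.0, 5.0, 7.0, 9.0]
    model = fit_line(train_x, train_y)
    print(predict(model, 5.0))
```

The rule "multiply by two and add one" is never written into the program; it is recovered from the examples, which is the sense in which the model is learned rather than explicitly programmed.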

Sector/Sphere

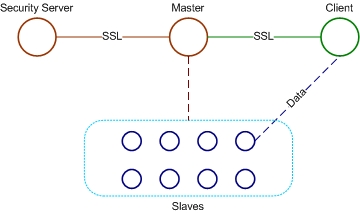

Sector/Sphere is an open source software suite for high-performance distributed data storage and processing. It can be broadly compared to Google's GFS and MapReduce technology. Sector is a distributed file system targeting data storage over a large number of commodity computers. Sphere is the programming architecture framework that supports in-storage parallel data processing for data stored in Sector. Sector/Sphere operates in a wide area network (WAN) setting. The system was created by Yunhong Gu (the author of UDP-based Data Transfer Protocol) in 2006 and was then maintained by a group of other developers.

Architecture: Sector/Sphere consists of four components. The security server maintains the system security policies such as user accounts and the IP access control list. One or more master servers control operations of the overall system in addition to responding to various user requests. The slave nodes store the data files and process them upon request. The clients ...

Aster Data Systems

Aster Data Systems was a data management and analysis software company headquartered in San Carlos, California. It was founded in 2005 and acquired by Teradata in 2011.

History: Aster Data was co-founded in 2005 by Stanford University graduate students George Candea, Mayank Bawa and Tasso Argyros. It received funding from First Round Capital, Sequoia Capital, Institutional Venture Partners, Cambrian Ventures, Jafco Ventures as well as angel investors including Rajeev Motwani, Ron Conway and David Cheriton. It received its first round of funding of $5 million in 2005, then a second round of $17 million in February 2009, and a third round of $30 million in September 2010. Aster was mentioned in 2010 by Intelligent Enterprise's editor. It was ranked seventh among venture-funded companies in 2011 by The Wall Street Journal. Argyros (Chief Technical Officer at the time) was listed as a technology pioneer of information technologies and new media by the World Economic Forum in 201 ...

Apache Spark

Apache Spark is an open-source unified analytics engine for large-scale data processing. Spark provides an interface for programming clusters with implicit data parallelism and fault tolerance. Originally developed at the University of California, Berkeley's AMPLab, the Spark codebase was later donated to the Apache Software Foundation, which has maintained it since.

Overview: Apache Spark has its architectural foundation in the resilient distributed dataset (RDD), a read-only multiset of data items distributed over a cluster of machines, that is maintained in a fault-tolerant way. The DataFrame API was released as an abstraction on top of the RDD, followed by the Dataset API. In Spark 1.x, the RDD was the primary application programming interface (API), but as of Spark 2.x use of the Dataset API is encouraged even though the RDD API is not deprecated. The RDD technology still underlies the Dataset API. Spark and its RDDs were developed in 2012 in response to limitations in ...

Amazon Web Services

Amazon Web Services, Inc. (AWS) is a subsidiary of Amazon that provides on-demand cloud computing platforms and APIs to individuals, companies, and governments, on a metered pay-as-you-go basis. These cloud computing web services provide distributed computing processing capacity and software tools via AWS server farms. One of these services is Amazon Elastic Compute Cloud (EC2), which allows users to have at their disposal a virtual cluster of computers, available all the time, through the Internet. AWS's virtual computers emulate most of the attributes of a real computer, including hardware central processing units (CPUs) and graphics processing units (GPUs) for processing; local/RAM memory; hard-disk/SSD storage; a choice of operating systems; networking; and pre-loaded application software such as web servers, databases, and customer relationship management (CRM). AWS services are delivered to customers via a network of AWS server farms located throughout the world ...

Middleware

Middleware is a type of computer software that provides services to software applications beyond those available from the operating system. It can be described as "software glue". Middleware makes it easier for software developers to implement communication and input/output, so they can focus on the specific purpose of their application. It gained popularity in the 1980s as a solution to the problem of how to link newer applications to older legacy systems, although the term had been in use since 1968.

In distributed applications: The term is most commonly used for software that enables communication and management of data in distributed applications. An IETF workshop in 2000 defined middleware as "those services found above the transport (i.e. over TCP/IP) layer set of services but below the application environment" (i.e. below application-level APIs). In this more specific sense middleware can be described as the dash ("-") in "client-server", or the "-to-" in ...