
Detrended Fluctuation Analysis

In stochastic processes, chaos theory and time series analysis, detrended fluctuation analysis (DFA) is a method for determining the statistical self-affinity of a signal. It is useful for analysing time series that appear to be long-memory processes (diverging correlation time, e.g. power-law decaying autocorrelation function) or 1/f noise. The obtained exponent is similar to the Hurst exponent, except that DFA may also be applied to signals whose underlying statistics (such as mean and variance) or dynamics are non-stationary (changing with time). It is related to measures based upon spectral techniques such as autocorrelation and the Fourier transform. Peng et al. introduced DFA in 1994, in a paper that had been cited over 3,000 times as of 2022; the method extends the (ordinary) fluctuation analysis (FA), which is affected by non-stationarities. Systematic studies of the advantages and limitations of the DFA method were performed by PCh Ivanov et al. in a serie ...
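The summary above does not spell out the procedure, so the following is only a minimal sketch of DFA as it is commonly described: integrate the mean-subtracted signal, split the result into windows, remove a least-squares line from each window, and read the scaling exponent off the slope of log fluctuation against log window size. The function names, the choice of linear (first-order) detrending, and the window sizes are assumptions made for illustration.

```python
import math
import random


def linear_fit(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def dfa_fluctuation(signal, window):
    """RMS residual of the integrated signal after per-window linear detrending."""
    mean = sum(signal) / len(signal)
    # Integrate the mean-subtracted signal to obtain the profile.
    profile = []
    total = 0.0
    for value in signal:
        total += value - mean
        profile.append(total)
    n_windows = len(profile) // window
    t = list(range(window))
    squared_residuals = 0.0
    for w in range(n_windows):
        segment = profile[w * window:(w + 1) * window]
        slope, intercept = linear_fit(t, segment)
        squared_residuals += sum(
            (y - (slope * x + intercept)) ** 2 for x, y in zip(t, segment)
        )
    return math.sqrt(squared_residuals / (n_windows * window))


def dfa_exponent(signal, windows):
    """Slope of log F(n) against log n over the given window sizes."""
    log_n = [math.log(n) for n in windows]
    log_f = [math.log(dfa_fluctuation(signal, n)) for n in windows]
    slope, _ = linear_fit(log_n, log_f)
    return slope


# Assumed test input: uncorrelated Gaussian noise with a fixed seed.
rng = random.Random(0)
white = [rng.gauss(0.0, 1.0) for _ in range(4096)]
alpha = dfa_exponent(white, [16, 32, 64, 128, 256])
```

For uncorrelated noise the estimated exponent should land near 0.5; persistent (long-memory) signals are expected to give larger values.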

Stochastic Processes

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables in a probability space, where the index of the family often has the interpretation of time. Stochastic processes are widely used as mathematical models of systems and phenomena that appear to vary in a random manner. Examples include the growth of a bacterial population, an electrical current fluctuating due to thermal noise, or the movement of a gas molecule. Stochastic processes have applications in many disciplines such as biology, chemistry, ecology, neuroscience, physics, image processing, signal processing, control theory, information theory, computer science, and telecommunications. Furthermore, seemingly random changes in financial markets have motivated the extensive use of stochastic processes in finance. Applications and the study of phenomena have in turn inspired the proposal of new stochastic processes. Examples of su ...

Random Walk

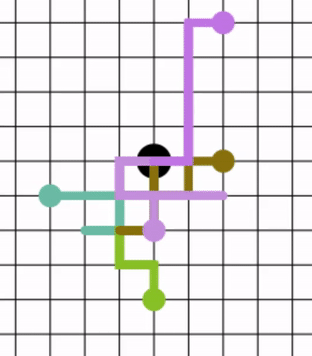

In mathematics, a random walk, sometimes known as a drunkard's walk, is a stochastic process that describes a path that consists of a succession of random steps on some mathematical space. An elementary example of a random walk is the random walk on the integer number line \mathbb Z which starts at 0, and at each step moves +1 or −1 with equal probability. Other examples include the path traced by a molecule as it travels in a liquid or a gas (see Brownian motion), the search path of a foraging animal, or the price of a fluctuating stock and the financial status of a gambler. Random walks have applications to engineering and many scientific fields including ecology, psychology, computer science, physics, chemistry, biology, economics, and sociology. The term "random walk" was first introduced by Karl Pearson in 1905. Realizations of random walks can be obtained by Monte Carlo simulation. Lattice random ...
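The elementary walk on the integers described above can be simulated in a few lines. This is a minimal sketch; the function name, the step count and the seed are arbitrary choices made for illustration.

```python
import random


def simple_random_walk(n_steps, rng):
    """Return the positions of a walk on the integers that starts at 0."""
    position = 0
    path = [position]
    for _ in range(n_steps):
        # Move +1 or -1 with equal probability.
        position += rng.choice((-1, 1))
        path.append(position)
    return path


rng = random.Random(42)  # fixed seed so the realization is reproducible
path = simple_random_walk(1000, rng)
```

Each call with a fresh generator yields one Monte Carlo realization; averaging a statistic over many such realizations estimates its expectation.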

Hausdorff Dimension

In mathematics, Hausdorff dimension is a measure of roughness, or more specifically, fractal dimension, that was introduced in 1918 by mathematician Felix Hausdorff. For instance, the Hausdorff dimension of a single point is zero, of a line segment is 1, of a square is 2, and of a cube is 3. That is, for sets of points that define a smooth shape or a shape that has a small number of corners (the shapes of traditional geometry and science), the Hausdorff dimension is an integer agreeing with the usual sense of dimension, also known as the topological dimension. However, formulas have also been developed that allow calculation of the dimension of other less simple objects, where, solely on the basis of their properties of scaling and self-similarity, one is led to the conclusion that particular objects, including fractals, have non-integer Hausdorff dimensions. Because of the significant technical advances made by Abram Samoilovitch Besicovitch allowing computation of di ...

Fractal Dimension

In mathematics, a fractal dimension is a term invoked in the science of geometry to provide a rational statistical index of complexity detail in a pattern. A fractal pattern changes with the scale at which it is measured. It is also a measure of the space-filling capacity of a pattern and tells how a fractal scales differently, in a fractal (non-integer) dimension. The main idea of "fractured" dimensions has a long history in mathematics, but the term itself was brought to the fore by Benoit Mandelbrot based on his 1967 paper on self-similarity, "How Long Is the Coast of Britain? Statistical Self-Similarity and Fractional Dimension", in which he discussed fractional dimensions. In that paper, Mandelbrot cited previous work by Lewis Fry Richardson describing the counter-intuitive notion that a coastline's measured length changes with the length of the measuring stick used (see Fig. 1). In terms of that ...

Maximum Likelihood Estimation

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when ...
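As a small illustration of maximizing a likelihood, the sketch below assumes a Bernoulli (coin-flip) model, which is not part of the text above, and compares a brute-force grid search over the log-likelihood with the analytic solution of the first-order condition, which for this model is the sample proportion. The data and the grid resolution are made up for the example.

```python
import math


def bernoulli_log_likelihood(p, successes, failures):
    """Log-likelihood of success probability p for the observed counts."""
    return successes * math.log(p) + failures * math.log(1.0 - p)


# Hypothetical data: 7 successes and 3 failures in 10 trials.
successes, failures = 7, 3

# Numerical maximization over a grid of candidate parameters in (0, 1).
grid = [i / 1000 for i in range(1, 1000)]
p_grid = max(grid, key=lambda p: bernoulli_log_likelihood(p, successes, failures))

# Analytic maximizer from setting the derivative of the log-likelihood to zero.
p_analytic = successes / (successes + failures)
```

Both approaches give the same estimate here; the grid search stands in for a general-purpose optimizer when no closed form is available.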

Self-similarity

In mathematics, a self-similar object is exactly or approximately similar to a part of itself (i.e., the whole has the same shape as one or more of the parts). Many objects in the real world, such as coastlines, are statistically self-similar: parts of them show the same statistical properties at many scales. Self-similarity is a typical property of fractals. Scale invariance is an exact form of self-similarity where at any magnification there is a smaller piece of the object that is similar to the whole. For instance, a side of the Koch snowflake is both symmetrical and scale-invariant; it can be continually magnified 3x without changing shape. The non-trivial similarity evident in fractals is distinguished by their fine structure, or detail on arbitrarily small scales. As a counterexample, whereas any portion of a straight line may resemble the whole, further detail is not revealed. Peitgen et al. explain the concept as such: Since mathematically, a fractal may sho ...

Fractional Gaussian Noise

In probability theory, fractional Brownian motion (fBm), also called a fractal Brownian motion, is a generalization of Brownian motion. Unlike classical Brownian motion, the increments of fBm need not be independent. fBm is a continuous-time Gaussian process B_H(t) on [0, T] that starts at zero, has expectation zero for all t in [0, T], and has the following covariance function:

E[B_H(t) B_H(s)] = \tfrac{1}{2} (|t|^{2H} + |s|^{2H} - |t-s|^{2H}),

where H is a real number in (0, 1), called the Hurst index or Hurst parameter associated with the fractional Brownian motion. The Hurst exponent describes the raggedness of the resultant motion, with a higher value leading to a smoother motion. The value of H determines what kind of process the fBm is:

* if H = 1/2 then the process is in fact a Brownian motion or Wiener process;
* if H > 1/2 then the increments of the process are positively correlated;
* if H < 1/2 then the increments of t ...
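The role of H can be checked directly from the covariance function. The sketch below assumes the standard fBm covariance, half of (|t|^{2H} + |s|^{2H} - |t-s|^{2H}), and evaluates the covariance of two successive unit increments; the helper names are illustrative.

```python
def fbm_covariance(t, s, hurst):
    """Covariance E[B_H(t) B_H(s)] of fractional Brownian motion."""
    return 0.5 * (
        abs(t) ** (2 * hurst) + abs(s) ** (2 * hurst) - abs(t - s) ** (2 * hurst)
    )


def successive_increment_covariance(hurst):
    """Covariance of the increments B_H(1) - B_H(0) and B_H(2) - B_H(1)."""
    def c(a, b):
        return fbm_covariance(a, b, hurst)

    # Expand E[(B(1) - B(0)) (B(2) - B(1))] by bilinearity of the covariance.
    return c(1, 2) - c(1, 1) - c(0, 2) + c(0, 1)


uncorrelated = successive_increment_covariance(0.5)   # Brownian motion
persistent = successive_increment_covariance(0.75)    # H > 1/2
```

Working the expansion out by hand gives 2^{2H-1} - 1, which is zero at H = 1/2, positive above it and negative below it.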

Brownian Noise

In science, Brownian noise, also known as Brown noise or red noise, is the type of signal noise produced by Brownian motion, hence its alternative name of random walk noise. The term "Brown noise" does not come from brown, the color, but from Robert Brown, who documented the erratic motion for multiple types of inanimate particles in water. The term "red noise" comes from the "white noise"/"white light" analogy; red noise is strong in longer wavelengths, similar to the red end of the visible spectrum.

Explanation: The graphic representation of the sound signal mimics a Brownian pattern. Its spectral density is inversely proportional to f^2, meaning it has higher intensity at lower frequencies, even more so than pink noise. It decreases in intensity by 6 dB per octave (20 dB per decade) and, when heard, has a "damped" or "soft" quality compared to white and p ...
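The decibel figures follow from the power law by simple arithmetic. This sketch assumes a spectral density proportional to 1/f^exponent and computes how far the power falls when the frequency is multiplied by a given ratio; the function name and default are illustrative.

```python
import math


def power_drop_db(frequency_ratio, exponent=2.0):
    """Decibel drop in spectral density across a frequency ratio for 1/f^exponent noise."""
    return 10.0 * math.log10(frequency_ratio ** exponent)


per_octave = power_drop_db(2.0)    # doubling the frequency
per_decade = power_drop_db(10.0)   # tenfold increase in frequency
```

With exponent 2 the drop is about 6.02 dB per octave and exactly 20 dB per decade; passing `exponent=1.0` gives the corresponding figures for a 1/f spectrum, roughly 3 dB per octave.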

Pink Noise

Pink noise, 1/f noise, fractional noise or fractal noise is a signal or process with a frequency spectrum such that the power spectral density (power per frequency interval) is inversely proportional to the frequency of the signal. In pink noise, each octave interval (halving or doubling in frequency) carries an equal amount of noise energy. Pink noise sounds like a waterfall. It is often used to tune loudspeaker systems in professional audio. Pink noise is one of the most commonly observed signals in biological systems. The name arises from the pink appearance of visible light with this power spectrum. This is in contrast with white noise, which has equal intensity per frequency interval.

Definition: Within the scientific literature, the term "1/f noise" is sometimes used loosely to refer to any noise with a power spectral density of the form S(f) \propto 1/f^\alpha, where f is frequency and 0 < \alpha < 2, with exponent \alpha usually close to 1. On ...

Log–log Plot

In science and engineering, a log–log graph or log–log plot is a two-dimensional graph of numerical data that uses logarithmic scales on both the horizontal and vertical axes. Power functions (relationships of the form y=ax^k) appear as straight lines in a log–log graph, with the exponent corresponding to the slope, and the coefficient corresponding to the intercept. Thus these graphs are very useful for recognizing these relationships and estimating parameters. Any base can be used for the logarithm, though most commonly base 10 (common logs) is used.

Relation with monomials: Given a monomial equation y=ax^k, taking the logarithm of the equation (with any base) yields \log y = k \log x + \log a. Setting X = \log x and Y = \log y, which corresponds to using a log–log graph, yields the equation Y = mX + b where m = k is the slope of the line (gradient) and b = \log a is the ...
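The monomial relation can be used to recover a and k numerically: fit a straight line to (log x, log y) and read k from the slope and a from the intercept. The following is a minimal sketch using ordinary least squares; the function name and the sample data are made up for illustration.

```python
import math


def fit_power_law(xs, ys):
    """Estimate (a, k) in y = a * x**k from a least-squares line in log-log space."""
    log_x = [math.log10(x) for x in xs]
    log_y = [math.log10(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((x - mean_x) ** 2 for x in log_x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log_x, log_y))
    k = sxy / sxx                 # slope of the line is the exponent
    b = mean_y - k * mean_x       # intercept is log10(a)
    return 10.0 ** b, k


# Hypothetical noise-free data generated from y = 3 * x**1.5.
xs = [1.0, 2.0, 4.0, 8.0, 16.0]
ys = [3.0 * x ** 1.5 for x in xs]
a, k = fit_power_law(xs, ys)
```

On noise-free data the fit returns the generating parameters up to rounding; with measured data the same fit gives an estimate whose quality depends on how well a single power law describes the range plotted.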