
Cox–Ingersoll–Ross Model

In mathematical finance, the Cox–Ingersoll–Ross (CIR) model describes the evolution of interest rates. It is a type of one-factor model (short-rate model), as it describes interest rate movements as driven by only one source of market risk. The model can be used in the valuation of interest rate derivatives. It was introduced in 1985 by John C. Cox, Jonathan E. Ingersoll and Stephen A. Ross as an extension of the Vasicek model, itself an Ornstein–Uhlenbeck process. The CIR model describes the instantaneous interest rate $r_t$ with a Feller square-root process, whose stochastic differential equation is $dr_t = a(b - r_t)\,dt + \sigma\sqrt{r_t}\,dW_t$, where $W_t$ is a Wiener process (modelling the random market risk factor) and $a$, $b$, and $\sigma$ are the parameters. The parameter $a$ corresponds to the speed of adjustment to the mean $b$, and $\sigma$ to volatility. The drift factor, $a(b - r_t)$, is exactly the same as in the Vasicek model. It ensures mean rev ...
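The stochastic differential equation above can be explored numerically. The following is a minimal sketch, not a reference implementation: it assumes an Euler–Maruyama discretization with truncation at zero (a scheme chosen here for illustration, not one named in the entry), and the function name `simulate_cir` and its arguments are hypothetical.

```python
import math
import random


def simulate_cir(r0, a, b, sigma, T, n_steps, seed=None):
    """Simulate one CIR path on [0, T] with a truncated Euler scheme.

    Assumption: negative intermediate values are clipped to zero before
    they enter the drift and the square root, so sqrt() is always defined.
    """
    rng = random.Random(seed)
    dt = T / n_steps
    r = r0
    path = [r0]
    for _ in range(n_steps):
        r_pos = max(r, 0.0)                     # clip before using r
        dw = rng.gauss(0.0, math.sqrt(dt))      # Wiener increment ~ N(0, dt)
        r = r + a * (b - r_pos) * dt + sigma * math.sqrt(r_pos) * dw
        path.append(max(r, 0.0))                # report the clipped rate
    return path


# Example: start below the long-run mean b and let the drift pull r_t up.
path = simulate_cir(r0=0.03, a=2.0, b=0.05, sigma=0.1, T=5.0, n_steps=500, seed=1)
```

With `sigma=0` the recursion is deterministic and the path converges to `b`, which is a quick way to check the mean-reverting drift term in isolation.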

Standard Deviation

In statistics, the standard deviation is a measure of the amount of variation of the values of a variable about its mean. A low standard deviation indicates that the values tend to be close to the mean (also called the expected value) of the set, while a high standard deviation indicates that the values are spread out over a wider range. The standard deviation is commonly used to determine what constitutes an outlier and what does not. Standard deviation may be abbreviated SD or std dev, and is most commonly represented in mathematical texts and equations by the lowercase Greek letter σ (sigma) for the population standard deviation, or the Latin letter s for the sample standard deviation. The standard deviation of a random variable, sample, statistical population, data set, or probability distribution is the square root of its variance. (For a finite population, v ...
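The distinction between the population standard deviation σ and the sample standard deviation s can be illustrated with a short sketch. The helper names and the data set below are illustrative assumptions; the results are cross-checked against Python's standard `statistics` module.

```python
import math
import statistics


def population_sd(values):
    """Square root of the variance with divisor n (sigma)."""
    n = len(values)
    mean = sum(values) / n
    return math.sqrt(sum((x - mean) ** 2 for x in values) / n)


def sample_sd(values):
    """Square root of the variance with divisor n - 1 (s)."""
    n = len(values)
    mean = sum(values) / n
    return math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))


data = [2, 4, 4, 4, 5, 5, 7, 9]   # illustrative data, mean 5
sigma = population_sd(data)        # squared deviations sum to 32, 32 / 8 = 4
s = sample_sd(data)                # 32 / 7, so s is slightly larger than sigma
```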

Basic Affine Jump Diffusion

In probability theory, a basic affine jump diffusion (basic AJD) is a stochastic process $Z$ of the form $dZ_t = \kappa(\theta - Z_t)\,dt + \sigma\sqrt{Z_t}\,dB_t + dJ_t, \qquad t \geq 0,\ Z_0 \geq 0$, where $B$ is a standard Brownian motion, and $J$ is an independent compound Poisson process with constant jump intensity $l$ and independent exponentially distributed jumps with mean $\mu$. For the process to be well defined, it is necessary that $\kappa\theta \geq 0$ and $\mu \geq 0$. A basic AJD is a special case of an affine process and of a jump diffusion. On the other hand, the Cox–Ingersoll–Ross (CIR) process is a special case of a basic AJD. Basic AJDs are attractive for modeling default times in credit risk applications, since both the moment generating function $m(q) = \operatorname{E}\left(e^{q\int_0^t Z_s\,ds}\right), \qquad q \in \mathbb{R}$, and the characteristic function ...

No-arbitrage Bounds

In financial mathematics, no-arbitrage bounds are mathematical relationships specifying limits on financial portfolio prices. These price bounds are a specific example of good-deal bounds, and are in fact the greatest extremes for good-deal bounds. The most frequent nontrivial example of no-arbitrage bounds is put–call parity for option prices. In incomplete markets, the bounds are given by the subhedging and superhedging prices. The essence of no-arbitrage in mathematical finance is excluding the possibility of "making money out of nothing" in the financial market. This is necessary because the existence of persistent risk-free arbitrage opportunities is not only unrealistic, but also contradicts the possibility of an e ...
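Put–call parity, mentioned above as the most frequent example, can be checked numerically. The sketch below assumes the textbook form for European options on a non-dividend-paying asset with continuous compounding, $C - P = S - K e^{-rT}$; the function name `parity_gap` and the sample prices are hypothetical.

```python
import math


def parity_gap(call, put, spot, strike, rate, maturity):
    """Return (C - P) - (S - K * exp(-r * T)).

    Assumption: European options, no dividends, continuously compounded
    risk-free rate. A gap of zero is consistent with no arbitrage; a
    non-zero gap (beyond trading costs) signals a parity violation.
    """
    return (call - put) - (spot - strike * math.exp(-rate * maturity))


# Illustrative numbers: derive the put price that parity implies for a call
# quoted at 10, then confirm that the resulting gap vanishes.
spot, strike, rate, maturity, call = 100.0, 100.0, 0.05, 1.0, 10.0
fair_put = call - spot + strike * math.exp(-rate * maturity)
gap = parity_gap(call, fair_put, spot, strike, rate, maturity)
```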

Discretization

In applied mathematics, discretization is the process of transferring continuous functions, models, variables, and equations into discrete counterparts. This process is usually carried out as a first step toward making them suitable for numerical evaluation and implementation on digital computers. Dichotomization is the special case of discretization in which the number of discrete classes is 2, which can approximate a continuous variable as a binary variable (creating a dichotomy for modeling purposes, as in binary classification). Discretization is also related to discrete mathematics, and is an important component of granular computing. In this context, discretization may also refer to modification of variable or category granularity, as when multiple discrete variables are aggregated or multiple discrete categories fused. Whenever continuous data is discretized, there is always some amount of discretization error. The goal is to reduce the amount to a level ...

Stochastic Simulation

A stochastic simulation is a simulation of a system that has variables that can change stochastically (randomly) with individual probabilities (Dlouhý, M.; Fábry, J.; Kuncová, M. Simulace pro ekonomy. Praha: VŠE, 2005). Realizations of these random variables are generated and inserted into a model of the system. Outputs of the model are recorded, and then the process is repeated with a new set of random values. These steps are repeated until a sufficient amount of data is gathered. In the end, the distribution of the outputs shows the most probable estimates as well as a frame of expectations regarding what ranges of values the variables are more or less likely to fall in. Often the random variables inserted into the model are created on a computer with a random number generator (RNG). The U(0,1) uniform distribution outputs of the random number generator are then transformed into random variables with the probability distributions that are used in the system model. Etymology St ...
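The transformation of U(0,1) outputs into another distribution can be sketched with inverse transform sampling. The example below targets the exponential distribution, chosen here purely for illustration; the function name `sample_exponential` is hypothetical.

```python
import math
import random


def sample_exponential(rate, n, seed=None):
    """Draw n exponential variates from U(0,1) draws by inverse transform.

    If U ~ U(0,1), then X = -ln(1 - U) / rate has CDF 1 - exp(-rate * x).
    random() returns values in [0, 1), so 1 - U is in (0, 1] and the
    logarithm is always defined.
    """
    rng = random.Random(seed)
    return [-math.log(1.0 - rng.random()) / rate for _ in range(n)]


# Repeat the draw many times and summarize the output distribution.
draws = sample_exponential(rate=2.0, n=100_000, seed=42)
mean_estimate = sum(draws) / len(draws)   # should be near 1 / rate
```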

Maximum Likelihood

In statistics, maximum likelihood estimation (MLE) is a method of estimating the parameters of an assumed probability distribution, given some observed data. This is achieved by maximizing a likelihood function so that, under the assumed statistical model, the observed data is most probable. The point in the parameter space that maximizes the likelihood function is called the maximum likelihood estimate. The logic of maximum likelihood is both intuitive and flexible, and as such the method has become a dominant means of statistical inference. If the likelihood function is differentiable, the derivative test for finding maxima can be applied. In some cases, the first-order conditions of the likelihood function can be solved analytically; for instance, the ordinary least squares estimator for a linear regression model maximizes the likelihood when the random errors are assumed to have normal distributions with the same variance. From the perspective of Bayesian inference, ML ...
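A case where the first-order conditions can be solved analytically is the normal distribution, whose maximum likelihood estimates are the sample mean and the variance with divisor n. The sketch below assumes independent, identically distributed normal data; the function names and data are illustrative.

```python
import math


def normal_log_likelihood(data, mu, var):
    """Log-likelihood of i.i.d. N(mu, var) observations."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2.0 * math.pi * var) - squared_error / (2.0 * var)


def normal_mle(data):
    """Closed-form maximizer: sample mean and variance with divisor n."""
    n = len(data)
    mu_hat = sum(data) / n
    var_hat = sum((x - mu_hat) ** 2 for x in data) / n
    return mu_hat, var_hat


data = [2, 4, 4, 4, 5, 5, 7, 9]          # illustrative observations
mu_hat, var_hat = normal_mle(data)
best = normal_log_likelihood(data, mu_hat, var_hat)
```

Evaluating `normal_log_likelihood` at nearby parameter values gives a smaller result than `best`, which is a simple numerical check that the closed form is a maximum.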

Ordinary Least Squares

In statistics, ordinary least squares (OLS) is a type of linear least squares method for choosing the unknown parameters in a linear regression model (with fixed level-one effects of a linear function of a set of explanatory variables) by the principle of least squares: minimizing the sum of the squares of the differences between the observed dependent variable (values of the variable being observed) in the input dataset and the output of the (linear) function of the independent variable. Some sources consider OLS to be linear regression. Geometrically, this is seen as the sum of the squared distances, parallel to the axis of the dependent variable, between each data point in the set and the corresponding point on the regression ...
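For a single explanatory variable, the least squares principle has a well-known closed-form solution. The following sketch assumes simple linear regression with an intercept; the function names `ols_fit` and `sum_squared_residuals` and the data are illustrative.

```python
def ols_fit(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """Objective that OLS minimizes: vertical squared distances to the line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]            # points lying exactly on y = 1 + 2x
intercept, slope = ols_fit(xs, ys)
```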

Mean Reversion (finance)

Mean reversion is a financial term for the assumption that an asset's price will tend to converge to the average price over time. Using mean reversion as a timing strategy involves both the identification of the trading range for a security and the computation of the average price using quantitative methods. Mean reversion is a phenomenon that can be exhibited in a host of financial time-series data, including price data, earnings data, and book value. When the current market price is less than the average past price, the security is considered attractive for purchase, with the expectation that the price will rise. When the current market price is above the average past price, the market price is expected to fall. In other words, deviations from the average price are expected to revert to the average. This expectation serves as the cornerstone of multiple trading strategies. Stock reporting services commonly offer moving averages for periods such as 50 and 100 days. While reporting s ...

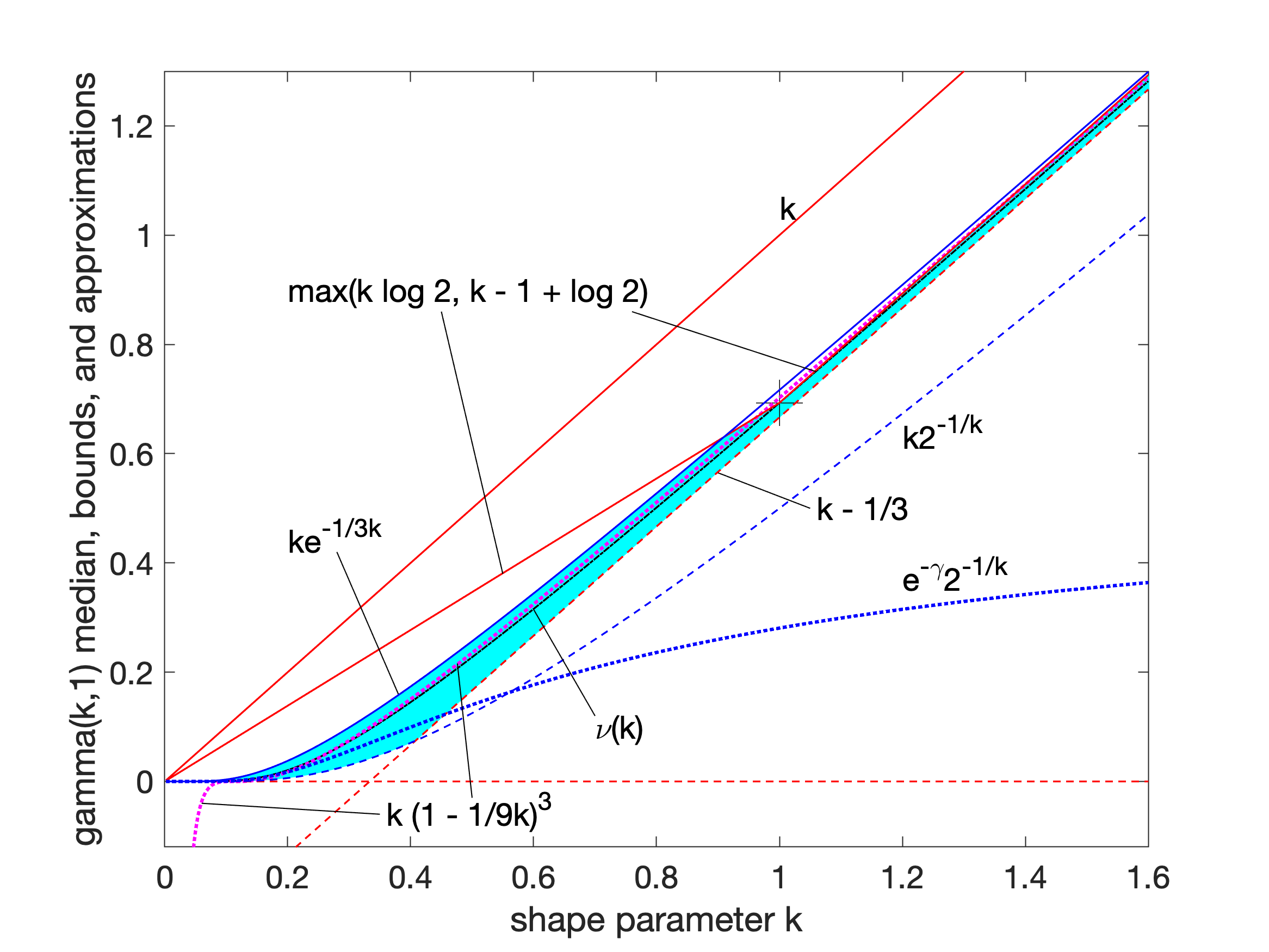

Gamma Distribution

In probability theory and statistics, the gamma distribution is a versatile two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use: (1) with a shape parameter $\alpha$ and a scale parameter $\theta$; (2) with a shape parameter $\alpha$ and a rate parameter $\lambda = 1/\theta$. In each of these forms, both parameters are positive real numbers. The distribution has important applications in various fields, including econometrics, Bayesian statistics, and life testing. In econometrics, the ($\alpha$, $\theta$) parameterization is common for modeling waiting times, such as the time until death, where it often takes the form of an Erlang distribution for integer $\alpha$ values. Bayesian statisticians prefer the ($\alpha$, $\lambda$) parameterization, utilizing the gamma distribution as a conjugate prior for several inverse scale parameters, facilit ...
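Converting between the two parameterizations is a common source of mistakes, so a small sketch may help. It relies on the standard library's `random.gammavariate(alpha, beta)`, whose second argument is the scale; the wrapper names below are hypothetical.

```python
import random


def gamma_moments_from_rate(alpha, rate):
    """Mean and variance under the (shape, rate) parameterization."""
    return alpha / rate, alpha / rate ** 2


def sample_gamma_rate(alpha, rate, n, seed=None):
    """Sample using a rate parameter by converting it to scale = 1 / rate."""
    rng = random.Random(seed)
    scale = 1.0 / rate
    # gammavariate expects (shape, scale), not (shape, rate).
    return [rng.gammavariate(alpha, scale) for _ in range(n)]


mean, variance = gamma_moments_from_rate(alpha=3.0, rate=2.0)   # 1.5 and 0.75
draws = sample_gamma_rate(alpha=3.0, rate=2.0, n=100_000, seed=7)
sample_mean = sum(draws) / len(draws)
```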

Noncentral Chi-squared Distribution

In probability theory and statistics, the noncentral chi-squared distribution (or noncentral chi-square distribution, noncentral $\chi^2$ distribution) is a noncentral generalization of the chi-squared distribution. It often arises in the power analysis of statistical tests in which the null distribution is (perhaps asymptotically) a chi-squared distribution; important examples of such tests are the likelihood-ratio tests. Let $(X_1, X_2, \ldots, X_i, \ldots, X_k)$ be $k$ independent, normally distributed random variables with means $\mu_i$ and unit variances. Then the random variable $\sum_{i=1}^k X_i^2$ is distributed according to the noncentral chi-squared distribution. It has two parameters: $k$, which specifies the number of degrees of freedom (i.e. the number of $X_i$), and $\lambda$, which is related to the means of the random variables $X_i$ by $\lambda = \sum_{i=1}^k \mu_i^2$. $\lambda$ is sometimes called the noncentrality parameter. Note that some references de ...
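The definition above translates directly into a sampler: draw $k$ unit-variance normals with the given means and sum their squares. The sketch below is illustrative only (the function name and the chosen means are assumptions) and compares the sample mean with the known expectation $k + \lambda$.

```python
import random


def sample_noncentral_chi2(means, n, seed=None):
    """Draw n variates as the sum of squares of N(mu_i, 1) variables."""
    rng = random.Random(seed)
    return [sum(rng.gauss(mu, 1.0) ** 2 for mu in means) for _ in range(n)]


means = [1.0, 2.0, 0.0]                    # illustrative means, so k = 3
k = len(means)
lam = sum(mu ** 2 for mu in means)         # noncentrality parameter, here 5
draws = sample_noncentral_chi2(means, n=50_000, seed=3)
sample_mean = sum(draws) / len(draws)      # expectation is k + lam
```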