Covariance Structure Modeling

Structural equation modeling (SEM) is a diverse set of methods used by scientists for both observational and experimental research. SEM is used mostly in the social and behavioral science fields, but it is also used in epidemiology, business, and other fields. A common definition of SEM is "...a class of methodologies that seeks to represent hypotheses about the means, variances, and covariances of observed data in terms of a smaller number of 'structural' parameters defined by a hypothesized underlying conceptual or theoretical model." SEM involves a model representing how various aspects of some phenomenon are thought to causally connect to one another. Structural equation models often contain postulated causal connections among some latent variables (variables thought to exist but which cannot be directly observed). Additional causal connections link those latent variables to observed variables whose values appear in a data set. The causal connections are represented using ...

Econometrics

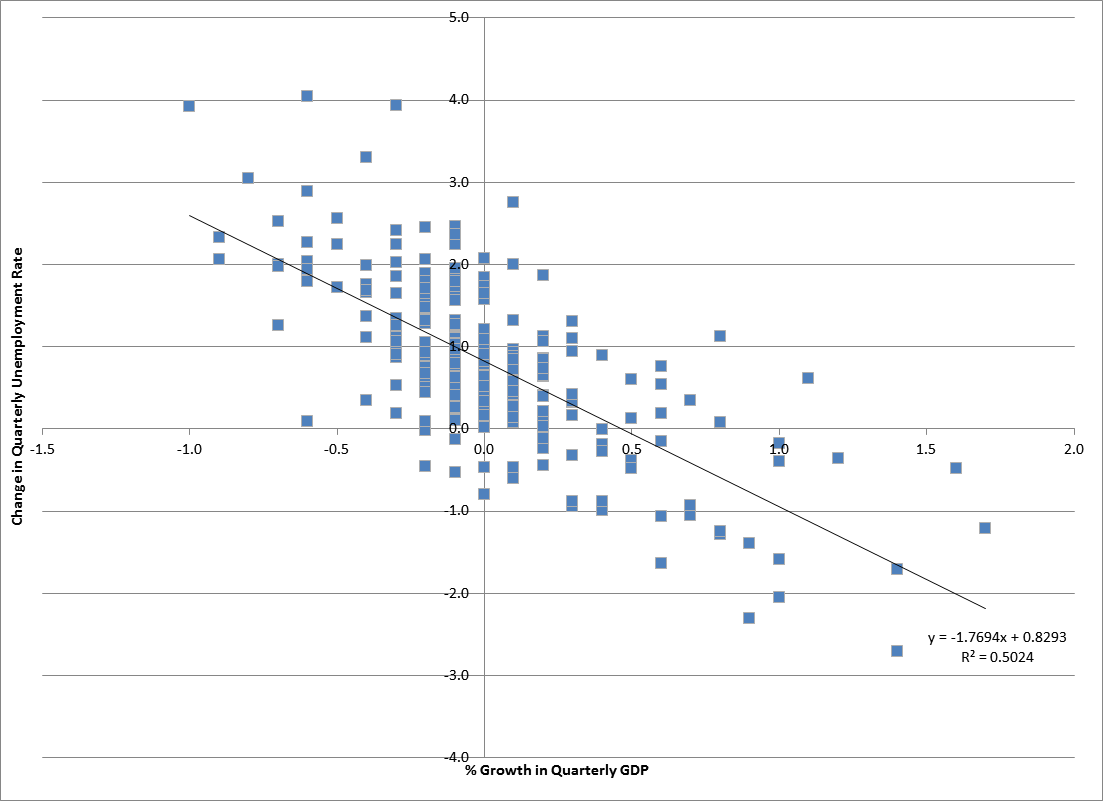

Econometrics is an application of statistical methods to economic data in order to give empirical content to economic relationships (M. Hashem Pesaran (1987), "Econometrics", The New Palgrave: A Dictionary of Economics, v. 2, pp. 8–22; reprinted in J. Eatwell et al., eds. (1990), Econometrics: The New Palgrave, pp. 1–34; 2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran). More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference." An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships." Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used today. A basic tool for econometrics is the multiple linear regression model. Econome ...
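The multiple linear regression model mentioned above can be illustrated with a minimal ordinary least squares sketch. It solves the normal equations (X'X)b = X'y in plain Python; the function names `solve_linear_system` and `ols_fit` and the toy data are assumptions made for this illustration, not part of any econometrics package.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols_fit(rows, y):
    """Return [intercept, b1, b2, ...] minimizing the sum of squared errors."""
    design = [[1.0] + list(r) for r in rows]  # prepend the intercept column
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated exactly from y = 1 + 2*x1 + 3*x2.
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
y = [1, 3, 4, 6, 8]
coefficients = ols_fit(rows, y)
print([round(c, 6) for c in coefficients])
```

Because the toy data contain no noise, the fit should recover the generating coefficients up to floating-point error. Real econometric work would normally rely on an established statistics library, which also reports standard errors.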

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Comparative Fit Index

In statistics, confirmatory factor analysis (CFA) is a special form of factor analysis, most commonly used in social science research (Kline, R. B. (2010), Principles and Practice of Structural Equation Modeling, 3rd ed., New York: Guilford Press). It is used to test whether measures of a construct are consistent with a researcher's understanding of the nature of that construct (or factor). As such, the objective of confirmatory factor analysis is to test whether the data fit a hypothesized measurement model. This hypothesized model is based on theory and/or previous analytic research. CFA was first developed by Jöreskog (1969) and has built upon and replaced older methods of analyzing construct validity such as the MTMM Matrix as described in Campbell & Fiske (1959). In confirmatory factor analysis, the researcher first develops a hypothesis about what factors they believe are underlying the measures used (e.g., "Depression" being the factor underlying the Beck De ...

Standardized Root Mean Squared Residual

Standardization (American English) or standardisation (British English) is the process of implementing and developing technical standards based on the consensus of different parties that include firms, users, interest groups, standards organizations and governments. Standardization can help maximize compatibility, interoperability, safety, repeatability, efficiency, and quality. It can also facilitate a normalization of formerly custom processes. In social sciences, including economics, the idea of standardization is close to the solution for a coordination problem, a situation in which all parties can realize mutual gains, but only by making mutually consistent decisions. Divergent national standards impose costs on consumers and can be a form of non-tariff trade barrier.

History, early examples: Standard weights and measures were developed by the Indus Valley civilization. Iwata, Shigeo (2008), "Weights and Measures in the Indus Valley", Encyclopaedia of the History of S ...

Root Mean Square Error Of Approximation

In vascular plants, the roots are the organs of a plant that are modified to provide anchorage for the plant and take in water and nutrients into the plant body, which allows plants to grow taller and faster. They are most often below the surface of the soil, but roots can also be aerial or aerating, that is, growing up above the ground or especially above water.

Function: The major functions of roots are absorption of water, plant nutrition and anchoring of the plant body to the ground.

Types of roots (major rooting system): Plants exhibit two main root system types, taproot and fibrous, with variations like adventitious, aerial, and buttress roots, each serving specific functions.

Taproot system: Characterized by a single, main root growing vertically downward, with smaller lateral roots branching off. Examples: dandelions, carrots, and many dicot plants.

Fibrous root system: Consists of a network of thin, branching roots that spread out from the base of the stem, l ...

Likelihood

A likelihood function (often simply called the likelihood) measures how well a statistical model explains observed data by calculating the probability of seeing that data under different parameter values of the model. It is constructed from the joint probability distribution of the random variable that (presumably) generated the observations. When evaluated on the actual data points, it becomes a function solely of the model parameters. In maximum likelihood estimation, the argument that maximizes the likelihood function serves as a point estimate for the unknown parameter, while the Fisher information (often approximated by the likelihood's Hessian matrix at the maximum) gives an indication of the estimate's precision. In contrast, in Bayesian statistics, the estimate of interest is the converse of the likelihood, the so-called posterior probability of the parameter given the observed data, which is calculated via Bayes' rule.

Definition: The likelihood function, para ...
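As a small illustration of the likelihood as a function of the parameter alone, the sketch below evaluates a Bernoulli log-likelihood on a fixed data set and compares the closed-form maximum likelihood estimate (the sample mean) with a brute-force grid search. The function names and the coin-flip data are hypothetical and chosen only for this example.

```python
import math


def bernoulli_log_likelihood(p, data):
    """Log-likelihood of success probability p given a list of 0/1 outcomes."""
    if not 0.0 < p < 1.0:
        return float("-inf")  # the log is undefined at the boundary values
    successes = sum(data)
    failures = len(data) - successes
    return successes * math.log(p) + failures * math.log(1.0 - p)


def bernoulli_mle(data):
    """Closed-form maximizer of the Bernoulli likelihood: the sample mean."""
    return sum(data) / len(data)


data = [1, 0, 1, 1, 0, 1, 1, 0]  # hypothetical observations, held fixed
p_hat = bernoulli_mle(data)

# With the data fixed, the likelihood depends only on p; scan a coarse grid
# to confirm that the maximum sits next to the closed-form estimate.
grid = [i / 100 for i in range(1, 100)]
best_on_grid = max(grid, key=lambda p: bernoulli_log_likelihood(p, data))
print(p_hat, best_on_grid)
```

The grid search is only a sanity check here; it shows that the maximizer of the likelihood and the analytical estimate agree to within the grid spacing.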

Statistical Model

A statistical model is a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population). A statistical model represents, often in considerably idealized form, the data-generating process. When referring specifically to probabilities, the corresponding term is probabilistic model. All statistical hypothesis tests and all statistical estimators are derived via statistical models. More generally, statistical models are part of the foundation of statistical inference. A statistical model is usually specified as a mathematical relationship between one or more random variables and other non-random variables. As such, a statistical model is "a formal representation of a theory" (Herman Adèr quoting Kenneth Bollen).

Introduction: Informally, a ...

Parameter

A parameter, generally, is any characteristic that can help in defining or classifying a particular system (meaning an event, project, object, situation, etc.). That is, a parameter is an element of a system that is useful, or critical, when identifying the system, or when evaluating its performance, status, condition, etc. Parameter has more specific meanings within various disciplines, including mathematics, computer programming, engineering, statistics, logic, linguistics, and electronic musical composition. In addition to its technical uses, there are also extended uses, especially in non-scientific contexts, where it is used to mean defining characteristics or boundaries, as in the phrases 'test parameters' or 'game play parameters'.

Modelization: When a system is modeled by equations, the values that describe the system are called parameters. For example, in mechanics, the masses, the dimensions and shapes (for solid bodies), the densities and t ...

Akaike Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information crite ...
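The trade-off between fit and simplicity can be made concrete with the standard formula AIC = 2k − 2 ln(L̂), where k is the number of estimated parameters and L̂ is the maximized likelihood. The sketch below applies it to two candidate models; the model names and log-likelihood values are invented for illustration.

```python
def aic(log_likelihood, num_params):
    """Akaike information criterion: 2k - 2 ln(L_hat). Lower is better."""
    return 2 * num_params - 2 * log_likelihood


# Hypothetical candidates: (maximized log-likelihood, number of parameters).
candidates = {
    "simple": (-120.5, 2),
    "complex": (-119.8, 5),
}

scores = {name: aic(ll, k) for name, (ll, k) in candidates.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

In this made-up comparison the more complex model fits slightly better, but its three extra parameters cost more than the gain in log-likelihood, so the simpler model has the lower AIC. Only differences in AIC between models fitted to the same data are meaningful.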

Chi-square Test

A chi-squared test (also chi-square or χ² test) is a statistical hypothesis test used in the analysis of contingency tables when the sample sizes are large. In simpler terms, this test is primarily used to examine whether two categorical variables (two dimensions of the contingency table) are independent in influencing the test statistic (values within the table). The test is valid when the test statistic is chi-squared distributed under the null hypothesis, specifically Pearson's chi-squared test and variants thereof. Pearson's chi-squared test is used to determine whether there is a statistically significant difference between the expected frequencies and the observed frequencies in one or more categories of a contingency table. For contingency tables with smaller sample sizes, a Fisher's exact test is used instead. In the standard applications of this test, the observations are classified into mutually exclusive classes. If the null hypothesis that there are no dif ...
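The comparison of observed and expected frequencies can be sketched as follows. The code computes Pearson's statistic, the sum over cells of (observed − expected)² / expected, with expected counts under independence given by row total × column total / grand total, and (rows − 1) × (columns − 1) degrees of freedom. The function name and the example tables are assumptions for this illustration, and the sketch assumes every expected count is positive.

```python
def pearson_chi_squared(table):
    """Return (statistic, degrees_of_freedom) for a contingency table.

    `table` is a list of rows of observed counts.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical 2x2 tables of observed counts.
associated = [[10, 20], [20, 10]]
independent = [[10, 20], [20, 40]]

print(pearson_chi_squared(associated))
print(pearson_chi_squared(independent))
```

The statistic would then be compared against a chi-squared distribution with the returned degrees of freedom to obtain a p-value, a step left out here to keep the sketch free of external dependencies.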

Chi-squared Test

A chi-squared test (also chi-square or χ² test) is a statistical hypothesis test used in the analysis of contingency tables when the sample sizes are large. In simpler terms, this test is primarily used to examine whether two categorical variables (two dimensions of the contingency table) are independent in influencing the test statistic (values within the table). The test is valid when the test statistic is chi-squared distributed under the null hypothesis, specifically Pearson's chi-squared test and variants thereof. Pearson's chi-squared test is used to determine whether there is a statistically significant difference between the expected frequencies and the observed frequencies in one or more categories of a contingency table. For contingency tables with smaller sample sizes, a Fisher's exact test is used instead. In the standard application ...

Path Analysis (statistics)

In statistics, path analysis is used to describe the directed dependencies among a set of variables. This includes models equivalent to any form of multiple regression analysis, factor analysis, canonical correlation analysis, discriminant analysis, as well as more general families of models in the multivariate analysis of variance and covariance analyses (MANOVA, ANOVA, ANCOVA). In addition to being thought of as a form of multiple regression focusing on causality, path analysis can be viewed as a special case of structural equation modeling (SEM), one in which only single indicators are employed for each of the variables in the causal model. That is, path analysis is SEM with a structural model, but no measurement model. Other terms used to refer to path analysis include causal modeling and analysis of covariance structures. Path analysis is considered by Judea Pearl to be a direct ancestor to the techniques of causal inference.

History: Path analysis was developed ...