
Convolutional Layer

In artificial neural networks, a convolutional layer is a type of network layer that applies a convolution operation to the input. Convolutional layers are some of the primary building blocks of convolutional neural networks (CNNs), a class of neural network most commonly applied to images, video, audio, and other data that have the property of uniform translational symmetry. The convolution operation in a convolutional layer involves sliding a small window (called a kernel or filter) across the input data and computing the dot product between the values in the kernel and the input at each position. This process creates a feature map that represents detected features in the inpu ...
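
The sliding-window dot product described above can be sketched in plain Python. This is a minimal illustration, not a library implementation: the function name `conv2d_valid`, the toy `image`, and the `kernel` are all hypothetical, and the sketch assumes a single channel, no padding, and a stride of 1. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1

    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the window whose top-left corner is (i, j).
            acc = 0
            for di in range(k_h):
                for dj in range(k_w):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Toy input (assumed for illustration): dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Horizontal difference kernel: responds where intensity steps up from left to right.
kernel = [[-1, 1]]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map is nonzero only in the column where the vertical edge sits, which is the sense in which a feature map "represents detected features".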

Artificial Neural Networks

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals, processes them, and can signal the neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called edges. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typicall ...
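
As a rough sketch of the computation described above, the following shows a single artificial neuron in two variants. The names `sigmoid_neuron` and `threshold_neuron` are hypothetical, and the sigmoid is only one assumed choice for the "non-linear function"; the text does not name a specific one.

```python
import math


def weighted_sum(inputs, weights):
    """Aggregate signal: each input scaled by the weight of its edge."""
    return sum(x * w for x, w in zip(inputs, weights))


def sigmoid_neuron(inputs, weights):
    """Output is a non-linear function (here: a sigmoid, by assumption) of the weighted sum."""
    return 1.0 / (1.0 + math.exp(-weighted_sum(inputs, weights)))


def threshold_neuron(inputs, weights, threshold):
    """Send a signal (1) only if the aggregate signal crosses the threshold."""
    return 1 if weighted_sum(inputs, weights) > threshold else 0


print(sigmoid_neuron([1.0, 2.0], [0.5, -0.25]))     # 0.5, because the weighted sum is 0
print(threshold_neuron([1, 1], [0.6, 0.6], 1.0))    # 1, aggregate 1.2 crosses 1.0
print(threshold_neuron([1, 0], [0.6, 0.6], 1.0))    # 0, aggregate 0.6 does not
```

Learning, in this picture, amounts to adjusting the values in `weights` so that the outputs move toward the desired ones.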

Yann LeCun

Yann André LeCun (originally spelled Le Cun; born 8 July 1960) is a French computer scientist working primarily in the fields of machine learning, computer vision, mobile robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at New York University and Vice-President, Chief AI Scientist at Meta. He is well known for his work on optical character recognition and computer vision using convolutional neural networks (CNNs), and is a founding father of convolutional nets. He is also one of the main creators of the DjVu image compression technology (together with Léon Bottou and Patrick Haffner). He co-developed the Lush programming language with Léon Bottou. LeCun received the 2018 Turing Award (often referred to as the "Nobel Prize of Computing"), together with Yoshua Bengio and Geoffrey Hinton, for their work on deep learning. The three are sometimes referred to as the "Godfathers of AI" and "Godfathers of Deep Lea ...

Computer Vision

Computer vision is an interdisciplinary scientific field that deals with how computers can gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to understand and automate tasks that the human visual system can do. Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scien ...

Deep Learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised. Deep-learning architectures such as deep neural networks, deep belief networks, deep reinforcement learning, recurrent neural networks, convolutional neural networks and transformers have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climate science, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance. Artificial neural networks (ANNs) were inspired by information processing and distr ...

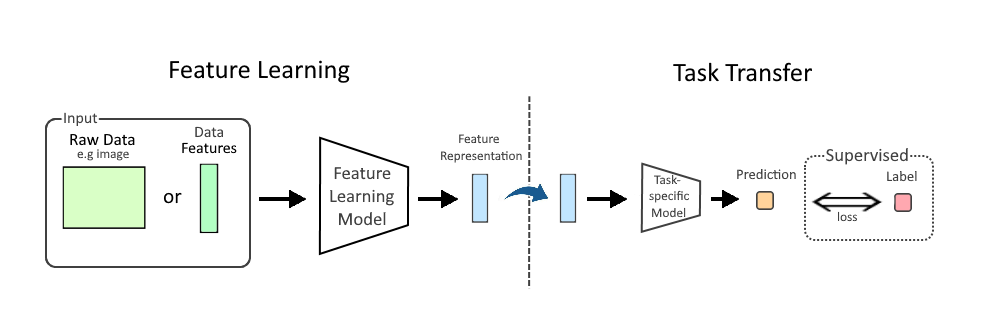

Feature Learning

In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that machine learning tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data such as images, video, and sensor data have not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. Feature learning can be supervised, unsupervised or self-supervised.

* In supervised feature learning, features are learned using labeled input data. Labeled data includes input-label pairs where t ...

Pooling Layer

In neural networks, a pooling layer is a kind of network layer that downsamples and aggregates information that is dispersed among many vectors into fewer vectors. It has several uses: it removes redundant information, reducing the amount of computation and memory required; it makes the model more robust to small variations in the input; and it increases the receptive field of neurons in later layers of the network.

Pooling is most commonly used in convolutional neural networks (CNNs). Below is a description of pooling in 2-dimensional CNNs. The generalization to n dimensions is immediate. As notation, we consider a tensor x \in \R^{H \times W \times C}, where H is height, W is width, and C is the number of channels. A pooling layer outputs a tensor y. We define two variables f, s called "filter size" (aka "kernel size") and "stride". Sometimes, it is necessary to use a different filter size and stride for horizontal and vertical directions. In such cases, we ...
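
To make the roles of the filter size f and the stride s concrete, here is a minimal sketch of pooling on a single channel. It assumes max pooling as the aggregation (the description above does not fix a particular one), square windows, and no padding; the name `max_pool2d` and the toy input are hypothetical.

```python
def max_pool2d(x, f, s):
    """Max-pool a 2-D list `x` with an f-by-f window moved in steps of s (no padding)."""
    h, w = len(x), len(x[0])
    out_h = (h - f) // s + 1
    out_w = (w - f) // s + 1
    return [
        [
            # Aggregate the f*f window whose top-left corner is (i*s, j*s).
            max(x[i * s + di][j * s + dj] for di in range(f) for dj in range(f))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


x = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 1, 6],
]
y = max_pool2d(x, f=2, s=2)
print(y)  # [[4, 5], [3, 7]]
```

With f = 2 and s = 2 the 4-by-4 input shrinks to 2-by-2, so each output value summarizes a region of four input values. For a multi-channel tensor, the same operation would typically be applied to each channel independently.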

Convolutional Neural Network

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of artificial neural network (ANN), most commonly applied to analyze visual imagery. CNNs are also known as Shift Invariant or Space Invariant Artificial Neural Networks (SIANN), based on the shared-weight architecture of the convolution kernels or filters that slide along input features and provide translation-equivariant responses known as feature maps. Counter-intuitively, most convolutional neural networks are not invariant to translation, due to the downsampling operation they apply to the input. They have applications in image and video recognition, recommender systems, image classification, image segmentation, medical image analysis, natural language processing, brain–computer interfaces, and financial time series. CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons usually mean fully connected networks, that is, each neuron in one layer is connected to ...
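
The translation-equivariance property mentioned above can be checked on a toy 1-D example: shifting the input and then applying the shared kernel gives the same result as applying the kernel and then shifting the feature map. This is only a sketch under stated assumptions: the helper names `correlate1d` and `shift_right` are hypothetical, the shift pads with zeros, and the signal has enough trailing zeros that nothing is pushed off the end.

```python
def correlate1d(signal, kernel):
    """Slide `kernel` along `signal` (no padding, stride 1); the same weights are used at every position."""
    k = len(kernel)
    return [
        sum(signal[i + d] * kernel[d] for d in range(k))
        for i in range(len(signal) - k + 1)
    ]


def shift_right(values, steps):
    """Translate a sequence to the right by `steps`, filling with zeros."""
    return [0] * steps + values[: len(values) - steps]


signal = [0, 0, 1, 2, 1, 0, 0, 0]
kernel = [1, -1]

response = correlate1d(signal, kernel)
response_of_shifted = correlate1d(shift_right(signal, 2), kernel)

print(response)             # [0, -1, -1, 1, 1, 0, 0]
print(response_of_shifted)  # [0, 0, 0, -1, -1, 1, 1]
print(response_of_shifted == shift_right(response, 2))  # True
```

The feature map moves along with the input rather than staying the same, which is equivariance, not invariance.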

Alex Krizhevsky

Alex Krizhevsky is a Ukrainian-born Canadian computer scientist most noted for his work on artificial neural networks and deep learning. Shortly after winning the ImageNet challenge in 2012 with AlexNet, he and his colleagues sold their startup, DNN Research Inc., to Google. Krizhevsky left Google in September 2017 after losing interest in the work, and joined the company Dessa to work in support of new deep-learning techniques. Many of his numerous papers on machine learning and computer vision are frequently cited by other researchers. He is the creator of the CIFAR-10 and CIFAR-100 datasets.

Primary Visual Cortex

The visual cortex of the brain is the area of the cerebral cortex that processes visual information. It is located in the occipital lobe. Sensory input originating from the eyes travels through the lateral geniculate nucleus in the thalamus and then reaches the visual cortex. The area of the visual cortex that receives the sensory input from the lateral geniculate nucleus is the primary visual cortex, also known as visual area 1 (V1), Brodmann area 17, or the striate cortex. The extrastriate areas consist of visual areas 2, 3, 4, and 5 (also known as V2, V3, V4, and V5, or Brodmann area 18 and all Brodmann area 19). Both hemispheres of the brain include a visual cortex; the visual cortex in the left hemisphere receives signals from the right visual field, and the visual cortex in the right hemisphere receives signals from the left visual field.

The primary visual cortex (V1) is located in and around the calcarine fissure in the occipital lobe. Each hemispher ...

Simple Cell

A simple cell in the primary visual cortex is a cell that responds primarily to oriented edges and gratings (bars of particular orientations). These cells were discovered by Torsten Wiesel and David Hubel in the late 1950s. Such cells are tuned to different frequencies and orientations, even with different phase relationships, possibly for extracting disparity (depth) information and attributing depth to detected lines and edges. This may result in a 3D "wire-frame" representation as used in computer graphics. The fact that input from the left and right eyes is very close in the so-called cortical hypercolumns is an indication that depth processing occurs at a very early stage, aiding recognition of 3D objects. Later, many other cells with specific functions were discovered: (a) end-stopped cells, which are thought to detect singularities like line and edge crossings, vertices and line endings; (b) bar and grating cells. The latter are not linear operators because a bar ...

Bruno Olshausen

Bruno Adolphus Olshausen is an American neuroscientist and professor at the University of California, Berkeley, known for his work on computational neuroscience, vision science, and sparse coding. He currently serves as a Professor in the Helen Wills Neuroscience Institute and the UC Berkeley School of Optometry, with an affiliated appointment in Electrical Engineering and Computer Sciences. He is also the Director of the Redwood Center for Theoretical Neuroscience at UC Berkeley.

Olshausen received his B.S. and M.S. degrees in Electrical Engineering from Stanford University in 1986 and 1987 respectively. He earned his Ph.D. in Computation and Neural Systems from the California Institute of Technology in 1994. After completing his doctoral studies, he held postdoctoral positions at the Department of Psychology, Cornell University, and the Center for Biological and Computational Learning, Massachusetts Institute of Technology. Olshausen has served in several editorial and advis ...
