Color Normalization

Color normalization is a topic in computer vision concerned with artificial color vision and object recognition. In general, the distribution of color values in an image depends on the illumination, which may vary depending on lighting conditions, cameras, and other factors. Color normalization allows object recognition techniques based on color to compensate for these variations. Main concepts: Color constancy is a feature of the human internal model of perception, which provides humans with the ability to assign a relatively constant color to objects even under different illumination conditions. This is helpful for object recognition as well as identification of light sources in an environment. For example, humans see an object as approximately the same color when the sun is bright or when the sun is dim. Applications: Color normalization has been used for object recognition on color images in the fields of robotics, bioinformatics and general artifici ...
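As a rough illustration of compensating for an illumination color cast, the sketch below applies the gray-world assumption. This is one simple normalization technique chosen for the example, not a method named in the text above. It rescales each channel so that the three channel means become equal. The function name `gray_world` and the flat list-of-RGB-tuples image representation are assumptions made for this sketch.

```python
def gray_world(pixels):
    """Gray-world color normalization (illustrative sketch).

    pixels: list of (R, G, B) tuples with values in 0..255.
    Each channel is scaled so that its mean matches the overall mean
    intensity, which removes a uniform color cast from the illuminant.
    """
    n = len(pixels)
    if n == 0:
        return []
    # Mean of each channel over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    # Per-channel gain; leave a channel untouched if it is entirely zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Apply the gains and clip to the valid 8-bit range.
    return [
        tuple(min(255, round(p[c] * gains[c])) for c in range(3))
        for p in pixels
    ]


# Two pixels under a reddish illuminant: the red channel is twice as strong.
print(gray_world([(200, 100, 100), (100, 50, 50)]))
```

After normalization the red cast is gone and both pixels come out neutral gray. Real scenes rarely satisfy the gray-world assumption exactly, so this is only a starting point.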

Computer Vision

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds ...

Color Vision

Color vision, a feature of visual perception, is an ability to perceive differences between light composed of different frequencies independently of light intensity. Color perception is a part of the larger visual system and is mediated by a complex process between neurons that begins with differential stimulation of different types of photoreceptors by light entering the eye. Those photoreceptors then emit outputs that are propagated through many layers of neurons ultimately leading to higher cognitive functions in the brain. Color vision is found in many animals and is mediated by similar underlying mechanisms with common types of biological molecules and a complex history of the evolution of color vision within different animal taxa. In primates, color vision may have evolved under selective pressure for a variety of visual tasks including the foraging for nutritious young leaves, ripe fruit, and flowers, as well as detecting predator camouflage and emotional states in othe ...

Color Constancy

Color constancy is an example of subjective constancy and a feature of the human color perception system which ensures that the perceived color of objects remains relatively constant under varying illumination conditions. A green apple, for instance, looks green to us at midday, when the main illumination is white sunlight, and also at sunset, when the main illumination is red. This helps us identify objects. History: Ibn al-Haytham gave an early explanation of color constancy by observing that the light reflected from an object is modified by the object's color. He explained that the quality of the light and the color of the object are mixed, and the visual system separates light and color. He writes: Again the light does not travel from the colored object to the eye unaccompanied by the color, nor does the form of the color pass from the colored object to the eye unaccompanied by the light. Neither the form of the light nor that of the color existing in the colored objec ...

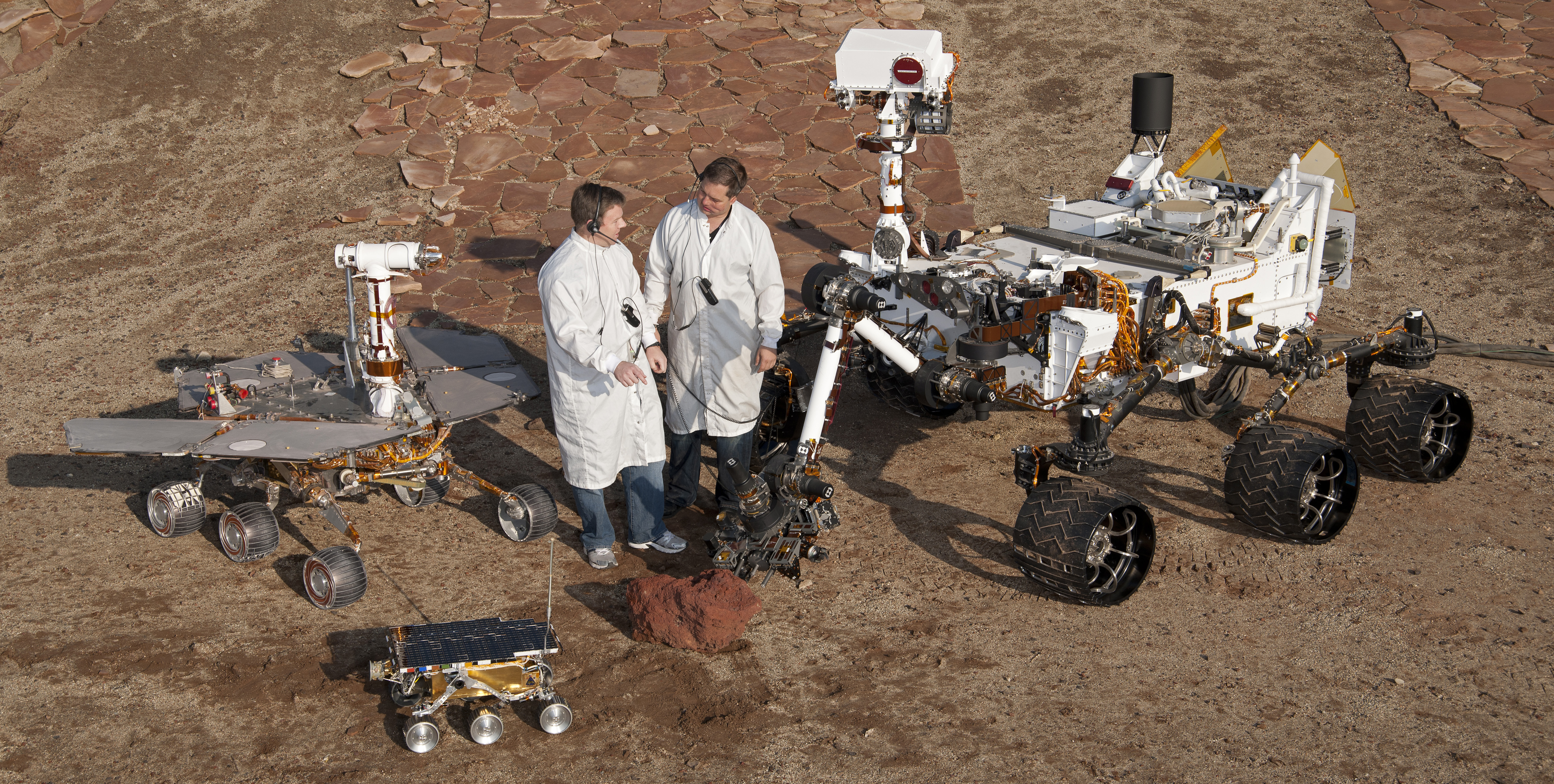

Robotics

Robotics is the interdisciplinary study and practice of the design, construction, operation, and use of robots. Within mechanical engineering, robotics is the design and construction of the physical structures of robots, while in computer science, robotics focuses on robotic automation algorithms. Other disciplines contributing to robotics include electrical, control, software, information, electronic, telecommunication, computer, mechatronic, and materials engineering. The goal of most robotics is to design machines that can help and assist humans. Many robots are built to do jobs that are hazardous to people, such as finding survivors in unstable ruins, and exploring space, mines and shipwrecks. Others replace people in jobs that are boring, repetitive, or unpleasant, such as cleaning, ...

Bioinformatics

Bioinformatics is an interdisciplinary field of science that develops methods and software tools for understanding biological data, especially when the data sets are large and complex. Bioinformatics uses biology, chemistry, physics, computer science, data science, computer programming, information engineering, mathematics and statistics to analyze and interpret biological data. The process of analyzing and interpreting data can sometimes be referred to as computational biology; however, this distinction between the two terms is often disputed. To some, the term *computational biology* refers to building and using models of biological systems. Computational, statistical, and computer programming techniques have been used for computer simulation analyses of biological queries. They include reused specific analysis "pipelines", particularly in the field of genomics, such as by the identification of genes and single nucleotide polymorphis ...

Artificial Intelligence

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., ...

Surveillance

Surveillance is the monitoring of behavior, many activities, or information for the purpose of information gathering, influencing, managing, or directing. This can include observation from a distance by means of electronic equipment, such as closed-circuit television (CCTV), or interception of electronically transmitted information like Internet traffic. Increasingly, governments may also obtain consumer data through the purchase of online information, effectively expanding surveillance capabilities through commercially available digital records. It can also include simple technical methods, such as human intelligence gathering and postal interception. Surveillance is used by citizens, for instance for protecting their neighborhoods. It is widely used by governments for intelligence gathering, including espionage, prevention of crime, the protection of a process, person, group or object, or the investigat ...

Thresholding (image Processing)

In digital image processing, thresholding is the simplest method of segmenting images. From a grayscale image, thresholding can be used to create binary images. Definition: The simplest thresholding methods replace each pixel in an image with a black pixel if the image intensity $I_{i,j}$ is less than a fixed value called the threshold $T$, or a white pixel if the pixel intensity is greater than that threshold. For an image of a dark tree against bright snow, for example, this results in the tree becoming completely black and the snow becoming completely white. Automatic thresholding: While in some cases the threshold $T$ can be selected manually by the user, there are many cases where the user wants the threshold to be set automatically by an algorithm. In those cases, the threshold should be the "best" threshold in the sense that the partition of the pixels above and below the threshold should match as closely as possible the actual partition between the two classes of objects represented by those ...
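The fixed-threshold rule described above can be sketched in a few lines of plain Python. The second function shows one possible way to choose the threshold automatically, using Otsu's between-class variance criterion; that is a well-known choice picked for this example, not a method named in the text. The function names, the nested-list image representation, and the decision to map pixels exactly equal to the threshold to white are all assumptions of this sketch.

```python
def threshold(image, t):
    """Binarize a grayscale image given as a list of rows of ints (0..255).

    Pixels with intensity < t become 0 (black); all others become 255.
    Mapping intensity == t to white is an assumption made here.
    """
    return [[0 if v < t else 255 for v in row] for row in image]


def otsu_threshold(image, levels=256):
    """Pick t by maximizing the between-class variance (Otsu's criterion)."""
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with intensity < t
    sum0 = 0  # sum of the intensities of those pixels
    for t in range(1, levels):
        w0 += hist[t - 1]
        sum0 += (t - 1) * hist[t - 1]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the variance is undefined
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor of 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# A dark row and a bright row, loosely mimicking "tree" and "snow".
img = [[10, 12, 11], [200, 205, 198]]
t = otsu_threshold(img)
print(t, threshold(img, t))
```

Any threshold strictly between the dark and the bright group separates the two classes equally well for this toy image, and the sketch returns the smallest such value.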

Springer Publishing

Springer Publishing Company is an American publishing company of academic journals and books, focusing on the fields of nursing, gerontology, psychology, social work, counseling, public health, and rehabilitation (neuropsychology). It was established in 1951 by Bernhard Springer, a great-grandson of Julius Springer, and is based in Midtown Manhattan, New York City. History: Springer Publishing Company was founded in 1950 by Bernhard Springer, the Berlin-born great-grandson of Julius Springer, who founded Springer-Verlag (now Springer Science+Business Media). Springer Publishing's first landmark publications included *Livestock Health Encyclopedia* by R. Seiden and the 1952 *Handbook of Cardiology for Nurses*. The company's books soon branched into other fields, including medicine and psychology. Nursing publications grew rapidly in number, as Modell's *Drugs in Current Use*, a small annual paperback, sold over 150,000 copies over several edi ...

No Free Lunch Theorem

In mathematical folklore, the "no free lunch" (NFL) theorem (sometimes pluralized) of David Wolpert and William Macready alludes to the saying "no such thing as a free lunch", that is, there are no easy shortcuts to success. It appeared in the 1997 paper "No Free Lunch Theorems for Optimization". Wolpert had previously derived no free lunch theorems for machine learning (statistical inference) [Wolpert, David (1996), "The Lack of *A Priori* Distinctions between Learning Algorithms", *Neural Computation*, pp. 1341–1390]. In 2005, Wolpert and Macready themselves indicated that the first theorem in their paper "state[s] that any two optimization algorithms are equivalent when their performance is averaged across all possible problems". The "no free lunch" (NFL) theorem is an easily stated and easily understood consequence of theorems Wolpert and Macready actually prove. It is objectively weaker than the proven theorems, and thus does not encapsulate them. Various investigators have exte ...

Histogram Equalization

Histogram equalization is a method in image processing of contrast adjustment using the image's histogram. Histogram equalization is a specific case of the more general class of histogram remapping methods. These methods seek to adjust the image to make it easier to analyze or improve visual quality (e.g., retinex). Overview: This method usually increases the global contrast of many images, especially when the image is represented by a narrow range of intensity values. Through this adjustment, the intensities can be better distributed on the histogram, utilizing the full range of intensities evenly. This allows for areas of lower local contrast to gain a higher contrast. Histogram equalization accomplishes this by effectively spreading out the highly populated intensity values, which tend to degrade image contrast. The method is useful in images with backgrounds and foregrounds that are both bright or both dark. In particular, the method can lead to better views of bone s ...
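The spreading-out of intensities can be sketched with the common cumulative-histogram mapping: each gray level is replaced by its rescaled position in the cumulative distribution of the image. The function name `equalize`, the nested-list image representation, and the specific rounding rule are assumptions made for this sketch, and other formulations exist.

```python
def equalize(image, levels=256):
    """Histogram-equalize a grayscale image (list of rows of ints).

    Uses the mapping
        v -> round((cdf(v) - cdf_min) * (levels - 1) / (N - cdf_min)),
    where cdf is the cumulative histogram, cdf_min its smallest non-zero
    value, and N the number of pixels.
    """
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1

    # Cumulative histogram.
    cdf, running = [], 0
    for h in hist:
        running += h
        cdf.append(running)

    total = cdf[-1]
    cdf_min = next((c for c in cdf if c > 0), 0)
    if total == cdf_min:
        # Empty or constant image: there is nothing to spread out.
        return [row[:] for row in image]

    # Look-up table; levels below the darkest pixel are clamped to 0.
    lut = [
        max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min)))
        for c in cdf
    ]
    return [[lut[v] for v in row] for row in image]


# Four nearly identical gray levels get spread over the full 0..255 range.
print(equalize([[100, 101], [102, 103]]))
```

In this toy input the four intensities occupy a very narrow range; after equalization they are evenly spaced between black and white, which is the contrast gain the overview describes.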

Gaussian Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$. The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma^2$ is the variance. The standard deviation of the distribution is $\sigma$ (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a ...
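As a small numerical illustration, the sketch below evaluates the normal density for a given mean and standard deviation using only the Python standard library. The function name `normal_pdf` and its parameter names `mu` and `sigma` are choices made for this example.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of a normal distribution with mean mu and std. dev. sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    # exp(-(x - mu)^2 / (2 sigma^2)) / sqrt(2 pi sigma^2)
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


# The peak of the standard normal density is 1 / sqrt(2 * pi).
print(normal_pdf(0.0))

# Crude Riemann sum over [-8, 8] as a sanity check that the area is close to 1.
step = 0.001
area = sum(normal_pdf(-8.0 + i * step) * step for i in range(16000))
print(area)
```

The density is symmetric about `mu` and, for fixed `x - mu`, flattens as `sigma` grows, which matches the roles of the two parameters described above.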