
Cache Stampede

A cache stampede is a type of cascading failure that can occur when massively parallel computing systems with caching mechanisms come under very high load. This behaviour is sometimes also called dog-piling. To understand how cache stampedes occur, consider a web server that uses memcached to cache rendered pages for some period of time, to ease system load. Under particularly high load to a single URL, the system remains responsive as long as the resource remains cached, with requests being handled by accessing the cached copy. This minimizes the expensive rendering operation. Under low load, cache misses result in a single recalculation of the rendering operation. The system will continue as before, with the average load bein ...
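The difference between the low-load and high-load cases can be sketched with a small simulation. This is a minimal illustration rather than a model of any real deployment: the dictionary stands in for memcached, `render_page` and `simulate_requests` are hypothetical names, and the barrier is an assumption used to force all requests to look at the cache before any of them stores a result.

```python
import threading


def simulate_requests(n_requests: int) -> int:
    """Return how many times the expensive render ran for n simultaneous requests."""
    cache = {}  # stands in for memcached (assumption for illustration)
    render_count = 0
    count_lock = threading.Lock()
    # Every request checks the cache before any request stores a result,
    # which models requests arriving at the same moment after expiry.
    all_checked = threading.Barrier(n_requests)

    def render_page(url: str) -> str:
        nonlocal render_count
        with count_lock:
            render_count += 1
        return f"<html>rendered {url}</html>"

    def handle_request(url: str) -> None:
        cached = cache.get(url)
        all_checked.wait()
        if cached is None:  # cache miss: recompute and store
            cache[url] = render_page(url)

    threads = [
        threading.Thread(target=handle_request, args=("/popular",))
        for _ in range(n_requests)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return render_count


if __name__ == "__main__":
    print("low load renders:", simulate_requests(1))
    print("high load renders:", simulate_requests(8))
```

With a single request the page is rendered once; with several simultaneous requests each one sees the miss and repeats the same expensive render.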

Cascading Failure

A cascading failure is a failure in a system of interconnected parts in which the failure of one or a few parts leads to the failure of other parts, growing progressively as a result of positive feedback. This can occur when a single part fails, increasing the probability that other portions of the system fail. Such a failure may happen in many types of systems, including power transmission, computer networking, finance, transportation systems, organisms, the human body, and ecosystems. Cascading failures may occur when one part of the system fails. When this happens, other parts must then compensate for the failed component. This in turn overloads these nodes, causing them to fail as well, prompting additional nodes to fail one after another. In power transmission, cascading failure is common in power grids when one of the elements fails (completely or partially) and shifts its load to nearby elements in the system. Those nearby elements are then pushed beyond t ...
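The load-shifting mechanism described above can be sketched as a toy simulation. The model is an assumption made for illustration: a failed node's load is split equally among all surviving nodes, and a node fails as soon as its load exceeds its capacity. The function name `cascade` and its parameters are hypothetical.

```python
def cascade(loads, capacities, first_failure):
    """Return the set of node indices that have failed once the cascade stops."""
    loads = [float(x) for x in loads]
    failed = {first_failure}
    pending = [first_failure]  # failed nodes whose load still has to be shifted
    while pending:
        node = pending.pop()
        survivors = [i for i in range(len(loads)) if i not in failed]
        if not survivors:
            break  # nothing left to absorb the load
        share = loads[node] / len(survivors)
        loads[node] = 0.0
        for i in survivors:
            loads[i] += share
        for i in survivors:
            if loads[i] > capacities[i]:
                failed.add(i)
                pending.append(i)
    return failed


if __name__ == "__main__":
    # Four nodes at 80% of capacity: one failure overloads all the others.
    print(cascade([80, 80, 80, 80], [100, 100, 100, 100], first_failure=0))
    # With more headroom the same failure is absorbed.
    print(cascade([80, 80, 80, 80], [120, 120, 120, 120], first_failure=0))
```

The two runs differ only in spare capacity, which is what decides whether the initial failure stays isolated or spreads.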

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. (S.V. Adve et al. (November 2008). "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ...)
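As a rough sketch of dividing a large problem into smaller ones, the example below splits a list into chunks and sums the chunks through a worker pool. The names `parallel_sum` and `n_chunks` are hypothetical. It uses `ThreadPoolExecutor` to keep the example self-contained; in CPython, threads mainly illustrate the decomposition, and `ProcessPoolExecutor` is the usual choice when CPU-bound work should actually run on several cores.

```python
from concurrent.futures import ThreadPoolExecutor
from math import ceil


def parallel_sum(numbers, n_chunks=4):
    """Sum a list by splitting it into chunks and summing the chunks concurrently."""
    size = max(1, ceil(len(numbers) / n_chunks))
    chunks = [numbers[i:i + size] for i in range(0, len(numbers), size)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        partial_sums = list(pool.map(sum, chunks))  # one sub-problem per chunk
    return sum(partial_sums)  # combine the partial results


if __name__ == "__main__":
    print(parallel_sum(list(range(1, 101)), n_chunks=4))
```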

Caching (computing)

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near dat ...
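The hit and miss behaviour can be illustrated with a small software cache that counts both outcomes. This is a minimal sketch: `CountingCache` and its `compute` callback are hypothetical names, and the cache is unbounded for simplicity.

```python
class CountingCache:
    """Cache results of `compute` and count hits and misses."""

    def __init__(self, compute):
        self._compute = compute  # the slow computation or slow data store
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:  # cache hit: serve the stored value
            self.hits += 1
            return self._store[key]
        self.misses += 1  # cache miss: compute, then remember the result
        value = self._compute(key)
        self._store[key] = value
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


if __name__ == "__main__":
    cache = CountingCache(lambda x: x * x)
    for key in (3, 3, 4, 3):
        cache.get(key)
    print(cache.hits, cache.misses, cache.hit_ratio())
```

Repeated requests for the same key show temporal locality at work: only the first request pays for the computation.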

Web Server

A web server is computer software and underlying hardware that accepts requests via HTTP (the network protocol created to distribute web content) or its secure variant HTTPS. A user agent, commonly a web browser or web crawler, initiates communication by making a request for a web page or other resource using HTTP, and the server responds with the content of that resource or an error message. A web server can also accept and store resources sent from the user agent if configured to do so. The hardware used to run a web server can vary according to the volume of requests that it needs to handle. At the low end of the range are embedded systems, such as a router that runs a small web server as its configuration interface. A high-traffic Internet website might handle requests with hundreds of servers that run on racks of high-speed computers. A reso ...

Memcached

Memcached (pronounced variously /mɛmkæʃˈdiː/ mem-cash-dee or /ˈmɛmkæʃt/ mem-cashed) is a general-purpose distributed memory-caching system. It is often used to speed up dynamic database-driven websites by caching data and objects in RAM to reduce the number of times an external data source (such as a database or API) must be read. Memcached is free and open-source software, licensed under the Revised BSD license. Memcached runs on Unix-like operating systems (Linux and macOS) and on Microsoft Windows. It depends on the libevent library. Memcached's APIs provide a very large hash table distributed across multiple machines. When the table is full, subsequent inserts cause older data to be purged in least recently used (LRU) order. Applications using Memcached typically layer requests and additions into RAM before falling back on a slower backing store, such as a database. Memcached has no internal mechanism to track misses which may happen. However, some third p ...
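The LRU purging rule can be sketched with a small in-process cache. This illustrates the eviction policy only; it is not Memcached's API or implementation, and the `LRUCache` class, its `capacity` parameter, and its method names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        if key not in self._items:
            return None  # miss: the caller falls back to the backing store
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # purge the least recently used entry


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.set("a", 1)
    cache.set("b", 2)
    cache.get("a")     # "a" is now more recently used than "b"
    cache.set("c", 3)  # the table is full, so "b" is purged
    print(cache.get("a"), cache.get("b"), cache.get("c"))
```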

Concurrency (computer science)

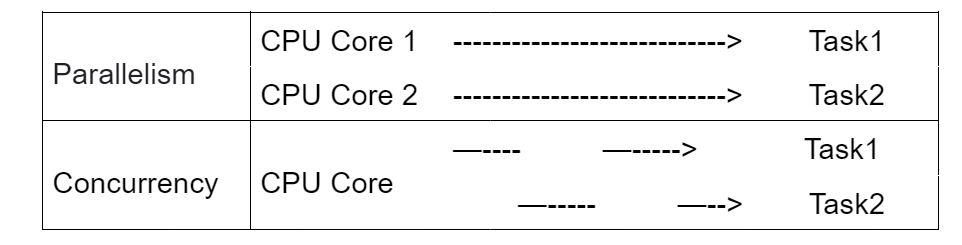

Concurrency refers to the ability of a system to execute multiple tasks through simultaneous execution or time-sharing (context switching), sharing resources and managing interactions. Concurrency improves responsiveness, throughput, and scalability in modern computing, including:

* Operating systems and embedded systems
* Distributed systems, parallel computing, and high-performance computing
* Database systems, web applications, and cloud computing

Related concepts

Concurrency is a broader concept that encompasses several related ideas, including:

* Parallelism (simultaneous execution on multiple processing units). Parallelism executes tasks independently on multiple CPU cores. Concurrency allows for multiple threads of control at the program level, which can use parallelism or time-slicing to perform these tasks. Programs may exhibit parallelism only, concurrency only, both parallelism and concurrency, or neither.
* Multi-threading and multi-processing (shared ...

Congestion Collapse

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads either only to a small increase or even a decrease in network throughput. Network protocols that use aggressive retransmissions to compensate for packet loss due to congestion can increase congestion, even after the initial load has been reduced to a level that would not normally have induced network congestion. Such networks exhibit two stable states under the same level of load. The stable state with low throughput is known as congestive collapse. Networks use congestion control and congestion avoidance techniques to try to avoid collapse. These include: exponential backoff in protocols such as CSMA/CA in 802.11 and the similar CSMA/CD ...
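Exponential backoff can be sketched as follows. This is a simplified illustration of binary exponential backoff, not the exact rules of any particular standard: the function names are hypothetical, and the cap of 10 on the exponent is an assumed parameter.

```python
import random


def backoff_window(attempt, max_exponent=10):
    """Largest number of slots a sender may wait after `attempt` failed tries."""
    # The window doubles with every failure until the exponent reaches the cap.
    return 2 ** min(attempt, max_exponent) - 1


def backoff_slots(attempt, rng=random, max_exponent=10):
    """Pick a random wait, in slots, from the current backoff window."""
    return rng.randint(0, backoff_window(attempt, max_exponent))


if __name__ == "__main__":
    for attempt in range(1, 6):
        print(attempt, backoff_window(attempt), backoff_slots(attempt))
```

Because the waiting window grows after each failure and the wait is chosen at random, competing senders spread out their retransmissions instead of retrying in lockstep and adding to the congestion.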

Engineering Failures

Engineering is the practice of using natural science, mathematics, and the engineering design process to solve problems within technology, increase efficiency and productivity, and improve systems. Modern engineering comprises many subfields which include designing and improving infrastructure, machinery, vehicles, electronics, materials, and energy systems. The discipline of engineering encompasses a broad range of more specialized fields of engineering, each with a more specific emphasis for applications of mathematics and science. See glossary of engineering. The word engineering is derived from the Latin .

Definition

The American Engineers' Council for Professional Development (the predecessor of the Accreditation Board for Engineering and Technology aka ABET) has defined "engineering" as: ...

Computer Optimization

Mathematical optimization is the theory and computation of extrema or stationary points of functions. Optimization, optimisation, or optimality may also refer to:

* Engineering optimization
* Feedback-directed optimisation, in computing
* Optimality model in biology
* Optimality theory, in linguistics
* Optimization (role-playing games), a gaming play style
* Optimize (magazine)
* Process optimization, in business and engineering, methodologies for improving the efficiency of a production process
* Product optimization, in business and marketing, methodologies for improving the quality and desirability of a product or product concept
* Program optimization, in computing, methodologies for improving the efficiency of software
* Search engine optimization, in internet marketing
* Supply chain optimization, a methodology aiming to ensure the optimal operation of a manufacturing and distribution supply chain
* Social media optimization, in internet marketing, involves optimizing s ...