|

CAVLC

Context-adaptive variable-length coding (CAVLC) is a form of entropy coding used in H.264/MPEG-4 AVC video encoding. It is an inherently lossless compression technique, like almost all entropy-coders. In H.264/MPEG-4 AVC, it is used to encode residual, zig-zag order, blocks of transform coefficients. It is an alternative to context-adaptive binary arithmetic coding (CABAC). CAVLC requires considerably less processing to decode than CABAC, although it does not compress the data quite as effectively. CAVLC is supported in all H.264 profiles, unlike CABAC which is not supported in Baseline and Extended profiles. CAVLC is used to encode residual, zig-zag ordered 4×4 (and 2×2) blocks of transform coefficients. CAVLC is designed to take advantage of several characteristics of quantized 4×4 blocks: * After prediction, transformation and quantization, blocks are typically sparse (containing mostly zeros). * The highest non-zero coefficients after zig-zag scan are often sequences of + ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Advanced Video Coding

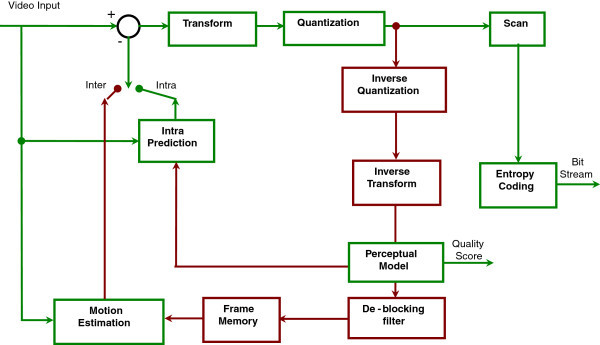

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 84–86% of video industry developers . It supports a maximum resolution of 8K UHD. The intent of the H.264/AVC project was to create a standard capable of providing good video quality at substantially lower bit rates than previous standards (i.e., half or less the bit rate of MPEG-2, H.263, or MPEG-4 Part 2), without increasing the complexity of design so much that it would be impractical or excessively expensive to implement. This was achieved with features such as a reduced-complexity integer discrete cosine transform (integer DCT), variable block-size segmentation, and multi-picture inter-picture prediction. An additional goal was to provide enough flexibility to allow the standard to be applied ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Context-adaptive Binary Arithmetic Coding

Context-adaptive binary arithmetic coding (CABAC) is a form of entropy encoding used in the H.264/MPEG-4 AVC and High Efficiency Video Coding (HEVC) standards. It is a lossless compression technique, although the video coding standards in which it is used are typically for lossy compression applications. CABAC is notable for providing much better compression than most other entropy encoding algorithms used in video encoding, and it is one of the key elements that provides the H.264/AVC encoding scheme with better compression capability than its predecessors. In H.264/MPEG-4 AVC, CABAC is only supported in the Main and higher profiles (but not the extended profile) of the standard, as it requires a larger amount of processing to decode than the simpler scheme known as context-adaptive variable-length coding (CAVLC) that is used in the standard's Baseline profile. CABAC is also difficult to parallelize and vectorize, so other forms of parallelism (such as spatial region paralleli ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Variable-length Code

In coding theory, a variable-length code is a code which maps source symbols to a ''variable'' number of bits. The equivalent concept in computer science is '' bit string''. Variable-length codes can allow sources to be compressed and decompressed with ''zero'' error (lossless data compression) and still be read back symbol by symbol. With the right coding strategy, an independent and identically-distributed source may be compressed almost arbitrarily close to its entropy. This is in contrast to fixed-length coding methods, for which data compression is only possible for large blocks of data, and any compression beyond the logarithm of the total number of possibilities comes with a finite (though perhaps arbitrarily small) probability of failure. Some examples of well-known variable-length coding strategies are Huffman coding, Lempel–Ziv coding, arithmetic coding, and context-adaptive variable-length coding. Codes and their extensions The extension of a code is the m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Entropy Coding

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared by Shannon's source coding theorem, which states that any lossless data compression method must have an expected code length greater than or equal to the entropy of the source. More precisely, the source coding theorem states that for any source distribution, the expected code length satisfies \operatorname E_ ell(d(x))\geq \operatorname E_ \log_b(P(x))/math>, where \ell is the function specifying the number of symbols in a code word, d is the coding function, b is the number of symbols used to make output codes and P is the probability of the source symbol. An entropy coding attempts to approach this lower bound. Two of the most common entropy coding techniques are Huffman coding and arithmetic coding. If the approximate entropy characteristics of a data stream are known in advance (especially for signal compression), a simple ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lossless Compression

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: Some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with specific assumptions about what kinds of redundancy the uncompressed data are likely to contain. Lo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lossless Compression

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: Some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with specific assumptions about what kinds of redundancy the uncompressed data are likely to contain. Lo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Entropy Coding

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared by Shannon's source coding theorem, which states that any lossless data compression method must have an expected code length greater than or equal to the entropy of the source. More precisely, the source coding theorem states that for any source distribution, the expected code length satisfies \operatorname E_ ell(d(x))\geq \operatorname E_ \log_b(P(x))/math>, where \ell is the function specifying the number of symbols in a code word, d is the coding function, b is the number of symbols used to make output codes and P is the probability of the source symbol. An entropy coding attempts to approach this lower bound. Two of the most common entropy coding techniques are Huffman coding and arithmetic coding. If the approximate entropy characteristics of a data stream are known in advance (especially for signal compression), a simple ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

MPEG

The Moving Picture Experts Group (MPEG) is an alliance of working groups established jointly by International Organization for Standardization, ISO and International Electrotechnical Commission, IEC that sets standards for media coding, including compression coding of audio compression (data), audio, video compression, video, graphics, and Compression of Genomic Sequencing Data, genomic data; and transmission and Container format (digital), file formats for various applications.John Watkinson, ''The MPEG Handbook'', p. 1 Together with Joint Photographic Experts Group, JPEG, MPEG is organized under ISO/IEC JTC 1/ISO/IEC JTC 1/SC 29, SC 29 – ''Coding of audio, picture, multimedia and hypermedia information'' (ISO/IEC Joint Technical Committee 1, Subcommittee 29). MPEG formats are used in various multimedia systems. The most well known older MPEG media formats typically use MPEG-1, MPEG-2, and MPEG-4 AVC media coding and MPEG-2 systems MPEG transport stream, transport streams an ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |