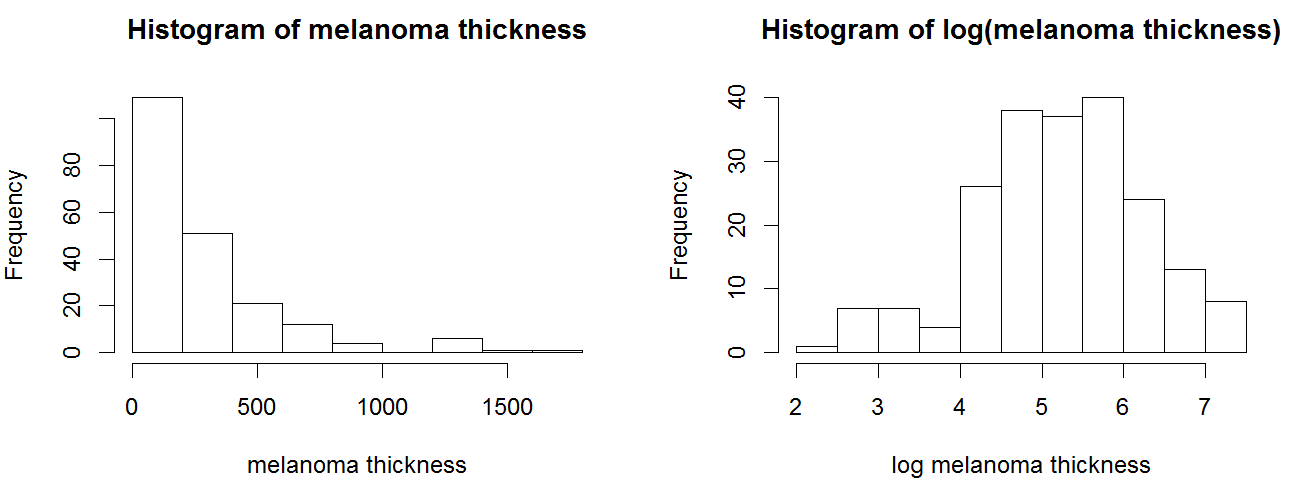

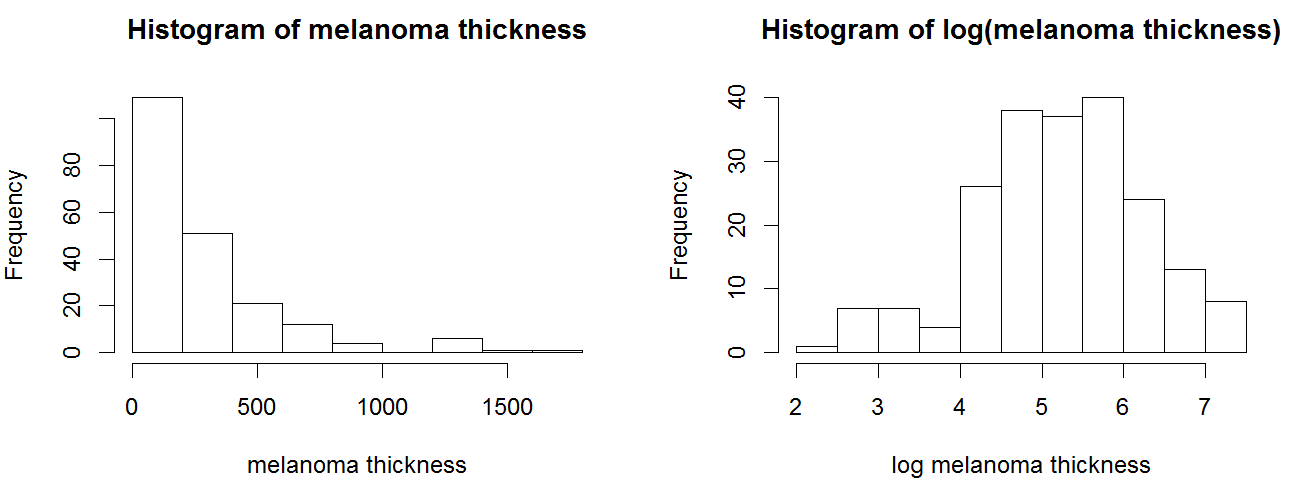

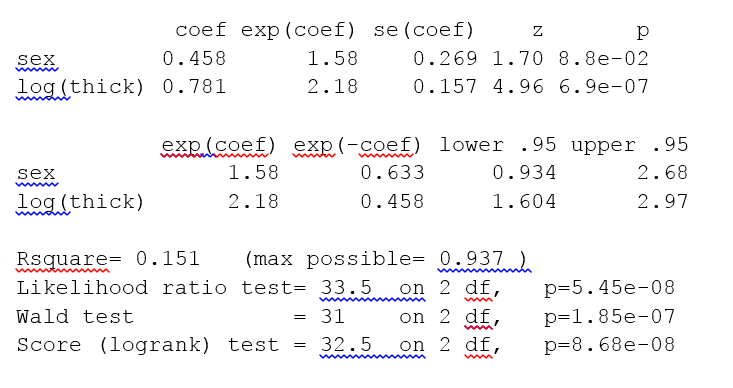

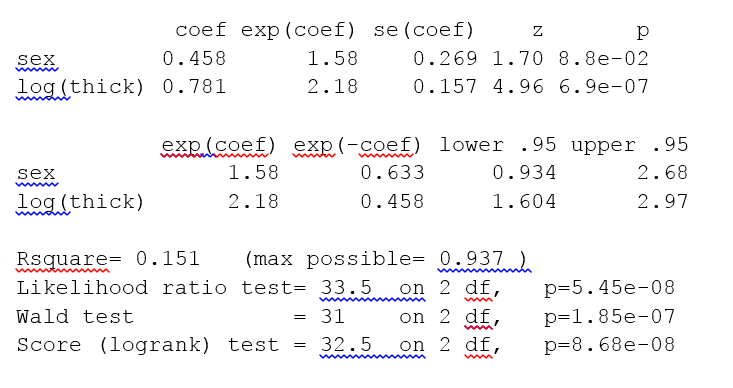

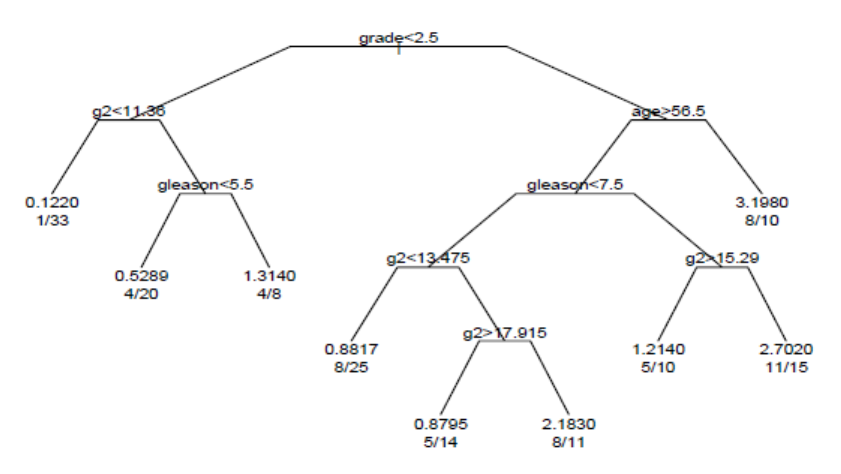

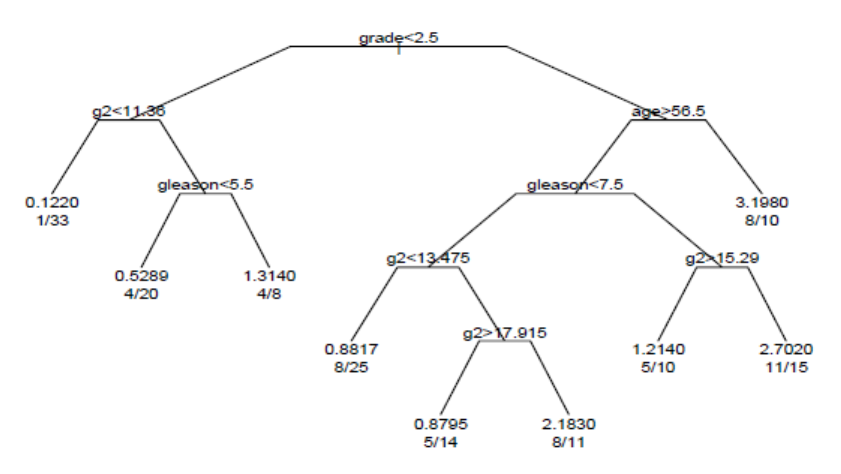

Survival analysis is a branch of statistics for analyzing the expected duration of time until one event occurs, such as death in biological organisms and failure in mechanical systems. This topic is called reliability theory, reliability analysis or reliability engineering in engineering, duration analysis or duration modelling in economics, and event history analysis in sociology. Survival analysis attempts to answer certain questions, such as: What is the proportion of a population which will survive past a certain time? Of those that survive, at what rate will they die or fail? Can multiple causes of death or failure be taken into account? How do particular circumstances or characteristics increase or decrease the probability of survival?

To answer such questions, it is necessary to define "lifetime". In the case of biological survival, death is unambiguous, but for mechanical reliability, failure may not be well-defined, for there may well be mechanical systems in which failure is partial, a matter of degree, or not otherwise localized in time. Even in biological problems, some events (for example, heart attack or other organ failure) may have the same ambiguity. The theory outlined below assumes well-defined events at specific times; other cases may be better treated by models which explicitly account for ambiguous events.

More generally, survival analysis involves the modelling of time to event data; in this context, death or failure is considered an "event" in the survival analysis literature – traditionally only a single event occurs for each subject, after which the organism or mechanism is dead or broken. ''Recurring event'' or ''repeated event'' models relax that assumption. The study of recurring events is relevant in systems reliability, and in many areas of social sciences and medical research.

Introduction to survival analysis

Survival analysis is used in several ways:

*To describe the survival times of members of a group

**Life tables

**Kaplan–Meier curves

**Survival function

**Hazard function

*To compare the survival times of two or more groups

**Log-rank test

*To describe the effect of categorical or quantitative variables on survival

**Cox proportional hazards regression

**Parametric survival models

**Survival trees

**Survival random forests

Definitions of common terms in survival analysis

The following terms are commonly used in survival analyses:

*Event: Death, disease occurrence, disease recurrence, recovery, or other experience of interest

*Time: The time from the beginning of an observation period (such as surgery or beginning treatment) to (i) an event, or (ii) end of the study, or (iii) loss of contact or withdrawal from the study.

*Censoring / Censored observation: Censoring occurs when we have some information about individual survival time, but we do not know the survival time exactly. The subject is censored in the sense that nothing is observed or known about that subject after the time of censoring. A censored subject may or may not have an event after the end of observation time.

*Survival function S(t): The probability that a subject survives longer than time t.

Example: Acute myelogenous leukemia survival data

This example uses the Acute Myelogenous Leukemia survival data set "aml" from the "survival" package in R. The data set is from Miller (1997) and the question is whether the standard course of chemotherapy should be extended ('maintained') for additional cycles.

The aml data set sorted by survival time is shown in the box.

* Time is indicated by the variable "time", which is the survival or censoring time

* Event (recurrence of aml cancer) is indicated by the variable "status". 0= no event (censored), 1= event (recurrence)

* Treatment group: the variable "x" indicates if maintenance chemotherapy was given

The last observation (11), at 161 weeks, is censored. Censoring indicates that the patient did not have an event (no recurrence of aml cancer) while under observation. Another subject, observation 3, was censored at 13 weeks (indicated by status=0). This subject was in the study for only 13 weeks, and the aml cancer did not recur during those 13 weeks. It is possible that this patient was enrolled near the end of the study, so that they could be observed for only 13 weeks. It is also possible that the patient was enrolled early in the study, but was lost to follow up or withdrew from the study. The table shows that other subjects were censored at 16, 28, and 45 weeks (observations 17, 6, and 9 with status=0). The remaining subjects all experienced events (recurrence of aml cancer) while in the study. The question of interest is whether recurrence occurs later in maintained patients than in non-maintained patients.
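The censoring pattern described above can be checked with a short sketch. The article's analyses were run in R; the Python below is only an illustration. The values are transcribed by hand from the aml data set, so they are an assumption to be verified against the "survival" package, and the names `AML`, `censored` and `n_events` are hypothetical.

```python
# aml data, transcribed by hand (assumption: verify against the R "survival" package).
# Each record is (time in weeks, status, maintained); status 1 = recurrence, 0 = censored.
# Records are in observation order: 1-11 maintained, 12-23 non-maintained.
AML = [
    (9, 1, True), (13, 1, True), (13, 0, True), (18, 1, True), (23, 1, True),
    (28, 0, True), (31, 1, True), (34, 1, True), (45, 0, True), (48, 1, True),
    (161, 0, True),
    (5, 1, False), (5, 1, False), (8, 1, False), (8, 1, False), (12, 1, False),
    (16, 0, False), (23, 1, False), (27, 1, False), (30, 1, False),
    (33, 1, False), (43, 1, False), (45, 1, False),
]

# (observation number, time) for every censored subject.
censored = [(i + 1, t) for i, (t, status, _) in enumerate(AML) if status == 0]

# Number of subjects who experienced the event (recurrence).
n_events = sum(status for _, status, _ in AML)

print(censored)
print(n_events)
```

Under this transcription, the censored observations are 3, 6, 9, 11 and 17, matching the five censoring times listed in the text.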

Kaplan–Meier plot for the aml data

The survival function ''S''(''t'') is the probability that a subject survives longer than time ''t''. ''S''(''t'') is theoretically a smooth curve, but it is usually estimated using the Kaplan–Meier (KM) curve. The graph shows the KM plot for the aml data and can be interpreted as follows:

*The ''x'' axis is time, from zero (when observation began) to the last observed time point.

*The ''y'' axis is the proportion of subjects surviving. At time zero, 100% of the subjects are alive without an event.

*The solid line (similar to a staircase) shows the progression of event occurrences.

*A vertical drop indicates an event. In the aml table shown above, two subjects had events at five weeks, two had events at eight weeks, one had an event at nine weeks, and so on. These events at five weeks, eight weeks and so on are indicated by the vertical drops in the KM plot at those time points.

*At the far right end of the KM plot there is a tick mark at 161 weeks. The vertical tick mark indicates that a patient was censored at this time. In the aml data table five subjects were censored, at 13, 16, 28, 45 and 161 weeks. There are five tick marks in the KM plot, corresponding to these censored observations.
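The staircase in the KM plot comes from the product-limit calculation: at each event time the current estimate is multiplied by the fraction of at-risk subjects who did not have an event. The following is a minimal Python sketch of that calculation, not the R code behind the plot. The function name `kaplan_meier` is hypothetical and the data are a hand transcription of the aml data set (an assumption to be verified against the "survival" package).

```python
from collections import Counter

def kaplan_meier(times, events):
    """Return [(t, S(t))] at each distinct event time (product-limit estimate)."""
    deaths = Counter(t for t, e in zip(times, events) if e == 1)
    curve, surv = [], 1.0
    for t in sorted(deaths):
        # Subjects still under observation just before t (censored at t counts as at risk).
        at_risk = sum(1 for u in times if u >= t)
        surv *= 1.0 - deaths[t] / at_risk
        curve.append((t, surv))
    return curve

# Hand-transcribed aml data: maintained group first, then non-maintained.
times = [9, 13, 13, 18, 23, 28, 31, 34, 45, 48, 161,
         5, 5, 8, 8, 12, 16, 23, 27, 30, 33, 43, 45]
events = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0,
          1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1]

km = kaplan_meier(times, events)
for t, s in km[:3]:
    print(t, round(s, 4))
```

Censored observations do not produce a drop; they only reduce the number at risk at later event times.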

Life table for the aml data

A life table summarizes survival data in terms of the number of events and the proportion surviving at each event time point. The life table for the aml data, created using the R software, is shown.

The life table summarizes the events and the proportion surviving at each event time point. The columns in the life table have the following interpretation:

*time gives the time points at which events occur.

*n.risk is the number of subjects at risk immediately before the time point, t. Being "at risk" means that the subject has not had an event before time t, and has not been censored before time t. By the usual convention, a subject censored exactly at time t is still counted as at risk at t.

*n.event is the number of subjects who have events at time t.

*survival is the proportion surviving, as determined using the Kaplan–Meier product-limit estimate.

*std.err is the standard error of the estimated survival. The standard error of the Kaplan–Meier product-limit estimate is calculated using Greenwood's formula, and depends on the number at risk (n.risk in the table), the number of deaths (n.event in the table) and the proportion surviving (survival in the table).

*lower 95% CI and upper 95% CI are the lower and upper 95% confidence bounds for the proportion surviving.
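The columns above can be reproduced with a short sketch. This is an illustrative Python version under stated assumptions, not the R code that produced the table: the function name `life_table` is hypothetical, the data are a hand transcription of the aml data set, and confidence bounds are left out because several interval types are in common use.

```python
import math
from collections import Counter

def life_table(times, events):
    """Rows of time, n_risk, n_event, survival, std_err (Greenwood's formula)."""
    deaths = Counter(t for t, e in zip(times, events) if e == 1)
    rows, surv, greenwood_sum = [], 1.0, 0.0
    for t in sorted(deaths):
        n = sum(1 for u in times if u >= t)   # number at risk just before t
        d = deaths[t]                         # events at t
        surv *= 1.0 - d / n
        # Greenwood: Var[S(t)] ~ S(t)^2 * sum of d / (n * (n - d)) over event times <= t.
        greenwood_sum += d / (n * (n - d)) if n > d else float("nan")
        rows.append({"time": t, "n_risk": n, "n_event": d,
                     "survival": surv, "std_err": surv * math.sqrt(greenwood_sum)})
    return rows

# Hand-transcribed aml data (assumption: verify against the R "survival" package).
times = [9, 13, 13, 18, 23, 28, 31, 34, 45, 48, 161,
         5, 5, 8, 8, 12, 16, 23, 27, 30, 33, 43, 45]
events = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0,
          1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1]

table = life_table(times, events)
first = table[0]
print(first["time"], first["n_risk"], first["n_event"],
      round(first["survival"], 4), round(first["std_err"], 4))
```

The standard error is undefined (reported here as NaN) if every subject still at risk has an event at the same time, since the survival estimate then drops to zero.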

Log-rank test: Testing for differences in survival in the aml data

The log-rank test compares the survival times of two or more groups. This example uses a log-rank test for a difference in survival in the maintained versus non-maintained treatment groups in the aml data. The graph shows KM plots for the aml data broken out by treatment group, which is indicated by the variable "x" in the data.

The null hypothesis for a log-rank test is that the groups have the same survival. The expected number of subjects surviving at each time point in each group is adjusted for the number of subjects at risk in the groups at each event time. The log-rank test determines if the observed number of events in each group is significantly different from the expected number. The formal test is based on a chi-squared statistic. When the log-rank statistic is large, it is evidence for a difference in the survival times between the groups. The log-rank statistic approximately has a chi-squared distribution with one degree of freedom, and the p-value is calculated using the chi-squared test.

For the example data, the log-rank test for difference in survival gives a p-value of p=0.0653, indicating that the treatment groups do not differ significantly in survival, assuming an alpha level of 0.05. The sample size of 23 subjects is modest, so there is little power to detect differences between the treatment groups. The chi-squared test is based on asymptotic approximation, so the p-value should be regarded with caution for small sample sizes.
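The observed-versus-expected comparison behind the log-rank test can be sketched as follows. This is a minimal two-group Python illustration, not the R routine used for the article. The function name `logrank_two_groups` is hypothetical and the data are a hand transcription of the aml data set (an assumption to be verified against the "survival" package). The p-value uses the identity that the upper tail of a chi-squared distribution with one degree of freedom at x equals erfc(sqrt(x/2)).

```python
import math

def logrank_two_groups(times, events, in_group1):
    """Two-sample log-rank test; returns (observed1, expected1, chi2, p)."""
    event_times = sorted({t for t, e in zip(times, events) if e == 1})
    obs1 = exp1 = var = 0.0
    for t in event_times:
        n = sum(1 for u in times if u >= t)                           # at risk, both groups
        n1 = sum(1 for u, g in zip(times, in_group1) if u >= t and g)  # at risk, group 1
        d = sum(1 for u, e in zip(times, events) if u == t and e == 1)
        d1 = sum(1 for u, e, g in zip(times, events, in_group1)
                 if u == t and e == 1 and g)
        obs1 += d1
        exp1 += d * n1 / n            # expected events in group 1 under the null
        if n > 1:                     # hypergeometric variance of d1
            var += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    chi2 = (obs1 - exp1) ** 2 / var
    p = math.erfc(math.sqrt(chi2 / 2))  # chi-squared upper tail, 1 df
    return obs1, exp1, chi2, p

# Hand-transcribed aml data: first 11 subjects maintained, last 12 non-maintained.
times = [9, 13, 13, 18, 23, 28, 31, 34, 45, 48, 161,
         5, 5, 8, 8, 12, 16, 23, 27, 30, 33, 43, 45]
events = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 0,
          1, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1]
maintained = [True] * 11 + [False] * 12

obs1, exp1, chi2, p = logrank_two_groups(times, events, maintained)
print(obs1, round(exp1, 2), round(chi2, 2), round(p, 4))
```

With this transcription, the maintained group has fewer observed recurrences than expected under the null hypothesis, and the p-value agrees with the value quoted in the text.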

Cox proportional hazards (PH) regression analysis

Kaplan–Meier curves and log-rank tests are most useful when the predictor variable is categorical (e.g., drug vs. placebo), or takes a small number of values (e.g., drug doses 0, 20, 50, and 100 mg/day) that can be treated as categorical. The log-rank test and KM curves don't work easily with quantitative predictors such as gene expression, white blood count, or age. For quantitative predictor variables, an alternative method is Cox proportional hazards regression analysis. Cox PH models also work with categorical predictor variables, which are encoded as indicator or dummy variables. The log-rank test is a special case of a Cox PH analysis, and can be performed using Cox PH software.

Example: Cox proportional hazards regression analysis for melanoma

This example uses the melanoma data set from Dalgaard Chapter 14. Data are in the R package ISwR. The Cox proportional hazards regression using R gives the results shown in the box. The Cox regression results are interpreted as follows.

*Sex is encoded as a numeric vector (1: female, 2: male). The R summary for the Cox model gives the hazard ratio (HR) for the second group relative to the first group, that is, male versus female.

*coef = 0.662 is the estimated logarithm of the hazard ratio for males versus females.

*exp(coef) = 1.94 = exp(0.662) - The log of the hazard ratio (coef= 0.662) is transformed to the hazard ratio using exp(coef). The summary for the Cox model gives the hazard ratio for the second group relative to the first group, that is, male versus female. The estimated hazard ratio of 1.94 indicates that males have higher risk of death (lower survival rates) than females, in these data.

*se(coef) = 0.265 is the standard error of the log hazard ratio.

*z = 2.5 = coef/se(coef) = 0.662/0.265. Dividing the coef by its standard error gives the z score.

*p=0.013. The p-value corresponding to z=2.5 for sex is p=0.013, indicating that there is a significant difference in survival as a function of sex.

The summary output also gives upper and lower 95% confidence intervals for the hazard ratio: lower 95% bound = 1.15; upper 95% bound = 3.26.

Finally, the output gives p-values for three alternative tests for overall significance of the model:

*Likelihood ratio test = 6.15 on 1 df, p=0.0131

*Wald test = 6.24 on 1 df, p=0.0125

*Score (log-rank) test = 6.47 on 1 df, p=0.0110

These three tests are asymptotically equivalent. For large enough N, they will give similar results. For small N, they may differ somewhat. The last row, "Score (logrank) test" is the result for the log-rank test, with p=0.011, the same result as the log-rank test, because the log-rank test is a special case of a Cox PH regression. The Likelihood ratio test has better behavior for small sample sizes, so it is generally preferred.
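The quantities in the summary are linked by simple arithmetic, which the following sketch reproduces from the rounded coefficient and standard error quoted above. It is an illustration in Python rather than the R output itself. The variable names are hypothetical, and 1.96 is used as the usual approximation to the 97.5th percentile of the standard normal distribution.

```python
import math

coef, se = 0.662, 0.265  # log hazard ratio and its standard error, as quoted in the text

hazard_ratio = math.exp(coef)                 # exp(coef)
z = coef / se                                 # z score
p_value = math.erfc(abs(z) / math.sqrt(2))    # two-sided p-value from the normal distribution
wald = z ** 2                                 # Wald chi-squared statistic on 1 df

# 95% confidence interval: computed on the log scale, then exponentiated.
ci_lower = math.exp(coef - 1.96 * se)
ci_upper = math.exp(coef + 1.96 * se)

print(round(hazard_ratio, 2), round(z, 1), round(wald, 2))
print(round(ci_lower, 2), round(ci_upper, 2))
```

Because the inputs are rounded, the p-value computed this way is about 0.0125, in line with the Wald test p-value listed above.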


Cox model using a covariate in the melanoma data

The Cox model extends the log-rank test by allowing the inclusion of additional covariates. This example uses the melanoma data set where the predictor variables include a continuous covariate, the thickness of the tumor (variable name = "thick"). In the histograms, the thickness values are positively skewed and do not have a Gaussian-like, symmetric probability distribution. Regression models, including the Cox model, generally give more reliable results with normally-distributed variables. For this example we may use a logarithmic transform. The log of the thickness of the tumor looks to be more normally distributed, so the Cox models will use log thickness. The Cox PH analysis gives the results in the box.

The p-values for all three overall tests (likelihood ratio, Wald, and score) are significant, indicating that the model is significant. The p-value for log(thick) is 6.9e-07, with a hazard ratio HR = exp(coef) = 2.18, indicating a strong relationship between the thickness of the tumor and increased risk of death.
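Because the covariate is log thickness, the hazard ratio of 2.18 applies to a one-unit increase in log(thick), not to a one-unit increase in thickness. The sketch below converts it to a fold change in thickness. It assumes the natural logarithm was used (the default for log() in R), which the text does not state, and the function name `hazard_multiplier` is hypothetical. Under the model the hazard is proportional to thickness raised to the power coef, so a k-fold increase in thickness multiplies the hazard by k^coef.

```python
import math

def hazard_multiplier(hr_per_log_unit, fold_change):
    """Hazard multiplier for a fold change in a covariate entered as its natural log."""
    coef = math.log(hr_per_log_unit)   # recover the coefficient from HR = exp(coef)
    return fold_change ** coef         # exp(coef * ln(fold_change))

# Doubling tumor thickness, using the HR of 2.18 per unit of log(thick) from the text.
print(round(hazard_multiplier(2.18, 2.0), 2))
```

Under that assumption, doubling the thickness corresponds to a hazard roughly 1.7 times as high, while an e-fold (about 2.7-fold) increase gives back the reported 2.18.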

By contrast, the p-value for sex is now p=0.088. The hazard ratio HR = exp(coef) = 1.58, with a 95% confidence interval of 0.934 to 2.68. Because the confidence interval for HR includes 1, these results indicate that sex makes a smaller contribution to the difference in the HR after controlling for the thickness of the tumor, and only trends toward significance. Examination of graphs of log(thickness) by sex and a t-test of log(thickness) by sex both indicate that there is a significant difference between men and women in the thickness of the tumor when they first see the clinician.

The Cox model assumes that the hazards are proportional. The proportional hazard assumption may be tested using the R function cox.zph(). A p-value less than 0.05 is taken as evidence that the hazards are not proportional. For the melanoma data we obtain p=0.222. Hence, we cannot reject the null hypothesis of the hazards being proportional. Additional tests and graphs for examining a Cox model are described in the textbooks cited.


Extensions to Cox models

Cox models can be extended to deal with variations on the simple analysis.

*Stratification. The subjects can be divided into strata, where subjects within a stratum are expected to be relatively more similar to each other than to randomly chosen subjects from other strata. The regression parameters are assumed to be the same across the strata, but a different baseline hazard may exist for each stratum. Stratification is useful for analyses using matched subjects, for dealing with patient subsets, such as different clinics, and for dealing with violations of the proportional hazard assumption.

*Time-varying covariates. Some variables, such as gender and treatment group, generally stay the same in a clinical trial. Other clinical variables, such as serum protein levels or dose of concomitant medications, may change over the course of a study. Cox models may be extended for such time-varying covariates.

Tree-structured survival models

The Cox PH regression model is a linear model. It is similar to linear regression and logistic regression. Specifically, these methods assume that a single line, curve, plane, or surface is sufficient to separate groups (alive, dead) or to estimate a quantitative response (survival time). In some cases alternative partitions give more accurate classification or quantitative estimates. One set of alternative methods is tree-structured survival models, including survival random forests. Tree-structured survival models may give more accurate predictions than Cox models. Examining both types of models for a given data set is a reasonable strategy.

Example survival tree analysis

This example of a survival tree analysis uses the R package "rpart". The example is based on 146 stage C prostate cancer patients in the data set stagec in rpart. Rpart and the stagec example are described in Atkinson and Therneau (1997), which is also distributed as a vignette of the rpart package. The variables in stagec are:

*pgtime: time to progression, or last follow-up free of progression

*pgstat: status at last follow-up (1=progressed, 0=censored)

*age: age at diagnosis

*eet: early endocrine therapy (1=no, 0=yes)

*ploidy: diploid/tetraploid/aneuploid DNA pattern

*g2: % of cells in G2 phase

*grade: tumor grade (1-4)

*gleason: Gleason grade (3-10)

The survival tree produced by the analysis is shown in the figure.

Each branch in the tree indicates a split on the value of a variable. For example, the root of the tree splits subjects with grade < 2.5 versus subjects with grade 2.5 or greater. The terminal nodes indicate the number of subjects in the node, the number of subjects who have events, and the relative event rate compared to the root. In the node on the far left, the values 1/33 indicate that one of the 33 subjects in the node had an event, and that the relative event rate is 0.122. In the node on the far right bottom, the values 11/15 indicate that 11 of 15 subjects in the node had an event, and the relative event rate is 2.7.
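The quantities reported in a node (events, subjects, and an event rate relative to the root) can be illustrated with a single split. The sketch below is a deliberate simplification and not rpart's algorithm: it uses a crude rate of events per unit of follow-up time, whereas rpart's own splitting criterion and rate estimates are more involved. The records are invented toy values, not the stagec data, and the function names are illustrative.

```python
# Toy records: (follow-up time, event indicator, tumor grade). Values are invented.
records = [
    (9.5, 0, 1), (8.0, 0, 2), (7.2, 1, 2), (10.1, 0, 2),
    (3.1, 1, 3), (4.4, 1, 3), (6.0, 0, 3), (1.8, 1, 4), (2.5, 1, 4),
]

def event_rate(rows):
    """Events per unit of follow-up time in a group of subjects."""
    events = sum(event for _, event, _ in rows)
    exposure = sum(time for time, _, _ in rows)
    return events / exposure

def split_summary(rows, threshold):
    """Split on grade < threshold and summarize each child node."""
    root_rate = event_rate(rows)
    left = [r for r in rows if r[2] < threshold]
    right = [r for r in rows if r[2] >= threshold]
    summary = {}
    for name, node in (("left", left), ("right", right)):
        summary[name] = {
            "events": sum(event for _, event, _ in node),
            "n": len(node),
            "relative_rate": event_rate(node) / root_rate,
        }
    return summary

nodes = split_summary(records, threshold=2.5)
for name, s in nodes.items():
    print(f"{name}: {s['events']}/{s['n']} relative rate {s['relative_rate']:.2f}")
```

A relative rate below 1 marks a node whose subjects fare better than the root as a whole, and a rate above 1 marks a node that fares worse.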

Survival random forests

An alternative to building a single survival tree is to build many survival trees, where each tree is constructed using a sample of the data, and average the trees to predict survival. This is the method underlying the survival random forest models. Survival random forest analysis is available in the R package "randomForestSRC". The randomForestSRC package includes an example survival random forest analysis using the data set pbc. This data is from the Mayo Clinic Primary Biliary Cirrhosis (PBC) trial of the liver conducted between 1974 and 1984. In the example, the random forest survival model gives more accurate predictions of survival than the Cox PH model. The prediction errors are estimated by bootstrap re-sampling.

Deep Learning survival models

Recent advancements in deep representation learning have been extended to survival estimation. The DeepSurv model proposes to replace the log-linear parameterization of the CoxPH model with a multi-layer perceptron. Further extensions like Deep Survival Machines and Deep Cox Mixtures involve the use of latent variable mixture models to model the time-to-event distribution as a mixture of parametric or semi-parametric distributions while jointly learning representations of the input covariates. Deep learning approaches have shown superior performance especially on complex input data modalities such as images and clinical time-series.

General formulation

Survival function

The object of primary interest is the survival function, conventionally denoted ''S'', which is defined as

$$S(t) = \Pr(T > t)$$

where ''t'' is some time, ''T'' is a random variable denoting the time of death, and "Pr" stands for probability. That is, the survival function is the probability that the time of death is later than some specified time ''t''.

The survival function is also called the ''survivor function'' or ''survivorship function'' in problems of biological survival, and the ''reliability function'' in mechanical survival problems. In the latter case, the reliability function is denoted ''R''(''t'').

Usually one assumes ''S''(0) = 1, although it could be less than 1 if there is the possibility of immediate death or failure.

The survival function must be non-increasing: ''S''(''u'') ≤ ''S''(''t'') if ''u'' ≥ ''t''. This property follows directly because ''T''>''u'' implies ''T''>''t''. This reflects the notion that survival to a later age is possible only if all younger ages are attained. Given this property, the lifetime distribution function and event density (''F'' and ''f'' below) are well-defined.

The survival function is usually assumed to approach zero as age increases without bound (i.e., ''S''(''t'') → 0 as ''t'' → ∞), although the limit could be greater than zero if eternal life is possible. For instance, we could apply survival analysis to a mixture of stable and unstable carbon isotopes; unstable isotopes would decay sooner or later, but the stable isotopes would last indefinitely.
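The isotope example can be made concrete with a short numerical sketch. It assumes a population in which a fixed fraction never fails and the remainder has exponentially distributed lifetimes; the stable fraction of 0.3 and the decay rate of 0.5 are arbitrary illustrative values, and the function name is hypothetical. The resulting survival function starts at 1, never increases, and levels off at the stable fraction instead of approaching zero.

```python
import math

def survival_mixture(t, stable_fraction, decay_rate):
    """S(t) for a population in which a fraction never fails and the
    remainder has exponentially distributed lifetimes."""
    return stable_fraction + (1.0 - stable_fraction) * math.exp(-decay_rate * t)

times = [0.0, 1.0, 5.0, 20.0, 100.0]
values = [survival_mixture(t, stable_fraction=0.3, decay_rate=0.5) for t in times]

for t, s in zip(times, values):
    print(f"S({t:g}) = {s:.4f}")
```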

Lifetime distribution function and event density

Related quantities are defined in terms of the survival function. The lifetime distribution function, conventionally denoted ''F'', is defined as the complement of the survival function,

$$F(t) = \Pr(T \le t) = 1 - S(t).$$

If ''F'' is differentiable, then the derivative, which is the density function of the lifetime distribution, is conventionally denoted ''f'',

$$f(t) = F'(t) = \frac{d}{dt} F(t).$$

The function ''f'' is sometimes called the event density; it is the rate of death or failure events per unit time.

The survival function can be expressed in terms of the probability distribution and probability density functions:

$$S(t) = \Pr(T > t) = \int_t^{\infty} f(u)\,du = 1 - F(t).$$

Similarly, a survival event density function can be defined as

$$s(t) = S'(t) = \frac{d}{dt} S(t) = \frac{d}{dt} \int_t^{\infty} f(u)\,du = \frac{d}{dt}\bigl[1 - F(t)\bigr] = -f(t).$$

In other fields, such as statistical physics, the survival event density function is known as the first passage time density.
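The relationships among ''S'', ''F'' and ''f'' can be checked numerically for any concrete lifetime distribution. The sketch below uses a Weibull distribution with arbitrarily chosen shape and scale, purely as an illustration; the helper names `derivative` and `tail_integral` are hypothetical. It confirms that the density matches the derivative of ''F'' and the negative derivative of ''S'', and that integrating the density from ''t'' upward recovers ''S''(''t'').

```python
import math

SHAPE, SCALE = 1.5, 2.0  # illustrative Weibull parameters

def S(t):
    """Weibull survival function."""
    return math.exp(-((t / SCALE) ** SHAPE))

def F(t):
    """Lifetime distribution function, the complement of S."""
    return 1.0 - S(t)

def f(t):
    """Weibull event density, the derivative of F."""
    return (SHAPE / SCALE) * (t / SCALE) ** (SHAPE - 1) * S(t)

def derivative(g, t, step=1e-5):
    """Central finite-difference approximation to g'(t)."""
    return (g(t + step) - g(t - step)) / (2 * step)

def tail_integral(g, t, upper=40.0, n=40_000):
    """Trapezoidal approximation to the integral of g from t to `upper`.
    The upper limit stands in for infinity; the tail beyond it is negligible here."""
    width = (upper - t) / n
    total = 0.5 * (g(t) + g(upper))
    total += sum(g(t + i * width) for i in range(1, n))
    return total * width

t0 = 1.2
print(f"f(t0)                 = {f(t0):.6f}")
print(f"dF/dt at t0           = {derivative(F, t0):.6f}")
print(f"-dS/dt at t0          = {-derivative(S, t0):.6f}")
print(f"S(t0)                 = {S(t0):.6f}")
print(f"integral of f from t0 = {tail_integral(f, t0):.6f}")
```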

Hazard function and cumulative hazard function

The hazard function ''h'' is defined as the event rate at time ''t'' conditional on survival at time ''t''.

Synonyms for ''hazard function'' in different fields include hazard rate, force of mortality (demography and actuarial science, denoted by ''μ''), force of failure, or failure rate (engineering, denoted ''λ''). For example, in actuarial science, ''μ''(''x'') denotes rate of death for people aged ''x'', whereas in reliability engineering ''λ''(''t'') denotes rate of failure of components after operation for time ''t''.

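Suppose that an item has survived for a time ''t'' and we desire the probability that it will not survive for an additional time ''dt'':

$$h(t) = \lim_{dt \to 0} \frac{\Pr(t \le T < t + dt)}{dt \cdot S(t)} = \frac{f(t)}{S(t)} = -\frac{S'(t)}{S(t)}.$$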

Any function ''h'' is a hazard function if and only if it satisfies the following properties:

# ''h''(''x'') ≥ 0 for all ''x'' ≥ 0,

# $\int_0^{\infty} h(x)\,dx = \infty$.

In fact, the hazard rate is usually more informative about the underlying mechanism of failure than the other representations of a lifetime distribution.
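The reading of the hazard as a conditional event rate can be illustrated numerically. The sketch below relies on the standard identity that the hazard equals the event density divided by the survival function, and uses a Weibull lifetime with arbitrary illustrative parameters; the function names are hypothetical. It compares the probability of failing in a short interval after ''t'', given survival to ''t'' and divided by the interval length, with the hazard at ''t''. The two should agree more closely as the interval shrinks.

```python
import math

SHAPE, SCALE = 1.5, 2.0  # illustrative Weibull parameters

def S(t):
    """Weibull survival function."""
    return math.exp(-((t / SCALE) ** SHAPE))

def f(t):
    """Weibull event density."""
    return (SHAPE / SCALE) * (t / SCALE) ** (SHAPE - 1) * S(t)

def hazard(t):
    """Hazard as event density divided by survival function."""
    return f(t) / S(t)

def conditional_failure_rate(t, dt):
    """Pr(t <= T < t + dt | T >= t) / dt, computed from S alone."""
    return (S(t) - S(t + dt)) / (dt * S(t))

t0 = 3.0
for dt in (1.0, 0.1, 0.001):
    print(f"dt={dt:<6g} rate={conditional_failure_rate(t0, dt):.6f}")
print(f"hazard at t0 = {hazard(t0):.6f}")
```

For this distribution the hazard has the closed form (shape/scale)·(t/scale)^(shape−1), which increases with ''t'' when the shape exceeds 1, a pattern that is harder to see from the survival curve or the density alone.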

The hazard function must be non-negative, ''h''(''t'') ≥ 0, and its integral over