Regulation of artificial intelligence

Regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI). It is part of the broader regulation of algorithms. The regulatory and policy landscape for AI is an emerging issue in jurisdictions worldwide, including for international organizations without direct enforcement power like the IEEE or the OECD.

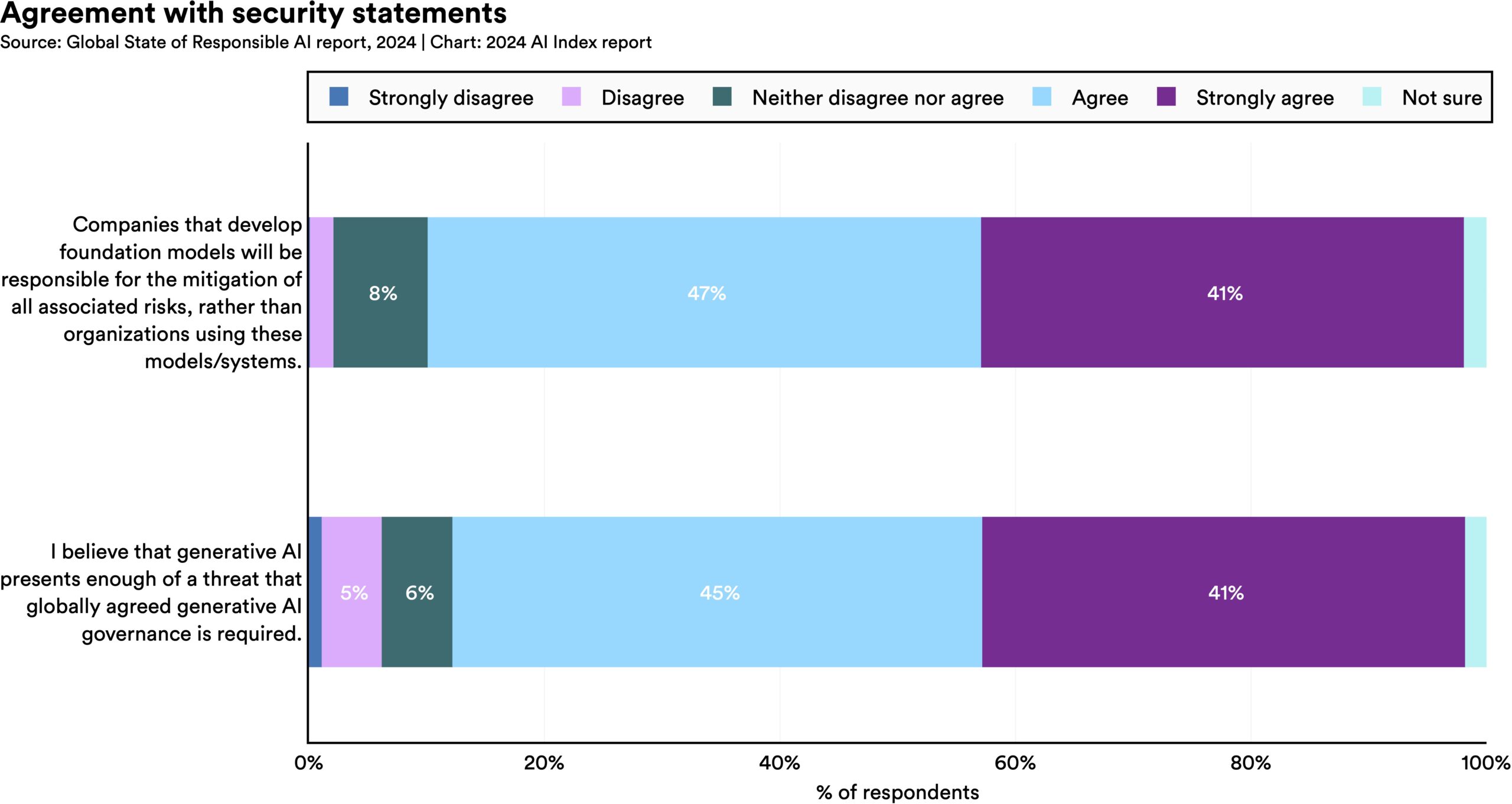

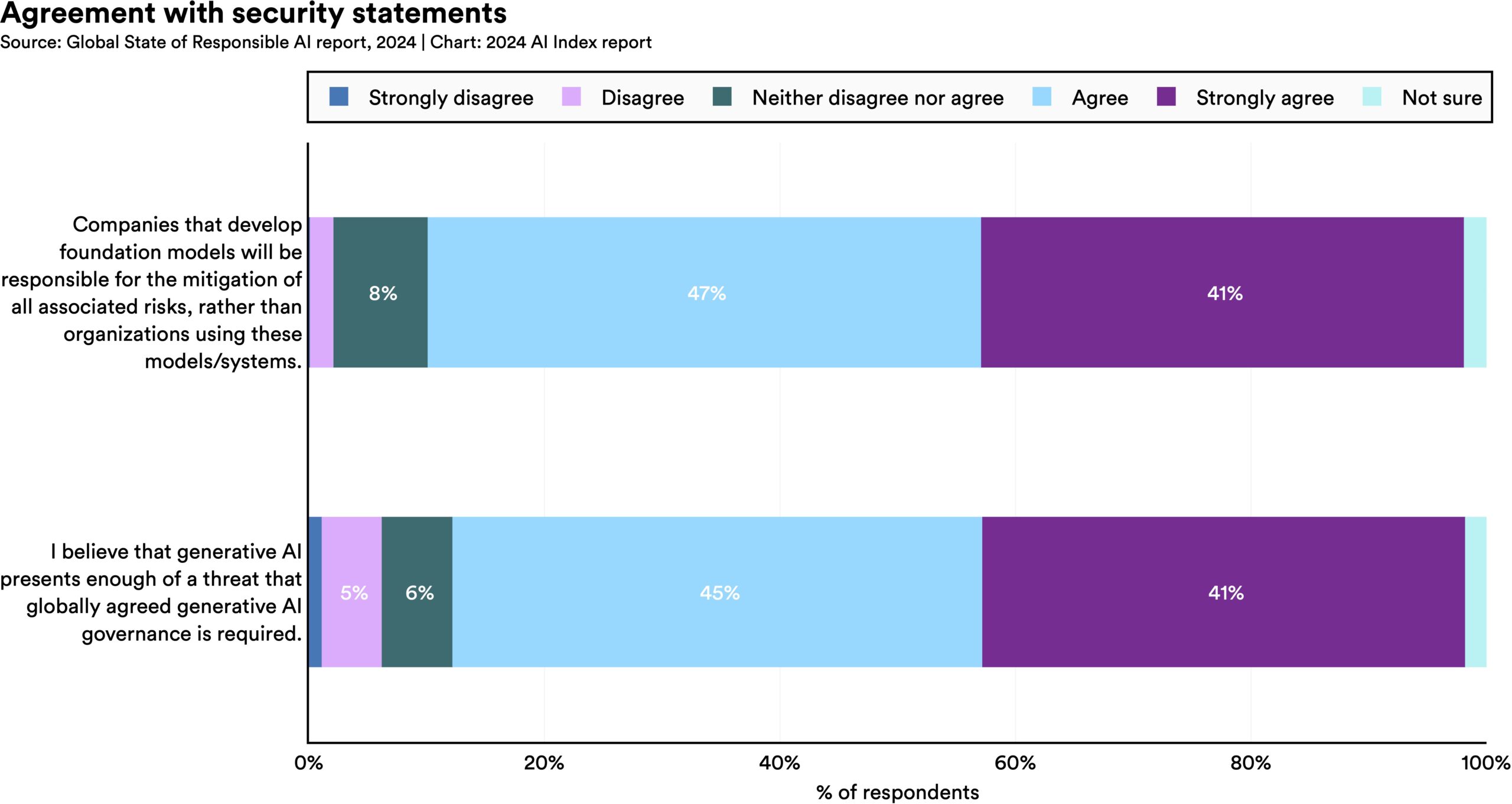

Since 2016, numerous AI ethics guidelines have been published in order to maintain social control over the technology. Regulation is deemed necessary to both foster AI innovation and manage associated risks.

Furthermore, organizations deploying AI have a central role to play in creating and implementing trustworthy AI, adhering to established principles, and taking accountability for mitigating risks.

Regulating AI through mechanisms such as review boards can also be seen as social means to approach the AI control problem.

Background

According to Stanford University's 2025 AI Index, legislative mentions of AI rose 21.3% across 75 countries since 2023, marking a ninefold increase since 2016. U.S. federal agencies introduced 59 AI-related regulations in 2024, more than double the number in 2023.

There is currently no broad consensus on the degree or mechanics of AI regulation. Several prominent figures in the field, including Elon Musk, Sam Altman, Dario Amodei, and Demis Hassabis, have publicly called for immediate regulation of AI. In 2023, following the release of GPT-4, Elon Musk and others signed an open letter urging a moratorium on the training of more powerful AI systems. Others, such as Mark Zuckerberg and Marc Andreessen, have warned about the risk of preemptive regulation stifling innovation.

In a 2022 Ipsos survey, attitudes towards AI varied greatly by country; 78% of Chinese citizens, but only 35% of Americans, agreed that "products and services using AI have more benefits than drawbacks". A 2023 Reuters/Ipsos poll found that 61% of Americans agree, and 22% disagree, that AI poses risks to humanity. In a 2023 Fox News poll, 35% of Americans thought it "very important", and an additional 41% thought it "somewhat important", for the federal government to regulate AI, versus 13% responding "not very important" and 8% responding "not at all important".

Perspectives

The regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating AI. Regulation is now generally considered necessary to both encourage AI and manage associated risks. Public administration and policy considerations generally focus on the technical and economic implications and on trustworthy and human-centered AI systems, regulation of artificial superintelligence, the risks and biases of machine-learning algorithms, the explainability of model outputs, and the tension between open source AI and unchecked AI use.

There have been both hard law and soft law proposals to regulate AI. Some legal scholars have noted that hard law approaches to AI regulation face substantial challenges. Among these, AI technology is rapidly evolving, leading to a "pacing problem" where traditional laws and regulations often cannot keep up with emerging applications and their associated risks and benefits. Similarly, the diversity of AI applications challenges existing regulatory agencies, which often have limited jurisdictional scope. As an alternative, some legal scholars argue that soft law approaches to AI regulation are promising because soft laws can be adapted more flexibly to meet the needs of emerging and evolving AI technology and nascent applications. However, soft law approaches often lack substantial enforcement potential.

Cason Schmit, Megan Doerr, and Jennifer Wagner proposed the creation of a quasi-governmental regulator by leveraging intellectual property rights (i.e., copyleft licensing) in certain AI objects (i.e., AI models and training datasets) and delegating enforcement rights to a designated enforcement entity. They argue that AI can be licensed under terms that require adherence to specified ethical practices and codes of conduct (e.g., soft law principles).

Prominent youth organizations focused on AI, namely Encode Justice, have also issued comprehensive agendas calling for more stringent AI regulations and public-private partnerships.

AI regulation could derive from basic principles. A 2020 Berkman Klein Center for Internet & Society meta-review of existing sets of principles, such as the Asilomar Principles and the Beijing Principles, identified eight such basic principles: privacy, accountability, safety and security, transparency and explainability, fairness and non-discrimination, human control of technology, professional responsibility, and respect for human values. AI law and regulations have been divided into three main topics, namely governance of autonomous intelligence systems, responsibility and accountability for the systems, and privacy and safety issues. A public administration approach sees a relationship between AI law and regulation, the ethics of AI, and 'AI society', defined as workforce substitution and transformation, social acceptance and trust in AI, and the transformation of human to machine interaction. The development of public sector strategies for management and regulation of AI is deemed necessary at the local, national, and international levels and in a variety of fields, from public service management and accountability to law enforcement, healthcare (especially the concept of a Human Guarantee), the financial sector, robotics, autonomous vehicles, the military and national security, and international law.

Henry Kissinger, Eric Schmidt, and Daniel Huttenlocher published a joint statement in November 2021 entitled "Being Human in an Age of AI", calling for a government commission to regulate AI.

As a response to the AI control problem

Regulation of AI can be seen as a positive social means to manage the AI control problem (the need to ensure long-term beneficial AI), with other social responses such as doing nothing or banning being seen as impractical, and approaches such as enhancing human capabilities through transhumanism techniques like brain-computer interfaces being seen as potentially complementary. Regulation of research into artificial general intelligence (AGI) focuses on the role of review boards, from university or corporation to international levels, and on encouraging research into AI safety, together with the possibility of differential intellectual progress (prioritizing protective strategies over risky strategies in AI development) or conducting international mass surveillance to perform AGI arms control. For instance, the 'AGI Nanny' is a proposed strategy, potentially under the control of humanity, for preventing the creation of a dangerous superintelligence as well as for addressing other major threats to human well-being, such as subversion of the global financial system, until a true superintelligence can be safely created. It entails the creation of a smarter-than-human, but not superintelligent, AGI system connected to a large surveillance network, with the goal of monitoring humanity and protecting it from danger. Regulation of conscious, ethically aware AGIs focuses on how to integrate them with existing human society and can be divided into considerations of their legal standing and of their moral rights. Regulation of AI has been seen as restrictive, with a risk of preventing the development of AGI.

Global guidance

The development of a global governance board to regulate AI development was suggested at least as early as 2017. In December 2018, Canada and France announced plans for a G7-backed International Panel on Artificial Intelligence, modeled on the Intergovernmental Panel on Climate Change, to study the global effects of AI on people and economies and to steer AI development. In 2019, the Panel was renamed the Global Partnership on AI.

The Global Partnership on Artificial Intelligence (GPAI) was launched in June 2020, stating a need for AI to be developed in accordance with human rights and democratic values, to ensure public confidence and trust in the technology, as outlined in the OECD Principles on Artificial Intelligence (2019). The 15 founding members of the Global Partnership on Artificial Intelligence are Australia, Canada, the European Union, France, Germany, India, Italy, Japan, the Republic of Korea, Mexico, New Zealand, Singapore, Slovenia, the United States and the UK. As of 2023, the GPAI had 29 members. The GPAI Secretariat is hosted by the OECD in Paris, France. GPAI's mandate covers four themes, two of which are supported by the International Centre of Expertise in Montréal for the Advancement of Artificial Intelligence, namely, responsible AI and data governance. A corresponding centre of excellence in Paris supports the other two themes, on the future of work and on innovation and commercialization. GPAI also investigated how AI can be leveraged to respond to the COVID-19 pandemic.

The OECD AI Principles were adopted in May 2019, and the G20 AI Principles in June 2019. In September 2019, the World Economic Forum issued ten 'AI Government Procurement Guidelines'. In February 2020, the European Union published its draft strategy paper for promoting and regulating AI.

At the United Nations (UN), several entities have begun to promote and discuss aspects of AI regulation and policy, including the UNICRI Centre for AI and Robotics. In partnership with INTERPOL, UNICRI's Centre issued the report "AI and Robotics for Law Enforcement" in April 2019 and the follow-up report "Towards Responsible AI Innovation" in May 2020. At the 40th session of UNESCO's General Conference in November 2019, the organization commenced a two-year process to achieve a "global standard-setting instrument on ethics of artificial intelligence". In pursuit of this goal, UNESCO forums and conferences on AI were held to gather stakeholder views. A draft text of a "Recommendation on the Ethics of AI" by the UNESCO Ad Hoc Expert Group was issued in September 2020 and included a call for legislative gaps to be filled. UNESCO tabled the international instrument on the ethics of AI for adoption at its General Conference in November 2021; this was subsequently adopted. While the UN is making progress with the global management of AI, its institutional and legal capability to manage the AGI existential risk is more limited.

An initiative of the International Telecommunication Union (ITU) in partnership with 40 UN sister agencies, AI for Good is a global platform which aims to identify practical applications of AI to advance the United Nations Sustainable Development Goals and scale those solutions for global impact. It is an action-oriented, global and inclusive United Nations platform fostering development of AI to positively impact health, climate, gender, inclusive prosperity, sustainable infrastructure, and other global development priorities.

Recent research has indicated that countries will also begin to use artificial intelligence as a tool for national cyberdefense. AI is a new factor in the cyber arms industry, as it can be used for defense purposes. Therefore, academics urge that nations should establish regulations for the use of AI, similar to how there are regulations for other military industries.

In recent years, academic researchers have made more efforts to promote multilateral dialogue and policy development, advocating for the adoption of international frameworks that govern the deployment of AI in military and cybersecurity contexts, with a strong emphasis on human rights and international humanitarian law. Initiatives such as the Munich Convention process, which brought together scholars from institutions including the Technical University of Munich, Rutgers University, Stellenbosch University, Ulster University, and the University of Edinburgh, have called for a binding international agreement to protect human rights in the age of AI.

Regional and national regulation

The regulatory and policy landscape for AI is an emerging issue in regional and national jurisdictions globally, for example in the European Union and Russia. Since early 2016, many national, regional and international authorities have begun adopting strategies, action plans and policy papers on AI. These documents cover a wide range of topics such as regulation and governance, as well as industrial strategy, research, talent and infrastructure.

Different countries have approached the problem in different ways. Regarding the three largest economies, it has been said that "the United States is following a market-driven approach, China is advancing a state-driven approach, and the EU is pursuing a rights-driven approach."

Australia

In October 2023, the Australian Computer Society, Business Council of Australia, Australian Chamber of Commerce and Industry, Ai Group (aka Australian Industry Group), Council of Small Business Organisations Australia, and Tech Council of Australia jointly published an open letter calling for a national approach to AI strategy. The letter backs the federal government establishing a whole-of-government AI taskforce.

Additionally, in August 2024, the Australian government set a Voluntary AI Safety Standard, which was followed in September of that year by a Proposals Paper outlining potential guardrails for high-risk AI that could become mandatory. These guardrails cover areas such as model testing, transparency, human oversight, and record-keeping, all of which may be enforced through new legislation. Australia has not yet passed AI-specific laws, but existing statutes such as the Privacy Act 1988, Corporations Act 2001, and Online Safety Act 2021 all have provisions that apply to AI use.

In September 2024, a bill was also introduced to grant the Australian Communications and Media Authority (ACMA) powers to regulate AI-generated misinformation. Several agencies, including the ACMA, ACCC, and Office of the Australian Information Commissioner, are expected to play roles in future AI regulation.

Brazil

On September 30, 2021, the Brazilian Chamber of Deputies (Câmara dos Deputados) approved the Brazilian Legal Framework for Artificial Intelligence (Marco Legal da Inteligência Artificial). This legislation aimed to regulate AI development and usage while promoting research and innovation in ethical AI solutions that prioritize culture, justice, fairness, and accountability. The 10-article bill established several key objectives: developing ethical principles for AI, promoting sustained research investment, and removing barriers to innovation. Article 4 specifically emphasized preventing discriminatory AI solutions, ensuring plurality, and protecting human rights.

When the bill was first released to the public, it drew substantial criticism that alerted the government to flaws in critical provisions. The underlying issue was that the bill failed to address the principles of accountability, transparency, and inclusivity thoroughly. Article 6 establishes subjective liability, meaning that any individual harmed by an AI system who seeks compensation must identify the responsible stakeholder and prove that there was a mistake in the machine's life cycle. Scholars emphasize that it runs contrary to legal order to make an individual responsible for proving algorithmic errors, given the high degree of autonomy, unpredictability, and complexity of AI systems. The criticism also drew attention to ongoing problems with facial recognition systems in Brazil that have led to unjust arrests by the police, implying that, if the bill were adopted, individuals would have to prove and justify these machine errors.

The main controversy over the draft bill centered on three proposed principles. First, the non-discrimination principle suggests that AI must be developed and used in a way that merely mitigates the possibility of abusive and discriminatory practices. Second, the pursuit of neutrality principle lists recommendations for stakeholders to mitigate biases, but with no obligation to achieve this goal. Lastly, the transparency principle states that a system's transparency is only necessary when there is a high risk of violating fundamental rights. The Brazilian Legal Framework for Artificial Intelligence thus lacks binding and obligatory clauses and consists largely of relaxed guidelines. Experts emphasize that the bill may make accountability for discriminatory AI biases even harder to achieve. Compared to the EU's proposal of extensive risk-based regulations, the Brazilian bill has 10 articles proposing vague and generic recommendations.

The Brazilian AI bill also lacks the diverse perspectives that characterized earlier Brazilian internet legislation. When Brazil drafted the Marco Civil da Internet (Brazilian Internet Bill of Rights) in the 2000s, it used a multistakeholder approach that brought together various groups, including government, civil society, academia, and industry, to participate in dialogue, decision-making, and implementation. Such a collaborative process helps capture different viewpoints and trade-offs among stakeholders with varying interests, ultimately improving transparency and effectiveness in AI regulation.

In May 2023, a new bill was passed, superseding the 2021 bill. It calls for risk assessments of AI systems before deployment and distinguishes "high risk" and "excessive risk" systems. The latter are characterized by their potential to expose or exploit vulnerabilities and will be subject to regulation by the Executive Branch.

Canada

The ''Pan-Canadian Artificial Intelligence Strategy'' (2017) is supported by federal funding of Can$125 million. Its objectives are to increase the number of outstanding AI researchers and skilled graduates in Canada, establish nodes of scientific excellence at the three major AI centres, develop 'global thought leadership' on the economic, ethical, policy and legal implications of AI advances, and support a national research community working on AI. The Canada CIFAR AI Chairs Program is the cornerstone of the strategy. It benefits from funding of Can$86.5 million over five years to attract and retain world-renowned AI researchers. The federal government appointed an Advisory Council on AI in May 2019 with a focus on examining how to build on Canada's strengths to ensure that AI advancements reflect Canadian values, such as human rights, transparency and openness. The Advisory Council on AI has established a working group on extracting commercial value from Canadian-owned AI and data analytics. In 2020, the federal government and the Government of Quebec announced the opening of the International Centre of Expertise in Montréal for the Advancement of Artificial Intelligence, which will advance the cause of responsible development of AI. In June 2022, the government of Canada started a second phase of the Pan-Canadian Artificial Intelligence Strategy.

In November 2022, Canada introduced the Digital Charter Implementation Act (Bill C-27), which proposes three acts that have been described as a holistic package of legislation for trust and privacy: the Consumer Privacy Protection Act, the Personal Information and Data Protection Tribunal Act, and the Artificial Intelligence & Data Act (AIDA). In September 2023, the Canadian government introduced a Voluntary Code of Conduct for the Responsible Development and Management of Advanced Generative AI Systems. The code, based initially on public consultations, seeks to provide interim guidance to Canadian companies on responsible AI practices. Ultimately, it is intended to serve as a stopgap
