
In mathematics and science, a nonlinear system (or a non-linear system) is a system in which the change of the output is not proportional to the change of the input. Nonlinear problems are of interest to engineers, biologists, physicists, mathematicians, and many other scientists since most systems are inherently nonlinear in nature. Nonlinear dynamical systems, describing changes in variables over time, may appear chaotic, unpredictable, or counterintuitive, contrasting with much simpler linear systems.

Typically, the behavior of a nonlinear system is described in mathematics by a nonlinear system of equations, which is a set of simultaneous equations in which the unknowns (or the unknown functions in the case of differential equations) appear as variables of a polynomial of degree higher than one or in the argument of a function which is not a polynomial of degree one. In other words, in a nonlinear system of equations, the equation(s) to be solved cannot be written as a linear combination of the unknown variables or functions that appear in them. Systems can be defined as nonlinear, regardless of whether known linear functions appear in the equations. In particular, a differential equation is ''linear'' if it is linear in terms of the unknown function and its derivatives, even if nonlinear in terms of the other variables appearing in it.

As nonlinear dynamical equations are difficult to solve, nonlinear systems are commonly approximated by linear equations (linearization). This works well up to some accuracy and some range for the input values, but some interesting phenomena such as solitons, chaos, and singularities are hidden by linearization. It follows that some aspects of the dynamic behavior of a nonlinear system can appear to be counterintuitive, unpredictable or even chaotic. Although such chaotic behavior may resemble random behavior, it is in fact not random. For example, some aspects of the weather are seen to be chaotic, where simple changes in one part of the system produce complex effects throughout. This nonlinearity is one of the reasons why accurate long-term forecasts are impossible with current technology.

Some authors use the term nonlinear science for the study of nonlinear systems. This term is disputed by others.

Definition

In mathematics, a linear map (or ''linear function'') $f(x)$ is one which satisfies both of the following properties:

*Additivity or superposition principle: $f(x + y) = f(x) + f(y)$

*Homogeneity: $f(\alpha x) = \alpha f(x)$

Additivity implies homogeneity for any rational ''α'', and, for continuous functions, for any real ''α''. For a complex ''α'', homogeneity does not follow from additivity. For example, an antilinear map is additive but not homogeneous. The conditions of additivity and homogeneity are often combined in the superposition principle

: $f(\alpha x + \beta y) = \alpha f(x) + \beta f(y)$

An equation written as

: $f(x) = C$

is called linear if $f(x)$ is a linear map (as defined above) and nonlinear otherwise. The equation is called ''homogeneous'' if $C = 0$ and $f(x)$ is a homogeneous function.

The definition $f(x) = C$ is very general in that $x$ can be any sensible mathematical object (number, vector, function, etc.), and the function $f(x)$ can literally be any mapping, including integration or differentiation with associated constraints (such as boundary values). If $f(x)$ contains differentiation with respect to $x$, the result will be a differential equation.
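As a rough numerical illustration of the definition, the sketch below tests the combined superposition condition $f(\alpha x + \beta y) = \alpha f(x) + \beta f(y)$ at a few sample points. The function names and the sample values are hypothetical, chosen only for this example. A failed check shows that a map is nonlinear; a passed check on finitely many points is only evidence of linearity, not a proof.

```python
import math


def satisfies_superposition(f, samples, tol=1e-9):
    """Return True if f(a*x + b*y) matches a*f(x) + b*f(y) on every sample."""
    for a, b, x, y in samples:
        lhs = f(a * x + b * y)
        rhs = a * f(x) + b * f(y)
        if not math.isclose(lhs, rhs, rel_tol=tol, abs_tol=tol):
            return False
    return True


# Hypothetical test points (alpha, beta, x, y); any non-trivial values would do.
SAMPLES = [(2.0, -1.0, 0.5, 3.0), (0.25, 4.0, -2.0, 1.5), (-3.0, 0.5, 1.0, 1.0)]


def linear_map(x):
    return 3.0 * x  # f(x) = 3x satisfies additivity and homogeneity


def affine_map(x):
    return 3.0 * x + 1.0  # the constant offset breaks homogeneity


def quadratic_map(x):
    return x * x  # f(x) = x^2 is nonlinear


if __name__ == "__main__":
    for name, f in [("3x", linear_map), ("3x + 1", affine_map), ("x^2", quadratic_map)]:
        print(name, satisfies_superposition(f, SAMPLES))
```

The affine map $3x + 1$ fails the check even though its graph is a straight line, which matches the definition above: a linear map must send zero to zero.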

Nonlinear systems of equations

A nonlinear system of equations consists of a set of equations in several variables such that at least one of them is not a linear equation.

For a single equation of the form $f(x) = 0$, many methods have been designed; see Root-finding algorithm. In the case where $f$ is a polynomial, one has a ''polynomial equation'' such as $x^2 + x - 1 = 0$. The general root-finding algorithms apply to polynomial roots, but generally they do not find all the roots, and when they fail to find a root, this does not imply that there are no roots. Specific methods for polynomials allow finding all roots or the real roots; see real-root isolation.
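To make the limitation of general root-finding methods concrete, here is a minimal bisection sketch applied to the polynomial $x^2 + x - 1$ (taken here as the working example). The function names, brackets, and tolerance are assumptions for illustration. Bisection returns one root per sign-changing bracket and reports nothing useful for a bracket without a sign change, even though roots exist elsewhere.

```python
import math


def bisect(f, lo, hi, tol=1e-12):
    """Find a root of f in [lo, hi], assuming f(lo) and f(hi) differ in sign."""
    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("f(lo) and f(hi) must have opposite signs")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_mid == 0.0:
            return mid
        if f_lo * f_mid < 0:
            hi = mid  # the sign change lies in the left half
        else:
            lo, f_lo = mid, f_mid  # the sign change lies in the right half
    return 0.5 * (lo + hi)


def p(x):
    return x * x + x - 1.0  # example polynomial x^2 + x - 1


if __name__ == "__main__":
    print(bisect(p, 0.0, 1.0))    # root inside [0, 1]
    print(bisect(p, -2.0, -1.0))  # a different root inside [-2, -1]
    try:
        bisect(p, 2.0, 3.0)       # no sign change here, so the method gives up
    except ValueError as err:
        print("no root found in [2, 3]:", err)
```

Each call sees only its own bracket, so the caller has to know in advance where to look; this is the gap that real-root isolation methods for polynomials are designed to close.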

Solving systems of polynomial equations, that is, finding the common zeros of a set of several polynomials in several variables, is a difficult problem for which elaborate algorithms have been designed, such as Gröbner basis algorithms.

For the general case of a system of equations formed by equating to zero several differentiable functions, the main method is Newton's method and its variants. Generally they may provide a solution, but do not provide any information on the number of solutions.
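The following is a minimal sketch of Newton's method for a system of two equations, using only the standard library. The example system ($x^2 + y^2 - 4 = 0$, $x - y = 0$), the starting points, and all function names are assumptions made for illustration. Each step solves the linearized system $J \, \Delta = -F$ and updates the current guess.

```python
import math


def residual(x, y):
    """F(x, y) for the illustrative system x^2 + y^2 - 4 = 0, x - y = 0."""
    return (x * x + y * y - 4.0, x - y)


def jacobian(x, y):
    """Partial derivatives of F, one row per equation."""
    return ((2.0 * x, 2.0 * y), (1.0, -1.0))


def newton_2d(x, y, tol=1e-12, max_iter=50):
    """Iterate (x, y) <- (x, y) + delta, where J * delta = -F."""
    for _ in range(max_iter):
        f1, f2 = residual(x, y)
        if max(abs(f1), abs(f2)) < tol:
            return x, y
        (a, b), (c, d) = jacobian(x, y)
        det = a * d - b * c
        if det == 0.0:
            raise ZeroDivisionError("singular Jacobian; try another starting point")
        # Solve the 2x2 linear system with Cramer's rule.
        dx = (-f1 * d + b * f2) / det
        dy = (-a * f2 + c * f1) / det
        x, y = x + dx, y + dy
    raise RuntimeError("Newton iteration did not converge")


if __name__ == "__main__":
    print(newton_2d(1.0, 2.0))    # converges to one solution
    print(newton_2d(-1.0, -2.0))  # a different start reaches a different solution
```

The two starting points lead to two different solutions, and nothing in either run indicates how many solutions exist in total, which is the limitation noted above.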

Nonlinear recurrence relations

A nonlinear recurrence relation defines successive terms of a sequence as a nonlinear function of preceding terms. Examples of nonlinear recurrence relations are the logistic map and the relations that define the various Hofstadter sequences. Nonlinear discrete models that represent a wide class of nonlinear recurrence relationships include the NARMAX (Nonlinear Autoregressive Moving Average with eXogenous inputs) model and the related nonlinear system identification and analysis procedures (Billings S.A., "Nonlinear System Identification: NARMAX Methods in the Time, Frequency, and Spatio-Temporal Domains", Wiley, 2013). These approaches can be used to study a wide class of complex nonlinear behaviors in the time, frequency, and spatio-temporal domains.
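As a small illustration of a nonlinear recurrence, the sketch below iterates the logistic map in its standard form $x_{n+1} = r \, x_n (1 - x_n)$. The parameter values, starting points, and function names are assumptions chosen for the example. For $r = 2.5$ the orbit settles on the fixed point $1 - 1/r$, while for $r = 4$ two orbits that start very close together separate quickly.

```python
def logistic_orbit(r, x0, n):
    """Return [x_0, ..., x_n] for the recurrence x_{k+1} = r * x_k * (1 - x_k)."""
    orbit = [x0]
    for _ in range(n):
        orbit.append(r * orbit[-1] * (1.0 - orbit[-1]))
    return orbit


def max_separation(r, x0, delta, n):
    """Largest gap between two orbits whose starting points differ by delta."""
    a = logistic_orbit(r, x0, n)
    b = logistic_orbit(r, x0 + delta, n)
    return max(abs(p - q) for p, q in zip(a, b))


if __name__ == "__main__":
    print(logistic_orbit(2.5, 0.2, 200)[-1])     # settles near 1 - 1/2.5
    print(max_separation(2.5, 0.2, 1e-10, 100))  # nearby orbits stay close
    print(max_separation(4.0, 0.2, 1e-10, 100))  # nearby orbits drift far apart
```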

Nonlinear differential equations

A system of differential equations is said to be nonlinear if it is not a system of linear equations. Problems involving nonlinear differential equations are extremely diverse, and methods of solution or analysis are problem dependent. Examples of nonlinear differential equations are the Navier–Stokes equations in fluid dynamics and the Lotka–Volterra equations in biology.

One of the greatest difficulties of nonlinear problems is that it is not generally possible to combine known solutions into new solutions. In linear problems, for example, a family of linearly independent solutions can be used to construct general solutions through the superposition principle. A good example of this is one-dimensional heat transport with Dirichlet boundary conditions, the solution of which can be written as a time-dependent linear combination of sinusoids of differing frequencies; this makes solutions very flexible. It is often possible to find several very specific solutions to nonlinear equations; however, the lack of a superposition principle prevents the construction of new solutions.
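The failure of superposition can be checked numerically. The sketch below is an illustration under stated assumptions: it takes the nonlinear equation $du/dx = -u^2$ (with known solutions $1/(x + C)$) and the linear equation $du/dx = -u$ (with solutions $A e^{-x}$) as examples, and measures how far the sum of two solutions is from satisfying each equation. All function names and the finite-difference step are hypothetical choices.

```python
import math


def derivative(f, x, h=1e-5):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def residual_nonlinear(u, x):
    """du/dx + u^2, which is zero when u solves du/dx = -u^2."""
    return derivative(u, x) + u(x) ** 2


def residual_linear(u, x):
    """du/dx + u, which is zero when u solves du/dx = -u."""
    return derivative(u, x) + u(x)


# Two solutions of the nonlinear equation and their sum.
def u1(x):
    return 1.0 / (x + 1.0)


def u2(x):
    return 1.0 / (x + 2.0)


def u_sum(x):
    return u1(x) + u2(x)


# Two solutions of the linear equation and their sum.
def v1(x):
    return math.exp(-x)


def v2(x):
    return 2.0 * math.exp(-x)


def v_sum(x):
    return v1(x) + v2(x)


if __name__ == "__main__":
    print(residual_nonlinear(u1, 1.0), residual_nonlinear(u2, 1.0))  # both near zero
    print(residual_nonlinear(u_sum, 1.0))  # clearly nonzero: the sum is not a solution
    print(residual_linear(v_sum, 1.0))     # near zero: the sum is again a solution
```

For the nonlinear case the leftover term is the cross product $2 u_1 u_2$ that appears when $(u_1 + u_2)^2$ is expanded.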

Ordinary differential equations

First order ordinary differential equations are often exactly solvable by separation of variables, especially for autonomous equations. For example, the nonlinear equation

: $\frac{du}{dx} = -u^2$

has $u = \frac{1}{x + C}$ as a general solution (and also the special solution $u = 0$, corresponding to the limit of the general solution when ''C'' tends to infinity). The equation is nonlinear because it may be written as

: $\frac{du}{dx} + u^2 = 0$

and the left-hand side of the equation is not a linear function of $u$ and its derivatives. Note that if the $u^2$ term were replaced with $u$, the problem would be linear (the exponential decay problem).
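For reference, the separation-of-variables steps can be written out explicitly. This is a sketch that takes the example equation to be $du/dx = -u^2$ and assumes $u \neq 0$ so that division by $u^2$ is allowed; the constant solution $u = 0$ is lost at that step and has to be added back separately.

```latex
\begin{aligned}
\frac{du}{dx} &= -u^2 \\
-\frac{du}{u^2} &= dx \qquad (u \neq 0) \\
\int -\frac{du}{u^2} &= \int dx \\
\frac{1}{u} &= x + C \\
u &= \frac{1}{x + C}
\end{aligned}
```

Differentiating the result gives $du/dx = -1/(x + C)^2 = -u^2$, which confirms that it satisfies the equation.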

Second and higher order ordinary differential equations (more generally, systems of nonlinear equations) rarely yield closed-form solutions, though implicit solutions and solutions involving nonelementary integrals are encountered.

Common methods for the qualitative analysis of nonlinear ordinary differential equations include:

*Examination of any conserved quantities, especially in Hamiltonian systems

*Examination of dissipative quantities (see Lyapunov function) analogous to conserved quantities

*Linearization via Taylor expansion

*Change of variables into something easier to study

*Bifurcation theory

*Perturbation methods (can be applied to algebraic equations too)

*Existence of solutions of finite duration, which can happen under specific conditions for some non-linear ordinary differential equations.

Partial differential equations

The most common basic approach to studying nonlinear partial differential equations is to change the variables (or otherwise transform the problem) so that the resulting problem is simpler (possibly linear). Sometimes, the equation may be transformed into one or more ordinary differential equations, as seen in separation of variables, which is always useful whether or not the resulting ordinary differential equation(s) is solvable.

Another common (though less mathematical) tactic, often exploited in fluid and heat mechanics, is to use scale analysis to simplify a general, natural equation in a certain specific boundary value problem. For example, the (very) nonlinear Navier–Stokes equations can be simplified into one linear partial differential equation in the case of transient, laminar, one-dimensional flow in a circular pipe; the scale analysis provides conditions under which the flow is laminar and one-dimensional and also yields the simplified equation.

Other methods include examining the characteristics and using the methods outlined above for ordinary differential equations.

Pendula

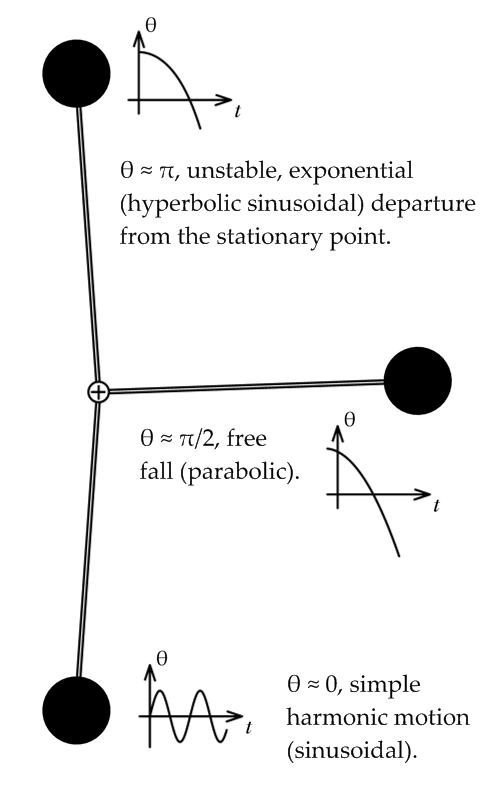

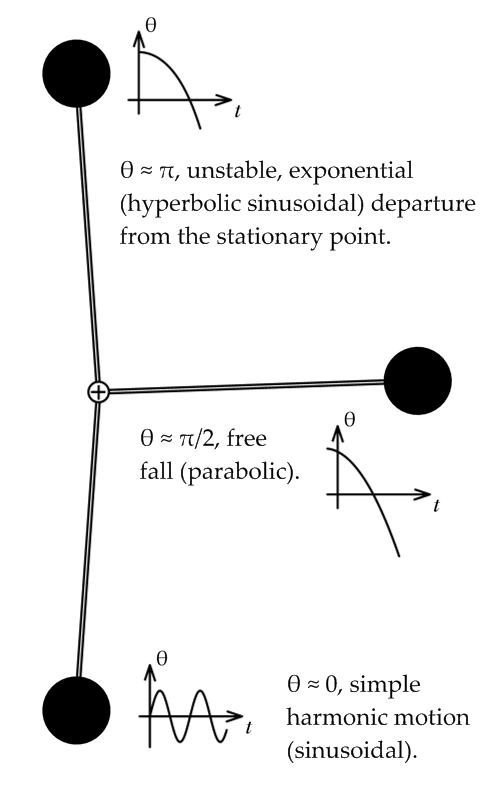

A classic, extensively studied nonlinear problem is the dynamics of a frictionless pendulum under the influence of gravity. Using Lagrangian mechanics, it may be shown (David Tong: Lectures on Classical Dynamics) that the motion of a pendulum can be described by the dimensionless nonlinear equation

: $\frac{d^2 \theta}{d t^2} + \sin(\theta) = 0$

where gravity points "downwards" and $\theta$ is the angle the pendulum forms with its rest position, as shown in the figure at right. One approach to "solving" this equation is to use $d\theta/dt$ as an integrating factor, which would eventually yield

: $\int \frac{d\theta}{\sqrt{C_0 + 2 \cos(\theta)}} = t + C_1$

which is an implicit solution involving an elliptic integral. This "solution" generally does not have many uses because most of the nature of the solution is hidden in the nonelementary integral (nonelementary unless $C_0 = 2$).

Another way to approach the problem is to linearize any nonlinearity (the sine function term in this case) at the various points of interest through Taylor expansions. For example, the linearization at $\theta = 0$, called the small angle approximation, is

: $\frac{d^2 \theta}{d t^2} + \theta = 0$

since $\sin(\theta) \approx \theta$ for $\theta \approx 0$. This is a simple harmonic oscillator corresponding to oscillations of the pendulum near the bottom of its path. Another linearization would be at $\theta = \pi$, corresponding to the pendulum being straight up:

: $\frac{d^2 \theta}{d t^2} + \pi - \theta = 0$

since $\sin(\theta) \approx \pi - \theta$ for $\theta \approx \pi$. The solution to this problem involves hyperbolic sinusoids, and note that unlike the small angle approximation, this approximation is unstable, meaning that $|\theta|$ will usually grow without limit, though bounded solutions are possible. This corresponds to the difficulty of balancing a pendulum upright; it is literally an unstable state.

One more interesting linearization is possible around $\theta = \pi/2$, around which $\sin(\theta) \approx 1$:

: $\frac{d^2 \theta}{d t^2} + 1 = 0$

This corresponds to a free fall problem. A very useful qualitative picture of the pendulum's dynamics may be obtained by piecing together such linearizations, as seen in the figure at right. Other techniques may be used to find (exact) phase portraits and approximate periods.
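To see how far the small angle approximation can be trusted, the sketch below integrates both the dimensionless pendulum equation $\ddot\theta + \sin\theta = 0$ and its linearization $\ddot\theta + \theta = 0$ with a classical fourth-order Runge-Kutta scheme. The integrator, step size, end time, and function names are assumptions made for this illustration, not part of the analysis above. The quantity $\tfrac{1}{2}\dot\theta^2 - \cos\theta$ is conserved by the full equation and serves as a sanity check on the integration.

```python
import math


def pendulum(state):
    """Right-hand side of theta'' + sin(theta) = 0 as a first-order system."""
    theta, omega = state
    return (omega, -math.sin(theta))


def small_angle(state):
    """Right-hand side of the linearization theta'' + theta = 0."""
    theta, omega = state
    return (omega, -theta)


def rk4(rhs, state, dt, steps):
    """Advance state by `steps` classical fourth-order Runge-Kutta steps."""
    for _ in range(steps):
        k1 = rhs(state)
        k2 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
        k3 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
        k4 = rhs(tuple(s + dt * k for s, k in zip(state, k3)))
        state = tuple(
            s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)
        )
    return state


def energy(state):
    """Conserved quantity of the full pendulum: omega^2 / 2 - cos(theta)."""
    theta, omega = state
    return 0.5 * omega * omega - math.cos(theta)


def gap_after(theta0, t_end=10.0, dt=0.001):
    """Angle difference between full and linearized models at time t_end."""
    steps = round(t_end / dt)
    full = rk4(pendulum, (theta0, 0.0), dt, steps)
    lin = rk4(small_angle, (theta0, 0.0), dt, steps)
    return abs(full[0] - lin[0])


if __name__ == "__main__":
    print(gap_after(0.05))  # small release angle: the two models nearly agree
    print(gap_after(2.0))   # large release angle: the linearization is far off
```

The disagreement at large angles comes mainly from the period: the linearized model always oscillates with period $2\pi$, while the period of the full pendulum grows with amplitude.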

Types of nonlinear dynamic behaviors

*Amplitude death

In the theory of dynamical systems, amplitude death is complete cessation of oscillations. The system can be in a state of either periodic motion or chaotic motion before it goes to amplitude death. A dynamical system can go to amplitude death bec ...

– any oscillations present in the system cease due to some kind of interaction with other system or feedback by the same system

*Chaos

Chaos or CHAOS may refer to:

Science, technology, and astronomy

* '' Chaos: Making a New Science'', a 1987 book by James Gleick

* Chaos (company), a Bulgarian rendering and simulation software company

* ''Chaos'' (genus), a genus of amoebae

* ...

– values of a system cannot be predicted indefinitely far into the future, and fluctuations are aperiodic

A periodic function, also called a periodic waveform (or simply periodic wave), is a function that repeats its values at regular intervals or periods. The repeatable part of the function or waveform is called a ''cycle''. For example, the tr ...

*Multistability

In a dynamical system, multistability is the property of having multiple Stability theory, stable equilibrium points in the vector space spanned by the states in the system. By mathematical necessity, there must also be unstable equilibrium points ...

– the presence of two or more stable states

*Soliton

In mathematics and physics, a soliton is a nonlinear, self-reinforcing, localized wave packet that is , in that it preserves its shape while propagating freely, at constant velocity, and recovers it even after collisions with other such local ...

s – self-reinforcing solitary waves

*Limit cycles – asymptotic periodic orbits to which destabilized fixed points are attracted.

* Self-oscillations – feedback oscillations taking place in open dissipative physical systems.
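Two of the behaviors listed above can be reproduced with very small models. The sketch below is illustrative only: the bistable equation dx/dt = x - x^3 and the logistic map with r = 4 are assumed examples chosen for brevity, not systems named in this article, and the helper names `settle` and `logistic_orbit` are hypothetical.

```python
def settle(x0, dt=0.01, steps=2000):
    """Integrate dx/dt = x - x**3 with forward Euler and return the final state.

    This assumed toy system has stable states at x = -1 and x = +1 and an
    unstable one at x = 0, so it illustrates multistability.
    """
    x = x0
    for _ in range(steps):
        x += dt * (x - x**3)
    return x


def logistic_orbit(x0, steps=60, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), an assumed chaotic example."""
    orbit = [x0]
    for _ in range(steps):
        orbit.append(r * orbit[-1] * (1.0 - orbit[-1]))
    return orbit


# Multistability: the final state depends on which side of x = 0 we start.
right = settle(0.5)
left = settle(-0.5)

# Chaos: two orbits that start 1e-10 apart separate to order one.
a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)
max_gap = max(abs(p - q) for p, q in zip(a, b))

print("stable states reached:", right, left)
print("largest separation of the two logistic orbits:", max_gap)
```

The first part shows the long-term state depending on the initial condition, and the second shows why long-range prediction fails even when the rule is deterministic.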

Examples of nonlinear equations

*Algebraic Riccati equation – a type of nonlinear equation that arises in infinite-horizon optimal control problems in continuous time or discrete time

*Ball and beam system – a long beam that can be tilted by a servo or electric motor, with a ball rolling back and forth on top of it; a popular textbook example in control theory

*Bellman equation for optimal policy – a necessary condition for optimality associated with dynamic programming, named after Richard E. Bellman

*Boltzmann equation – describes the statistical behaviour of a thermodynamic system not in a state of equilibrium; devised by Ludwig Boltzmann in 1872

*Colebrook equation – one of the Darcy friction factor formulae, which give the dimensionless Darcy friction factor used in the Darcy–Weisbach equation to describe friction losses in pipe flow

*General relativity – the geometric theory of gravitation published by Albert Einstein in 1915

*Ginzburg–Landau theory – a mathematical physical theory used to describe superconductivity, named after Vitaly Ginzburg and Lev Landau

*Ishimori equation – a partial differential equation, of interest as the first example of a nonlinear spin-one field model in the plane that is integrable

*Kadomtsev–Petviashvili equation – a partial differential equation describing nonlinear wave motion, often abbreviated as KP equation

* Korteweg–de Vries equation

*Landau–Lifshitz–Gilbert equation – a differential equation, usually abbreviated as LLG equation, named for Lev Landau, Evgeny Lifshitz, and T. L. Gilbert

*Liénard equation – a type of second-order ordinary differential equation named after the French physicist Alfred-Marie Liénard

*Navier–Stokes equations of fluid dynamics – partial differential equations that describe the motion of viscous fluid substances

*Nonlinear optics – the branch of optics that describes the behaviour of light in nonlinear media, in which the polarization density P responds nonlinearly to the electric field E of the light

*Nonlinear Schrödinger equation – a nonlinear variation of the Schrödinger equation; a classical field equation

*Power-flow study – a numerical analysis of the flow of electric power in an interconnected system

*Richards equation for unsaturated water flow – a quasilinear partial differential equation attributed to Lorenzo A. Richards, who published it in 1931

*Self-balancing unicycle – a self-balancing personal transporter with a single wheel; the rider controls speed by leaning forwards or backwards

*Sine-Gordon equation – a second-order nonlinear partial differential equation for a function \varphi of two variables, typically denoted x and t, involving the wave operator and the sine of \varphi

*Van der Pol oscillator – a non-conservative oscillating system with nonlinear damping, named for Dutch physicist Balthasar van der Pol

*Vlasov equation – a differential equation describing the time evolution of the distribution function of collisionless plasma consisting of charged particles with long-range interaction, such as the Coulomb interaction
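As a concrete instance of one entry in this list, the following sketch integrates the Van der Pol oscillator numerically. It assumes the standard textbook form x'' - mu (1 - x^2) x' + x = 0, which this article does not spell out, and uses a hand-written fourth-order Runge–Kutta step; the function names, step size and parameter values are illustrative choices.

```python
def van_der_pol(state, mu):
    """Right-hand side of x'' - mu*(1 - x^2)*x' + x = 0 as a first-order system."""
    x, v = state
    return (v, mu * (1.0 - x * x) * v - x)


def rk4_step(state, dt, mu):
    """Advance the state (x, v) by one classical Runge-Kutta step of size dt."""
    x, v = state
    k1 = van_der_pol((x, v), mu)
    k2 = van_der_pol((x + 0.5 * dt * k1[0], v + 0.5 * dt * k1[1]), mu)
    k3 = van_der_pol((x + 0.5 * dt * k2[0], v + 0.5 * dt * k2[1]), mu)
    k4 = van_der_pol((x + dt * k3[0], v + dt * k3[1]), mu)
    return (
        x + dt / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]),
        v + dt / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]),
    )


def late_amplitude(x0, mu=1.0, dt=0.01, t_end=100.0, tail=20.0):
    """Return max |x| over the last `tail` time units, starting from (x0, 0)."""
    state = (x0, 0.0)
    n_steps = int(round(t_end / dt))
    n_tail = int(round(tail / dt))
    amplitude = 0.0
    for i in range(n_steps):
        state = rk4_step(state, dt, mu)
        if i >= n_steps - n_tail:
            amplitude = max(amplitude, abs(state[0]))
    return amplitude


# One start inside the cycle and one outside it.
small = late_amplitude(0.1)
large = late_amplitude(4.0)
print("late-time amplitude from x0 = 0.1:", small)
print("late-time amplitude from x0 = 4.0:", large)
```

With mu = 1, both runs should settle to the same oscillation amplitude of roughly 2 regardless of the starting point, which is the signature of a limit cycle; a linear oscillator would instead keep an amplitude set by its initial condition.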

See also

*Aleksandr Mikhailovich Lyapunov – Russian mathematician, mechanician and physicist (died 3 November 1918)

*Dynamical system – a system in which a function describes the time dependence of a point in an ambient space

*Feedback – occurs when outputs of a system are routed back as inputs as part of a chain of cause and effect that forms a circuit or loop

*Initial condition – a value of an evolving variable at some point in time designated as the initial time (typically denoted ''t'' = 0); in some contexts called a seed value

*Linear system – a mathematical model of a system based on the use of a linear operator; linear systems typically exhibit features and properties that are much simpler than the nonlinear case

*Mode coupling – a term used in physics and electrical engineering, in which "mode" refers to eigenmodes of an idealized, "unperturbed" linear system

*Vector soliton – a solitary wave with multiple components coupled together that maintains its shape during propagation

*Volterra series – a model for nonlinear behavior similar to the Taylor series, differing from it in its ability to capture "memory" effects

External links

*Command and Control Research Program (CCRP)

*Nonlinear Dynamics I: Chaos (http://ocw.mit.edu/courses/mathematics/18-353j-nonlinear-dynamics-i-chaos-fall-2012/) at MIT's OpenCourseWare

*A Database of Physical Systems (in MATLAB)

*The Center for Nonlinear Studies at Los Alamos National Laboratory
